Thanks for your great work!

When I train on the DDAD dataset on Colab with the command below, I get an error:

`!python train.py --config configs/v3/ddad.txt --dataset_dir ddad --val_mode photo`

```
/usr/local/lib/python3.7/dist-packages/torchvision/models/_utils.py:253: UserWarning: Accessing the model URLs via the internal dictionary of the module is deprecated since 0.13 and will be removed in 0.15. Please access them via the appropriate Weights Enum instead.
  "Accessing the model URLs via the internal dictionary of the module is deprecated since 0.13 and will "
GPU available: True (cuda), used: True
TPU available: False, using: 0 TPU cores
IPU available: False, using: 0 IPUs
HPU available: False, using: 0 HPUs
22896 samples found for training
22896 samples found for validation
LOCAL_RANK: 0 - CUDA_VISIBLE_DEVICES: [0]

  | Name      | Type     | Params
0 | depth_net | DepthNet | 14.8 M
1 | pose_net  | PoseNet  | 13.0 M
27.9 M    Trainable params
0         Non-trainable params
27.9 M    Total params
111.417   Total estimated model params size (MB)
Sanity Checking: 0it [00:00, ?it/s]/usr/local/lib/python3.7/dist-packages/torch/utils/data/dataloader.py:566: UserWarning: This DataLoader will create 4 worker processes in total. Our suggested max number of worker in current system is 2, which is smaller than what this DataLoader is going to create. Please be aware that excessive worker creation might get DataLoader running slow or even freeze, lower the worker number to avoid potential slowness/freeze if necessary.
  cpuset_checked))
Traceback (most recent call last):
  File "/usr/local/lib/python3.7/dist-packages/torch/utils/data/dataloader.py", line 1163, in _try_get_data
    data = self._data_queue.get(timeout=timeout)
  File "/usr/lib/python3.7/queue.py", line 179, in get
    self.not_empty.wait(remaining)
  File "/usr/lib/python3.7/threading.py", line 300, in wait
    gotit = waiter.acquire(True, timeout)
  File "/usr/local/lib/python3.7/dist-packages/torch/utils/data/_utils/signal_handling.py", line 66, in handler
    _error_if_any_worker_fails()
RuntimeError: DataLoader worker (pid 782) is killed by signal: Killed.

The above exception was the direct cause of the following exception:

Traceback (most recent call last):
  File "train.py", line 60, in <module>
    trainer.fit(system, dm)
  File "/usr/local/lib/python3.7/dist-packages/pytorch_lightning/trainer/trainer.py", line 697, in fit
    self._fit_impl, model, train_dataloaders, val_dataloaders, datamodule, ckpt_path
  File "/usr/local/lib/python3.7/dist-packages/pytorch_lightning/trainer/trainer.py", line 650, in _call_and_handle_interrupt
    return trainer_fn(*args, **kwargs)
  File "/usr/local/lib/python3.7/dist-packages/pytorch_lightning/trainer/trainer.py", line 735, in _fit_impl
    results = self._run(model, ckpt_path=self.ckpt_path)
  File "/usr/local/lib/python3.7/dist-packages/pytorch_lightning/trainer/trainer.py", line 1166, in _run
    results = self._run_stage()
  File "/usr/local/lib/python3.7/dist-packages/pytorch_lightning/trainer/trainer.py", line 1252, in _run_stage
    return self._run_train()
  File "/usr/local/lib/python3.7/dist-packages/pytorch_lightning/trainer/trainer.py", line 1274, in _run_train
    self._run_sanity_check()
  File "/usr/local/lib/python3.7/dist-packages/pytorch_lightning/trainer/trainer.py", line 1343, in _run_sanity_check
    val_loop.run()
  File "/usr/local/lib/python3.7/dist-packages/pytorch_lightning/loops/loop.py", line 200, in run
    self.advance(*args, **kwargs)
  File "/usr/local/lib/python3.7/dist-packages/pytorch_lightning/loops/dataloader/evaluation_loop.py", line 155, in advance
    dl_outputs = self.epoch_loop.run(self._data_fetcher, dl_max_batches, kwargs)
  File "/usr/local/lib/python3.7/dist-packages/pytorch_lightning/loops/loop.py", line 200, in run
    self.advance(*args, **kwargs)
  File "/usr/local/lib/python3.7/dist-packages/pytorch_lightning/loops/epoch/evaluation_epoch_loop.py", line 127, in advance
    batch = next(data_fetcher)
  File "/usr/local/lib/python3.7/dist-packages/pytorch_lightning/utilities/fetching.py", line 184, in __next__
    return self.fetching_function()
  File "/usr/local/lib/python3.7/dist-packages/pytorch_lightning/utilities/fetching.py", line 263, in fetching_function
    self._fetch_next_batch(self.dataloader_iter)
  File "/usr/local/lib/python3.7/dist-packages/pytorch_lightning/utilities/fetching.py", line 277, in _fetch_next_batch
    batch = next(iterator)
  File "/usr/local/lib/python3.7/dist-packages/torch/utils/data/dataloader.py", line 681, in __next__
    data = self._next_data()
  File "/usr/local/lib/python3.7/dist-packages/torch/utils/data/dataloader.py", line 1359, in _next_data
    idx, data = self._get_data()
  File "/usr/local/lib/python3.7/dist-packages/torch/utils/data/dataloader.py", line 1315, in _get_data
    success, data = self._try_get_data()
  File "/usr/local/lib/python3.7/dist-packages/torch/utils/data/dataloader.py", line 1176, in _try_get_data
    raise RuntimeError('DataLoader worker (pid(s) {}) exited unexpectedly'.format(pids_str)) from e
RuntimeError: DataLoader worker (pid(s) 782) exited unexpectedly
```
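The first run creates 4 DataLoader workers while the warning above suggests at most 2 on this Colab machine, and `killed by signal: Killed` usually means the operating system terminated the worker process, commonly because the machine ran out of host RAM. A minimal sketch for clamping the worker count, assuming you pass the result to the DataLoader's `num_workers` argument yourself; `suggested_max_workers` and `safe_num_workers` are hypothetical helpers, not part of sc_depth_pl, and only roughly mirror the CPU check behind PyTorch's warning:

```python
import os


def suggested_max_workers():
    """Roughly mirror the CPU count PyTorch's DataLoader warning compares against."""
    if hasattr(os, "sched_getaffinity"):
        # Linux: number of CPUs this process is allowed to run on
        return len(os.sched_getaffinity(0))
    # Other platforms: total CPU count (may be None, so fall back to 1)
    return os.cpu_count() or 1


def safe_num_workers(requested):
    """Clamp a requested worker count to the range [0, suggested max]."""
    return max(0, min(requested, suggested_max_workers()))


# Example (hypothetical): DataLoader(dataset, batch_size=4, num_workers=safe_num_workers(4))
```

Fewer workers also means fewer copies of the dataset objects in memory, which is why lowering the count can help when workers are being killed.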

Since there is only one GPU, I set num_workers=1 (setting it to 0 gives the same error), and then I get:

```
/usr/local/lib/python3.7/dist-packages/torchvision/models/_utils.py:253: UserWarning: Accessing the model URLs via the internal dictionary of the module is deprecated since 0.13 and will be removed in 0.15. Please access them via the appropriate Weights Enum instead.
  "Accessing the model URLs via the internal dictionary of the module is deprecated since 0.13 and will "
GPU available: True (cuda), used: True
TPU available: False, using: 0 TPU cores
IPU available: False, using: 0 IPUs
HPU available: False, using: 0 HPUs
22896 samples found for training
22896 samples found for validation
LOCAL_RANK: 0 - CUDA_VISIBLE_DEVICES: [0]

  | Name      | Type     | Params
0 | depth_net | DepthNet | 14.8 M
1 | pose_net  | PoseNet  | 13.0 M
27.9 M    Trainable params
0         Non-trainable params
27.9 M    Total params
111.417   Total estimated model params size (MB)
Sanity Checking DataLoader 0: 0% 0/5 [00:00<?, ?it/s]Traceback (most recent call last):
  File "train.py", line 60, in <module>
    trainer.fit(system, dm)
  File "/usr/local/lib/python3.7/dist-packages/pytorch_lightning/trainer/trainer.py", line 697, in fit
    self._fit_impl, model, train_dataloaders, val_dataloaders, datamodule, ckpt_path
  File "/usr/local/lib/python3.7/dist-packages/pytorch_lightning/trainer/trainer.py", line 650, in _call_and_handle_interrupt
    return trainer_fn(*args, **kwargs)
  File "/usr/local/lib/python3.7/dist-packages/pytorch_lightning/trainer/trainer.py", line 735, in _fit_impl
    results = self._run(model, ckpt_path=self.ckpt_path)
  File "/usr/local/lib/python3.7/dist-packages/pytorch_lightning/trainer/trainer.py", line 1166, in _run
    results = self._run_stage()
  File "/usr/local/lib/python3.7/dist-packages/pytorch_lightning/trainer/trainer.py", line 1252, in _run_stage
    return self._run_train()
  File "/usr/local/lib/python3.7/dist-packages/pytorch_lightning/trainer/trainer.py", line 1274, in _run_train
    self._run_sanity_check()
  File "/usr/local/lib/python3.7/dist-packages/pytorch_lightning/trainer/trainer.py", line 1343, in _run_sanity_check
    val_loop.run()
  File "/usr/local/lib/python3.7/dist-packages/pytorch_lightning/loops/loop.py", line 200, in run
    self.advance(*args, **kwargs)
  File "/usr/local/lib/python3.7/dist-packages/pytorch_lightning/loops/dataloader/evaluation_loop.py", line 155, in advance
    dl_outputs = self.epoch_loop.run(self._data_fetcher, dl_max_batches, kwargs)
  File "/usr/local/lib/python3.7/dist-packages/pytorch_lightning/loops/loop.py", line 200, in run
    self.advance(*args, **kwargs)
  File "/usr/local/lib/python3.7/dist-packages/pytorch_lightning/loops/epoch/evaluation_epoch_loop.py", line 143, in advance
    output = self._evaluation_step(**kwargs)
  File "/usr/local/lib/python3.7/dist-packages/pytorch_lightning/loops/epoch/evaluation_epoch_loop.py", line 240, in _evaluation_step
    output = self.trainer._call_strategy_hook(hook_name, *kwargs.values())
  File "/usr/local/lib/python3.7/dist-packages/pytorch_lightning/trainer/trainer.py", line 1704, in _call_strategy_hook
    output = fn(*args, **kwargs)
  File "/usr/local/lib/python3.7/dist-packages/pytorch_lightning/strategies/strategy.py", line 370, in validation_step
    return self.model.validation_step(*args, **kwargs)
  File "/content/sc_depth_pl/SC_DepthV3.py", line 90, in validation_step
    tgt_depth = self.depth_net(tgt_img)
  File "/usr/local/lib/python3.7/dist-packages/torch/nn/modules/module.py", line 1130, in _call_impl
    return forward_call(*input, **kwargs)
  File "/content/sc_depth_pl/models/DepthNet.py", line 133, in forward
    features = self.encoder(x)
  File "/usr/local/lib/python3.7/dist-packages/torch/nn/modules/module.py", line 1130, in _call_impl
    return forward_call(*input, **kwargs)
  File "/content/sc_depth_pl/models/resnet_encoder.py", line 100, in forward
    x = self.encoder.conv1(x)
  File "/usr/local/lib/python3.7/dist-packages/torch/nn/modules/module.py", line 1130, in _call_impl
    return forward_call(*input, **kwargs)
  File "/usr/local/lib/python3.7/dist-packages/torch/nn/modules/conv.py", line 457, in forward
    return self._conv_forward(input, self.weight, self.bias)
  File "/usr/local/lib/python3.7/dist-packages/torch/nn/modules/conv.py", line 454, in _conv_forward
    self.padding, self.dilation, self.groups)
RuntimeError: Given groups=1, weight of size [64, 3, 7, 7], expected input[4, 320, 256, 320] to have 3 channels, but got 320 channels instead
```

What should be changed? Thank you very much for your help!
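The error says the encoder's first convolution (weight `[64, 3, 7, 7]`) expects a `[N, 3, H, W]` input, but `tgt_img` in `validation_step` arrived as `[4, 320, 256, 320]`, with 320 at the channel position. A minimal sketch of a shape guard that fails earlier with a clearer message; `conv_input_problem` is a hypothetical helper, not part of sc_depth_pl, and it only inspects a shape tuple (a `torch.Size` is a tuple subclass, so it can be passed directly):

```python
def conv_input_problem(shape, in_channels=3):
    """Return None if `shape` is a valid [N, C, H, W] batch for a conv layer with
    `in_channels` input channels, otherwise a human-readable description of the mismatch."""
    shape = tuple(shape)
    if len(shape) != 4:
        return f"expected a 4-D [N, C, H, W] input, got {len(shape)}-D shape {shape}"
    channels = shape[1]
    if channels == in_channels:
        return None
    msg = f"expected {in_channels} channels at dim 1, got {channels} (shape {shape})"
    if shape[-1] == in_channels:
        # Channels-last data ([N, H, W, C]) would need x.permute(0, 3, 1, 2) first.
        msg += "; the last dim matches, so the batch may be channels-last"
    return msg


# Hypothetical placement, just before `tgt_depth = self.depth_net(tgt_img)`:
#     problem = conv_input_problem(tgt_img.shape)
#     if problem:
#         raise ValueError(problem)
```

Printing the shape of the validation batch this way, and comparing it with a training batch, narrows down whether the validation data pipeline is producing the unexpected layout.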

Are there any tips for training? What should I pay attention to during training?

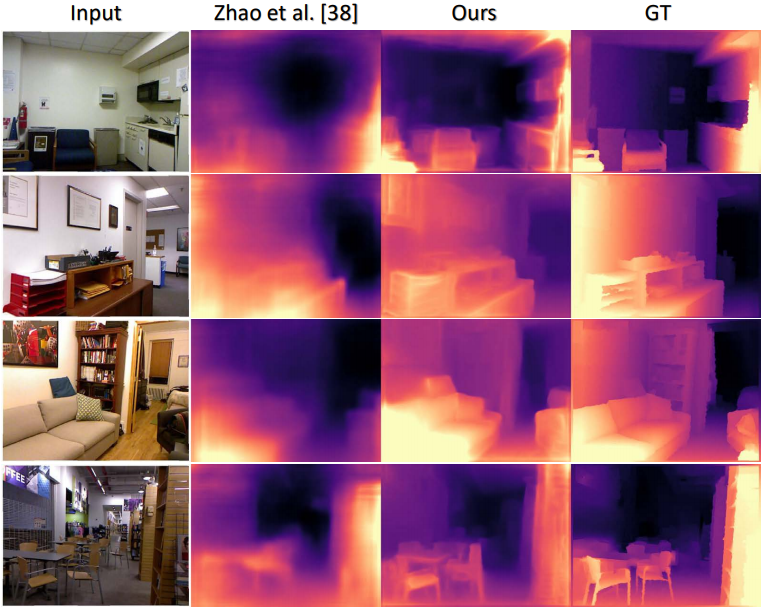


According to the Rodrigues formula, we can derive the rotation matrix, as shown in image 2.
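For reference, the standard textbook statement of Rodrigues' rotation formula for a rotation vector $\boldsymbol{\omega}$ (the symbols are an assumption and may not match the notation in image 2):

$$
\theta = \lVert\boldsymbol{\omega}\rVert,\qquad
\mathbf{k} = \frac{\boldsymbol{\omega}}{\theta},\qquad
[\mathbf{k}]_\times = \begin{pmatrix} 0 & -k_z & k_y \\ k_z & 0 & -k_x \\ -k_y & k_x & 0 \end{pmatrix}
$$

$$
R = I + \sin\theta\,[\mathbf{k}]_\times + (1 - \cos\theta)\,[\mathbf{k}]_\times^{2}
$$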

I found some differences between the formula and the code. Could you explain the changes you made?
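To compare the formula with the code element by element, a minimal pure-Python sketch of the textbook Rodrigues formula, R = I + sin θ K + (1 − cos θ) K², where θ is the length of the axis-angle vector and K is the cross-product matrix of the unit axis. This is the standard formula only, not a reproduction of how sc_depth_pl converts the pose network's output; `rodrigues` is a hypothetical name:

```python
import math


def rodrigues(omega):
    """Axis-angle vector (3 floats) -> 3x3 rotation matrix (nested lists) via Rodrigues' formula."""
    wx, wy, wz = omega
    theta = math.sqrt(wx * wx + wy * wy + wz * wz)
    if theta < 1e-12:
        # Zero rotation: the formula reduces to the identity.
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    # Unit rotation axis k = omega / theta
    kx, ky, kz = wx / theta, wy / theta, wz / theta
    # Skew-symmetric cross-product matrix [k]_x
    K = [[0.0, -kz, ky],
         [kz, 0.0, -kx],
         [-ky, kx, 0.0]]
    # K^2 = K @ K
    K2 = [[sum(K[i][m] * K[m][j] for m in range(3)) for j in range(3)] for i in range(3)]
    sin_t = math.sin(theta)
    one_minus_cos = 1.0 - math.cos(theta)
    # R = I + sin(theta) K + (1 - cos(theta)) K^2
    return [[(1.0 if i == j else 0.0) + sin_t * K[i][j] + one_minus_cos * K2[i][j]
             for j in range(3)]
            for i in range(3)]
```

For example, `rodrigues((0.0, 0.0, math.pi / 2))` rotates the x-axis onto the y-axis, so its first column is approximately `(0, 1, 0)`.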


I ran the command:

`CUDA_VISIBLE_DEVICES=0 python train.py --config /home/ubuntu/wl/sc_depth_pl-master/configs/v3/nyu.txt --dataset_dir /home/ubuntu/data0/dataset_endo_colon`

and got this error:

```
/home/ubuntu/miniconda3/envs/sc_depth_env/lib/python3.8/site-packages/torchvision/models/_utils.py:252: UserWarning: Accessing the model URLs via the internal dictionary of the module is deprecated since 0.13 and will be removed in 0.15. Please access them via the appropriate Weights Enum instead.
  warnings.warn(
GPU available: True (cuda), used: True
TPU available: False, using: 0 TPU cores
IPU available: False, using: 0 IPUs
HPU available: False, using: 0 HPUs
```