Summary

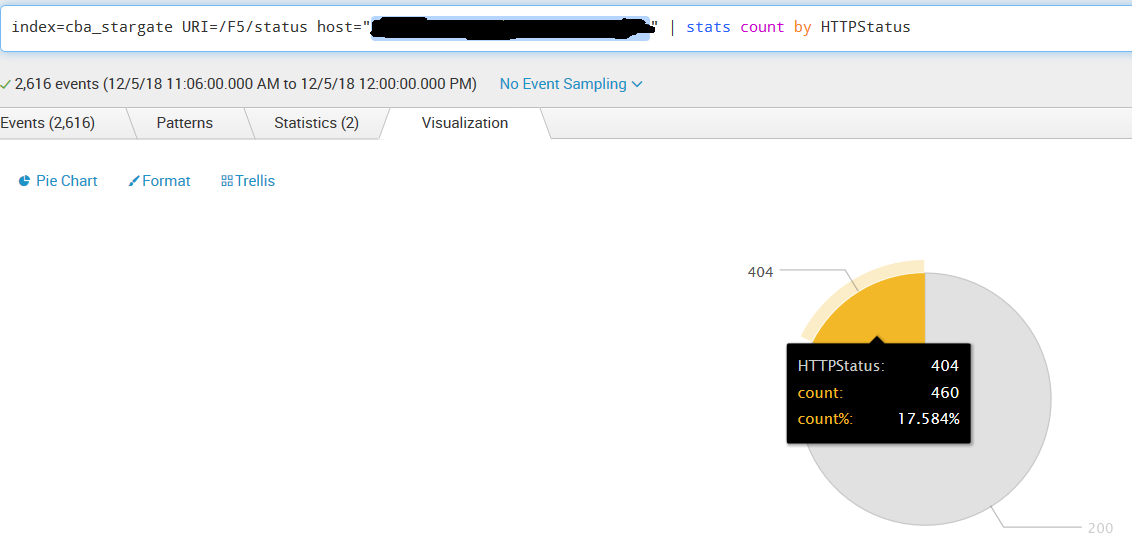

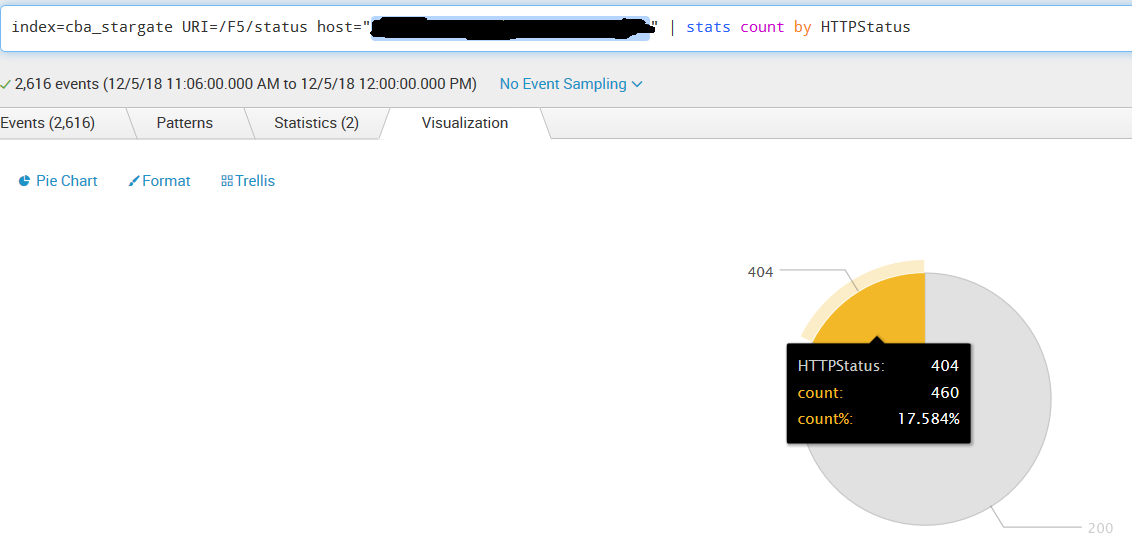

We have noticed periods on our Kong Gateway nodes when common endpoints the gateway exposes return 404 route not found on a percentage of API calls. The proxy we focus on in this post ("/F5/status") does not route to an upstream, has no auth, and serves as a ping/uptime endpoint that returns a static 200 success. We see the same behavior on other endpoints that have auth and plugins, but our ping endpoint is called at a consistent frequency and so provides the best insight.

Steps To Reproduce

Reproducing this consistently seems impossible from our perspective at this time, but we will elaborate with as much detail and as many screenshots as we can.

- Create services and routes paired 1 to 1. We have 130 service+route pairs and 300 plugins, some global and some applied directly to routes (acl/auth).

- Add additional service+route pairs with standard plugins over an extended time.

- Our suspicion is that the 404s will eventually reveal themselves. We have a high degree of confidence it does not happen globally across all worker processes.
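The churn described in the steps above could be scripted roughly as follows. This is only a sketch: the Admin API address, the `repro-svc-N` names, the `/repro/N` paths, and the placeholder upstream URL are all hypothetical, and it assumes the `POST /services` and `POST /routes` Admin API endpoints accept JSON bodies in this shape on the Kong version in use.

```python
import json
import urllib.request

# Assumption: Admin API address; the posted config shows admin_listen on 0.0.0.0:8001.
ADMIN_URL = "http://localhost:8001"


def build_pair(index, upstream_url="http://127.0.0.1:9999"):
    """Return (service_payload, route_paths) for one throwaway service+route pair.

    The names, paths, and upstream URL are hypothetical placeholders.
    """
    service = {"name": "repro-svc-%d" % index, "url": upstream_url}
    route_paths = ["/repro/%d" % index]
    return service, route_paths


def post_json(url, payload):
    """POST a JSON body and return the decoded JSON response."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.loads(resp.read().decode("utf-8"))


def create_pair(index, admin_url=ADMIN_URL):
    """Create one service, then one route attached to it by service id."""
    service, paths = build_pair(index)
    created = post_json(admin_url + "/services", service)
    route = {"paths": paths, "service": {"id": created["id"]}}
    return post_json(admin_url + "/routes", route)
```

Calling `create_pair(i)` in a loop, with pauses between calls, while a monitor keeps hitting an existing route is one way to imitate the non-prod creation rate. It is not known to trigger the 404s.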

A new arbitrary Service+Route was created on the Gateway. The important detail is its created timestamp:

Converted to UTC:

The time above matches exactly when the existing "/F5/status" began throwing 404 route not found errors:

You can see a direct correlation between when that new service+route pair was created and when the gateway began to throw 404 not found errors on a route that exists and previously had no problems. Note the "/F5/status" endpoint takes consistent traffic at all times from health check monitors.
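For anyone wanting to repeat this comparison, here is a minimal sketch of the timestamp conversion. It assumes `created_at` is an epoch value and that it may be in seconds or milliseconds depending on the entity type, so it guesses the unit from the magnitude. The function names are hypothetical.

```python
from datetime import datetime, timezone


def created_at_to_utc(created_at):
    """Convert an epoch timestamp (seconds or milliseconds) to an aware UTC datetime."""
    # Heuristic: a value this large can only be milliseconds for present-day dates.
    if created_at > 10**11:
        created_at = created_at / 1000.0
    return datetime.fromtimestamp(created_at, tz=timezone.utc)


def seconds_between(created_at, first_error_utc):
    """Seconds from entity creation to the first observed 404 (negative if the 404 came first)."""
    return (first_error_utc - created_at_to_utc(created_at)).total_seconds()
```

A small positive gap, on the order of the `db_update_frequency` setting, would be consistent with the correlation described above. It would not prove causation.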

The interesting bit is the % of errors on this individual Kong node: we run 6 worker processes, and the error rate is almost exactly what you would expect if 1 worker process were impacted:
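The arithmetic behind that reading, as a small sketch. It assumes requests are spread evenly across workers, which nginx does not strictly guarantee, so treat the result as a rough estimate. The function names are hypothetical.

```python
def expected_error_rate(bad_workers, total_workers):
    """Fraction of requests expected to fail if only the bad workers return 404."""
    return bad_workers / total_workers


def estimate_bad_workers(observed_rate, total_workers):
    """Nearest whole number of workers that would explain an observed error rate."""
    return round(observed_rate * total_workers)
```

With 6 workers, one bad worker gives `expected_error_rate(1, 6)`, roughly 16.7% of requests, and an observed rate near that value maps back to a single worker via `estimate_bad_workers`.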

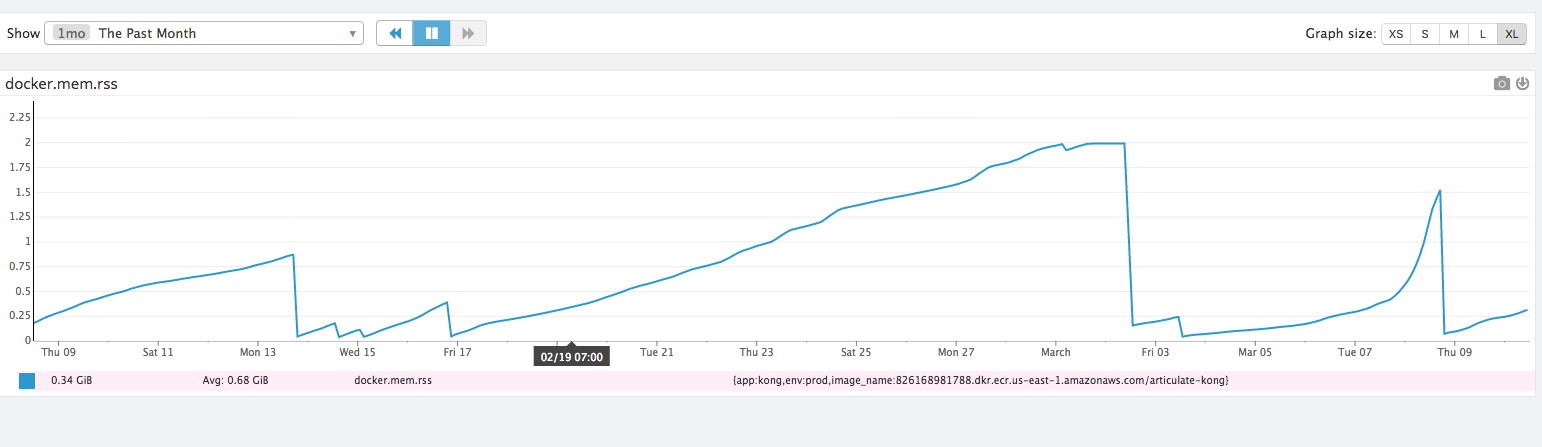

To describe our architecture: we run Kong with Cassandra 3.X in 2 datacenters, 1 Kong node per datacenter. We run a 6 node Cassandra cluster, 3 C* nodes per datacenter. The errors in the examples above only occurred on the Kong node in a single datacenter, but both datacenters share identical settings. We redeploy Kong weekly, every Monday early AM, but the error presented above started on a Wednesday, so we can't correlate the problem to any sort of Kong startup issue.

To us, based on what we can correlate, the behavior points to cache rebuilding during new resource creation. Sadly, nothing in the Kong logs catches anything of interest when the issue presents itself. Also note that it does not happen every time, so it's a very hard issue to nail down.

We also notice the issue correcting itself at times. We have not traced the correction to anything specific yet, but I assume it very likely happens when further services and routes are created after the errors begin, and what seems to be a problematic worker process has its router cleaned up again.

Another point: production has not seen this issue with an identical Kong configuration and architecture. But production has fewer proxies, and new services or routes are added at a much lower frequency (1-2 per week vs 20+ in non-prod).

I wonder if it may also be safer for us to switch the cache TTL back from 0 (infinite) to some arbitrary number of hours to force the resources to cycle. Though if it is indeed the cache as we suspect, I suppose that could actually make this issue more frequent.

For now I may write a Kong health script that grabs all routes on the gateway and calls each one, one by one, to ensure they don't return a 404, as a sanity check to run daily. My biggest fear is that as production grows in size and/or services and routes are created more frequently, we may begin to see the issue there as well. That would cause dramatic impact to existing priority services if they start responding with 404 because Kong does not recognize that the proxy route exists in the db and cache it appropriately.
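A rough sketch of what such a health script might look like. Several things here are assumptions rather than confirmed behavior: that `GET /routes` on the Admin API returns a `data` array plus a `next` field holding a relative path (or null on the last page), that each route's `paths` entries are literal prefixes rather than regexes, and the helper names themselves. Routes matched only by host or method are skipped.

```python
import json
import urllib.error
import urllib.request


def fetch_json(url):
    """GET a URL and decode the JSON body."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.loads(resp.read().decode("utf-8"))


def list_routes(admin_url, get_json=fetch_json):
    """Collect every route from the Admin API, following the 'next' pagination field."""
    routes, path = [], "/routes"
    while path:
        page = get_json(admin_url + path)
        routes.extend(page.get("data", []))
        path = page.get("next")  # assumption: relative path, or null on the last page
    return routes


def probe_urls(routes, proxy_url):
    """Build one probe URL per route path; routes without paths are skipped."""
    return [proxy_url + p for r in routes for p in (r.get("paths") or [])]


def status_of(url):
    """Return the HTTP status code for a GET, including 4xx/5xx responses."""
    try:
        with urllib.request.urlopen(url, timeout=10) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code


def find_unrouted(urls, get_status=status_of, attempts=12):
    """Return {url: miss_count} for URLs that answered 404 at least once."""
    failing = {}
    for url in urls:
        misses = sum(1 for _ in range(attempts) if get_status(url) == 404)
        if misses:
            failing[url] = misses
    return failing
```

Each URL is tried several times because, if only one of six workers is affected, a single request has a good chance of landing on a healthy worker. A 404 can also come from the upstream service rather than from Kong, so any hit would still need to be confirmed by hand.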

Sorry I could not provide a 100% reproducible scenario for this situation; we can only go off the analytics we have. If this does reveal an underlying bug in how Kong currently manages services and routes, fixing it would bring huge stability to the core product.

Additional Details & Logs

- Kong version 0.14.1

- Kong error logs: Kong error logs reveal nothing about the 404 not founds from Kong's perspective. Nothing gets logged during these events in terms of normal or debug execution.

- Kong configuration (the output of a GET request to Kong's Admin port - see https://docs.konghq.com/latest/admin-api/#retrieve-node-information)

{

"plugins": {

"enabled_in_cluster": [

"correlation-id",

"acl",

"kong-oidc-multi-idp",

"oauth2",

"rate-limiting",

"cors",

"jwt",

"kong-spec-expose",

"request-size-limiting",

"kong-response-size-limiting",

"request-transformer",

"request-termination",

"kong-error-log",

"kong-oidc-implicit-token",

"kong-splunk-log",

"kong-upstream-jwt",

"kong-cluster-drain",

"statsd"

],

"available_on_server": {

"kong-path-based-routing": true,

"kong-spec-expose": true,

"kong-cluster-drain": true,

"correlation-id": true,

"statsd": true,

"jwt": true,

"cors": true,

"kong-oidc-multi-idp": true,

"kong-response-size-limiting": true,

"kong-oidc-auth": true,

"kong-upstream-jwt": true,

"kong-error-log": true,

"request-termination": true,

"request-size-limiting": true,

"acl": true,

"rate-limiting": true,

"kong-service-virtualization": true,

"request-transformer": true,

"kong-oidc-implicit-token": true,

"kong-splunk-log": true,

"oauth2": true

}

},

"tagline": "Welcome to kong",

"configuration": {

"plugins": [

"kong-error-log",

"kong-oidc-implicit-token",

"kong-response-size-limiting",

"cors",

"request-transformer",

"kong-service-virtualization",

"kong-cluster-drain",

"kong-upstream-jwt",

"kong-splunk-log",

"kong-spec-expose",

"kong-oidc-auth",

"kong-path-based-routing",

"kong-oidc-multi-idp",

"correlation-id",

"oauth2",

"statsd",

"jwt",

"rate-limiting",

"acl",

"request-size-limiting",

"request-termination"

],

"admin_ssl_enabled": false,

"lua_ssl_verify_depth": 3,

"trusted_ips": [

"0.0.0.0/0",

"::/0"

],

"lua_ssl_trusted_certificate": "/usr/local/kong/ssl/kongcert.pem",

"loaded_plugins": {

"kong-path-based-routing": true,

"kong-spec-expose": true,

"kong-cluster-drain": true,

"correlation-id": true,

"statsd": true,

"jwt": true,

"cors": true,

"rate-limiting": true,

"kong-response-size-limiting": true,

"kong-oidc-auth": true,

"kong-upstream-jwt": true,

"acl": true,

"oauth2": true,

"kong-splunk-log": true,

"kong-oidc-implicit-token": true,

"kong-error-log": true,

"kong-service-virtualization": true,

"request-transformer": true,

"kong-oidc-multi-idp": true,

"request-size-limiting": true,

"request-termination": true

},

"cassandra_username": "****",

"admin_ssl_cert_csr_default": "/usr/local/kong/ssl/admin-kong-default.csr",

"ssl_cert_key": "/usr/local/kong/ssl/kongprivatekey.key",

"dns_resolver": {},

"pg_user": "kong",

"mem_cache_size": "1024m",

"cassandra_data_centers": [

"dc1:2",

"dc2:3"

],

"nginx_admin_directives": {},

"cassandra_password": "******",

"custom_plugins": {},

"pg_host": "127.0.0.1",

"nginx_acc_logs": "/usr/local/kong/logs/access.log",

"proxy_listen": [

"0.0.0.0:8000",

"0.0.0.0:8443 ssl http2"

],

"client_ssl_cert_default": "/usr/local/kong/ssl/kong-default.crt",

"ssl_cert_key_default": "/usr/local/kong/ssl/kong-default.key",

"dns_no_sync": false,

"db_update_propagation": 5,

"nginx_err_logs": "/usr/local/kong/logs/error.log",

"cassandra_port": 9042,

"dns_order": [

"LAST",

"SRV",

"A",

"CNAME"

],

"dns_error_ttl": 1,

"headers": [

"latency_tokens"

],

"dns_stale_ttl": 4,

"nginx_optimizations": true,

"database": "cassandra",

"pg_database": "kong",

"nginx_worker_processes": "auto",

"lua_package_cpath": "",

"admin_acc_logs": "/usr/local/kong/logs/admin_access.log",

"lua_package_path": "./?.lua;./?/init.lua;",

"nginx_pid": "/usr/local/kong/pids/nginx.pid",

"upstream_keepalive": 120,

"cassandra_contact_points": [

"server8429",

"server8431",

"server8432",

"server8433",

"server8445",

"server8428"

],

"admin_access_log": "off",

"client_ssl_cert_csr_default": "/usr/local/kong/ssl/kong-default.csr",

"proxy_listeners": [

{

"ssl": false,

"ip": "0.0.0.0",

"proxy_protocol": false,

"port": 8000,

"http2": false,

"listener": "0.0.0.0:8000"

},

{

"ssl": true,

"ip": "0.0.0.0",

"proxy_protocol": false,

"port": 8443,

"http2": true,

"listener": "0.0.0.0:8443 ssl http2"

}

],

"proxy_ssl_enabled": true,

"proxy_access_log": "off",

"ssl_cert_csr_default": "/usr/local/kong/ssl/kong-default.csr",

"enabled_headers": {

"latency_tokens": true,

"X-Upstream-Status": false,

"X-Proxy-Latency": true,

"server_tokens": false,

"Server": false,

"Via": false,

"X-Upstream-Latency": true

},

"cassandra_ssl": true,

"cassandra_local_datacenter": "DC2",

"db_resurrect_ttl": 30,

"db_update_frequency": 5,

"cassandra_consistency": "LOCAL_QUORUM",

"client_max_body_size": "100m",

"admin_error_log": "/dev/stderr",

"pg_ssl_verify": false,

"dns_not_found_ttl": 30,

"pg_ssl": false,

"cassandra_repl_factor": 1,

"cassandra_lb_policy": "RequestDCAwareRoundRobin",

"cassandra_repl_strategy": "SimpleStrategy",

"nginx_kong_conf": "/usr/local/kong/nginx-kong.conf",

"error_default_type": "text/plain",

"nginx_http_directives": {},

"real_ip_header": "X-Forwarded-For",

"kong_env": "/usr/local/kong/.kong_env",

"cassandra_schema_consensus_timeout": 10000,

"dns_hostsfile": "/etc/hosts",

"admin_listeners": [

{

"ssl": false,

"ip": "0.0.0.0",

"proxy_protocol": false,

"port": 8001,

"http2": false,

"listener": "0.0.0.0:8001"

}

],

"ssl_ciphers": "ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA384:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA256",

"ssl_cert": "/usr/local/kong/ssl/kongcert.crt",

"prefix": "/usr/local/kong",

"admin_ssl_cert_key_default": "/usr/local/kong/ssl/admin-kong-default.key",

"cassandra_ssl_verify": true,

"db_cache_ttl": 0,

"ssl_cipher_suite": "modern",

"real_ip_recursive": "on",

"proxy_error_log": "/dev/stderr",

"client_ssl_cert_key_default": "/usr/local/kong/ssl/kong-default.key",

"nginx_daemon": "off",

"anonymous_reports": false,

"cassandra_timeout": 5000,

"nginx_proxy_directives": {},

"pg_port": 5432,

"log_level": "debug",

"client_body_buffer_size": "50m",

"client_ssl": false,

"lua_socket_pool_size": 30,

"admin_ssl_cert_default": "/usr/local/kong/ssl/admin-kong-default.crt",

"cassandra_keyspace": "kong_stage",

"ssl_cert_default": "/usr/local/kong/ssl/kong-default.crt",

"nginx_conf": "/usr/local/kong/nginx.conf",

"admin_listen": [

"0.0.0.0:8001"

]

},

"version": "0.14.1",

"node_id": "cf0c92a9-724d-4972-baed-599785cff5ed",

"lua_version": "LuaJIT 2.1.0-beta3",

"prng_seeds": {

"pid: 73": 183184219419,

"pid: 71": 114224634222,

"pid: 72": 213242339120,

"pid: 70": 218221808514,

"pid: 69": 233240145991,

"pid: 68": 231238177547

},

"timers": {

"pending": 4,

"running": 0

},

"hostname": "kong-62-hxqnq"

}

- Operating system: Kong's Docker Alpine version 3.6 in github repo