GBIM

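GBIM (Gesture-Based Interaction Map) is an interactive map driven by a vision-based deep neural network. It watches the user's hand gestures through the computer's camera and uses them to control simple map interactions. The network uses two lightweight models provided by PaddleX, PPYOLO Tiny and MobileNet V3 small, so the whole model is only about 10 MB and can locate and recognize gestures quickly even on a CPU.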

Gestures

| Gesture | Interaction | Gesture | Interaction | Gesture | Interaction |
|---|---|---|---|---|---|
|  | Scroll up |  | Scroll left |  | Zoom in |
|  | Scroll down |  | Scroll right |  | Zoom out |

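Roadmap

Basic

- Decide which gestures to use for interaction.

- Use `det_acq.py` to capture hand-pose data from the computer's camera.

- Annotate the data and train the hand object-detection model.

- Capture the target hand, use `clas_acq.py` to collect hand images for labeling, and use them to train the gesture classification model.

- Validate gesture detection and gesture recognition working together.

- Open the web version of Baidu Maps and interact with it through simulated key presses.

- Combine the features and verify the basic functionality.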
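The last steps of this list (turning a recognized gesture into a simulated key press) can be sketched as follows. This is only an illustration, not the project's actual code: the label names other than `up` and `other`, and every key binding, are assumptions, and the keys Baidu Maps really responds to should be checked in the browser.

```python
# Hypothetical label names and key bindings; the project's real ones may differ.
GESTURE_TO_KEY = {
    "up": "up",          # scroll the map up
    "down": "down",      # scroll the map down
    "left": "left",      # scroll the map left
    "right": "right",    # scroll the map right
    "zoom_in": "+",      # zoom in
    "zoom_out": "-",     # zoom out
}


def key_for_gesture(label):
    """Return the key name for a gesture label, or None for 'other' or unknown labels."""
    return GESTURE_TO_KEY.get(label)


def press_key(key_name):
    """Tap one key with pynput (imported lazily so the mapping works without it)."""
    from pynput.keyboard import Controller, Key

    special = {"up": Key.up, "down": Key.down, "left": Key.left, "right": Key.right}
    keyboard = Controller()
    key = special.get(key_name, key_name)  # single characters are passed through as-is
    keyboard.press(key)
    keyboard.release(key)


def handle_prediction(label, send=press_key):
    """Translate one classifier output into at most one key press."""
    key = key_for_gesture(label)
    if key is not None:
        send(key)
    return key
```

Passing a different `send` callable (for example `print`) makes it possible to try the mapping without moving the real keyboard focus.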

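Advanced

- Switch from single-image classification to image-sequence classification to make gesture recognition smoother and more accurate.

- Collect and annotate new data, then tune hyperparameters and retrain the models.

- Build a map whose parameters can be adjusted.

- Integrate, tidy up, and polish the interface.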
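Until a true sequence classifier is trained, a much simpler stand-in is to smooth the per-frame predictions with a majority vote over the last few frames. The sketch below shows that substitute technique, not sequence classification itself; the class name, window size, and vote threshold are illustrative assumptions.

```python
from collections import Counter, deque


class GestureSmoother:
    """Majority vote over the most recent per-frame gesture labels."""

    def __init__(self, window=5, min_votes=3):
        self.history = deque(maxlen=window)  # oldest labels drop out automatically
        self.min_votes = min_votes

    def update(self, label):
        """Add one frame's label and return the smoothed label."""
        self.history.append(label)
        winner, votes = Counter(self.history).most_common(1)[0]
        # Report a gesture only when enough recent frames agree on it.
        return winner if votes >= self.min_votes else "other"


smoother = GestureSmoother(window=5, min_votes=3)
frames = ["up", "up", "left", "up", "up"]
print([smoother.update(label) for label in frames])
```

A single misclassified frame (the `left` in the example) no longer triggers an interaction, at the cost of a few frames of delay before a new gesture is accepted.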

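Datasets & models

Gesture detection

- The dataset was captured with the camera of a Lenovo Xiaoxin laptop and annotated in VOC format with labelImg, 1011 images in total. Its scenes, environment, and subjects lack variety, so it serves only for testing and is not available for download. The data is organized following the PascalVOC layout used by PaddleX.

- The model is the ultra-lightweight PPYOLO Tiny, smaller than 4 MB. It was trained for 100 epochs without tuning, and the best_model was kept as the test model. Because of the limited dataset and the untuned training, the default recognition quality is currently poor.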
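Before training the detector, it can help to sanity-check the labelImg annotations. The sketch below reads the bounding boxes from one VOC XML annotation using only the standard library; the class name `hand` and the sample values are made up for illustration.

```python
import xml.etree.ElementTree as ET


def read_voc_boxes(xml_text):
    """Return [(class_name, xmin, ymin, xmax, ymax), ...] from one VOC annotation."""
    root = ET.fromstring(xml_text)
    boxes = []
    for obj in root.iter("object"):
        name = obj.findtext("name")
        box = obj.find("bndbox")
        coords = tuple(
            int(float(box.findtext(tag))) for tag in ("xmin", "ymin", "xmax", "ymax")
        )
        boxes.append((name, *coords))
    return boxes


# A made-up annotation in the structure labelImg writes for VOC.
SAMPLE = """<annotation>
  <filename>0001.jpg</filename>
  <size><width>640</width><height>480</height><depth>3</depth></size>
  <object>
    <name>hand</name>
    <bndbox><xmin>120</xmin><ymin>80</ymin><xmax>300</xmax><ymax>310</ymax></bndbox>
  </object>
</annotation>"""

print(read_voc_boxes(SAMPLE))
```

Running this over every annotation file makes it easy to spot empty annotations or misspelled class names before a training run.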

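Gesture classification

- The dataset was captured with the camera of a Lenovo Xiaoxin laptop. Hand images were cropped out by the gesture detection model and manually sorted into 7 classes: the 6 interaction gestures plus "other", 1102 images in total. The dataset is small, with little variety in hand shapes and gestures, so it serves only for testing and is not available for download. The data is organized as follows: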

dataset
├-- Images
|   ├-- up
┆   ┆   └-- xxx.jpg
|   └-- other
┆       └-- xxx.jpg
├-- labels.txt
├-- train_list.txt
└-- val_list.txt

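- The model is the ultra-lightweight MobileNet V3 small, smaller than 7 MB. Because the dataset is small, it was trained for only 20 epochs without tuning, and the best_model was kept as the test model. Classification quality is currently poor.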
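The `labels.txt`, `train_list.txt`, and `val_list.txt` files in the layout above could be generated from the `Images` folder with a sketch like the one below. It assumes the `relative/path class_id` line format that PaddleX uses for ImageNet-style datasets (worth confirming against the PaddleX documentation); the function name, the 80/20 split, and the seed are illustrative choices.

```python
import random
from pathlib import Path


def write_split_lists(dataset_dir, val_ratio=0.2, seed=0):
    """Write labels.txt, train_list.txt and val_list.txt under dataset_dir."""
    root = Path(dataset_dir)
    # One class per sub-folder of Images; the class id is the line index in labels.txt.
    classes = sorted(p.name for p in (root / "Images").iterdir() if p.is_dir())
    (root / "labels.txt").write_text(
        "".join(name + "\n" for name in classes), encoding="utf-8"
    )

    samples = []
    for class_id, name in enumerate(classes):
        for image in sorted((root / "Images" / name).glob("*.jpg")):
            samples.append(f"{image.relative_to(root).as_posix()} {class_id}")

    # Shuffle with a fixed seed so the split is reproducible.
    random.Random(seed).shuffle(samples)
    n_val = int(len(samples) * val_ratio)
    (root / "val_list.txt").write_text(
        "".join(line + "\n" for line in samples[:n_val]), encoding="utf-8"
    )
    (root / "train_list.txt").write_text(
        "".join(line + "\n" for line in samples[n_val:]), encoding="utf-8"
    )
    return classes, len(samples) - n_val, n_val
```

Calling `write_split_lists("dataset")` returns the class names together with the number of training and validation samples.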

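The model files are stored with Git LFS, so LFS must be installed before pulling the project; see the LFS documentation. Later, a larger and more varied dataset will be collected and annotated, and better hyperparameter tuning will be used to obtain a more accurate recognition model.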

Demo

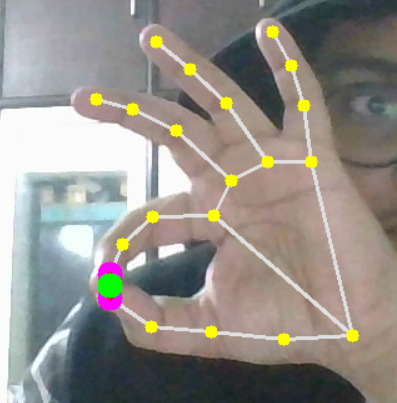

Gesture recognition

Map interaction

*The Capture window is not shown.

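Usage

- Clone this project locally and install the dependencies listed in `requirements.txt`: opencv, paddlex, and pynput. For PaddleX, install the latest matching version of PaddlePaddle; because the models are lightweight, the CPU build is sufficient. Use the command below, and see the official website for details: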

python -m pip install paddlepaddle -i https://mirror.baidu.com/pypi/simple

- Open `demo.py` and change the browser path to the path of the browser you use:

web_path = '"D:/Twinkstar/Twinkstar Browser/twinkstar.exe"' # path to your own browser

- Run `demo.py` to start the program:

cd GBIM

python demo.py

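FAQ and solutions

- Q: Pulling the project hangs.

  A: First make sure LFS is installed as described in its documentation. If it is already installed, the cause is most likely the network. Either wait a while, or skip the LFS files during the clone and pull them separately, as in the git commands below:

git lfs install --skip-smudge  # skip LFS files that cannot be cloned

git clone "current project"  # clone this project

cd "current project"  # enter the project

git lfs pull  # pull the LFS files separately

git lfs install --force  # restore the LFS settings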

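- Q: Pressing `q` or making gestures has no effect.

  A: Check which window currently has focus, that is, the window that was clicked last. When the Capture window has focus, pressing `q` exits the program; when the browser has focus, the recognized gestures drive the map in the browser.

- Q: Installing PaddleX fails with an error about Microsoft Visual C++.

  A: If installing the COCO tools on Windows raises an error, Microsoft Visual C++ is probably missing. Download and install it from Microsoft's official download page, then restart the computer.

- Q: The program runs without errors, but no data is saved locally.

  A: Check whether the save path contains Chinese characters; `cv2.imwrite` cannot save images to a path that contains Chinese characters.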
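For the last question, a common workaround is to encode the image in memory and write the bytes from Python instead of handing the path to OpenCV. The sketch below is not part of the project: `has_non_ascii` and `save_image` are hypothetical helper names. It also checks the return value, because `cv2.imwrite` reports failure by returning `False` rather than raising an exception.

```python
def has_non_ascii(path):
    """Return True if the path contains characters outside ASCII (e.g. Chinese)."""
    return not str(path).isascii()


def save_image(path, image, ext=".jpg"):
    """Save an image array and return True on success, even for non-ASCII paths."""
    import cv2  # imported lazily so the path check works without OpenCV installed

    if not has_non_ascii(path):
        # cv2.imwrite signals failure through its return value, not an exception.
        return bool(cv2.imwrite(str(path), image))
    # Encode in memory, then let NumPy write the bytes to the Unicode path.
    ok, buffer = cv2.imencode(ext, image)
    if ok:
        buffer.tofile(str(path))
    return bool(ok)


print(has_non_ascii("D:/data/hand.jpg"))
print(has_non_ascii("D:/数据/hand.jpg"))
```

Moving the project to a path without Chinese characters, as the answer suggests, remains the simplest fix.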


Contact and feedback

Email: [email protected]