UniMoCo: Unsupervised, Semi-Supervised and Full-Supervised Visual Representation Learning

This is the official PyTorch implementation of the UniMoCo paper:

    @article{dai2021unimoco,
      author  = {Zhigang Dai and Bolun Cai and Yugeng Lin and Junying Chen},
      title   = {UniMoCo: Unsupervised, Semi-Supervised and Full-Supervised Visual Representation Learning},
      journal = {arXiv preprint arXiv:2103.10773},
      year    = {2021},
    }

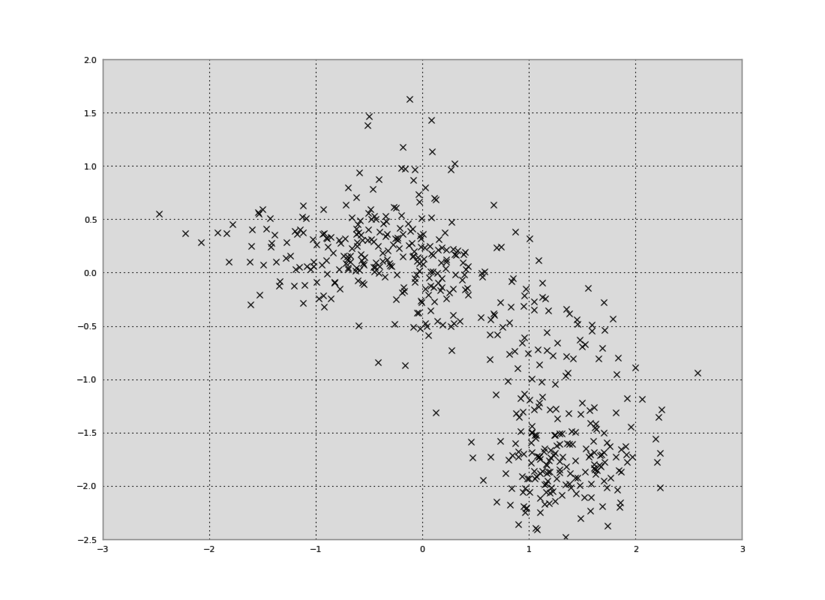

In UniMoCo, we generalize MoCo to a unified contrastive learning framework that supports unsupervised, semi-supervised and full-supervised visual representation learning. On top of MoCo, we maintain a label queue that stores the supervised labels. With the label queue, we can construct a multi-hot target on the fly that marks the positives and negatives of a given query. In addition, we propose a unified contrastive loss that handles an arbitrary number of positives and negatives. The figure above compares MoCo and UniMoCo.
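The label queue, multi-hot target and unified loss can be sketched in plain Python. This is a minimal, framework-free illustration, not the repository's code: the names `LabelQueue`, `multi_hot_target` and `unified_contrastive_loss`, the use of `-1` to mark unlabeled samples, and the exact loss form log(1 + Σ_neg exp(s_n/τ) · Σ_pos exp(−s_p/τ)) are assumptions. Logits follow MoCo's ordering (the query's own key first, then the K queue entries), and the temperature plays the role of `--moco-t`.

```python
import math
from collections import deque

UNLABELED = -1  # assumption: unlabeled samples carry label -1


class LabelQueue:
    """FIFO queue of key labels, kept in step with MoCo's feature queue (sketch)."""

    def __init__(self, size):
        # In this sketch, empty slots start as unlabeled so they never count as positives.
        self.labels = deque([UNLABELED] * size, maxlen=size)

    def enqueue(self, batch_labels):
        # maxlen makes the deque drop the oldest labels automatically.
        self.labels.extend(batch_labels)


def multi_hot_target(query_label, queue_labels):
    """Target over [key, queue_0, ..., queue_{K-1}] for one query."""
    # Index 0 is the query's own augmented key: always a positive.
    target = [1.0]
    for label in queue_labels:
        # Queue entries are positives only if the query is labeled and shares the class.
        same_class = query_label != UNLABELED and label == query_label
        target.append(1.0 if same_class else 0.0)
    return target


def unified_contrastive_loss(logits, target, temperature=0.2):
    """Assumed form: log(1 + sum_neg exp(s_n/t) * sum_pos exp(-s_p/t)), one query.

    No log-sum-exp stabilization, for clarity.
    """
    scaled = [s / temperature for s in logits]
    sum_neg = sum(math.exp(s) for s, y in zip(scaled, target) if y == 0.0)
    sum_pos = sum(math.exp(-s) for s, y in zip(scaled, target) if y == 1.0)
    return math.log1p(sum_neg * sum_pos)
```

With exactly one positive (the key), the expression simplifies to log(1 + Σ_neg exp(s_n/τ) / exp(s_pos/τ)), which is the InfoNCE loss used by MoCo, so an unlabeled query is treated as in unsupervised MoCo.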

ImageNet Pre-training

Data Preparation

Install PyTorch and download the ImageNet dataset following the official PyTorch ImageNet training example.

Pre-training

To pre-train a ResNet-50 with supervised contrastive learning on ImageNet using 8 GPUs for 800 epochs, run:

    python main_unimoco.py \
    -a resnet50 \
    --lr 0.03 \
    --batch-size 256 \
    --epochs 800 \
    --dist-url 'tcp://localhost:10001' \
    --multiprocessing-distributed --world-size 1 --rank 0 \
    --mlp \
    --moco-t 0.2 \
    --aug-plus \
    --cos \
    [your imagenet-folder with train and val folders]
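In the command, `--batch-size 256` is the total across all GPUs on the node. The sketch below shows how the flags would be split per process, assuming `main_unimoco.py` keeps the convention of MoCo's `main_moco.py` (one process per GPU, batch size divided by the GPU count, `--world-size` counting nodes). The helper `per_process_settings` is hypothetical.

```python
def per_process_settings(batch_size, ngpus_per_node, nodes=1, node_rank=0, gpu=0):
    """Hypothetical helper mirroring MoCo-style multiprocessing-distributed setup."""
    world_size = ngpus_per_node * nodes          # one process per GPU
    rank = node_rank * ngpus_per_node + gpu      # global rank of this process
    per_gpu_batch = batch_size // ngpus_per_node  # --batch-size is split evenly
    return world_size, rank, per_gpu_batch
```

Under this assumption, each of the 8 processes sees 32 images per iteration, and `--world-size 1 --rank 0` describes a single node.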

By default, the script performs full-supervised contrastive learning.

Set --supervised-list to perform semi-supervised contrastive learning with a given label ratio. For example, to use 60% of the labels: --supervised-list ./label_info/60percent.txt.
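One possible way such a list could turn the training set into partially labeled data is sketched below. The file format (one image path per line, relative to the train folder) and the `-1` marker for unlabeled images are assumptions, not the repository's confirmed format, and `read_supervised_list` and `mask_labels` are hypothetical helpers.

```python
UNLABELED = -1  # assumed marker for images whose labels are hidden


def read_supervised_list(lines):
    """Hypothetical parser: assumes one image path per line; blank lines are skipped."""
    return {line.strip() for line in lines if line.strip()}


def mask_labels(samples, supervised):
    """Keep labels for listed images and mark every other image as unlabeled."""
    return [(path, label if path in supervised else UNLABELED) for path, label in samples]


def label_ratio(masked):
    """Fraction of samples that still carry a label."""
    return sum(label != UNLABELED for _, label in masked) / len(masked)
```

In this sketch, masked images would be handled like unsupervised MoCo samples, with only their own augmented key as a positive.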

This script uses all the default hyper-parameters described in MoCo v2.
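Among the MoCo v2 defaults enabled by the command, --cos selects a cosine learning-rate schedule. The sketch below reproduces the schedule in MoCo's `adjust_learning_rate`, which updates the rate once per epoch; it assumes UniMoCo keeps it unchanged, and the name `cosine_lr` is hypothetical.

```python
import math


def cosine_lr(base_lr, epoch, total_epochs):
    """Half-cycle cosine decay from base_lr at epoch 0 to ~0 at total_epochs."""
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * epoch / total_epochs))
```

With --lr 0.03 and --epochs 800, the rate starts at 0.03, is 0.015 at epoch 400, and approaches zero at the end of training.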

Results

ImageNet linear classification and COCO detection (R50-C4, 1x schedule) results. The label ratio is the fraction of ImageNet labels used during pre-training (0% is unsupervised, 100% is full-supervised):

| model | label ratio | top-1 acc. | top-5 acc. | COCO AP |
|---|---|---|---|---|
| UniMoCo | 0% | 71.1 | 90.1 | 39.0 |
| UniMoCo | 10% | 72.0 | 90.3 | 39.3 |
| UniMoCo | 30% | 75.1 | 92.5 | 39.6 |
| UniMoCo | 60% | 76.2 | 93.0 | 39.8 |
| UniMoCo | 100% | 76.4 | 93.1 | 39.6 |

See MoCo for more details on linear classification and detection fine-tuning.

Models are coming soon.

License

This project is released under the CC-BY-NC 4.0 license. See LICENSE for details.
