This repository has gone stale, as I unfortunately no longer have the time to maintain it. If you would like to continue its development as a collaborator, send me an email at [email protected].

Keras-GAN

Collection of Keras implementations of Generative Adversarial Networks (GANs) suggested in research papers. These models are in some cases simplified versions of the ones ultimately described in the papers, but I have chosen to focus on covering the core ideas instead of getting every layer configuration right. Contributions and suggestions of GAN varieties to implement are very welcome.

See also: PyTorch-GAN

Table of Contents

- Installation
- Implementations
  - Auxiliary Classifier GAN
  - Adversarial Autoencoder
  - Bidirectional GAN
  - Boundary-Seeking GAN
  - Conditional GAN
  - Context-Conditional GAN
  - Context Encoder
  - Coupled GANs
  - CycleGAN
  - Deep Convolutional GAN
  - DiscoGAN
  - DualGAN
  - Generative Adversarial Network
  - InfoGAN
  - LSGAN
  - Pix2Pix
  - PixelDA
  - Semi-Supervised GAN
  - Super-Resolution GAN
  - Wasserstein GAN
  - Wasserstein GAN GP

Installation

$ git clone https://github.com/eriklindernoren/Keras-GAN

$ cd Keras-GAN/

$ sudo pip3 install -r requirements.txt

Implementations

AC-GAN

Implementation of Auxiliary Classifier Generative Adversarial Network.

Paper: https://arxiv.org/abs/1610.09585

Example

$ cd acgan/

$ python3 acgan.py
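To illustrate the AC-GAN objective from the paper linked above: the discriminator predicts both whether an image is real (log-likelihood L_S) and which class it belongs to (log-likelihood L_C). D is trained to maximize L_S + L_C and G to maximize L_C - L_S. The following is a minimal sketch in plain Python operating on already-computed probabilities; the function names are hypothetical and this is not the code in acgan.py.

```python
import math


def mean(values):
    return sum(values) / len(values)


def acgan_log_likelihoods(p_real_on_real, p_real_on_fake, p_class_real, p_class_fake):
    """Return (L_S, L_C) as log-likelihoods.

    p_real_on_real: D's probability that each real image is real
    p_real_on_fake: D's probability that each generated image is real
    p_class_real / p_class_fake: probability D assigns to the correct class label
    """
    l_s = mean([math.log(p) for p in p_real_on_real]) + mean(
        [math.log(1.0 - p) for p in p_real_on_fake]
    )
    l_c = mean([math.log(p) for p in p_class_real]) + mean(
        [math.log(p) for p in p_class_fake]
    )
    return l_s, l_c


def acgan_objectives(l_s, l_c):
    """D maximizes L_S + L_C; G maximizes L_C - L_S (both returned as values to maximize)."""
    return l_s + l_c, l_c - l_s
```

Note that both networks benefit from a correct class prediction, while they compete only on the real/fake term.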

Adversarial Autoencoder

Implementation of Adversarial Autoencoder.

Paper: https://arxiv.org/abs/1511.05644

Example

$ cd aae/

$ python3 aae.py

BiGAN

Implementation of Bidirectional Generative Adversarial Network.

Paper: https://arxiv.org/abs/1605.09782

Example

$ cd bigan/

$ python3 bigan.py

BGAN

Implementation of Boundary-Seeking Generative Adversarial Networks.

Paper: https://arxiv.org/abs/1702.08431

Example

$ cd bgan/

$ python3 bgan.py

CC-GAN

Implementation of Semi-Supervised Learning with Context-Conditional Generative Adversarial Networks.

Paper: https://arxiv.org/abs/1611.06430

Example

$ cd ccgan/

$ python3 ccgan.py

CGAN

Implementation of Conditional Generative Adversarial Nets.

Paper: https://arxiv.org/abs/1411.1784

Example

$ cd cgan/

$ python3 cgan.py
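The conditional GAN paper feeds extra information y (such as a class label) into both the generator and the discriminator as an additional input. One simple way to do this, assumed here for illustration, is to concatenate a one-hot encoding of y to the noise vector z (or to the sample x for D). The helper names and sizes below are hypothetical, and cgan.py may combine the label and input differently.

```python
import random


def one_hot(label, n_classes):
    """Encode an integer class label as a one-hot list."""
    vec = [0.0] * n_classes
    vec[label] = 1.0
    return vec


def conditioned_input(features, label, n_classes):
    """Append the one-hot condition y to an input vector (noise z for G, sample x for D)."""
    return list(features) + one_hot(label, n_classes)


# Hypothetical sizes: 100-dimensional noise and 10 classes (e.g. MNIST digits).
z = [random.gauss(0.0, 1.0) for _ in range(100)]
g_input = conditioned_input(z, label=3, n_classes=10)
```

Sampling with a chosen label then lets you ask the generator for a specific class.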

Context Encoder

Implementation of Context Encoders: Feature Learning by Inpainting.

Paper: https://arxiv.org/abs/1604.07379

Example

$ cd context_encoder/

$ python3 context_encoder.py
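A context encoder drops a region of the image (a central square in the paper's simplest setting), encodes the remaining context, and is trained to reconstruct the missing pixels, with a masked L2 reconstruction loss combined with an adversarial loss. The sketch below shows only the masking and the masked reconstruction term on toy 2D lists; the helper names and the 4x4 image size are hypothetical, and the adversarial term is omitted.

```python
def center_mask(size, hole):
    """Return a size x size mask with 1s on a centered hole x hole square (the region to inpaint)."""
    start = (size - hole) // 2
    return [
        [1 if start <= r < start + hole and start <= c < start + hole else 0 for c in range(size)]
        for r in range(size)
    ]


def masked_input(image, mask):
    """Zero out the masked region so the encoder only sees the surrounding context."""
    return [[px * (1 - m) for px, m in zip(row, mrow)] for row, mrow in zip(image, mask)]


def reconstruction_loss(image, prediction, mask):
    """Mean squared error computed only over the masked (missing) pixels."""
    total, count = 0.0, 0
    for row, prow, mrow in zip(image, prediction, mask):
        for px, pp, m in zip(row, prow, mrow):
            if m:
                total += (px - pp) ** 2
                count += 1
    return total / count
```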

CoGAN

Implementation of Coupled Generative Adversarial Networks.

Paper: https://arxiv.org/abs/1606.07536

Example

$ cd cogan/

$ python3 cogan.py

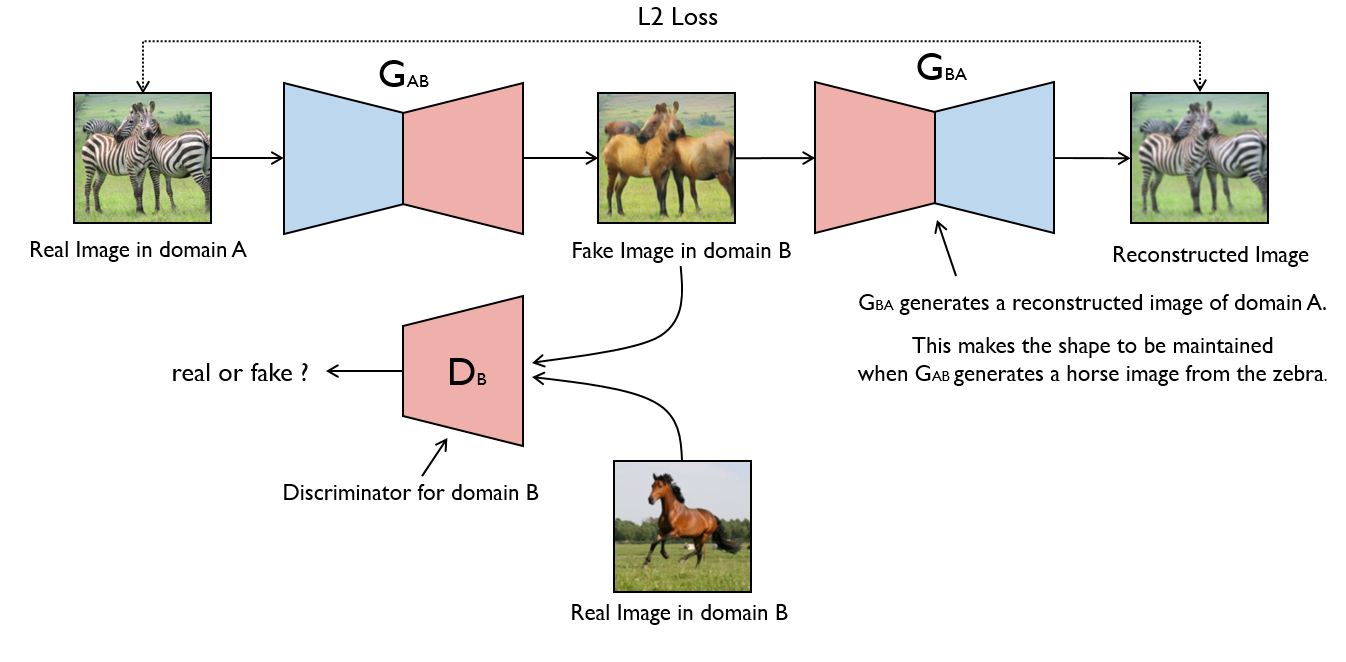

CycleGAN

Implementation of Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks.

Paper: https://arxiv.org/abs/1703.10593

Example

$ cd cyclegan/

$ bash download_dataset.sh apple2orange

$ python3 cyclegan.py
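The key addition in CycleGAN is the cycle-consistency loss: translating an image to the other domain and back should recover the original, so for mappings G: X -> Y and F: Y -> X the L1 terms |F(G(x)) - x| and |G(F(y)) - y| are added to the adversarial losses. A minimal sketch on plain lists follows; the toy mappings are hypothetical stand-ins for the two generators, and the weighting of this term against the adversarial losses is left out.

```python
def l1(a, b):
    """Mean absolute error between two equal-length vectors."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(x, y, G, F):
    """|F(G(x)) - x|_1 + |G(F(y)) - y|_1 for one sample x from domain X and y from Y."""
    forward_cycle = l1(F(G(x)), x)   # X -> Y -> X
    backward_cycle = l1(G(F(y)), y)  # Y -> X -> Y
    return forward_cycle + backward_cycle


# Toy mappings: G doubles values and F halves them, so they are exact inverses.
def G(v):
    return [2.0 * t for t in v]


def F(v):
    return [t / 2.0 for t in v]
```

Because G and F above invert each other, their cycle loss is zero; any mismatch between the two mappings is penalized.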

DCGAN

Implementation of Deep Convolutional Generative Adversarial Network.

Paper: https://arxiv.org/abs/1511.06434

Example

$ cd dcgan/

$ python3 dcgan.py

DiscoGAN

Implementation of Learning to Discover Cross-Domain Relations with Generative Adversarial Networks.

Paper: https://arxiv.org/abs/1703.05192

Example

$ cd discogan/

$ bash download_dataset.sh edges2shoes

$ python3 discogan.py

DualGAN

Implementation of DualGAN: Unsupervised Dual Learning for Image-to-Image Translation.

Paper: https://arxiv.org/abs/1704.02510

Example

$ cd dualgan/

$ python3 dualgan.py

GAN

Implementation of Generative Adversarial Network with an MLP generator and discriminator.

Paper: https://arxiv.org/abs/1406.2661

Example

$ cd gan/

$ python3 gan.py
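As a rough illustration of the objective from the original GAN paper: the discriminator D is trained with binary cross-entropy to output 1 for real samples and 0 for generated ones, and the generator is commonly trained with the non-saturating loss -log D(G(z)) suggested in the paper. The sketch below works on D's output probabilities in plain Python; the function names are hypothetical and not taken from gan.py.

```python
import math


def bce(p, target, eps=1e-12):
    """Binary cross-entropy for one predicted probability p and a 0/1 target."""
    p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def discriminator_loss(d_real, d_fake):
    """D is pushed toward outputting 1 on real samples and 0 on generated ones."""
    real_term = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating G loss: minimize -log D(G(z)), i.e. make D call fakes real."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)


# A discriminator that outputs 0.5 everywhere gives D loss 2*ln(2) and G loss ln(2).
print(discriminator_loss([0.5, 0.5], [0.5]), generator_loss([0.5]))
```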

InfoGAN

Implementation of InfoGAN: Interpretable Representation Learning by Information Maximizing Generative Adversarial Nets.

Paper: https://arxiv.org/abs/1606.03657

Example

$ cd infogan/

$ python3 infogan.py

LSGAN

Implementation of Least Squares Generative Adversarial Networks.

Paper: https://arxiv.org/abs/1611.04076

Example

$ cd lsgan/

$ python3 lsgan.py

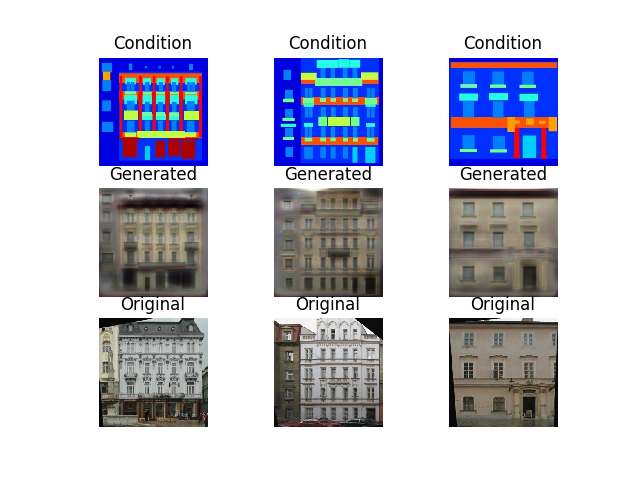
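LSGAN replaces the sigmoid cross-entropy loss with a least-squares loss on the discriminator's raw outputs. With target codes a (fake), b (real) and c (the value G wants D to output for fakes), D minimizes 0.5 E[(D(x) - b)^2] + 0.5 E[(D(G(z)) - a)^2] and G minimizes 0.5 E[(D(G(z)) - c)^2]. The sketch below assumes the 0-1 coding a = 0, b = c = 1, one of the schemes discussed in the paper; function names are hypothetical.

```python
def mean(values):
    return sum(values) / len(values)


def lsgan_d_loss(d_real, d_fake, a=0.0, b=1.0):
    """Least-squares D loss: push outputs on real samples toward b and on fakes toward a."""
    return 0.5 * mean([(d - b) ** 2 for d in d_real]) + 0.5 * mean([(d - a) ** 2 for d in d_fake])


def lsgan_g_loss(d_fake, c=1.0):
    """Least-squares G loss: push D's outputs on generated samples toward c."""
    return 0.5 * mean([(d - c) ** 2 for d in d_fake])
```

Unlike the log loss, the squared error keeps penalizing generated samples that D already classifies correctly but that lie far from the target value.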

Pix2Pix

Implementation of Image-to-Image Translation with Conditional Adversarial Networks.

Paper: https://arxiv.org/abs/1611.07004

Example

$ cd pix2pix/

$ bash download_dataset.sh facades

$ python3 pix2pix.py

PixelDA

Implementation of Unsupervised Pixel-Level Domain Adaptation with Generative Adversarial Networks.

Paper: https://arxiv.org/abs/1612.05424

MNIST to MNIST-M Classification

Trains a classifier on MNIST images that have been translated to resemble MNIST-M (by performing unsupervised image-to-image domain adaptation). This model is compared to the naive solution of training a classifier on MNIST and evaluating it on MNIST-M. The naive model reaches 55% classification accuracy on MNIST-M, while the model trained with domain adaptation reaches 95%.

$ cd pixelda/

$ python3 pixelda.py

| Method | Accuracy on MNIST-M |
|---|---|
| Naive | 55% |
| PixelDA | 95% |

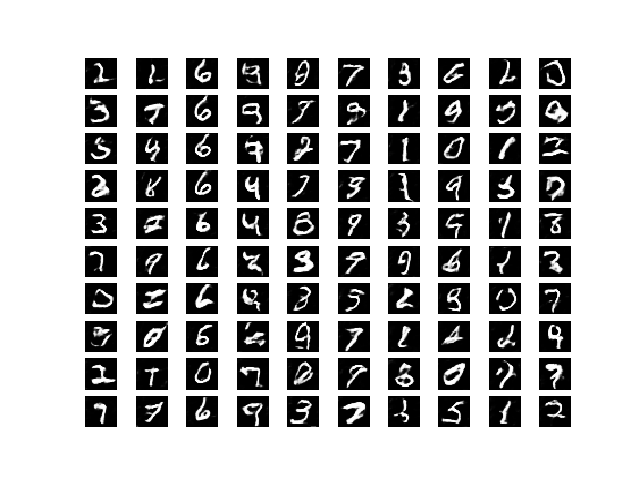

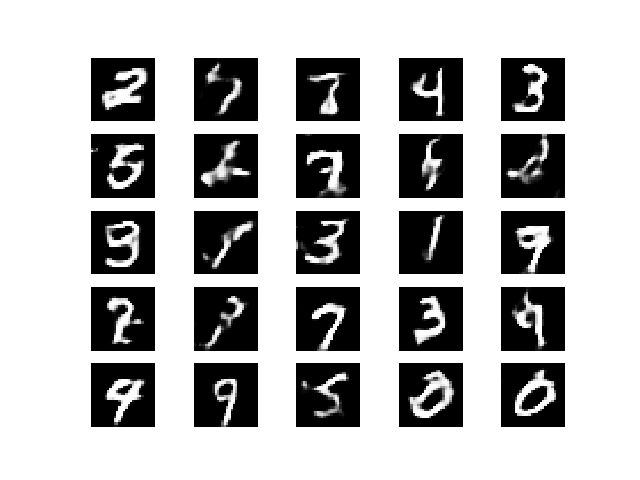

SGAN

Implementation of Semi-Supervised Generative Adversarial Network.

Paper: https://arxiv.org/abs/1606.01583

Example

$ cd sgan/

$ python3 sgan.py

SRGAN

Implementation of Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network.

Paper: https://arxiv.org/abs/1609.04802

Example

$ cd srgan/

Follow the steps at the top of srgan.py, then run:

$ python3 srgan.py

WGAN

Implementation of Wasserstein GAN (with a DCGAN generator and discriminator).

Paper: https://arxiv.org/abs/1701.07875

Example

$ cd wgan/

$ python3 wgan.py
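In WGAN the discriminator becomes a "critic" C with unbounded outputs. The critic maximizes E[C(x)] - E[C(G(z))], the generator maximizes E[C(G(z))], and after each critic update the weights are clipped to [-c, c] (c = 0.01 in the paper) to roughly enforce the Lipschitz constraint. A minimal sketch on precomputed critic outputs and a flat list of weights; the names are hypothetical and this is not the code in wgan.py.

```python
def mean(values):
    return sum(values) / len(values)


def wgan_critic_loss(c_real, c_fake):
    """The critic maximizes E[C(x)] - E[C(G(z))]; as a loss to minimize, negate it."""
    return mean(c_fake) - mean(c_real)


def wgan_generator_loss(c_fake):
    """The generator maximizes E[C(G(z))], i.e. minimizes its negation."""
    return -mean(c_fake)


def clip_weights(weights, c=0.01):
    """Clip every critic weight into [-c, c] after a critic update."""
    return [max(-c, min(c, w)) for w in weights]
```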

WGAN GP

Implementation of Improved Training of Wasserstein GANs.

Paper: https://arxiv.org/abs/1704.00028

Example

$ cd wgan_gp/

$ python3 wgan_gp.py
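WGAN-GP replaces weight clipping with a gradient penalty: for a random interpolate x_hat = eps * x_real + (1 - eps) * x_fake with eps ~ U[0, 1], the critic loss gets an extra term lambda * (||grad C(x_hat)||_2 - 1)^2, with lambda = 10 in the paper. To stay dependency-free, the sketch below approximates the gradient by central finite differences instead of automatic differentiation (a real implementation would use the framework's autodiff); the function names and the linear example critic are hypothetical.

```python
import math
import random


def numerical_grad(f, x, h=1e-5):
    """Central-difference gradient of a scalar function f at point x (list of floats)."""
    grad = []
    for i in range(len(x)):
        up = list(x)
        up[i] += h
        down = list(x)
        down[i] -= h
        grad.append((f(up) - f(down)) / (2.0 * h))
    return grad


def gradient_penalty(critic, real, fake, lam=10.0, eps=None):
    """lam * (||grad C(x_hat)||_2 - 1)^2 at a random interpolate of real and fake."""
    if eps is None:
        eps = random.random()  # eps ~ U[0, 1)
    x_hat = [eps * rv + (1.0 - eps) * fv for rv, fv in zip(real, fake)]
    grad = numerical_grad(critic, x_hat)
    norm = math.sqrt(sum(g * g for g in grad))
    return lam * (norm - 1.0) ** 2


# Hypothetical linear critic with gradient (3, 4), i.e. gradient norm 5.
def linear_critic(v):
    return 3.0 * v[0] + 4.0 * v[1]
```

For the linear critic the penalty is 10 * (5 - 1)^2 = 160 regardless of eps, whereas a critic with unit gradient norm everywhere incurs no penalty.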