Big data on k8s

# microsoft azure

# https://docs.microsoft.com/en-us/cli/azure/install-azure-cli

az account set --subscription []

az aks get-credentials --resource-g

X-news - Pipeline data use scrapy, kafka, spark streaming, spark ML and elasticsearch, Kibana

X-news - Pipeline data use scrapy, kafka, spark streaming, spark ML and elasticsearch, Kibana

C++ Environment InitiatorVisual Studio Code C / C++ Environment Initiator

Visual Studio Code C / C++ Environment Initiator Latest Version : v 1.0.1(2021/11/08) .exe link here About : Visual Studio Code에서 C/C++환경을 MinGW GCC/G

Emacs Python Development Environment

Elpy, the Emacs Python IDE Elpy is an Emacs package to bring powerful Python editing to Emacs. It combines and configures a number of other packages,

Official repository for Spyder - The Scientific Python Development Environment

Copyright © 2009–2021 Spyder Project Contributors Some source files and icons may be under other authorship/licenses; see NOTICE.txt. Project status B

Spyder - The Scientific Python Development Environment

Spyder is a powerful scientific environment written in Python, for Python, and designed by and for scientists, engineers and data analysts. It offers a unique combination of the advanced editing, analysis, debugging, and profiling functionality of a comprehensive development tool with the data exploration, interactive execution, deep inspection, and beautiful visualization capabilities of a scientific package.

Launch a ready-to-code Wagtail Live development environment with a single click.

Wagtail Live Gitpod Launch a ready-to-code Wagtail Live development environment with a single click. Steps: Click the Open in Gitpod button. Relax: a

Tools and Docker images to make a fast Ruby on Rails development environment

Tools and Docker images to make a fast Ruby on Rails development environment. With the production templates, moving from development to production will be seamless.

Dante, my discord bot. Open source project in development and not optimized for other filesystems, install and setup script in development

DanteMode (In private development for ~6 months) Dante, my discord bot. Open source project in development and not optimized for other filesystems, in

Scalable, Portable and Distributed Gradient Boosting (GBDT, GBRT or GBM) Library, for Python, R, Java, Scala, C++ and more. Runs on single machine, Hadoop, Spark, Dask, Flink and DataFlow

eXtreme Gradient Boosting Community | Documentation | Resources | Contributors | Release Notes XGBoost is an optimized distributed gradient boosting l

Serverless proxy for Spark cluster

Hydrosphere Mist Hydrosphere Mist is a serverless proxy for Spark cluster. Mist provides a new functional programming framework and deployment model f

Apache Spark - A unified analytics engine for large-scale data processing

Apache Spark Spark is a unified analytics engine for large-scale data processing. It provides high-level APIs in Scala, Java, Python, and R, and an op

Scalable, Portable and Distributed Gradient Boosting (GBDT, GBRT or GBM) Library, for Python, R, Java, Scala, C++ and more. Runs on single machine, Hadoop, Spark, Dask, Flink and DataFlow

eXtreme Gradient Boosting Community | Documentation | Resources | Contributors | Release Notes XGBoost is an optimized distributed gradient boosting l

Scalable, Portable and Distributed Gradient Boosting (GBDT, GBRT or GBM) Library, for Python, R, Java, Scala, C++ and more. Runs on single machine, Hadoop, Spark, Dask, Flink and DataFlow

eXtreme Gradient Boosting Community | Documentation | Resources | Contributors | Release Notes XGBoost is an optimized distributed gradient boosting l

Distributed Deep learning with Keras & Spark

Elephas: Distributed Deep Learning with Keras & Spark Elephas is an extension of Keras, which allows you to run distributed deep learning models at sc

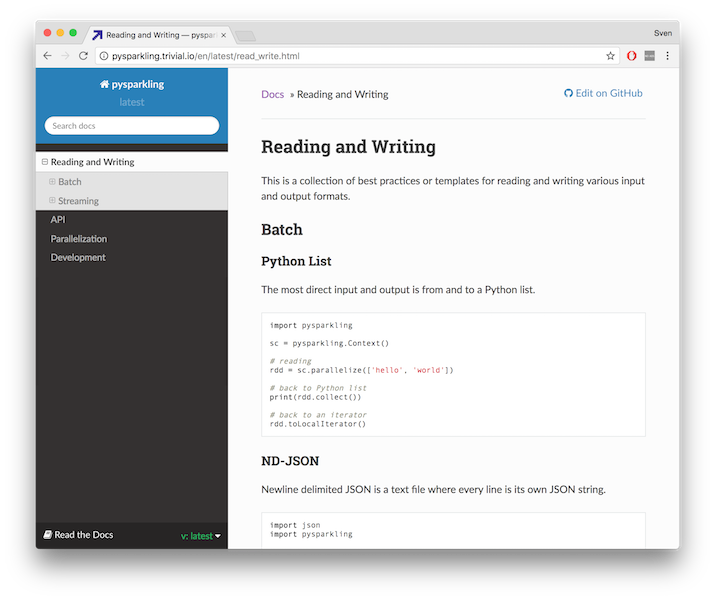

A pure Python implementation of Apache Spark's RDD and DStream interfaces.

pysparkling Pysparkling provides a faster, more responsive way to develop programs for PySpark. It enables code intended for Spark applications to exe

Koalas: pandas API on Apache Spark

pandas API on Apache Spark Explore Koalas docs » Live notebook · Issues · Mailing list Help Thirsty Koalas Devastated by Recent Fires The Koalas proje

BigDL: Distributed Deep Learning Framework for Apache Spark

BigDL: Distributed Deep Learning on Apache Spark What is BigDL? BigDL is a distributed deep learning library for Apache Spark; with BigDL, users can w

Distributed Deep learning with Keras & Spark

Elephas: Distributed Deep Learning with Keras & Spark Elephas is an extension of Keras, which allows you to run distributed deep learning models at sc