Learning Knowledge Bases with Parameters for Task-Oriented Dialogue Systems

This is the implementation of the paper:

Learning Knowledge Bases with Parameters for Task-Oriented Dialogue Systems. Andrea Madotto, Samuel Cahyawijaya, Genta Indra Winata, Yan Xu, Zihan Liu, Zhaojiang Lin, Pascale Fung. Findings of EMNLP 2020 [PDF]

If you use any source code or datasets included in this toolkit in your work, please cite the following paper. The BibTeX entry is listed below:

@article{madotto2020learning,

title={Learning Knowledge Bases with Parameters for Task-Oriented Dialogue Systems},

author={Madotto, Andrea and Cahyawijaya, Samuel and Winata, Genta Indra and Xu, Yan and Liu, Zihan and Lin, Zhaojiang and Fung, Pascale},

journal={arXiv preprint arXiv:2009.13656},

year={2020}

}

Abstract

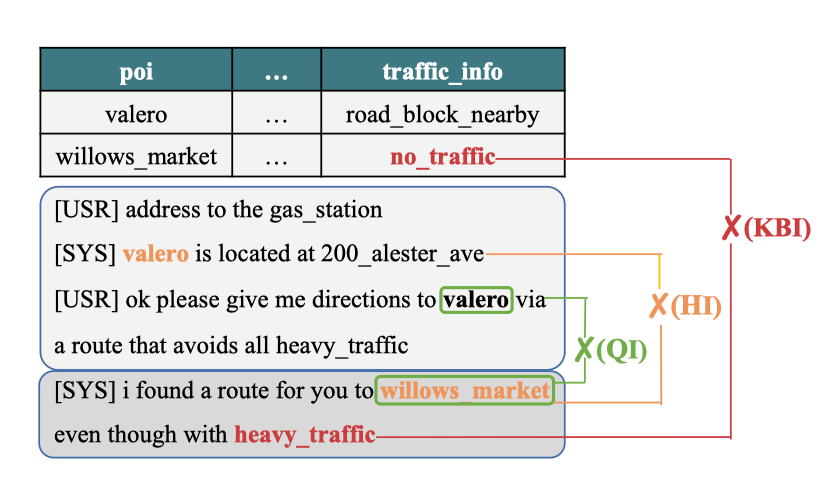

Task-oriented dialogue systems are either modularized with separate dialogue state tracking (DST) and management steps or end-to-end trainable. In either case, the knowledge base (KB) plays an essential role in fulfilling user requests. Modularized systems rely on DST to interact with the KB, which is expensive in terms of annotation and inference time. End-to-end systems use the KB directly as input, but they cannot scale when the KB is larger than a few hundred entries. In this paper, we propose a method to embed the KB, of any size, directly into the model parameters. The resulting model does not require any DST or template responses, nor the KB as input, and it can dynamically update its KB via finetuning. We evaluate our solution in five task-oriented dialogue datasets with small, medium, and large KB size. Our experiments show that end-to-end models can effectively embed knowledge bases in their parameters and achieve competitive performance in all evaluated datasets.

Knowledge-embedded Dialogue:

During training, the KE dialogues are generated by filling the *TEMPLATE* with the *user goal query* results, and they are used to embed the KB into the model parameters theta. At testing time, the model does not use any external knowledge to generate the correct responses.

Dependencies

Our dependencies are listed in requirements.txt. You can install them by running

❱❱❱ pip install -r requirements.txt

Our code also includes fp16 support with apex. You can find the package at https://github.com/NVIDIA/apex.

Experiments

bAbI-5

Dataset

Download the preprocessed dataset and put the zip file inside the ./knowledge_embed/babi5 folder. Extract the zip file by executing

❱❱❱ cd ./knowledge_embed/babi5

❱❱❱ unzip dialog-bAbI-tasks.zip

Generate the delexicalized dialogues from bAbI-5 dataset via

❱❱❱ python3 generate_delexicalization_babi.py
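As a rough illustration of what delexicalization means here, the sketch below replaces KB values that appear in an utterance with slot placeholders. The `[slot]` placeholder format, the `delexicalize` helper, and the example entity are assumptions made for illustration; they are not taken from generate_delexicalization_babi.py.

```python
import re

def delexicalize(utterance, entity):
    """Replace each KB value found in the utterance with a [slot] placeholder.

    Hypothetical helper: the placeholder format is an assumption.
    """
    # Replace longer values first so a short value never clobbers part of a longer one.
    for slot, value in sorted(entity.items(), key=lambda kv: -len(kv[1])):
        pattern = r"\b" + re.escape(value) + r"\b"
        utterance = re.sub(pattern, f"[{slot}]", utterance)
    return utterance

# Illustrative KB entry (slot names are assumptions).
entity = {
    "name": "resto_rome_cheap_indian_1stars",
    "cuisine": "indian",
    "location": "rome",
}

print(delexicalize("i want indian food in rome", entity))
print(delexicalize("what about resto_rome_cheap_indian_1stars", entity))
```

The resulting template no longer mentions any specific KB entry, so it can later be refilled with other entries.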

Generate the lexicalized data from bAbI-5 dataset via

❱❱❱ python generate_dialogues_babi5.py --dialogue_path ./dialog-bAbI-tasks/dialog-babi-task5trn_record-delex.txt --knowledge_path ./dialog-bAbI-tasks/dialog-babi-kb-all.txt --output_folder ./dialog-bAbI-tasks --num_augmented_knowledge <num_augmented_knowledge> --num_augmented_dialogue <num_augmented_dialogues> --random_seed 0

Here, the maximum <num_augmented_knowledge> is 558 (recommended) and the maximum <num_augmented_dialogues> is 264, as these correspond to the number of KB entries and the number of dialogues in the bAbI-5 dataset.
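To make the role of the two arguments more concrete, here is a minimal sketch of lexicalization. It assumes that a subset of templates and a subset of KB entries are sampled and that every chosen template is filled with every chosen entry. How generate_dialogues_babi5.py actually combines them may differ, and the `lexicalize` helper and `[slot]` format are hypothetical.

```python
import random

def lexicalize(templates, kb, num_augmented_knowledge, num_augmented_dialogue, seed=0):
    """Fill sampled templates with sampled KB entries (illustrative assumption)."""
    rng = random.Random(seed)
    # Neither argument can usefully exceed the number of available items.
    entities = rng.sample(kb, min(num_augmented_knowledge, len(kb)))
    chosen = rng.sample(templates, min(num_augmented_dialogue, len(templates)))
    dialogues = []
    for template in chosen:
        for entity in entities:
            text = template
            for slot, value in entity.items():
                text = text.replace(f"[{slot}]", value)
            dialogues.append(text)
    return dialogues

# Toy data; slot names and values are made up for the example.
templates = ["book a table at [name] in [location]", "is [name] in the [price] price range"]
kb = [
    {"name": "resto_a", "location": "rome", "price": "cheap"},
    {"name": "resto_b", "location": "paris", "price": "moderate"},
    {"name": "resto_c", "location": "london", "price": "expensive"},
]

for dialogue in lexicalize(templates, kb, num_augmented_knowledge=2, num_augmented_dialogue=1):
    print(dialogue)
```

Capping both arguments at the number of available items mirrors the maximum values stated above.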

Fine-tune GPT-2

We provide a checkpoint of the GPT-2 model fine-tuned on the bAbI training set. You can also train the model yourself using the following command.

❱❱❱ cd ./modeling/babi5

❱❱❱ python main.py --model_checkpoint gpt2 --dataset BABI --dataset_path ../../knowledge_embed/babi5/dialog-bAbI-tasks --n_epochs <num_epoch> --kbpercentage <num_augmented_dialogues>

Note that the value of --kbpercentage is equal to the <num_augmented_dialogues> used in the lexicalization step. This parameter selects the augmentation file to embed into the training dataset.

You can evaluate the model by executing the following script

❱❱❱ python evaluate.py --model_checkpoint <model_checkpoint_folder> --dataset BABI --dataset_path ../../knowledge_embed/babi5/dialog-bAbI-tasks

Scoring bAbI-5

To run the scorer for the bAbI-5 task model, use the following command. The scorer reads every result.json under the runs folder generated by evaluate.py.

❱❱❱ python scorer_BABI5.py --model_checkpoint <model_checkpoint> --dataset BABI --dataset_path ../../knowledge_embed/babi5/dialog-bAbI-tasks --kbpercentage 0

CamRest

Dataset

Download the preprocessed dataset and put the zip file under ./knowledge_embed/camrest folder. Unzip the zip file by executing

❱❱❱ cd ./knowledge_embed/camrest

❱❱❱ unzip CamRest.zip

Generate the delexicalized dialogues from CamRest dataset via

❱❱❱ python3 generate_delexicalization_CAMREST.py

Generate the lexicalized data from CamRest dataset via

❱❱❱ python generate_dialogues_CAMREST.py --dialogue_path ./CamRest/train_record-delex.txt --knowledge_path ./CamRest/KB.json --output_folder ./CamRest --num_augmented_knowledge <num_augmented_knowledge> --num_augmented_dialogue <num_augmented_dialogues> --random_seed 0

Here, the maximum <num_augmented_knowledge> is 201 (recommended) and the maximum <num_augmented_dialogues> is 156, which is quite large, as these correspond to the number of KB entries and the number of dialogues in the CamRest dataset.

Fine-tune GPT-2

We provide a checkpoint of the GPT-2 model fine-tuned on the CamRest training set. You can also train the model yourself using the following command.

❱❱❱ cd ./modeling/camrest/

❱❱❱ python main.py --model_checkpoint gpt2 --dataset CAMREST --dataset_path ../../knowledge_embed/camrest/CamRest --n_epochs <num_epoch> --kbpercentage <num_augmented_dialogues>

Note that the value of --kbpercentage is equal to the <num_augmented_dialogues> used in the lexicalization step. This parameter selects the augmentation file to embed into the training dataset.

You can evaluate the model by executing the following script

❱❱❱ python evaluate.py --model_checkpoint <model_checkpoint_folder> --dataset CAMREST --dataset_path ../../knowledge_embed/camrest/CamRest

Scoring CamRest

To run the scorer for the CamRest model, use the following command. The scorer reads every result.json under the runs folder generated by evaluate.py.

❱❱❱ python scorer_CAMREST.py --model_checkpoint <model_checkpoint> --dataset CAMREST --dataset_path ../../knowledge_embed/camrest/CamRest --kbpercentage 0

SMD

Dataset

Download the preprocessed dataset and put it under ./knowledge_embed/smd folder.

❱❱❱ cd ./knowledge_embed/smd

❱❱❱ unzip SMD.zip

Fine-tune GPT-2

We provide a checkpoint of the GPT-2 model fine-tuned on the SMD training set. Download the checkpoint and put it under the ./modeling folder.

❱❱❱ cd ./knowledge_embed/smd

❱❱❱ mkdir ./runs

❱❱❱ unzip ./knowledge_embed/smd/SMD_gpt2_graph_False_adj_False_edge_False_unilm_False_flattenKB_False_historyL_1000000000_lr_6.25e-05_epoch_10_weighttie_False_kbpercentage_0_layer_12.zip -d ./runs

You can also choose to train the model by yourself using the following command.

❱❱❱ cd ./modeling/smd

❱❱❱ python main.py --dataset SMD --lr 6.25e-05 --n_epochs 10 --kbpercentage 0 --layers 12

Prepare Knowledge-embedded dialogues

First, we need to build the databases used for SQL queries.

❱❱❱ cd ./knowledge_embed/smd

❱❱❱ python generate_dialogues_SMD.py --build_db --split test

Then we generate dialogues from the pre-designed templates for each domain. The following command generates dialogues in the weather domain. To generate dialogues in the other two domains, replace weather with navigate or schedule in the dialogue_path and domain arguments. You can also change the number of templates used in the relexicalization process through the num_augmented_dialogue argument.

❱❱❱ python generate_dialogues_SMD.py --split test --dialogue_path ./templates/weather_template.txt --domain weather --num_augmented_dialogue 100 --output_folder ./SMD/test
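The two steps above (build a database, then fill templates with query results) can be sketched with Python's built-in sqlite3 module. This is only an illustration under stated assumptions: the table schema, the `[slot]` placeholder format, and the `build_db` and `fill_template` helpers are hypothetical and not taken from generate_dialogues_SMD.py.

```python
import sqlite3

def build_db(rows):
    """Load (location, day, forecast) rows into an in-memory SQLite table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE weather (location TEXT, day TEXT, forecast TEXT)")
    conn.executemany("INSERT INTO weather VALUES (?, ?, ?)", rows)
    conn.commit()
    return conn

def fill_template(conn, template, location, day):
    """Query the KB for the user goal and relexicalize the template with the result."""
    row = conn.execute(
        "SELECT forecast FROM weather WHERE location = ? AND day = ?",
        (location, day),
    ).fetchone()
    if row is None:
        return None  # no KB entry satisfies this user goal
    filled = template
    for slot, value in {"location": location, "day": day, "forecast": row[0]}.items():
        filled = filled.replace(f"[{slot}]", value)
    return filled

# Toy KB rows and template, made up for the example.
conn = build_db([("new york", "monday", "rain"), ("boston", "tuesday", "snow")])
template = (
    "USER: what is the weather in [location] on [day]? "
    "SYSTEM: it will be [forecast] in [location] on [day]."
)
print(fill_template(conn, template, "boston", "tuesday"))
```

Returning None when the query has no result keeps dialogues that contradict the KB out of the generated data.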

Adapt fine-tuned GPT-2 model to the test set

❱❱❱ python evaluate_finetune.py --dataset SMD --model_checkpoint runs/SMD_gpt2_graph_False_adj_False_edge_False_unilm_False_flattenKB_False_historyL_1000000000_lr_6.25e-05_epoch_10_weighttie_False_kbpercentage_0_layer_12 --top_k 1 --eval_indices 0,303 --filter_domain ""

You can also speed up the fine-tuning process by running experiments in parallel. Please modify the GPU setting at line 14 (#L14) of the code.

❱❱❱ python runner_expe_SMD.py

MWOZ (2.1)

Dataset

Download the preprocessed dataset and put it under ./knowledge_embed/mwoz folder.

❱❱❱ cd ./knowledge_embed/mwoz

❱❱❱ unzip mwoz.zip

Prepare Knowledge-Embedded dialogues (You can skip this step, if you have downloaded the zip file above)

You can prepare the datasets by running

❱❱❱ bash generate_MWOZ_all_data.sh

The shell script generates the delexicalized dialogues from MWOZ dataset by calling

❱❱❱ python generate_delex_MWOZ_ATTRACTION.py

❱❱❱ python generate_delex_MWOZ_HOTEL.py

❱❱❱ python generate_delex_MWOZ_RESTAURANT.py

❱❱❱ python generate_delex_MWOZ_TRAIN.py

❱❱❱ python generate_redelex_augmented_MWOZ.py

❱❱❱ python generate_MWOZ_dataset.py

Fine-tune GPT-2

We provide a checkpoint of the GPT-2 model fine-tuned on the MWOZ training set. Download the checkpoint and put it under the ./modeling folder.

❱❱❱ cd ./knowledge_embed/mwoz

❱❱❱ mkdir ./runs

❱❱❱ unzip ./mwoz.zip -d ./runs

You can also choose to train the model by yourself using the following command.

❱❱❱ cd ./modeling/mwoz

❱❱❱ python main.py --model_checkpoint gpt2 --dataset MWOZ_SINGLE --max_history 50 --train_batch_size 6 --kbpercentage 100 --fp16 O2 --gradient_accumulation_steps 3 --balance_sampler --n_epochs 10

OpenDialKG

Getting Started

We use the neo4j community server edition and the apoc library for processing graph data. apoc is used to parallelize queries in neo4j so that we can process large-scale graphs faster.

Before proceeding to the dataset section, ensure that you have neo4j (https://neo4j.com/download-center/#community) and apoc (https://neo4j.com/developer/neo4j-apoc/) installed on your system.

If you are not familiar with Cypher and apoc syntax, you can follow the tutorials at https://neo4j.com/developer/cypher/ and https://neo4j.com/blog/intro-user-defined-procedures-apoc/

Dataset

Download the original dataset and put the zip file inside the ./knowledge_embed/opendialkg folder. Extract the zip file by executing

❱❱❱ cd ./knowledge_embed/opendialkg

❱❱❱ unzip https://drive.google.com/file/d/1llH4-4-h39sALnkXmGR8R6090xotE0PE/view?usp=sharing.zip

Generate the delexicalized dialogues from opendialkg dataset via (WARNING: this requires around 12 hours to run)

❱❱❱ python3 generate_delexicalization_DIALKG.py

This script will produce ./opendialkg/dialogkg_train_meta.pt, which will be used to generate the lexicalized dialogues. You can then generate the lexicalized dialogues from the opendialkg dataset via

❱❱❱ python generate_dialogues_DIALKG.py --random_seed <random_seed> --batch_size 100 --max_iteration <max_iter> --stop_count <stop_count> --connection_string bolt://localhost:7687

This script produces at most batch_size * max_iter dialogue samples. A batch may contain no valid candidate, in which case fewer samples are produced. Generation is also limited by stop_count: it stops once the number of generated samples is greater than or equal to the specified stop_count. The script produces 4 files: ./opendialkg/db_count_records_{random_seed}.csv, ./opendialkg/used_count_records_{random_seed}.csv, and ./opendialkg/generation_iteration_{random_seed}.csv, which are used for checking the distribution shift of the counts in the DB; and ./opendialkg/generated_dialogue_bs100_rs{random_seed}.json, which contains the generated samples.
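The interaction between batch_size, max_iteration, stop_count, and the random seed can be sketched as a simple loop. This is a minimal illustration, not the logic of generate_dialogues_DIALKG.py: the `generate` and `toy_batch` functions are hypothetical, and the toy sampler merely rejects about half of its candidates to mimic batches that yield fewer valid samples than batch_size.

```python
import random

def toy_batch(rng, batch_size):
    """Hypothetical sampler: roughly half of the candidates are rejected as invalid."""
    candidates = [rng.random() for _ in range(batch_size)]
    return [c for c in candidates if c > 0.5]

def generate(sample_batch, batch_size, max_iteration, stop_count, random_seed):
    """Collect samples batch by batch until stop_count is reached or iterations run out."""
    rng = random.Random(random_seed)  # a fixed seed makes the sampling reproducible
    samples = []
    for _ in range(max_iteration):
        # A batch may contribute fewer than batch_size samples, possibly none.
        samples.extend(sample_batch(rng, batch_size))
        if len(samples) >= stop_count:
            break
    return samples

first = generate(toy_batch, batch_size=100, max_iteration=5, stop_count=120, random_seed=0)
second = generate(toy_batch, batch_size=100, max_iteration=5, stop_count=120, random_seed=0)
print(len(first), first == second)
```

Because the check happens after each batch, the final count can exceed stop_count by up to one batch, and it never exceeds batch_size * max_iteration.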

Notes:

- You might need to change the neo4j password inside generate_delexicalization_DIALKG.py and generate_dialogues_DIALKG.py manually.

- Because there is a very large number of possible connections in dialkg, we use a sampling method to generate the data, so the random seed is crucial if you want reproducible results.

Fine-tune GPT-2

We provide a checkpoint of the GPT-2 model fine-tuned on the opendialkg training set. You can also train the model yourself using the following command.

❱❱❱ cd ./modeling/opendialkg

❱❱❱ python main.py --dataset_path ../../knowledge_embed/opendialkg/opendialkg --model_checkpoint gpt2 --dataset DIALKG --n_epochs 50 --kbpercentage <random_seed> --train_batch_size 8 --valid_batch_size 8

Note that the value of --kbpercentage is equal to the <random_seed> used in the lexicalization step. This parameter selects the augmentation file to embed into the training dataset.

You can evaluate the model by executing the following script

❱❱❱ python evaluate.py --model_checkpoint <model_checkpoint_folder> --dataset DIALKG --dataset_path ../../knowledge_embed/opendialkg/opendialkg

Scoring OpenDialKG

To run the scorer for the OpenDialKG model, use the following command. The scorer reads every result.json under the runs folder generated by evaluate.py.

❱❱❱ python scorer_DIALKG5.py --model_checkpoint <model_checkpoint> --dataset DIALKG --dataset_path ../../knowledge_embed/opendialkg/opendialkg --kbpercentage 0

Further Details

For details regarding the experiments, hyperparameters, and evaluation results, please refer to the main paper and supplementary materials of our work.
