Instant Neural Graphics Primitives

Ever wanted to train a NeRF model of a fox in under 5 seconds? Or fly around a scene captured from photos of a factory robot? Of course you have!

Here you will find an implementation of four neural graphics primitives, being neural radiance fields (NeRF), signed distance functions (SDFs), neural images, and neural volumes. In each case, we train and render a MLP with multiresolution hash input encoding using the tiny-cuda-nn framework.

Instant Neural Graphics Primitives with a Multiresolution Hash Encoding

Thomas Müller, Alex Evans, Christoph Schied, Alexander Keller

arXiv [cs.GR], Jan 2022

[ Project page ] [ Paper ] [ Video ]

For business inquiries, please visit our website and submit the form: NVIDIA Research Licensing

Requirements

- Both Windows and Linux are supported.

- An NVIDIA GPU; tensor cores increase performance when available. All shown results come from an RTX 3090.

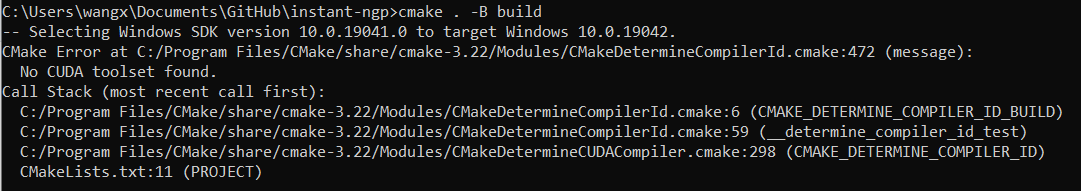

- CUDA v10.2 or higher, a C++14 capable compiler, and CMake v3.19 or higher.

- (optional) Python 3.7 or higher for interactive bindings. Also, run

pip install -r requirements.txt.- On some machines,

pyexrrefuses to install viapip. This can be resolved by installing OpenEXR from here.

- On some machines,

- (optional) OptiX 7.3 or higher for faster mesh SDF training. Set the environment variable

OptiX_INSTALL_DIRto the installation directory if it is not discovered automatically.

If you are using Linux, install the following packages

sudo apt-get install build-essential git python3-dev python3-pip libopenexr-dev libxi-dev \

libglfw3-dev libglew-dev libomp-dev libxinerama-dev libxcursor-dev

We also recommend installing CUDA and OptiX in /usr/local/ and adding the CUDA installation to your PATH. For example, if you have CUDA 11.4, add the following to your ~/.bashrc

export PATH="/usr/local/cuda-11.4/bin:$PATH"

export LD_LIBRARY_PATH="/usr/local/cuda-11.4/lib64:$LD_LIBRARY_PATH"

Compilation (Windows & Linux)

Begin by cloning this repository and all its submodules using the following command:

$ git clone --recursive https://github.com/nvlabs/instant-ngp

$ cd instant-ngp

Then, use CMake to build the project:

instant-ngp$ cmake . -B build

instant-ngp$ cmake --build build --config RelWithDebInfo -j 16

If the build succeeded, you can now run the code via the build/testbed executable or the scripts/run.py script described below.

If automatic GPU architecture detection fails, (as can happen if you have multiple GPUs installed), set the TCNN_CUDA_ARCHITECTURES enivonment variable for the GPU you would like to use. Set it to 86 for RTX 3000 cards, 80 for A100 cards, and 75 for RTX 2000 cards.

Interactive training and rendering

This codebase comes with an interactive testbed that includes many features beyond our academic publication:

- Additional training features, such as extrinsics and intrinsics optimization.

- Marching cubes for

NeRF->MeshandSDF->Meshconversion. - A spline-based camera path editor to create videos.

- Debug visualizations of the activations of every neuron input and output.

- And many more task-specific settings.

- See also our one minute demonstration video of the tool.

NeRF fox

One test scene is provided in this repository, using a small number of frames from a casually captured phone video:

instant-ngp$ ./build/testbed --scene data/nerf/fox

Alternatively, download any NeRF-compatible scene (e.g. from the NeRF authors' drive). Now you can run:

instant-ngp$ ./build/testbed --scene data/nerf_synthetic/lego

For more information about preparing datasets for use with our NeRF implementation, please see this document.

SDF armadillo

instant-ngp$ ./build/testbed --scene data/sdf/armadillo.obj

Image of Einstein

instant-ngp$ ./build/testbed --scene data/image/albert.exr

To reproduce the gigapixel results, download, for example, the Tokyo image and convert it to .bin using the scripts/image2bin.py script. This custom format improves compatibility and loading speed when resolution is high. Now you can run:

instant-ngp$ ./build/testbed --scene data/image/tokyo.bin

Volume Renderer

Download the nanovdb volume for the Disney cloud, which is derived from here (CC BY-SA 3.0).

instant-ngp$ ./build/testbed --mode volume --scene data/volume/wdas_cloud_quarter.nvdb

Python bindings

To conduct controlled experiments in an automated fashion, all features from the interactive testbed (and more!) have Python bindings that can be easily instrumented. For an example of how the ./build/testbed application can be implemented and extended from within Python, see ./scripts/run.py, which supports a superset of the command line arguments that ./build/testbed does.

Happy hacking!

Thanks

Many thanks to Jonathan Tremblay and Andrew Tao for testing early versions of this codebase and to Arman Toornias and Saurabh Jain for the factory robot dataset.

This project makes use of a number of awesome open source libraries, including:

- tiny-cuda-nn for fast CUDA MLP networks

- tinyexr for EXR format support

- tinyobjloader for OBJ format support

- stb_image for PNG and JPEG support

- Dear ImGui an excellent immediate mode GUI library

- Eigen a C++ template library for linear algebra

- pybind11 for seamless C++ / Python interop

- and others! See the

dependenciesfolder.

Many thanks to the authors of these brilliant projects!

License

Copyright © 2022, NVIDIA Corporation. All rights reserved.

This work is made available under the Nvidia Source Code License-NC. Click here to view a copy of this license.