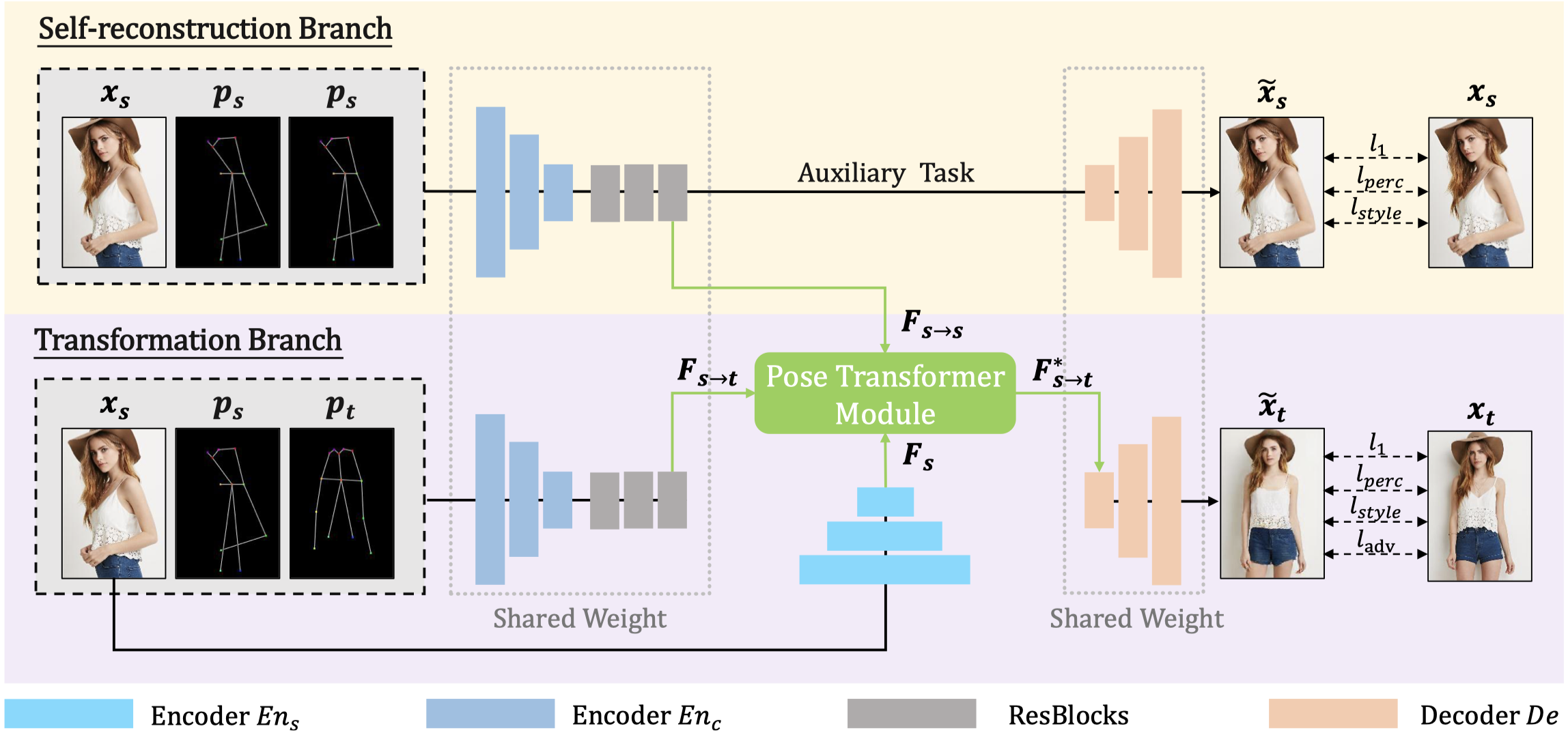

Dual-task Pose Transformer Network

The source code for our paper "Exploring Dual-task Correlation for Pose Guided Person Image Generation“ (CVPR2022)

Get Start

1) Requirement

- Python 3.7.9

- Pytorch 1.7.1

- torchvision 0.8.2

- CUDA 11.1

- NVIDIA A100 40GB PCIe

2) Data Preperation

Following PATN, the dataset split files and extracted keypoints files can be obtained as follows:

DeepFashion

-

Download the DeepFashion dataset in-shop clothes retrival benchmark, and put them under the

./dataset/fashiondirectory. -

Download train/test pairs and train/test keypoints annotations from Google Drive, including fasion-resize-pairs-train.csv, fasion-resize-pairs-test.csv, fasion-resize-annotation-train.csv, fasion-resize-annotation-train.csv, train.lst, test.lst, and put them under the

./dataset/fashiondirectory. -

Split the raw image into the training set (

./dataset/fashion/train) and test set (./dataset/fashion/test):

python data/generate_fashion_datasets.py

Market1501

-

Download the Market1501 dataset from here. Rename bounding_box_train and bounding_box_test as train and test, and put them under the

./dataset/marketdirectory. -

Download train/test key points annotations from Google Drive including market-pairs-train.csv, market-pairs-test.csv, market-annotation-train.csv, market-annotation-train.csv. Put these files under the

./dataset/marketdirectory.

3) Train a model

DeepFashion

python train.py --name=DPTN_fashion --model=DPTN --dataset_mode=fashion --dataroot=./dataset/fashion --batchSize 32 --gpu_id=0

Market1501

python train.py --name=DPTN_market --model=DPTN --dataset_mode=market --dataroot=./dataset/market --dis_layer=3 --lambda_g=5 --lambda_rec 2 --t_s_ratio=0.8 --save_latest_freq=10400 --batchSize 32 --gpu_id=0

4) Test the model

You can directly download our test results from Google Drive: Deepfashion, Market1501.

DeepFashion

python test.py --name=DPTN_fashion --model=DPTN --dataset_mode=fashion --dataroot=./dataset/fashion --which_epoch latest --results_dir ./results/DPTN_fashion --batchSize 1 --gpu_id=0

Market1501

python test.py --name=DPTN_market --model=DPTN --dataset_mode=market --dataroot=./dataset/market --which_epoch latest --results_dir=./results/DPTN_market --batchSize 1 --gpu_id=0

5) Evaluation

We adopt SSIM, PSNR, FID and LPIPS for the evaluation.

DeepFashion

python -m metrics.metrics --gt_path=./dataset/fashion/test --distorated_path=./results/DPTN_fashion --fid_real_path=./dataset/fashion/train --name=./fashion

Market1501

python -m metrics.metrics --gt_path=./dataset/market/test --distorated_path=./results/DPTN_market --fid_real_path=./dataset/market/train --name=./market --market

6) Pre-trained Model

Our pre-trained model can be downloaded from Google Drive: Deepfashion, Market1501.

Citation

Acknowledgement

We build our project based on pix2pix. Some dataset preprocessing methods are derived from PATN.

Do I need to continue training or do I stop to change the parameters.

Thank You!

Do I need to continue training or do I stop to change the parameters.

Thank You!