Authors:
Ada Madejska, MCDB, UCSB (contact: [email protected])
Nick Noll, UCSB

This pipeline takes error-prone Nanopore reads and aims to increase the percent identity of the BLAST hits obtained when identifying species. Reads in fastq format are put through the following steps.

1. Quality control - very short and very long reads (reads that deviate strongly from the usual length of the 16S sequence) are dropped.
2. Kmer frequency matrix - a kmer frequency matrix is built from the reads that pass quality control. The value of k can be changed (k=5 or 6 is recommended).
3. UMAP projection and HDBSCAN clustering - the kmer frequency matrix is used to create a UMAP projection, which is then clustered with HDBSCAN. The default parameters for the UMAP and HDBSCAN functions were chosen based on a mock dataset but can be changed.
4. Refinement - in our tests on mock datasets, reads from different species sometimes clustered together. To prevent that, we include a refinement step that runs a Clustal Omega multiple sequence alignment (MSA) on each cluster. The alignment outputs a guide tree, which is used to divide the cluster into smaller subclusters. The distance threshold can be changed to suit each dataset.
5. Consensus making - the last step creates a consensus sequence for each defined cluster by majority calling. The direction of the reads is fixed using minimap2, the alignment is performed by MAFFT, and the consensus is created using em_cons. The consensus sequences are then run through BLASTN to identify each cluster.

Software Dependencies:
To run the pipeline successfully, the following software must be installed.

1. Minimap2 - for the consensus making step (https://github.com/lh3/minimap2)
2. MAFFT - for alignment in the consensus making step (https://mafft.cbrc.jp/alignment/software/)
3. EM_CONS - for creating the consensus (http://emboss.sourceforge.net/apps/cvs/emboss/apps/cons.html)
4. NCBI BLASTN - for identification of the consensus sequences in the database (https://ftp.ncbi.nlm.nih.gov/blast/executables/LATEST/) (a 16S database is also required)
5. CLUSTALO - for the refinement step (http://www.clustal.org/omega/)

Specifications:
This pipeline runs on Python 3.8.10 and Julia 1.4.1.

The following Python libraries are required: BioPython, hdbscan, matplotlib, pandas, sklearn, umap.

The following Julia packages are required: Pkg, DataFrames, CSV.
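
As an illustration of steps 1 and 2 above (quality control by read length, then the kmer frequency matrix), here is a minimal sketch in plain Python. It is not the pipeline's own code: the function names `length_filter` and `kmer_frequency_matrix`, the length bounds, and the choice to normalise counts to per-read frequencies are all assumptions made for this example.

```python
from collections import Counter
from itertools import product


def length_filter(reads, min_len=1200, max_len=1800):
    """Step 1 (sketch): keep reads whose length lies in [min_len, max_len].

    The default bounds are hypothetical placeholders, not the pipeline's values.
    """
    return [r for r in reads if min_len <= len(r) <= max_len]


def kmer_frequency_matrix(reads, k=5):
    """Step 2 (sketch): one row per read, one column per possible DNA k-mer.

    Each row holds the read's k-mer counts divided by the number of counted
    k-mers (normalisation is an assumption of this example). Reads are
    expected to be uppercase strings over A, C, G, T.
    """
    kmers = ["".join(p) for p in product("ACGT", repeat=k)]
    index = {kmer: i for i, kmer in enumerate(kmers)}
    matrix = []
    for read in reads:
        counts = Counter(read[i:i + k] for i in range(len(read) - k + 1))
        row = [0.0] * len(kmers)
        total = 0
        for kmer, n in counts.items():
            if kmer in index:  # skip k-mers containing N or other symbols
                row[index[kmer]] = float(n)
                total += n
        if total:
            row = [v / total for v in row]
        matrix.append(row)
    return kmers, matrix


if __name__ == "__main__":
    toy_reads = ["ACGTACGTAC", "ACG", "A" * 30]
    kept = length_filter(toy_reads, min_len=5, max_len=20)
    kmers, matrix = kmer_frequency_matrix(kept, k=2)
    print(len(kept), len(kmers), len(matrix[0]))
```

With k=5 each read becomes a vector of 4^5 = 1024 frequencies, and with k=6 a vector of 4096. A matrix of this shape is the kind of input that the UMAP projection and HDBSCAN clustering in step 3 would operate on.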

A pipeline that creates consensus sequences from Nanopore reads.

You might also like...

Pipeline and Dataset helpers for complex algorithm evaluation.

tpcp - Tiny Pipelines for Complex Problems A generic way to build object-oriented datasets and algorithm pipelines and tools to evaluate them pip inst

Created covid data pipeline using PySpark and MySQL that collected data stream from API and do some processing and store it into MYSQL database.

Created covid data pipeline using PySpark and MySQL that collected data stream from API and do some processing and store it into MYSQL database.

Full automated data pipeline using docker images

Create postgres tables from CSV files This first section is only relate to creating tables from CSV files using postgres container alone. Just one of

Demonstrate a Dataflow pipeline that saves data from an API into BigQuery table

Overview dataflow-mvp provides a basic example pipeline that pulls data from an API and writes it to a BigQuery table using GCP's Dataflow (i.e., Apac

A real-time financial data streaming pipeline and visualization platform using Apache Kafka, Cassandra, and Bokeh.

Realtime Financial Market Data Visualization and Analysis Introduction This repo shows my project about real-time stock data pipeline. All the code is

ETL pipeline on movie data using Python and postgreSQL

Movies-ETL ETL pipeline on movie data using Python and postgreSQL Overview This project consisted on a automated Extraction, Transformation and Load p

X-news - Pipeline data use scrapy, kafka, spark streaming, spark ML and elasticsearch, Kibana

X-news - Pipeline data use scrapy, kafka, spark streaming, spark ML and elasticsearch, Kibana

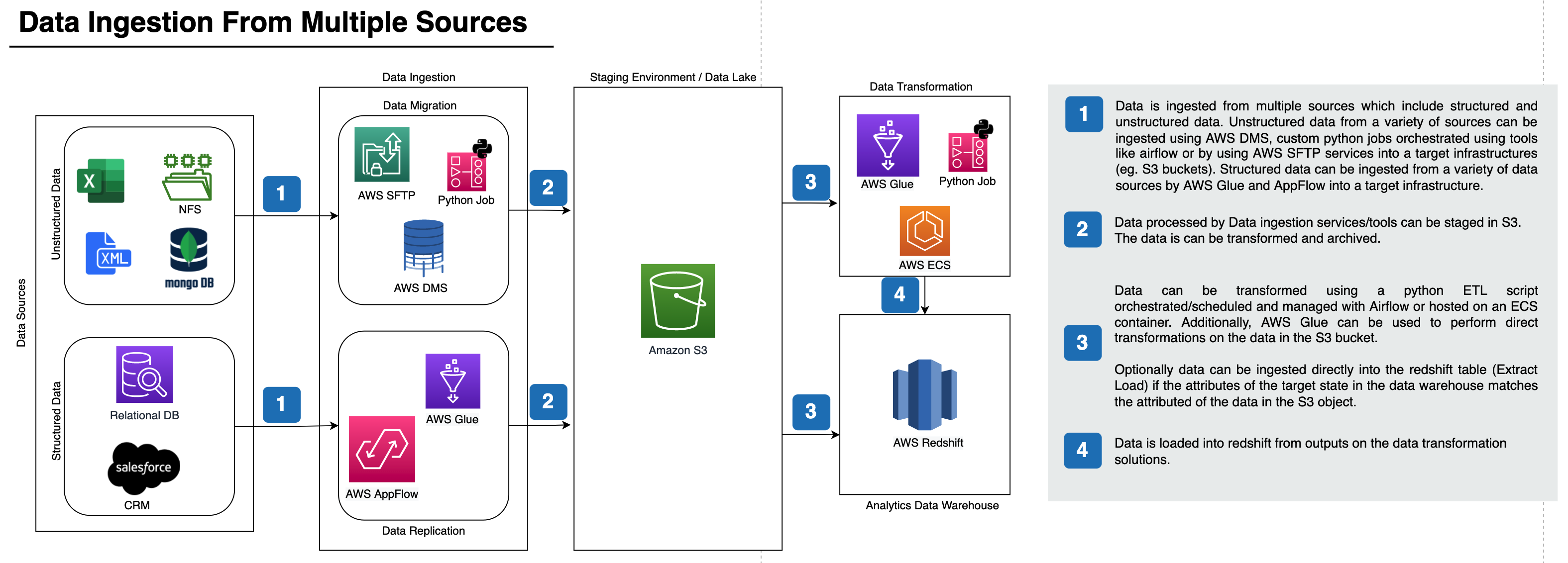

PrimaryBid - Transform application Lifecycle Data and Design and ETL pipeline architecture for ingesting data from multiple sources to redshift

Transform application Lifecycle Data and Design and ETL pipeline architecture for ingesting data from multiple sources to redshift This project is composed of two parts: Part1 and Part2

An ETL Pipeline of a large data set from a fictitious music streaming service named Sparkify.

An ETL Pipeline of a large data set from a fictitious music streaming service named Sparkify. The ETL process flows from AWS's S3 into staging tables in AWS Redshift.

SNV calling pipeline developed explicitly to process individual or trio vcf files obtained from Illumina based pipeline (grch37/grch38).

SNV Pipeline SNV calling pipeline developed explicitly to process individual or trio vcf files obtained from Illumina based pipeline (grch37/grch38).

Two phase pipeline + StreamlitTwo phase pipeline + Streamlit

Two phase pipeline + Streamlit This is an example project that demonstrates how to create a pipeline that consists of two phases of execution. In betw

Using Data Science with Machine Learning techniques (ETL pipeline and ML pipeline) to classify received messages after disasters.

Using Data Science with Machine Learning techniques (ETL pipeline and ML pipeline) to classify received messages after disasters.

Udacity-api-reporting-pipeline - Udacity api reporting pipeline

udacity-api-reporting-pipeline In this exercise, you'll use portions of each of

peptides.py is a pure-Python package to compute common descriptors for protein sequences

peptides.py Physicochemical properties and indices for amino-acid sequences. ??️ Overview peptides.py is a pure-Python package to compute common descr

Physicochemical properties and indices for amino-acid sequences (ported from R).

peptides.py Physicochemical properties and indices for amino-acid sequences. ??️ Overview peptides.py is a pure-Python package to compute common descr

This creates a ohlc timeseries from downloaded CSV files from NSE India website and makes a SQLite database for your research.

NSE-timeseries-form-CSV-file-creator-and-SQL-appender- This creates a ohlc timeseries from downloaded CSV files from National Stock Exchange India (NS

Educational project on how to build an ETL (Extract, Transform, Load) data pipeline, orchestrated with Airflow.

ETL Pipeline with Airflow, Spark, s3, MongoDB and Amazon Redshift

pipeline for migrating lichess data into postgresql

How Long Does It Take Ordinary People To "Get Good" At Chess? TL;DR: According to 5.5 years of data from 2.3 million players and 450 million games, mo

In this project, ETL pipeline is build on data warehouse hosted on AWS Redshift.

ETL Pipeline for AWS Project Description In this project, ETL pipeline is build on data warehouse hosted on AWS Redshift. The data is loaded from S3 t