This repository is forked from Real-Time-Voice-Cloning, which only supports English.

English | 中文

Features

DEMO VIDEO

Quick Start

1. Install Requirements

Follow the original repo to check that your environment is ready. **Python 3.7 or higher** is needed to run the toolbox.

- Install PyTorch.

- Install ffmpeg.

- Run `pip install -r requirements.txt` to install the remaining necessary packages.
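A quick way to confirm the prerequisites above is a small check script. This is a minimal sketch and not part of the repo: the name `check_environment` is hypothetical, and it assumes PyTorch is importable as `torch` and that ffmpeg is expected as an executable on your PATH.

```python
import importlib.util
import shutil
import sys


def check_environment(min_python=(3, 7)):
    """Return a dict saying whether each prerequisite looks available."""
    return {
        # The toolbox needs Python 3.7 or higher.
        "python": sys.version_info[:2] >= tuple(min_python),
        # Assumption: PyTorch is installed as the importable `torch` package.
        "torch": importlib.util.find_spec("torch") is not None,
        # Assumption: ffmpeg is looked up as an executable on PATH.
        "ffmpeg": shutil.which("ffmpeg") is not None,
    }


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name:8s} {'OK' if ok else 'MISSING'}")
```

Anything reported as MISSING should be installed before moving on to training.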

2. Train synthesizer with aidatatang_200zh

- Download the aidatatang_200zh dataset and unzip it; make sure you can access all .wav files in the train folder.

- Preprocess the audio and the mel spectrograms:

python synthesizer_preprocess_audio.py

- Preprocess the embeddings:

python synthesizer_preprocess_embeds.py/SV2TTS/synthesizer

- Train the synthesizer:

python synthesizer_train.py mandarin/SV2TTS/synthesizer
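Before running the preprocessing commands, it can help to confirm that the unzipped .wav files are really reachable under the train folder. The sketch below is a hypothetical helper (`find_wavs` is not part of the repo); pass it the path to your own train folder, since the exact location depends on where you unzipped aidatatang_200zh.

```python
import sys
from pathlib import Path


def find_wavs(train_dir):
    """Return a sorted list of .wav files found anywhere under train_dir."""
    root = Path(train_dir)
    if not root.is_dir():
        raise FileNotFoundError(f"train folder not found: {root}")
    # Compare the suffix case-insensitively so .WAV files are counted too.
    return sorted(
        p for p in root.rglob("*") if p.is_file() and p.suffix.lower() == ".wav"
    )


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python find_wavs.py <path to your train folder>
    wavs = find_wavs(sys.argv[1])
    print(f"found {len(wavs)} .wav files under {sys.argv[1]}")
```

A count of 0 suggests the audio is not where you expect it, for example still packed inside archives.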

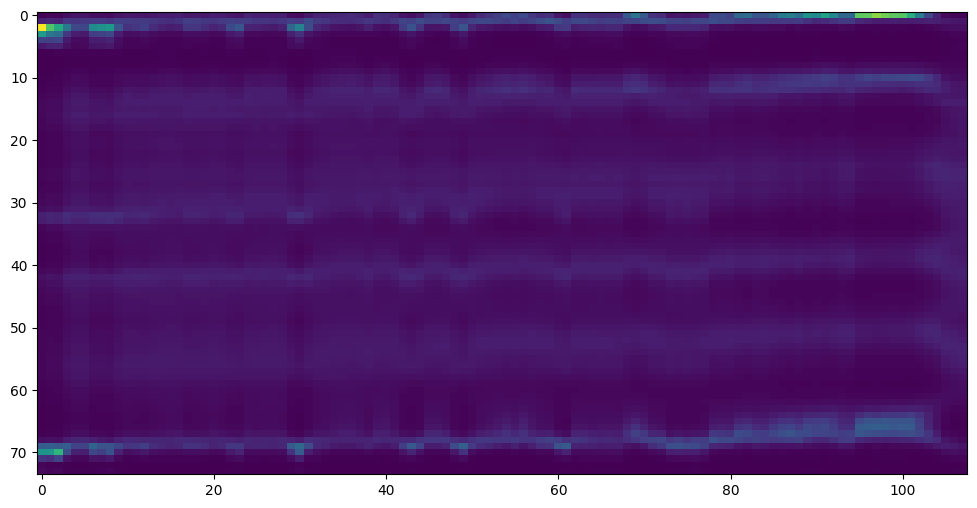

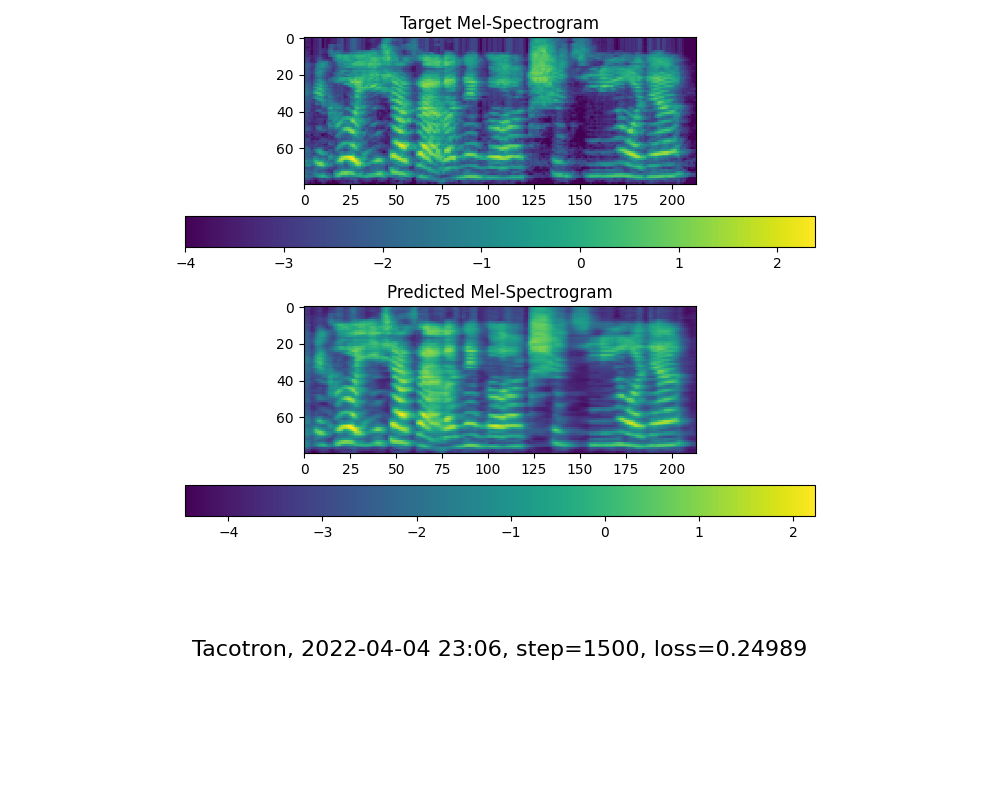

Go to the next step once the attention line shows up and the loss meets your needs; you can check both in the training folder synthesizer/saved_models/.

FYI, in my run the attention line appeared after 18k steps, and the loss dropped below 0.4 after 50k steps.
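To find where the loss first drops below a target such as 0.4, you can scan the (step, loss) pairs you recorded during training. This is a hypothetical helper, not part of the toolbox; the training log format is not described here, so collecting the pairs is left to you.

```python
def first_step_below(history, threshold=0.4):
    """Return the earliest step whose loss is below threshold, or None.

    history is an iterable of (step, loss) pairs; it is sorted by step first,
    so the pairs do not need to arrive in order.
    """
    for step, loss in sorted(history):
        if loss < threshold:
            return step
    return None
```

Sorting by step first keeps the result correct even if the pairs were gathered from several log files out of order.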

3. Launch the Toolbox

You can then try the toolbox:

python demo_toolbox.py -d

or

python demo_toolbox.py

TODO

- Add demo video

- Add support for more datasets

- Upload pretrained model

- 🙏 More contributions are welcome