The easiest way to build Machine Learning APIs

BentoML makes moving trained ML models to production easy:

- Package models trained with any ML framework and reproduce them for model serving in production

- Deploy anywhere for online API serving or offline batch serving

- High-Performance API model server with adaptive micro-batching support

- Central hub for managing models and deployment process via Web UI and APIs

- Modular and flexible design making it adaptable to your infrastructure

BentoML is a framework for serving, managing, and deploying machine learning models. It aims to bridge the gap between Data Science and DevOps, and to enable teams to deliver prediction services in a fast, repeatable, and scalable way.

Documentation

BentoML documentation: https://docs.bentoml.org/

- Quickstart Guide, try it out on Google Colab

- Core Concepts

- API References

- FAQ

- Example projects: bentoml/Gallery

Key Features

Online serving with API model server:

- Containerized model server for production deployment with Docker, Kubernetes, OpenShift, AWS ECS, Azure, GCP GKE, etc.

- Adaptive micro-batching for optimal online serving performance

- Discover and package all dependencies automatically, including PyPI, conda packages and local python modules

- Support multiple ML frameworks including PyTorch, TensorFlow, Scikit-Learn, XGBoost, and many more

- Serve compositions of multiple models

- Serve multiple endpoints in one model server

- Serve any Python code along with trained models

- Automatically generate HTTP API spec in Swagger/OpenAPI format

- Prediction logging and feedback logging endpoint

- Health check endpoint and Prometheus /metrics endpoint for monitoring

- Load and replay historical prediction request logs (roadmap)

- Model serving via gRPC endpoint (roadmap)

Advanced workflow for model serving and deployment:

- Central repository for managing all your team's packaged models via Web UI and API

- Launch inference runs from the CLI or Python, which enables CI/CD testing, programmatic access, and offline batch inference jobs

- One-click deployment to cloud platforms including AWS Lambda, AWS SageMaker, and Azure Functions

- Distributed batch or streaming jobs with Apache Spark (improved Spark support is on the roadmap)

- Advanced model deployment workflows for Kubernetes, including auto-scaling, scale-to-zero, A/B testing, canary deployment, and multi-armed-bandit (roadmap)

- Deep integration with ML experimentation platforms including MLflow and Kubeflow (roadmap)

ML Frameworks

- Scikit-Learn - Docs | Examples

- PyTorch - Docs | Examples

- TensorFlow 2 - Docs | Examples

- TensorFlow Keras - Docs | Examples

- XGBoost - Docs | Examples

- LightGBM - Docs | Examples

- FastText - Docs | Examples

- FastAI - Docs | Examples

- H2O - Docs | Examples

- ONNX - Docs | Examples

- spaCy - Docs | Examples

- Statsmodels - Docs | Examples

- CoreML - Docs

- Transformers - Docs

- Gluon - Docs

- Detectron - Docs

Deployment Options

Be sure to check out the deployment overview doc to understand which deployment option best suits your use case.

- One-click deployment with BentoML

- Deploy with open-source platforms

- Deploy directly to cloud services

Introduction

BentoML provides abstractions for creating a prediction service that is bundled with trained models. Users define inference APIs and their serving logic in Python code and specify the expected input/output data types:

    import pandas as pd

    from bentoml import env, artifacts, api, BentoService
    from bentoml.adapters import DataframeInput
    from bentoml.frameworks.sklearn import SklearnModelArtifact

    from my_library import preprocess

    @env(infer_pip_packages=True)
    @artifacts([SklearnModelArtifact('my_model')])
    class MyPredictionService(BentoService):
        """
        A simple prediction service exposing a Scikit-learn model
        """

        @api(input=DataframeInput(orient="records"), batch=True)
        def predict(self, df: pd.DataFrame):
            """
            An inference API named `predict` with Dataframe input adapter, which defines
            how HTTP requests or CSV files get converted to a pandas Dataframe object as the
            inference API function input
            """
            model_input = preprocess(df)
            return self.artifacts.my_model.predict(model_input)
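
The `batch=True` flag above means `predict` receives a whole batch of inputs and should return one result per input row. The sketch below illustrates that contract in plain Python, without BentoML: a hypothetical `run_batched` helper merges several small requests into one batch, calls a batch function once, and splits the results back per request. It only illustrates the idea behind batching; it is not BentoML's implementation, and every name in it is made up for this example.

```python
from typing import Callable, List, Sequence


def run_batched(
    requests: Sequence[Sequence[float]],
    batch_fn: Callable[[List[float]], List[float]],
) -> List[List[float]]:
    """Merge several requests into one batch call, then split the results."""
    # Flatten all request rows into one batch, remembering each request's size.
    merged: List[float] = [row for req_rows in requests for row in req_rows]
    sizes = [len(req_rows) for req_rows in requests]

    # One call for the whole batch; it must return one result per input row.
    results = batch_fn(merged)
    if len(results) != len(merged):
        raise ValueError("batch function must return one result per input row")

    # Hand each request back exactly the slice that belongs to it.
    split: List[List[float]] = []
    start = 0
    for size in sizes:
        split.append(results[start:start + size])
        start += size
    return split


def double_all(rows: List[float]) -> List[float]:
    """Hypothetical stand-in for a model's batch predict function."""
    return [2 * row for row in rows]


per_request = run_batched([[1.0, 2.0], [3.0], [4.0, 5.0, 6.0]], double_all)
print(per_request)  # [[2.0, 4.0], [6.0], [8.0, 10.0, 12.0]]
```

The length check is the important part of the contract: if a batch function drops or adds rows, results can no longer be matched back to the requests that produced them.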

At the end of your model training pipeline, import your BentoML prediction service class, pack it with your trained model, and persist the entire prediction service with a `save` call:

    from my_prediction_service import MyPredictionService

    svc = MyPredictionService()
    svc.pack('my_model', my_sklearn_model)
    svc.save()  # default saves to ~/bentoml/repository/MyPredictionService/{version}/

This saves all the code files, serialized models, and configs required to reproduce this prediction service for inference. BentoML automatically captures all the pip package dependencies and local Python code dependencies, and versions them together with the other code and model files in one place.

With the saved prediction service, you can start a local API server hosting it:

    bentoml serve MyPredictionService:latest

And create a docker container image for this API model server with one command:

    bentoml containerize MyPredictionService:latest -t my_prediction_service:latest
    docker run -p 5000:5000 my_prediction_service:latest
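
Once the server is running, a client can call the inference API over HTTP. The following is a minimal sketch using only the Python standard library. It assumes the server is reachable on `localhost:5000` (the port mapped in the `docker run` command) and that the `predict` API is exposed at `/predict`; the feature names `feature_a` and `feature_b` are hypothetical and should be replaced with the columns your model expects.

```python
import json
from urllib import request

# Assumptions for illustration: host, port and the /predict path are not
# guaranteed by this README; adjust them to match your running server.
PREDICT_URL = "http://localhost:5000/predict"


def build_predict_request(records, url=PREDICT_URL):
    """Build a JSON POST request carrying a list of row records."""
    body = json.dumps(records).encode("utf-8")
    return request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(req, timeout=10.0):
    """Send the request and return the decoded JSON response."""
    with request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


# One row per record, matching the orient="records" layout (hypothetical columns).
req = build_predict_request([{"feature_a": 1.0, "feature_b": 2.0}])
# With the server running: predictions = send(req)
```

Building the request separately from sending it keeps the payload easy to inspect or log before any network call is made.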

The resulting container image has all the required dependencies installed. Besides the model inference API, the containerized BentoML model server also comes with instrumentation, metrics, a health check endpoint, prediction logging, and tracing, which make it easy for your DevOps team to integrate it and deploy it in production.
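
A deployment script or orchestrator would typically poll the health check endpoint before routing traffic to the container. The sketch below shows one way to do that with the standard library. The `/healthz` path is an assumption, so check the paths your server version actually exposes; the `opener` parameter is a hypothetical hook that lets the function be exercised without a live server.

```python
from urllib import request


def is_healthy(base_url, path="/healthz", opener=request.urlopen, timeout=5.0):
    """Return True if a GET on base_url + path answers with HTTP 200."""
    url = base_url.rstrip("/") + path  # path is an assumed default
    try:
        with opener(url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # urllib's URLError and HTTPError are OSError subclasses, so this
        # covers refused connections, timeouts and non-2xx responses.
        return False


# Example, with a server listening locally:
# print(is_healthy("http://localhost:5000"))
```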

If you are on a small team without DevOps support, BentoML also provides a one-click deployment option, which deploys the model server API to cloud platforms with minimal setup.

Read the Quickstart Guide to learn more about the basic functionalities of BentoML. You can also try it out here on Google Colab.

Why BentoML

Moving trained Machine Learning models to serving applications in production is hard. It is a sequential process across data science, engineering, and DevOps teams: after the data science team trains a model, they hand it over to the engineering team, which refines and optimizes the code and creates an API before DevOps can deploy it.

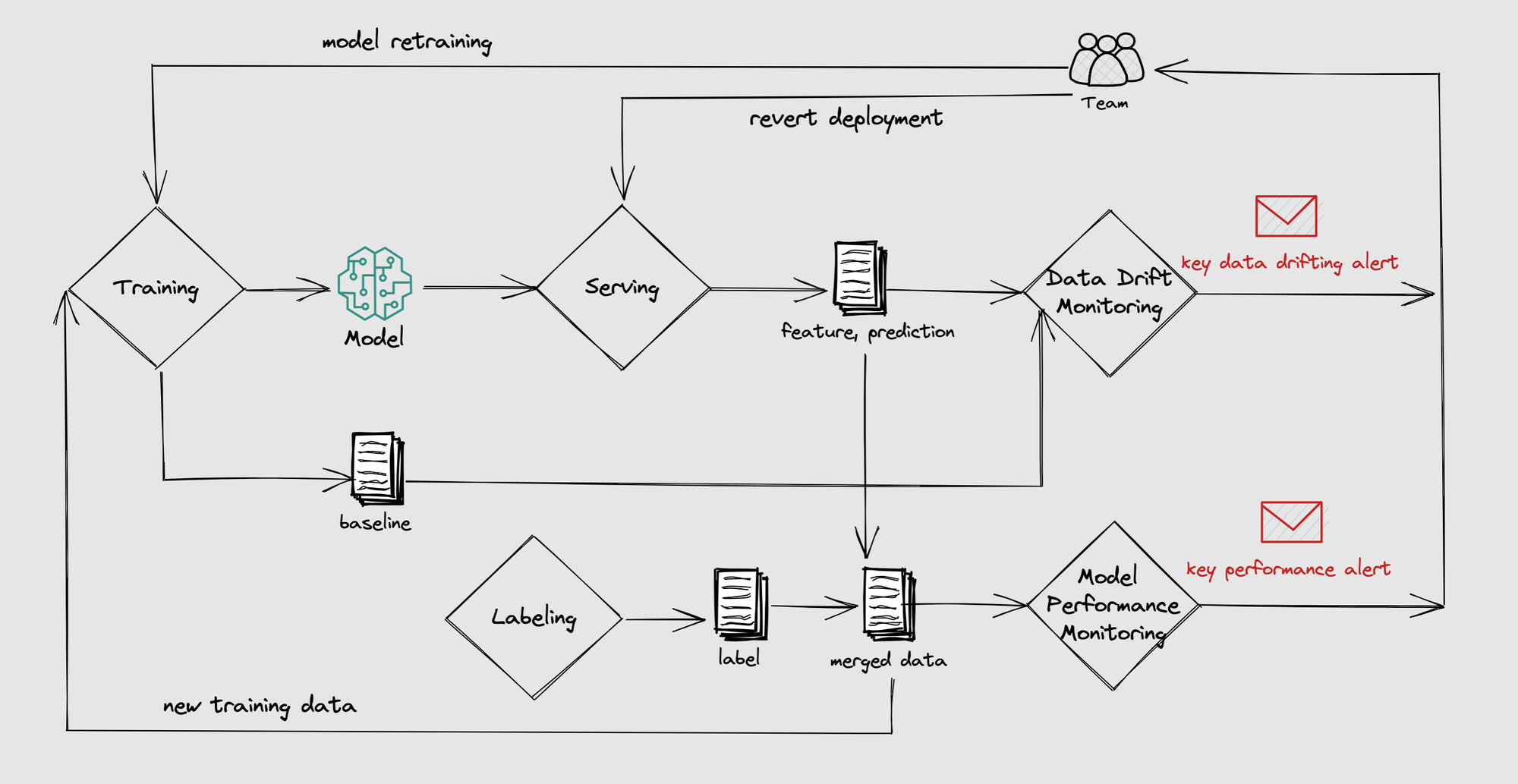

Most importantly, Data Science teams want to continuously repeat this process, monitor the models deployed in production, and ship new models quickly. It often takes months for an engineering team to build a model serving and deployment solution that allows data science teams to ship new models in a repeatable and reliable way.

BentoML is a framework designed to solve this problem. It provides high-level APIs that let Data Science teams create prediction services while abstracting away DevOps infrastructure needs and performance optimizations. This allows DevOps teams to work side by side with data science teams to deploy and operate models packaged in the BentoML format in production.

Check out the Frequently Asked Questions page to see how BentoML compares to TensorFlow Serving, Clipper, AWS SageMaker, MLflow, etc.

Contributing

Have questions or feedback? Post a new GitHub issue or join the discussion in our Slack channel.

Want to help build BentoML? Check out our contributing guide and the development guide.

Releases

BentoML is under active development and is evolving rapidly. It is currently a Beta release: APIs may change in future releases, and major features are still being worked on.

Read more about the latest updates on the releases page.

Usage Tracking

By default, BentoML collects anonymous usage data using Amplitude. It only collects the BentoML library's own actions and parameters; no user or model data is collected. Here is the code that does it.

This helps the BentoML team understand how the community is using this tool and what to build next. You can easily opt out of usage tracking with either of the following:

    # From terminal:
    bentoml config set usage_tracking=false

    # From python:
    import bentoml
    bentoml.config().set('core', 'usage_tracking', 'False')