DCGAN in Tensorflow

TensorFlow implementation of Deep Convolutional Generative Adversarial Networks (DCGAN), a stabilized variant of Generative Adversarial Networks. The referenced Torch code can be found here.

- Brandon Amos wrote an excellent blog post and image completion code based on this repo.

- To keep the D (discriminator) network from converging too quickly, the G (generator) network is updated twice for each D network update, which differs from the original paper.
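The two-to-one update schedule described in the note above can be sketched as follows. This is a minimal, framework-free illustration and not the repository's training code: `train_epoch`, `d_step`, and `g_step` are hypothetical names standing in for whatever runs one optimizer step on each network.

```python
def train_epoch(batches, d_step, g_step, g_updates_per_d=2):
    """Run one epoch, updating G `g_updates_per_d` times per D update.

    `d_step` and `g_step` are callables that each perform one optimizer
    step on the given batch (hypothetical placeholders in this sketch).
    """
    for batch in batches:
        # One discriminator update on the current batch.
        d_step(batch)
        # Several generator updates so that D does not pull too far ahead.
        for _ in range(g_updates_per_d):
            g_step(batch)


# Record the order of updates instead of training real networks.
log = []
train_epoch(
    batches=range(2),
    d_step=lambda b: log.append("D%d" % b),
    g_step=lambda b: log.append("G%d" % b),
)
print(log)  # ['D0', 'G0', 'G0', 'D1', 'G1', 'G1']
```

Exposing the ratio as a parameter makes it easy to compare against the one-to-one schedule by passing `g_updates_per_d=1`.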

Online Demo

Prerequisites

- Python 2.7 or Python 3.3+

- Tensorflow 0.12.1

- SciPy

- pillow

- tqdm

- (Optional) moviepy (for visualization)

- (Optional) Align&Cropped Images.zip : Large-scale CelebFaces Dataset

Usage

First, download the datasets with:

$ python download.py mnist celebA

To train a model on a downloaded dataset:

$ python main.py --dataset mnist --input_height=28 --output_height=28 --train

$ python main.py --dataset celebA --input_height=108 --train --crop

To test with an existing model:

$ python main.py --dataset mnist --input_height=28 --output_height=28

$ python main.py --dataset celebA --input_height=108 --crop
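The `--crop` flag in the celebA commands above appears to request a central crop of each input image. A minimal pure-Python sketch of what a central crop computes is shown below. `center_crop` is a hypothetical helper written for illustration, not the repository's function; the 108-pixel size mirrors `--input_height=108`, and the 218x178 input size is an assumption for the example. Any resizing applied after cropping is not shown.

```python
def center_crop(image, crop_h, crop_w):
    """Return the central crop_h x crop_w window of a row-major image.

    `image` is a list of rows, each row a list of pixels.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    if crop_h > height or crop_w > width:
        raise ValueError("crop size exceeds image size")
    # Offsets that centre the window; when the margin is odd, the extra
    # pixel is left on the bottom/right side.
    top = (height - crop_h) // 2
    left = (width - crop_w) // 2
    return [row[left:left + crop_w] for row in image[top:top + crop_h]]


# Each "pixel" stores its own (row, column) so the crop offsets are visible.
image = [[(r, c) for c in range(178)] for r in range(218)]
patch = center_crop(image, 108, 108)
print(len(patch), len(patch[0]))  # 108 108
print(patch[0][0])                # (55, 35)
```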

Alternatively, you can train on your own dataset (without the central crop):

$ mkdir data/DATASET_NAME

... add images to data/DATASET_NAME ...

$ python main.py --dataset DATASET_NAME --train

$ python main.py --dataset DATASET_NAME

$ # example

$ python main.py --dataset=eyes --input_fname_pattern="*_cropped.png" --train

If your dataset is located in a different root directory:

$ python main.py --dataset DATASET_NAME --data_dir DATASET_ROOT_DIR --train

$ python main.py --dataset DATASET_NAME --data_dir DATASET_ROOT_DIR

$ # example

$ python main.py --dataset=eyes --data_dir ../datasets/ --input_fname_pattern="*_cropped.png" --train
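The `--data_dir`, `--dataset`, and `--input_fname_pattern` flags in the commands above together determine which image files are used. One plausible way such flags could be resolved to a file list is sketched below. `list_dataset_files` is a hypothetical helper, and the join-then-glob layout is an assumption rather than a description of how `main.py` works.

```python
import glob
import os
import tempfile


def list_dataset_files(data_dir, dataset, fname_pattern):
    """Return sorted paths under data_dir/dataset matching a shell-style pattern."""
    pattern = os.path.join(data_dir, dataset, fname_pattern)
    return sorted(glob.glob(pattern))


# Build a throwaway dataset directory to show which files the pattern selects.
root = tempfile.mkdtemp()
os.makedirs(os.path.join(root, "eyes"))
for name in ("a_cropped.png", "b_cropped.png", "b_raw.png"):
    open(os.path.join(root, "eyes", name), "w").close()

files = list_dataset_files(root, "eyes", "*_cropped.png")
print([os.path.basename(f) for f in files])  # ['a_cropped.png', 'b_cropped.png']
```

Quoting the pattern on the command line, as in the examples above, keeps the shell from expanding `*` before the program sees it.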

Results

celebA

After the 6th epoch:

After the 10th epoch:

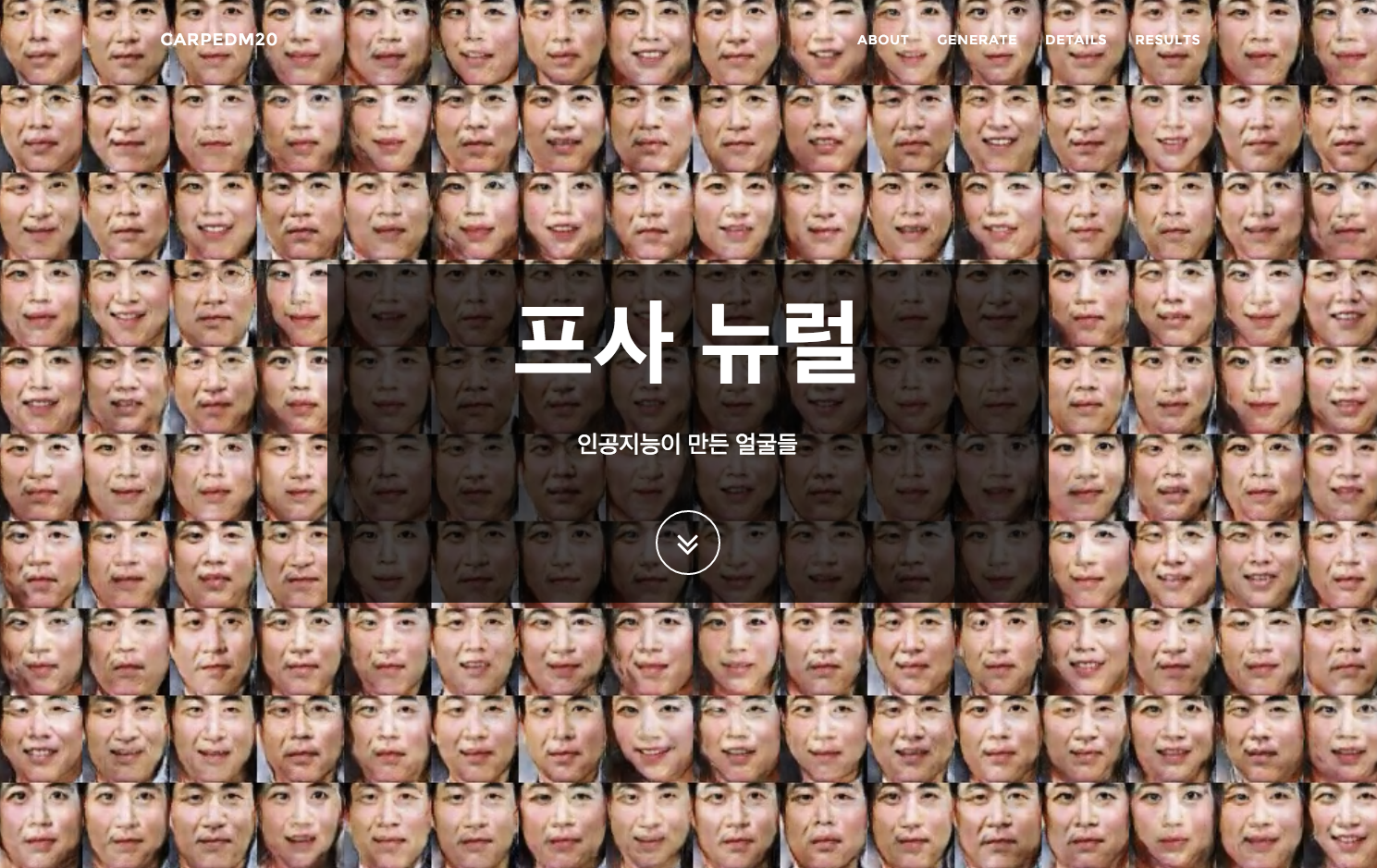

Asian face dataset

MNIST

The MNIST code was written by @PhoenixDai.

More results can be found here and here.

Training details

Discriminator and generator losses during training (on a custom dataset, not celebA).

Histograms of the discriminator's outputs on real and fake samples (on a custom dataset, not celebA).
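As an aid to reading discriminator and generator loss curves, the sketch below computes the standard GAN cross-entropy losses from discriminator output probabilities. It is an illustration under assumptions: `bce` and `gan_losses` are hypothetical helpers, the generator term is the common non-saturating form, and nothing here is taken from the repository's code.

```python
import math


def bce(prob, target):
    """Binary cross-entropy for one probability and a 0/1 target."""
    eps = 1e-12  # guards against log(0)
    prob = min(max(prob, eps), 1.0 - eps)
    return -(target * math.log(prob) + (1 - target) * math.log(1.0 - prob))


def gan_losses(d_real, d_fake):
    """Mean losses given discriminator outputs on real and generated samples."""
    d_loss_real = sum(bce(p, 1) for p in d_real) / len(d_real)
    d_loss_fake = sum(bce(p, 0) for p in d_fake) / len(d_fake)
    # Non-saturating generator loss: G wants D to label fakes as real.
    g_loss = sum(bce(p, 1) for p in d_fake) / len(d_fake)
    return d_loss_real + d_loss_fake, g_loss


# A discriminator that cannot tell real from fake outputs 0.5 everywhere.
d_loss, g_loss = gan_losses(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])
print(d_loss, g_loss)  # 2*log(2) and log(2), up to floating-point rounding
```

Under these definitions, a discriminator loss near 2·log 2 (about 1.39) together with a generator loss near log 2 (about 0.69) corresponds to a discriminator that is guessing.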

Related works

Author

Taehoon Kim / @carpedm20