================================================================================

Convolutional Two-Stream Network Fusion for Video Action Recognition

This repository contains the code for our CVPR 2016 paper:

Christoph Feichtenhofer, Axel Pinz, Andrew Zisserman

"Convolutional Two-Stream Network Fusion for Video Action Recognition"

in Proc. CVPR 2016

If you find the code useful for your research, please cite our paper:

@inproceedings{feichtenhofer2016convolutional,
  title={Convolutional Two-Stream Network Fusion for Video Action Recognition},
  author={Feichtenhofer, Christoph and Pinz, Axel and Zisserman, Andrew},
  booktitle={Conference on Computer Vision and Pattern Recognition (CVPR)},
  year={2016}
}

Requirements

The code was tested on Ubuntu 14.04 and Windows 10 using MATLAB R2015b and NVIDIA Titan X or Z GPUs.

If you have questions regarding the implementation, please contact:

Christoph Feichtenhofer

================================================================================

Setup

- Download the code:

  git clone --recursive https://github.com/feichtenhofer/twostreamfusion

- Compile the code by running compile.m.

  - This will also compile a modified (and older) version of the MatConvNet toolbox. In case of any issues, please follow the installation instructions on the MatConvNet homepage.

- Edit the file cnn_setup_environment.m to adjust the models and data paths.

- Download the pretrained model files and the datasets linked below, and unpack them into your models/data directory.

  - Optionally, you can pretrain your own two-stream models by running

    - cnn_ucf101_spatial(); to train the appearance network stream.
    - cnn_ucf101_temporal(); to train the optical flow network stream.

- Run cnn_ucf101_fusion(); this will use the downloaded models and demonstrate training of our final architecture on UCF101/HMDB51.

  - In case you would like to train on the CPU, clear the variable opts.train.gpus.
  - In case you encounter memory issues on your GPU, consider decreasing the cudnnWorkspaceLimit (512 MB is the default).
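The CPU and GPU-memory adjustments mentioned in the setup steps might look roughly like the fragment below. This is a minimal sketch, not the repository's actual code: it assumes that opts.train.gpus holds a list of GPU indices (with an empty list meaning CPU training, as in MatConvNet's training code), that cudnnWorkspaceLimit lives under opts.train, and that it is given in bytes. The 256 MB value is purely illustrative. Check the training script you are running for where these options are actually defined.

```matlab
% Sketch only: the field placement and values are assumptions,
% not taken from the repository's scripts.

% An empty GPU list is assumed to select CPU training.
opts.train.gpus = [];

% Assumed to be a byte count; 256 MB is an illustrative value
% that halves the 512 MB default mentioned in the setup notes.
opts.train.cudnnWorkspaceLimit = 256 * 1024 * 1024;
```

If the memory issues persist after lowering the workspace limit, reducing it further trades convolution speed for a smaller memory footprint.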

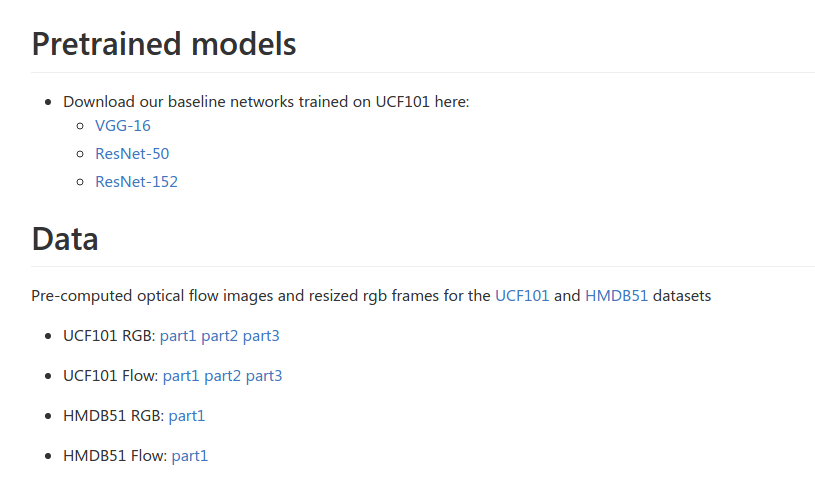

Pretrained models

- Download our baseline networks trained on UCF101 here:

Data

Pre-computed optical flow images and resized RGB frames for the UCF101 and HMDB51 datasets

Use it on your own dataset

- Our optical flow extraction tool provides OpenCV wrappers for optical flow extraction on a GPU.