Calling

chart.save('something.png')

gives the error below:

TypeError: Object of type 'Polygon' is not JSON serializable

What does work:

- Calling .to_json() raises no error and produces the JSON output fine.

combined_chart.to_json()

- In JupyterLab, the (...) menu at the top right of a plot has an option to save to a PNG, and that works fine.

What does not work:

combined_chart.save('something.png')

Here is a reproducible example:

import altair as alt

import geopandas as gpd

import gpdvega

import pandas as pd

from shapely.geometry import Point

from gpdvega import gpd_to_values

alt.data_transformers.register(
    'gpd_to_values',
    lambda data: alt.pipe(data, gpd_to_values)
)

alt.data_transformers.enable('gpd_to_values')

world = gpd.read_file(gpd.datasets.get_path('naturalearth_lowres'))

# A GeoDataFrame can be passed like a regular pd.DataFrame

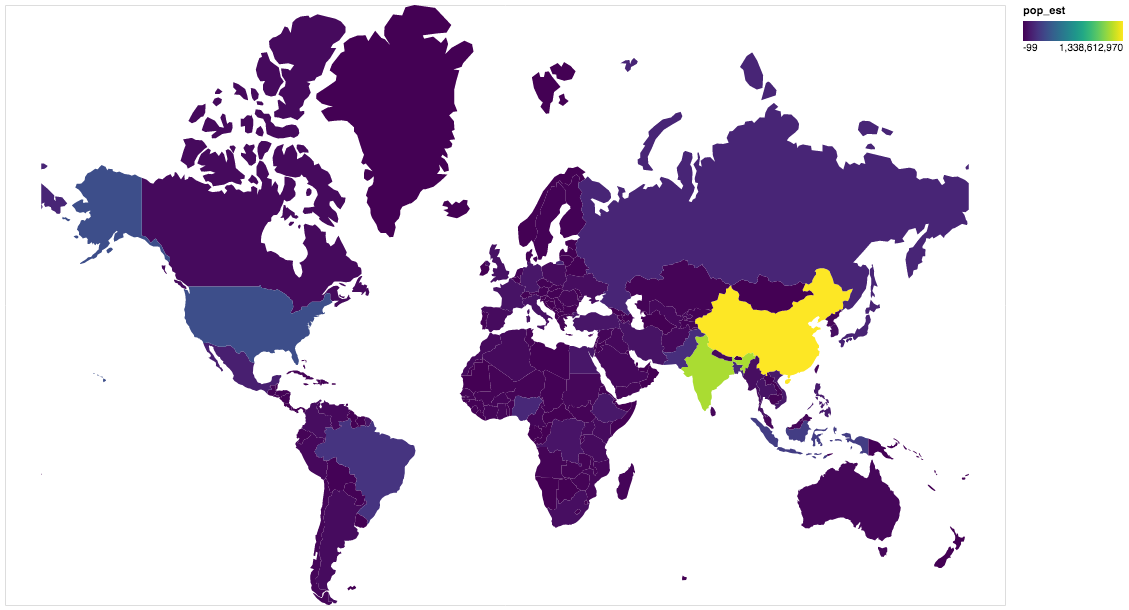

chart_one = alt.Chart(world[world.continent != 'Antarctica']).mark_geoshape(
).project(
).encode(
    color='pop_est',  # shorthand infers types as for a regular pd.DataFrame
    tooltip='id:Q'  # GeoDataFrame.index is accessible as id
).properties(
    width=500,
    height=300
)

# generate some points to push us over the max rows

some = [[-70.05179, 25.10815] for x in range(6000)]

some = pd.DataFrame(some, columns=['x', 'y'])

some['Coordinates'] = list(zip(some.x, some.y))

some['Coordinates'] = some['Coordinates'].apply(Point)

gdfo = gpd.GeoDataFrame(some, geometry='Coordinates')

chart_two = alt.Chart(gdfo).mark_point(color='red').encode(
    longitude='x:Q',
    latitude='y:Q'
)

combined_chart = chart_one + chart_two

To get the error, now run:

combined_chart.save('something.png')

I'm looking for ideas: the chart serializes to JSON fine with .to_json(), but saving to a PNG fails.

Also of note: in JupyterLab, the (...) menu at the top right of the plot can save to a PNG, and that works fine.
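
One possible lead, judging from the first frame of the traceback (api.py lines 500-502): save() appears to re-enable the 'default' data transformer while it runs, which would bypass the registered gpd_to_values step that .to_json() goes through. The sketch below is only a toy model of that reading. The Geometry class, the transformers registry, and the to_json_like / save_like helpers are all hypothetical stand-ins, not Altair or gpdvega code, and the 'default' entry is simplified to a pass-through.

```python
from contextlib import contextmanager


class Geometry:
    """Hypothetical stand-in for a shapely geometry object."""


def convert_geometry(rows):
    # Stand-in for gpd_to_values: swap geometry objects for plain data.
    return [
        {key: ("<geojson>" if isinstance(value, Geometry) else value)
         for key, value in row.items()}
        for row in rows
    ]


# Toy registry, loosely modelled on the idea of alt.data_transformers.
transformers = {
    "default": lambda rows: rows,       # simplified: leaves geometry as-is
    "gpd_to_values": convert_geometry,  # converts geometry before export
}
active = "gpd_to_values"


@contextmanager
def enable(name):
    """Temporarily switch the active transformer, then restore it."""
    global active
    previous, active = active, name
    try:
        yield
    finally:
        active = previous


def to_json_like(rows):
    # Uses whichever transformer the user enabled.
    return transformers[active](rows)


def save_like(rows):
    # Mirrors the `with data_transformers.enable('default', ...)` frame.
    with enable("default"):
        return transformers[active](rows)


rows = [{"id": 0, "geometry": Geometry()}]
via_to_json = to_json_like(rows)  # geometry converted to plain data
via_save = save_like(rows)        # geometry object survives untouched
```

If that reading is right, the override_data_transformer parameter visible in the save() signature in the traceback may be worth experimenting with, though that is untested here.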

Here is the full error:

---------------------------------------------------------------------------

TypeError Traceback (most recent call last)

<ipython-input-5-fe96c4e38374> in <module>()

----> 1 combined_chart.save('something.png')

~\AppData\Local\Continuum\anaconda3\envs\data_analysis\lib\site-packages\altair\vegalite\v2\api.py in save(self, fp, format, override_data_transformer, scale_factor, vegalite_version, vega_version, vegaembed_version, **kwargs)

500 if override_data_transformer:

501 with data_transformers.enable('default', max_rows=None):

--> 502 result = save(**kwds)

503 else:

504 result = save(**kwds)

~\AppData\Local\Continuum\anaconda3\envs\data_analysis\lib\site-packages\altair\utils\save.py in save(chart, fp, vega_version, vegaembed_version, format, mode, vegalite_version, embed_options, json_kwds, webdriver, scale_factor)

58 "['png', 'svg', 'html', 'json']")

59

---> 60 spec = chart.to_dict()

61

62 if mode is None:

~\AppData\Local\Continuum\anaconda3\envs\data_analysis\lib\site-packages\altair\vegalite\v2\api.py in to_dict(self, *args, **kwargs)

406

407 try:

--> 408 dct = super(TopLevelMixin, copy).to_dict(*args, **kwargs)

409 except jsonschema.ValidationError:

410 dct = None

~\AppData\Local\Continuum\anaconda3\envs\data_analysis\lib\site-packages\altair\utils\schemapi.py in to_dict(self, validate, ignore, context)

243 result = _todict(self._args[0])

244 elif not self._args:

--> 245 result = _todict({k: v for k, v in self._kwds.items()

246 if k not in ignore})

247 else:

~\AppData\Local\Continuum\anaconda3\envs\data_analysis\lib\site-packages\altair\utils\schemapi.py in _todict(val)

235 return [_todict(v) for v in val]

236 elif isinstance(val, dict):

--> 237 return {k: _todict(v) for k, v in val.items()

238 if v is not Undefined}

239 else:

~\AppData\Local\Continuum\anaconda3\envs\data_analysis\lib\site-packages\altair\utils\schemapi.py in <dictcomp>(.0)

236 elif isinstance(val, dict):

237 return {k: _todict(v) for k, v in val.items()

--> 238 if v is not Undefined}

239 else:

240 return val

~\AppData\Local\Continuum\anaconda3\envs\data_analysis\lib\site-packages\altair\utils\schemapi.py in _todict(val)

233 return val.to_dict(validate=sub_validate, context=context)

234 elif isinstance(val, (list, tuple)):

--> 235 return [_todict(v) for v in val]

236 elif isinstance(val, dict):

237 return {k: _todict(v) for k, v in val.items()

~\AppData\Local\Continuum\anaconda3\envs\data_analysis\lib\site-packages\altair\utils\schemapi.py in <listcomp>(.0)

233 return val.to_dict(validate=sub_validate, context=context)

234 elif isinstance(val, (list, tuple)):

--> 235 return [_todict(v) for v in val]

236 elif isinstance(val, dict):

237 return {k: _todict(v) for k, v in val.items()

~\AppData\Local\Continuum\anaconda3\envs\data_analysis\lib\site-packages\altair\utils\schemapi.py in _todict(val)

231 def _todict(val):

232 if isinstance(val, SchemaBase):

--> 233 return val.to_dict(validate=sub_validate, context=context)

234 elif isinstance(val, (list, tuple)):

235 return [_todict(v) for v in val]

~\AppData\Local\Continuum\anaconda3\envs\data_analysis\lib\site-packages\altair\vegalite\v2\api.py in to_dict(self, *args, **kwargs)

396 copy = self.copy()

397 original_data = getattr(copy, 'data', Undefined)

--> 398 copy.data = _prepare_data(original_data, context)

399

400 if original_data is not Undefined:

~\AppData\Local\Continuum\anaconda3\envs\data_analysis\lib\site-packages\altair\vegalite\v2\api.py in _prepare_data(data, context)

90 # consolidate inline data to top-level datasets

91 if data_transformers.consolidate_datasets:

---> 92 data = _consolidate_data(data, context)

93

94 # if data is still not a recognized type, then return

~\AppData\Local\Continuum\anaconda3\envs\data_analysis\lib\site-packages\altair\vegalite\v2\api.py in _consolidate_data(data, context)

57

58 if values is not Undefined:

---> 59 name = _dataset_name(values)

60 data = core.NamedData(name=name, **kwds)

61 context.setdefault('datasets', {})[name] = values

~\AppData\Local\Continuum\anaconda3\envs\data_analysis\lib\site-packages\altair\vegalite\v2\api.py in _dataset_name(values)

33 if isinstance(values, core.InlineDataset):

34 values = values.to_dict()

---> 35 values_json = json.dumps(values, sort_keys=True)

36 hsh = hashlib.md5(values_json.encode()).hexdigest()

37 return 'data-' + hsh

~\AppData\Local\Continuum\anaconda3\envs\data_analysis\lib\json\__init__.py in dumps(obj, skipkeys, ensure_ascii, check_circular, allow_nan, cls, indent, separators, default, sort_keys, **kw)

236 check_circular=check_circular, allow_nan=allow_nan, indent=indent,

237 separators=separators, default=default, sort_keys=sort_keys,

--> 238 **kw).encode(obj)

239

240

~\AppData\Local\Continuum\anaconda3\envs\data_analysis\lib\json\encoder.py in encode(self, o)

197 # exceptions aren't as detailed. The list call should be roughly

198 # equivalent to the PySequence_Fast that ''.join() would do.

--> 199 chunks = self.iterencode(o, _one_shot=True)

200 if not isinstance(chunks, (list, tuple)):

201 chunks = list(chunks)

~\AppData\Local\Continuum\anaconda3\envs\data_analysis\lib\json\encoder.py in iterencode(self, o, _one_shot)

255 self.key_separator, self.item_separator, self.sort_keys,

256 self.skipkeys, _one_shot)

--> 257 return _iterencode(o, 0)

258

259 def _make_iterencode(markers, _default, _encoder, _indent, _floatstr,

~\AppData\Local\Continuum\anaconda3\envs\data_analysis\lib\json\encoder.py in default(self, o)

178 """

179 raise TypeError("Object of type '%s' is not JSON serializable" %

--> 180 o.__class__.__name__)

181

182 def encode(self, o):

TypeError: Object of type 'Polygon' is not JSON serializable
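
The last frames show json.dumps being called on the dataset rows inside _dataset_name and meeting an object it has no encoder for. A minimal standard-library sketch of that same class of failure follows. The Polygon class here is a hypothetical stand-in (not shapely's), and dataset_name only imitates the json.dumps plus md5 lines shown in the traceback. It assumes geometry objects expose a GeoJSON-like mapping through __geo_interface__, as shapely geometries do.

```python
import hashlib
import json


class Polygon:
    """Hypothetical stand-in for a geometry object; NOT shapely's Polygon."""

    def __init__(self, ring):
        self.ring = ring

    @property
    def __geo_interface__(self):
        # GeoJSON-like mapping made only of dicts, lists, and numbers.
        return {"type": "Polygon", "coordinates": [self.ring]}


def dataset_name(values):
    """Imitate the hashing step from the _dataset_name frame."""
    values_json = json.dumps(values, sort_keys=True)
    return "data-" + hashlib.md5(values_json.encode()).hexdigest()


triangle = Polygon([[0, 0], [1, 0], [1, 1], [0, 0]])
raw_rows = [{"id": 0, "geometry": triangle}]

# Rows that still hold geometry objects fail inside json.dumps.
try:
    dataset_name(raw_rows)
    error_message = None
except TypeError as exc:
    error_message = str(exc)

# Rows whose geometry was first converted to plain dicts hash fine.
plain_rows = [
    {**row, "geometry": row["geometry"].__geo_interface__}
    for row in raw_rows
]
name = dataset_name(plain_rows)
```

This suggests the rows reaching _dataset_name during save() still contain raw geometry objects, whereas the .to_json() path presumably sees them already converted.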
