Faster Convex Lipschitz Regression

This reepository provides a python implementation of our Faster Convex Lipschitz Regression algorithm with GPU and CPU based on Pytorch.

Contact

You can contact us at [email protected]

This reepository provides a python implementation of our Faster Convex Lipschitz Regression algorithm with GPU and CPU based on Pytorch.

You can contact us at [email protected]

Quasi-Recurrent Neural Network (QRNN) for PyTorch Updated to support multi-GPU environments via DataParallel - see the the multigpu_dataparallel.py ex

Notice(2019.11.2) This repo was built back two years ago when there were no pytorch detection implementation that can achieve reasonable performance.

shufflev2-yolov5: lighter, faster and easier to deploy. Evolved from yolov5 and the size of model is only 1.7M (int8) and 3.3M (fp16). It can reach 10+ FPS on the Raspberry Pi 4B when the input size is 320×320~

YOLOv5-Lite:lighter, faster and easier to deploy Perform a series of ablation experiments on yolov5 to make it lighter (smaller Flops, lower memory, a

Transformer Captioning This repository contains the code for Transformer-based image captioning. Based on meshed-memory-transformer, we further optimi

Faster RCNN with PyTorch Note: I re-implemented faster rcnn in this project when I started learning PyTorch. Then I use PyTorch in all of my projects.

Tacotron 2 (without wavenet) PyTorch implementation of Natural TTS Synthesis By Conditioning Wavenet On Mel Spectrogram Predictions. This implementati

This project is based on ultralytics/yolov3. LF-YOLO (Lighter and Faster YOLO) is used to detect defect of X-ray weld image. Download $ git clone http

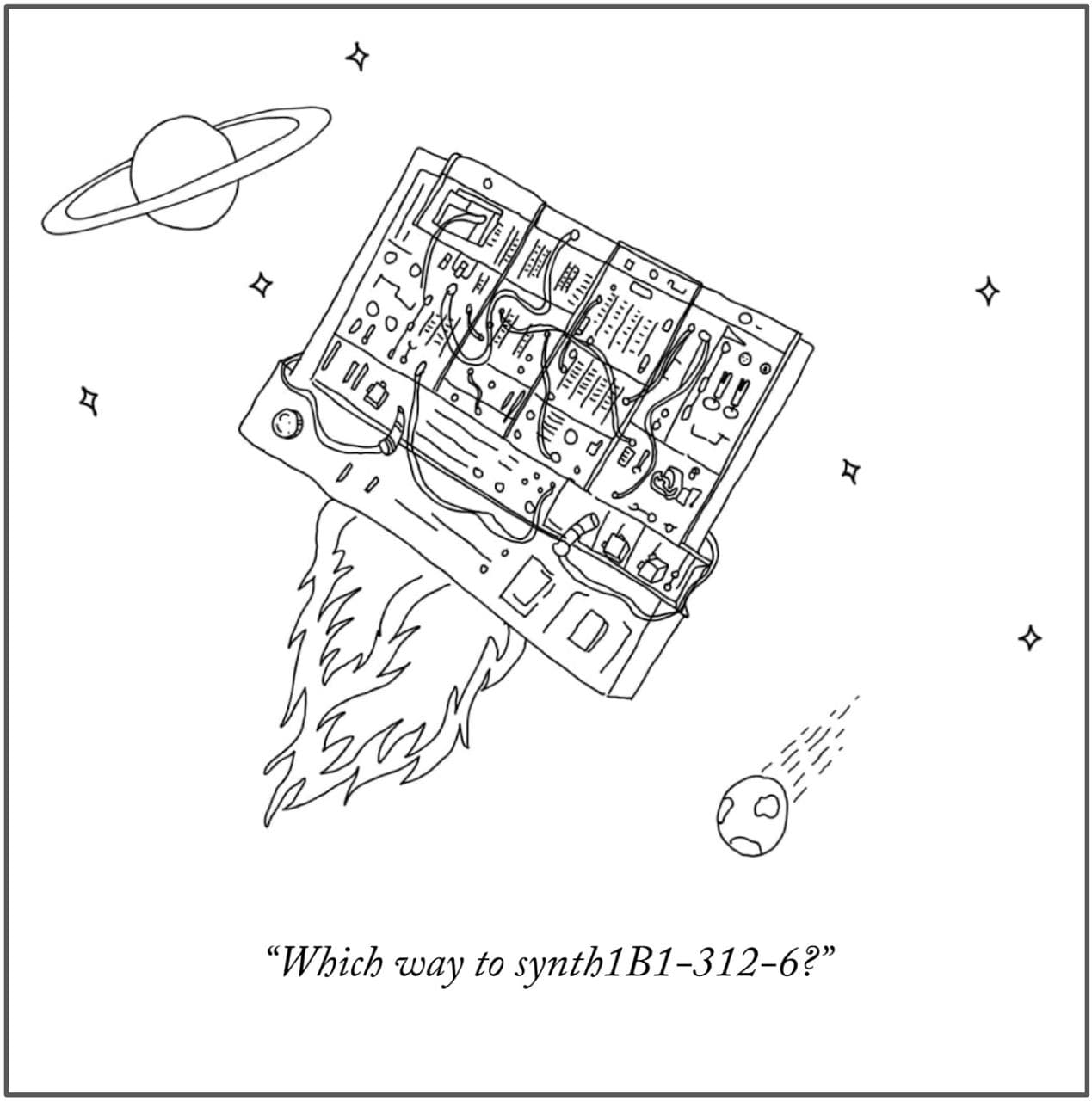

torchsynth The fastest synth in the universe. Introduction torchsynth is based upon traditional modular synthesis written in pytorch. It is GPU-option

Quantile Regression DQN Quantile Regression DQN a Minimal Working Example, Distributional Reinforcement Learning with Quantile Regression (https://arx

Hitters_Linear_Regression Kullanacağımız veri seti Carnegie Mellon Üniversitesi'

Modeling distributions on Riemannian manifolds is a crucial component in understanding non-Euclidean data that arises, e.g., in physics and geology. The budding approaches in this space are limited by representational and computational tradeoffs. We propose and study a class of flows that uses convex potentials from Riemannian optimal transport. These are universal and can model distributions on any compact Riemannian manifold without requiring domain knowledge of the manifold to be integrated into the architecture. We demonstrate that these flows can model standard distributions on spheres, and tori, on synthetic and geological data.

Licensing The majority of neural-scs is licensed under the CC BY-NC 4.0 License, however, portions of the project are available under separate license

VAE with Volume-Preserving Flows This is a PyTorch implementation of two volume-preserving flows as described in the following papers: Tomczak, J. M.,

Sylvester normalizing flows for variational inference Pytorch implementation of Sylvester normalizing flows, based on our paper: Sylvester normalizing

CFMM Optimal Routing This repository contains the code needed to generate the figures used in the paper Optimal Routing for Constant Function Market M

ESGD-M - A stochastic non-convex second order optimizer, suitable for training deep learning models, for PyTorch

LeViT: a Vision Transformer in ConvNet's Clothing for Faster Inference This repository contains PyTorch evaluation code, training code and pretrained

SparseML is a toolkit that includes APIs, CLIs, scripts and libraries that apply state-of-the-art sparsification algorithms such as pruning and quantization to any neural network. General, recipe-driven approaches built around these algorithms enable the simplification of creating faster and smaller models for the ML performance community at large.