Awesome Transformer Architecture Search:

To keep track of the large number of recent papers that look at the intersection of Transformers and Neural Architecture Search (NAS), we have created this awesome list of curated papers and resources, inspired by awesome-autodl, awesome-architecture-search, and awesome-computer-vision. Papers are divided into the following categories:

- General Transformer search

- Domain-specific, applied Transformer search (divided into NLP, Vision, ASR)

- Insights on Transformer components or searchable parameters

- Transformer Surveys

This repository is maintained by the AutoML Group Freiburg. Please feel free to open a pull request or an issue to add papers.

General Transformer Search

| Title | Venue | Group |

|---|---|---|

| UniNet: Unified Architecture Search with Convolutions, Transformer and MLP | arxiv [Oct'21] | SenseTime |

| Analyzing and Mitigating Interference in Neural Architecture Search | arxiv [Aug'21] | Tsinghua, MSR |

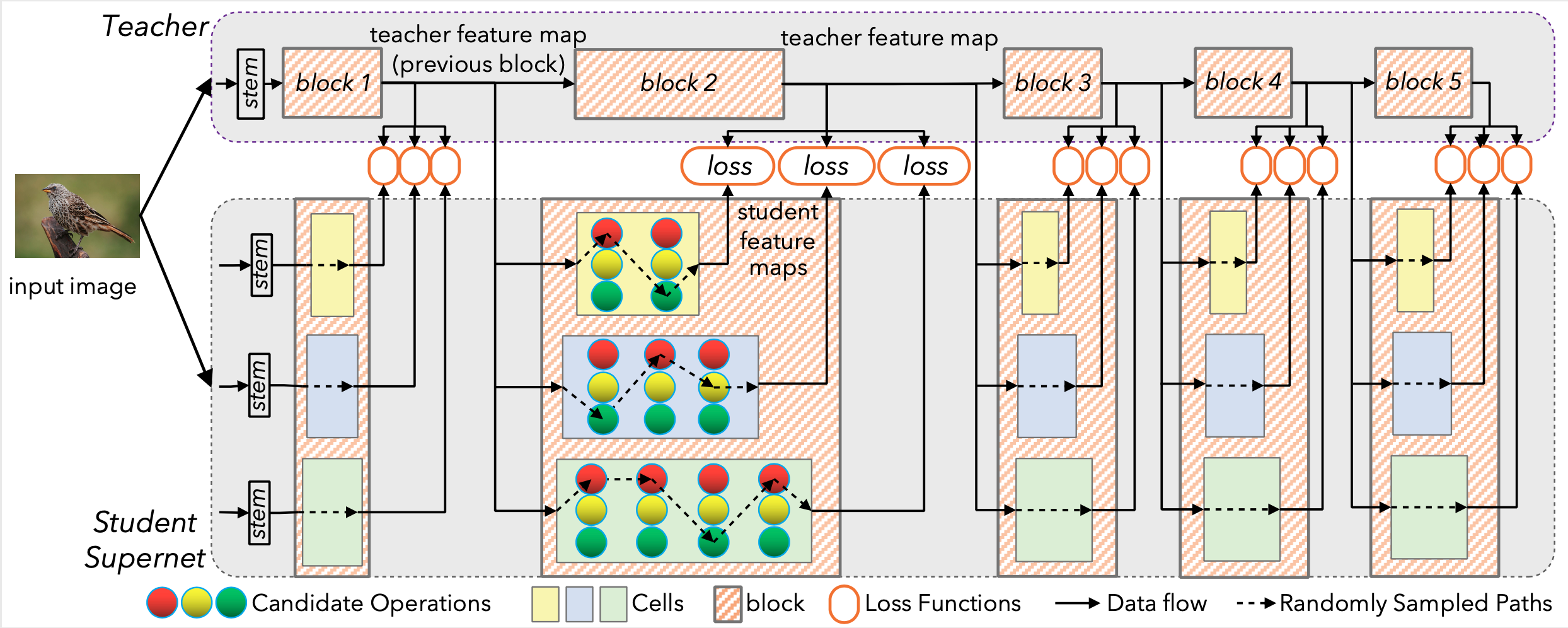

| BossNAS: Exploring Hybrid CNN-transformers with Block-wisely Self-supervised Neural Architecture Search | ICCV'21 | Sun Yat-sen University |

| Memory-Efficient Differentiable Transformer Architecture Search | ACL-IJCNLP'21 | MSR, Peking University |

| Finding Fast Transformers: One-Shot Neural Architecture Search by Component Composition | arxiv [Aug'20] | Google Research |

| AutoTrans: Automating Transformer Design via Reinforced Architecture Search | arxiv [Sep'20] | Fudan University |

| NAT: Neural Architecture Transformer for Accurate and Compact Architectures | NeurIPS'19 | Tencent AI |

| The Evolved Transformer | ICML'19 | Google Brain |

Domain Specific Transformer Search

Vision

| Title | Venue | Group |
|---|---|---|
| AutoFormer: Searching Transformers for Visual Recognition | ICCV'21 | MSR |
| GLiT: Neural Architecture Search for Global and Local Image Transformer | ICCV'21 | University of Sydney |
| Searching for Efficient Multi-Stage Vision Transformers | ICCV'21 workshop | MIT |
| HR-NAS: Searching Efficient High-Resolution Neural Architectures with Lightweight Transformers | CVPR'21 | Bytedance Inc. |
| Vision Transformer Architecture Search | arxiv [June'21] | SenseTime, Tsinghua University |

Natural Language Processing

| Title | Venue | Group |
|---|---|---|
| AutoTinyBERT: Automatic Hyper-parameter Optimization for Efficient Pre-trained Language Models | ACL'21 | Huawei Noah’s Ark Lab |
| NAS-BERT: Task-Agnostic and Adaptive-Size BERT Compression with Neural Architecture Search | KDD'21 | MSR, Tsinghua University |
| AutoBERT-Zero: Evolving the BERT backbone from scratch | arxiv [July'21] | Huawei Noah’s Ark Lab |
| HAT: Hardware-Aware Transformers for Efficient Natural Language Processing | ACL'20 | MIT |

Automatic Speech Recognition

| Title | Venue | Group |
|---|---|---|
| LightSpeech: Lightweight and Fast Text to Speech with Neural Architecture Search | ICASSP'21 | MSR |
| Darts-Conformer: Towards Efficient Gradient-Based Neural Architecture Search For End-to-End ASR | arxiv [Aug'21] | NPU, Xi'an |
| Improved Conformer-based End-to-End Speech Recognition Using Neural Architecture Search | arxiv [April'21] | Chinese Academy of Sciences |
| Evolved Speech-Transformer: Applying Neural Architecture Search to End-to-End Automatic Speech Recognition | INTERSPEECH'20 | VUNO Inc. |

Insights on Transformer components and interesting papers

| Title | Venue | Group |
|---|---|---|
| Patches Are All You Need? | ICLR'22 under review | - |
| Swin Transformer: Hierarchical Vision Transformer using Shifted Windows | ICCV'21 best paper | MSR |
| Rethinking Spatial Dimensions of Vision Transformers | ICCV'21 | NAVER AI |
| What makes for hierarchical vision transformers | arxiv [Sept'21] | HUST |
| AutoAttend: Automated Attention Representation Search | ICML'21 | Tsinghua University |
| Rethinking Attention with Performers | ICLR'21 Oral | |
| LambdaNetworks: Modeling long-range Interactions without Attention | ICLR'21 | Google Research |
| HyperGrid Transformers | ICLR'21 | Google Research |
| LocalViT: Bringing Locality to Vision Transformers | arxiv [April'21] | ETH Zurich |
| NASABN: A Neural Architecture Search Framework for Attention-Based Networks | IJCNN'20 | Chinese Academy of Sciences |
| Analyzing Multi-Head Self-Attention: Specialized Heads Do the Heavy Lifting, the Rest Can Be Pruned | ACL'19 | Yandex |

Transformer Surveys

| Title | Venue | Group |
|---|---|---|
| Transformers in Vision: A Survey | arxiv [Oct'21] | MBZ University of AI |
| Efficient Transformers: A Survey | arxiv [Sept'21] | Google Research |
