7 Repositories

Python triton Libraries

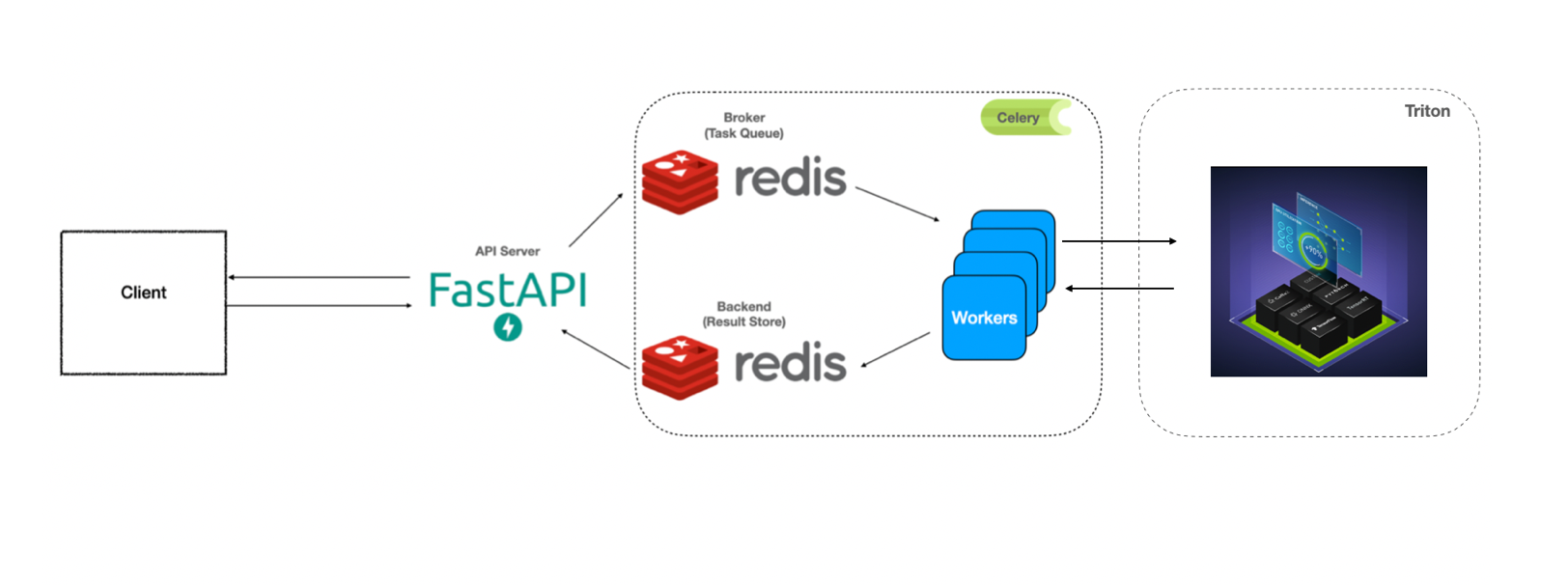

Simple example of FastAPI + Celery + Triton for benchmarking

You can see the previous work from: https://github.com/Curt-Park/producer-consumer-fastapi-celery https://github.com/Curt-Park/triton-inference-server

Pytorch Performace Tuning, WandB, AMP, Multi-GPU, TensorRT, Triton

Plant Pathology 2020 FGVC7 Introduction A deep learning model pipeline for training, experimentaiton and deployment for the Kaggle Competition, Plant

Python wrapper class for OpenVINO Model Server. User can submit inference request to OVMS with just a few lines of code

Python wrapper class for OpenVINO Model Server. User can submit inference request to OVMS with just a few lines of code.

Hardware-accelerated DNN model inference ROS2 packages using NVIDIA Triton/TensorRT for both Jetson and x86_64 with CUDA-capable GPU

Isaac ROS DNN Inference Overview This repository provides two NVIDIA GPU-accelerated ROS2 nodes that perform deep learning inference using custom mode

Deploy optimized transformer based models on Nvidia Triton server

🤗 Hugging Face Transformer submillisecond inference 🤯 and deployment on Nvidia Triton server Yes, you can perfom inference with transformer based mo

Deploy optimized transformer based models on Nvidia Triton server

Deploy optimized transformer based models on Nvidia Triton server

Implementation of a Transformer, but completely in Triton

Transformer in Triton (wip) Implementation of a Transformer, but completely in Triton. I'm completely new to lower-level neural net code, so this repo