Description

This is the repository for the paper "Unifying Cross-Lingual Semantic Role Labeling with Heterogeneous Linguistic Resources", to be presented at NAACL 2021 by Simone Conia, Andrea Bacciu and Roberto Navigli.

Abstract

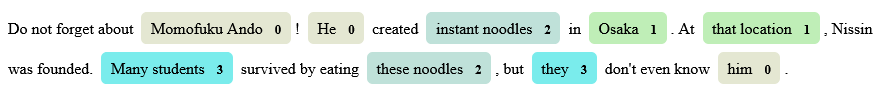

While cross-lingual techniques are finding increasing success in a wide range of Natural Language Processing tasks, their application to Semantic Role Labeling (SRL) has been strongly limited by the fact that each language adopts its own linguistic formalism, from PropBank for English to AnCora for Spanish and PDT-Vallex for Czech, inter alia. In this work, we address this issue and present a unified model to perform cross-lingual SRL over heterogeneous linguistic resources. Our model implicitly learns a high-quality mapping for different formalisms across diverse languages without resorting to word alignment and/or translation techniques. We find not only that our cross-lingual system is competitive with the current state of the art, but also that it is robust to low-data scenarios. Most interestingly, our unified model is able to annotate a sentence in a single forward pass with all the inventories it was trained with, providing a tool for the analysis and comparison of linguistic theories across different languages.

Download

You can download a copy of all the files in this repository by cloning it with git:

git clone https://github.com/SapienzaNLP/unify-srl.git

Model Checkpoint

Installation

To install the dependencies, you can create a conda environment from the provided environment.yml file (conda env create -f environment.yml).

To run the model on an NVIDIA GPU with CUDA, remember to install the CUDA build of the torch-scatter package, as described in the torch-scatter documentation.
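The torch-scatter wheel has to match both the installed PyTorch version and the CUDA version PyTorch was built with. The sketch below prints a matching pip command. It assumes the wheel index at data.pyg.org referenced in the torch-scatter installation instructions, and it assumes that wheels built for a PyTorch minor release (x.y.0) also serve its patch releases. The helper names (cuda_tag, torch_tag, scatter_install_command) are hypothetical and not part of this repository.

```python
import re
from typing import Optional

# Assumed wheel index layout (see the torch-scatter README): torch-<x.y.0>+<cuXXX|cpu>.html
PYG_WHEEL_INDEX = "https://data.pyg.org/whl/torch-{torch}+{cuda}.html"


def cuda_tag(cuda_version: Optional[str]) -> str:
    """Map torch.version.cuda (e.g. "11.1") to a wheel tag (e.g. "cu111").

    torch.version.cuda is None for CPU-only PyTorch builds, which map to "cpu".
    """
    if not cuda_version:
        return "cpu"
    return "cu" + cuda_version.replace(".", "")


def torch_tag(torch_version: str) -> str:
    """Keep major.minor, drop any local suffix, and set the patch to 0.

    Example: "1.8.1+cu111" -> "1.8.0" (assumption: patch releases share wheels).
    """
    match = re.match(r"(\d+)\.(\d+)", torch_version)
    if match is None:
        raise ValueError(f"unrecognised torch version: {torch_version!r}")
    major, minor = match.groups()
    return f"{major}.{minor}.0"


def scatter_install_command(torch_version: str, cuda_version: Optional[str]) -> str:
    """Build the pip command that installs torch-scatter from the matching wheel index."""
    url = PYG_WHEEL_INDEX.format(torch=torch_tag(torch_version), cuda=cuda_tag(cuda_version))
    return f"pip install torch-scatter -f {url}"


if __name__ == "__main__":
    try:
        import torch  # available once the conda environment is active
    except ImportError:
        print("PyTorch is not installed; create and activate the environment first.")
    else:
        print(scatter_install_command(torch.__version__, torch.version.cuda))
```

Run it inside the activated environment and then execute the printed command. If no wheel index exists for your PyTorch/CUDA combination, check the torch-scatter documentation for the supported versions.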

Cite this work

@inproceedings{conia-etal-2021-unify-srl,
    title = "Unifying Cross-Lingual Semantic Role Labeling with Heterogeneous Linguistic Resources",
    author = "Conia, Simone and
      Bacciu, Andrea and
      Navigli, Roberto",
    booktitle = "Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies",
    month = jun,
    year = "2021",
    address = "Online",
    publisher = "Association for Computational Linguistics",
    url = "https://www.aclweb.org/anthology/2021.naacl-main.31",
    pages = "338--351",
}