Clinica

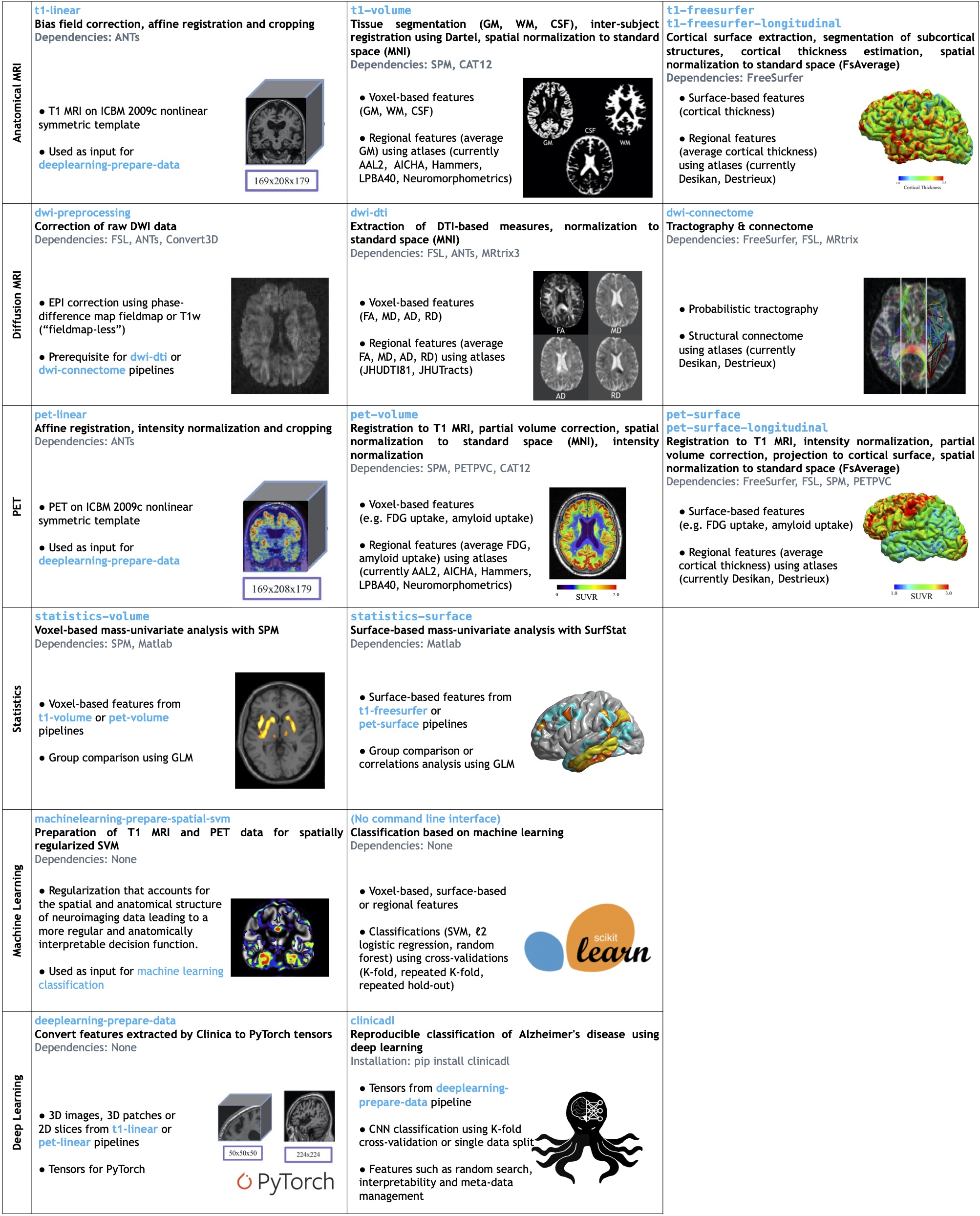

Software platform for clinical neuroimaging studies

Homepage | Documentation | Paper | Forum | See also: AD-ML, AD-DL, ClinicaDL

About The Project

Clinica is a software platform for clinical research studies involving patients with neurological and psychiatric diseases and the acquisition of multimodal data (neuroimaging, clinical and cognitive evaluations, genetics...), most often with longitudinal follow-up.

Clinica is command-line driven and written in Python. It uses the Nipype system for pipelining and combines widely used software packages for neuroimaging data analysis (ANTs, FreeSurfer, FSL, MRtrix, PETPVC, SPM) and machine learning (Scikit-learn) with the BIDS standard for data organization.

Clinica provides tools to convert publicly available neuroimaging datasets into BIDS, namely:

- ADNI: Alzheimer’s Disease Neuroimaging Initiative

- AIBL: Australian Imaging, Biomarker & Lifestyle Flagship Study of Ageing

- NIFD: Neuroimaging in Frontotemporal Dementia

- OASIS: Open Access Series of Imaging Studies

- OASIS-3: Longitudinal Neuroimaging, Clinical, and Cognitive Dataset for Normal Aging and Alzheimer’s Disease

Clinica can process any BIDS-compliant dataset with a set of complex processing pipelines involving different software packages for the analysis of neuroimaging data (T1-weighted MRI, diffusion MRI and PET data). It also provides integration between feature extraction and statistics, machine learning or deep learning.
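As a rough illustration of what "BIDS-compliant" means at the file-name level, the sketch below splits a BIDS-style path into its key-value entities, suffix and extension. It is not part of Clinica: the function `parse_bids_name` and the example file are made up for this illustration, and the sketch only covers the basic naming pattern, not full BIDS validation.

```python
import re
from pathlib import PurePosixPath

# BIDS file names are a chain of key-value entities ("sub-01", "ses-M00")
# joined by underscores, followed by a suffix such as "T1w".
ENTITY = re.compile(r"^([a-zA-Z0-9]+)-([a-zA-Z0-9]+)$")


def parse_bids_name(path):
    """Split a BIDS-style path into entities, suffix and extension.

    Hypothetical helper for illustration; it does not validate the dataset.
    """
    name = PurePosixPath(path).name
    stem, dot, extension = name.partition(".")
    *entity_parts, suffix = stem.split("_")
    entities = {}
    for part in entity_parts:
        match = ENTITY.match(part)
        if match is None:
            raise ValueError(f"not a key-value entity: {part!r}")
        entities[match.group(1)] = match.group(2)
    return {"entities": entities, "suffix": suffix, "extension": dot + extension}


# Hypothetical T1-weighted image, named for this example only.
example = "sub-01/ses-M00/anat/sub-01_ses-M00_T1w.nii.gz"
info = parse_bids_name(example)
print(info)
```

For real datasets, a dedicated BIDS validator is a better choice than a hand-written pattern like this one.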

Clinica is also showcased as a framework for the reproducible classification of Alzheimer's disease using machine learning and deep learning.

Getting Started

Full instructions for installation and additional information can be found in the user documentation.

Clinica currently supports macOS and Linux. It can be installed by typing the following command:

pip install clinica

To avoid conflicts with other versions of the dependency packages installed by pip, we strongly recommend creating a virtual environment before installing Clinica. For example, use Conda to create and activate a virtual environment (virtualenv works too):

conda create --name clinicaEnv python=3.7

conda activate clinicaEnv

Depending on the pipeline that you want to use, you need to install pipeline-specific interfaces. Not all the dependencies are necessary to run Clinica. Please refer to this page to determine which third-party libraries you need to install.
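Before running a pipeline, it can help to see which third-party tools are already reachable from your shell. The sketch below is one possible check, not a Clinica feature. It assumes that one representative executable per package (for example `recon-all` for FreeSurfer) is enough to detect an installation, and it leaves out SPM, which is usually run through MATLAB rather than as a command on the PATH.

```python
import shutil

# Assumption: one representative executable per package is enough to
# detect it. Adjust the names to match your own installation.
REPRESENTATIVE_EXECUTABLES = {
    "ANTs": "antsRegistration",
    "FreeSurfer": "recon-all",
    "FSL": "flirt",
    "MRtrix": "mrconvert",
    "PETPVC": "petpvc",
}


def find_tools(executables=REPRESENTATIVE_EXECUTABLES):
    """Map each package name to the executable's path, or None if not on PATH."""
    return {package: shutil.which(exe) for package, exe in executables.items()}


if __name__ == "__main__":
    for package, location in find_tools().items():
        print(f"{package:<12}{location or 'not found on PATH'}")
```

A missing entry only matters if the pipeline you plan to run depends on that package.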

Example

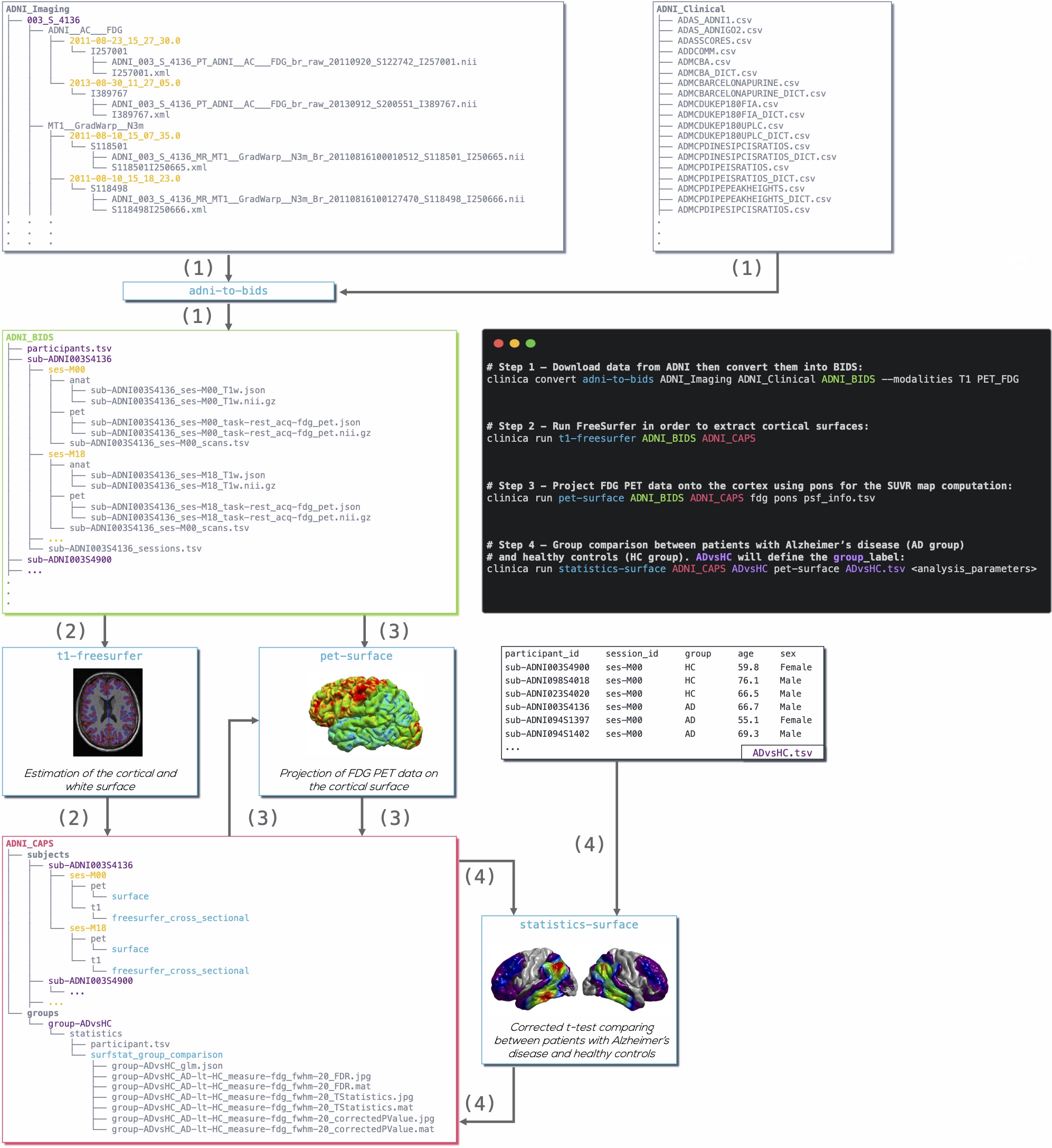

Diagram illustrating the Clinica pipelines involved when performing a group comparison of FDG PET data projected on the cortical surface between patients with Alzheimer's disease and healthy controls from the ADNI database:

- Clinical and neuroimaging data are downloaded from the ADNI website and data are converted into BIDS with the adni-to-bids converter.

- Estimation of the cortical and white surface is then produced by the t1-freesurfer pipeline.

- FDG PET data can be projected on the subject’s cortical surface and normalized to the FsAverage template from FreeSurfer using the pet-surface pipeline.

- TSV file with demographic information of the population studied is given to the statistics-surface pipeline to generate the results of the group comparison.
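The TSV file mentioned in the last step can be produced with any tool that writes tab-separated text. The sketch below shows one way to build such a file in Python. The `participant_id` and `session_id` columns follow BIDS naming; the other columns (`group`, `age`, `sex`) and every value are hypothetical placeholders, so check the documentation for the columns the pipeline actually expects.

```python
import csv
import io

# participant_id and session_id follow BIDS naming. The remaining columns
# and all values are hypothetical placeholders for illustration.
rows = [
    {"participant_id": "sub-01", "session_id": "ses-M00", "group": "AD", "age": 74, "sex": "F"},
    {"participant_id": "sub-02", "session_id": "ses-M00", "group": "AD", "age": 69, "sex": "M"},
    {"participant_id": "sub-03", "session_id": "ses-M00", "group": "CN", "age": 71, "sex": "F"},
    {"participant_id": "sub-04", "session_id": "ses-M00", "group": "CN", "age": 77, "sex": "M"},
]


def to_tsv(rows):
    """Serialize a list of dictionaries as tab-separated text with a header."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=list(rows[0]), delimiter="\t", lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


tsv_text = to_tsv(rows)
print(tsv_text)
```

Writing `tsv_text` to a file (for example with `pathlib.Path.write_text`) gives a table with one row per participant and visit.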

For more examples and details, please refer to the Documentation.

Support

- Check for past answers in the old Clinica Google Group

- Start a discussion on GitHub

- Report an issue on GitHub

Contributing

We encourage you to contribute to Clinica! Please check out the Contributing to Clinica guide for guidelines on how to proceed. Do not hesitate to ask questions if something is not clear to you, report an issue, etc.

License

This software is distributed under the MIT License. See license file for more information.

Citing us

- Routier, A., Burgos, N., Díaz, M., Bacci, M., Bottani, S., El-Rifai, O., Fontanella, S., Gori, P., Guillon, J., Guyot, A., Hassanaly, R., Jacquemont, T., Lu, P., Marcoux, A., Moreau, T., Samper-González, J., Teichmann, M., Thibeau-Sutre, E., Vaillant, G., Wen, J., Wild, A., Habert, M.-O., Durrleman, S., and Colliot, O.: Clinica: An Open Source Software Platform for Reproducible Clinical Neuroscience Studies. Frontiers in Neuroinformatics, 2021. doi:10.3389/fninf.2021.689675