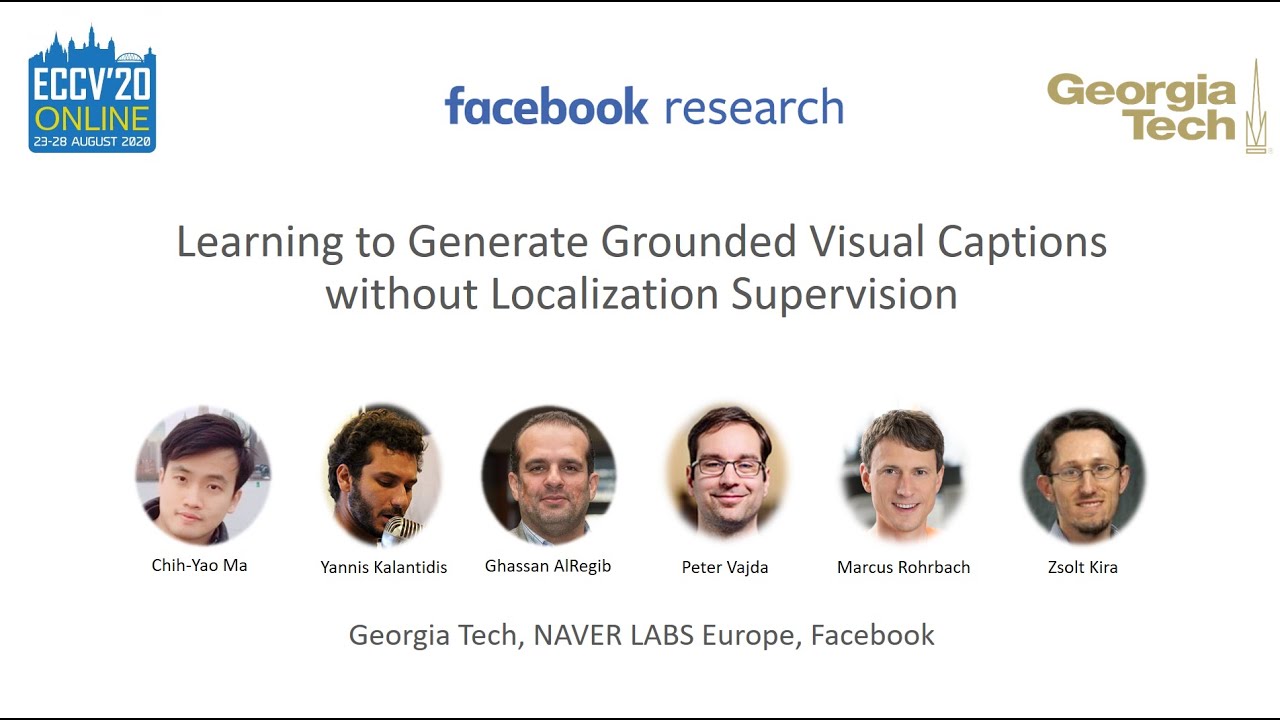

Learning to Generate Grounded Visual Captions without Localization Supervision

This is the PyTorch implementation of our paper:

Learning to Generate Grounded Visual Captions without Localization Supervision

Chih-Yao Ma, Yannis Kalantidis, Ghassan AlRegib, Peter Vajda, Marcus Rohrbach, Zsolt Kira

European Conference on Computer Vision (ECCV), 2020

10-min YouTube Video

How to start

Clone the repo recursively:

git clone --recursive git@github.com:chihyaoma/cyclical-visual-captioning.git

If you did not clone with the --recursive flag, you will need to fetch the pybind submodule manually by running the following from the top-level directory:

git submodule update --init --recursive
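To check whether the submodules were actually fetched, note that `git submodule status` prefixes every uninitialized submodule with a `-`. The sketch below relies only on that convention. The function names `uninitialized_from_status` and `ensure_submodules` are hypothetical helpers for illustration and are not part of this repository.

```shell
#!/usr/bin/env bash
# Sketch only: run from the top-level directory of the cloned repository.

# Reads `git submodule status` output on stdin and prints the path of every
# submodule that is not yet initialized (git prefixes such lines with "-").
uninitialized_from_status() {
  awk '/^-/ { print $2 }'
}

# Hypothetical helper: initialize submodules only when some are still missing.
ensure_submodules() {
  local missing
  missing="$(git submodule status --recursive | uninitialized_from_status)"
  if [ -n "$missing" ]; then
    echo "Initializing submodules:"
    echo "$missing"
    git submodule update --init --recursive
  fi
}
```

Calling `ensure_submodules` right after cloning is harmless: when every submodule is already present, it prints nothing and runs no update.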

Installation

The proposed cyclical method can be applied directly to image and video captioning tasks.

Currently, the installation guide and our code for video captioning on the ActivityNet-Entities dataset are provided in the anet-video-captioning folder.

Acknowledgments

Chih-Yao Ma and Zsolt Kira were partly supported by DARPA’s Lifelong Learning Machines (L2M) program, under Cooperative Agreement HR0011-18-2-0019, as part of their affiliation with Georgia Tech. We thank Chia-Jung Hsu for her valuable and artistic help with the figures.

Citation

If you find this repository useful, please cite our paper:

@inproceedings{ma2020learning,
  title={Learning to Generate Grounded Visual Captions without Localization Supervision},
  author={Ma, Chih-Yao and Kalantidis, Yannis and AlRegib, Ghassan and Vajda, Peter and Rohrbach, Marcus and Kira, Zsolt},
  booktitle={Proceedings of the European Conference on Computer Vision (ECCV)},
  year={2020},
  url={https://arxiv.org/abs/1906.00283},
}
