340 Repositories

Python visual-grounding Libraries

![[CVPR 2022 Oral] Balanced MSE for Imbalanced Visual Regression https://arxiv.org/abs/2203.16427](https://github.com/jiawei-ren/BalancedMSE/raw/main/figures/intro.png)

[CVPR 2022 Oral] Balanced MSE for Imbalanced Visual Regression https://arxiv.org/abs/2203.16427

Balanced MSE Code for the paper: Balanced MSE for Imbalanced Visual Regression Jiawei Ren, Mingyuan Zhang, Cunjun Yu, Ziwei Liu CVPR 2022 (Oral) News

One line to host them all. Bootstrap your image search case in minutes.

One line to host them all. Bootstrap your image search case in minutes. Survey NOW gives the world access to customized neural image search in just on

![[CVPR2022] This repository contains code for the paper](https://github.com/Bazinga699/NCL/raw/main/resource/framework_English.png)

[CVPR2022] This repository contains code for the paper "Nested Collaborative Learning for Long-Tailed Visual Recognition", published at CVPR 2022

Nested Collaborative Learning for Long-Tailed Visual Recognition This repository is the official PyTorch implementation of the paper in CVPR 2022: Nes

All of the figures and notebooks for my deep learning book, for free!

"Deep Learning - A Visual Approach" by Andrew Glassner This is the official repo for my book from No Starch Press. Ordering the book My book is called

SeqTR: A Simple yet Universal Network for Visual Grounding

SeqTR This is the official implementation of SeqTR: A Simple yet Universal Network for Visual Grounding, which simplifies and unifies the modelling fo

Language Models Can See: Plugging Visual Controls in Text Generation

Language Models Can See: Plugging Visual Controls in Text Generation Authors: Yixuan Su, Tian Lan, Yahui Liu, Fangyu Liu, Dani Yogatama, Yan Wang, Lin

A python package for generating, analyzing and visualizing building shadows

pybdshadow Introduction pybdshadow is a python package for generating, analyzing and visualizing building shadows from large scale building geographic

Official repository of OFA. Paper: Unifying Architectures, Tasks, and Modalities Through a Simple Sequence-to-Sequence Learning Framework

Paper | Blog OFA is a unified multimodal pretrained model that unifies modalities (i.e., cross-modality, vision, language) and tasks (e.g., image gene

A Decentralized Omnidirectional Visual-Inertial-UWB State Estimation System for Aerial Swar.

Omni-swarm A Decentralized Omnidirectional Visual-Inertial-UWB State Estimation System for Aerial Swarm Introduction Omni-swarm is a decentralized omn

Self-Supervised Vision Transformers Learn Visual Concepts in Histopathology (LMRL Workshop, NeurIPS 2021)

Self-Supervised Vision Transformers Learn Visual Concepts in Histopathology Self-Supervised Vision Transformers Learn Visual Concepts in Histopatholog

[CVPR 2022 Oral] TubeDETR: Spatio-Temporal Video Grounding with Transformers

TubeDETR: Spatio-Temporal Video Grounding with Transformers Website • STVG Demo • Paper This repository provides the code for our paper. This includes

Facestar dataset. High quality audio-visual recordings of human conversational speech.

Facestar Dataset Description Existing audio-visual datasets for human speech are either captured in a clean, controlled environment but contain only a

Official page of Struct-MDC (RA-L'22 with IROS'22 option); Depth completion from Visual-SLAM using point & line features

Struct-MDC (click the above buttons for redirection!) Official page of "Struct-MDC: Mesh-Refined Unsupervised Depth Completion Leveraging Structural R

![[ICCV2021] 3DVG-Transformer: Relation Modeling for Visual Grounding on Point Clouds](https://github.com/zlccccc/3DVG-Transformer/raw/main/demo/Model.png)

[ICCV2021] 3DVG-Transformer: Relation Modeling for Visual Grounding on Point Clouds

3DVG-Transformer This repository is for the ICCV 2021 paper "3DVG-Transformer: Relation Modeling for Visual Grounding on Point Clouds" Our method "3DV

Implementation of 🦩 Flamingo, state-of-the-art few-shot visual question answering attention net out of Deepmind, in Pytorch

🦩 Flamingo - Pytorch Implementation of Flamingo, state-of-the-art few-shot visual question answering attention net, in Pytorch. It will include the p

A Flask Sentiment Analysis API, with visual implementation

The Sentiment Analysis Api was created using python flask module,it allows users to parse a text or sentence throught the (?text) arguement, then view the sentiment analysis of that sentence. It can be implementable into a web application.

Code for One-shot Talking Face Generation from Single-speaker Audio-Visual Correlation Learning (AAAI 2022)

One-shot Talking Face Generation from Single-speaker Audio-Visual Correlation Learning (AAAI 2022) Paper | Demo Requirements Python = 3.6 , Pytorch

The visual framework is designed on the idea of module and implemented by mixin method

Visual Framework The visual framework is designed on the idea of module and implemented by mixin method. Its biggest feature is the mixins module whic

Unifying Architectures, Tasks, and Modalities Through a Simple Sequence-to-Sequence Learning Framework

Official repository of OFA. Paper: Unifying Architectures, Tasks, and Modalities Through a Simple Sequence-to-Sequence Learning Framework

A visual indicator of what environment/system you're using in django

A visual indicator of what environment/system you're using in django

A Broad Study on the Transferability of Visual Representations with Contrastive Learning

A Broad Study on the Transferability of Visual Representations with Contrastive Learning This repository contains code for the paper: A Broad Study on

Learning Visual Words for Weakly-Supervised Semantic Segmentation

[IJCAI 2021] Learning Visual Words for Weakly-Supervised Semantic Segmentation Implementation of IJCAI 2021 paper Learning Visual Words for Weakly-Sup

On the Limits of Pseudo Ground Truth in Visual Camera Re-Localization

On the Limits of Pseudo Ground Truth in Visual Camera Re-Localization This repository contains the evaluation code and alternative pseudo ground truth

Customizing Visual Styles in Plotly

Customizing Visual Styles in Plotly Code for a workshop originally developed for an Unconference session during the Outlier Conference hosted by Data

A Novel Plug-in Module for Fine-grained Visual Classification

Pytorch implementation for A Novel Plug-in Module for Fine-Grained Visual Classification. fine-grained visual classification task.

HAIS_2GNN: 3D Visual Grounding with Graph and Attention

HAIS_2GNN: 3D Visual Grounding with Graph and Attention This repository is for the HAIS_2GNN research project. Tao Gu, Yue Chen Introduction The motiv

SAAVN - Sound Adversarial Audio-Visual Navigation,ICLR2022 (In PyTorch)

SAAVN SAAVN Code release for paper "Sound Adversarial Audio-Visual Navigation,IC

PyTorch code for BLIP: Bootstrapping Language-Image Pre-training for Unified Vision-Language Understanding and Generation

BLIP: Bootstrapping Language-Image Pre-training for Unified Vision-Language Understanding and Generation

Deep ViT Features as Dense Visual Descriptors

dino-vit-features [paper] [project page] Official implementation of the paper "Deep ViT Features as Dense Visual Descriptors". We demonstrate the effe

Ensemble Visual-Inertial Odometry (EnVIO)

Ensemble Visual-Inertial Odometry (EnVIO) Authors : Jae Hyung Jung, Yeongkwon Choe, and Chan Gook Park 1. Overview This is a ROS package of Ensemble V

PyTorch implementation for the paper Visual Representation Learning with Self-Supervised Attention for Low-Label High-Data Regime

Visual Representation Learning with Self-Supervised Attention for Low-Label High-Data Regime Created by Prarthana Bhattacharyya. Disclaimer: This is n

Vpw analyzer - A visual J1850 VPW analyzer written in Python

VPW Analyzer A visual J1850 VPW analyzer written in Python Requires Tkinter, Pan

Revisiting Weakly Supervised Pre-Training of Visual Perception Models

SWAG: Supervised Weakly from hashtAGs This repository contains SWAG models from the paper Revisiting Weakly Supervised Pre-Training of Visual Percepti

On the Adversarial Robustness of Visual Transformer

On the Adversarial Robustness of Visual Transformer Code for our paper "On the Adversarial Robustness of Visual Transformers"

This repository contains the implementation of the following paper: Cross-Descriptor Visual Localization and Mapping

Cross-Descriptor Visual Localization and Mapping This repository contains the implementation of the following paper: "Cross-Descriptor Visual Localiza

Clustering is a popular approach to detect patterns in unlabeled data

Visual Clustering Clustering is a popular approach to detect patterns in unlabeled data. Existing clustering methods typically treat samples in a data

This repository contains source code for the Situated Interactive Language Grounding (SILG) benchmark

SILG This repository contains source code for the Situated Interactive Language Grounding (SILG) benchmark. If you find this work helpful, please cons

This is a small program that prints a user friendly, visual representation, of your current bsp tree

bspcq, q for query A bspc analyzer (utility for bspwm) This is a small program that prints a user friendly, visual representation, of your current bsp

Wider or Deeper: Revisiting the ResNet Model for Visual Recognition

ademxapp Visual applications by the University of Adelaide In designing our Model A, we did not over-optimize its structure for efficiency unless it w

Multi-modal Text Recognition Networks: Interactive Enhancements between Visual and Semantic Features

Multi-modal Text Recognition Networks: Interactive Enhancements between Visual and Semantic Features | paper | Official PyTorch implementation for Mul

TensorDebugger (TDB) is a visual debugger for deep learning. It extends TensorFlow with breakpoints + real-time visualization of the data flowing through the computational graph

TensorDebugger (TDB) is a visual debugger for deep learning. It extends TensorFlow (Google's Deep Learning framework) with breakpoints + real-time visualization of the data flowing through the computational graph.

OMNIVORE is a single vision model for many different visual modalities

Omnivore: A Single Model for Many Visual Modalities [paper][website] OMNIVORE is a single vision model for many different visual modalities. It learns

Collect some papers about transformer with vision. Awesome Transformer with Computer Vision (CV)

Awesome Visual-Transformer Collect some Transformer with Computer-Vision (CV) papers. If you find some overlooked papers, please open issues or pull r

Transformer in Vision

Transformer-in-Vision Recent Transformer-based CV and related works. Welcome to comment/contribute! Keep updated. Resource SCENIC: A JAX Library for C

A general python framework for visual object tracking and video object segmentation, based on PyTorch

PyTracking A general python framework for visual object tracking and video object segmentation, based on PyTorch. 📣 Two tracking/VOS papers accepted

Codes and scripts for "Explainable Semantic Space by Grounding Languageto Vision with Cross-Modal Contrastive Learning"

Visually Grounded Bert Language Model This repository is the official implementation of Explainable Semantic Space by Grounding Language to Vision wit

Visual Python and C++ nanosecond profiler, logger, tests enabler

Look into Palanteer and get an omniscient view of your program Palanteer is a set of lean and efficient tools to improve the quality of software, for

Flow-based visual scripting for Python

A simple visual node editor for Python Ryven combines flow-based visual scripting with Python. It gives you absolute freedom for your nodes and a simp

PyTorch code for the NAACL 2021 paper "Improving Generation and Evaluation of Visual Stories via Semantic Consistency"

Improving Generation and Evaluation of Visual Stories via Semantic Consistency PyTorch code for the NAACL 2021 paper "Improving Generation and Evaluat

Visual dialog agents with pre-trained vision-and-language encoders.

Learning Better Visual Dialog Agents with Pretrained Visual-Linguistic Representation Or READ-UP: Referring Expression Agent Dialog with Unified Pretr

A Unified Framework and Analysis for Structured Knowledge Grounding

UnifiedSKG 📚 : Unifying and Multi-Tasking Structured Knowledge Grounding with Text-to-Text Language Models Code for paper UnifiedSKG: Unifying and Mu

Implementations for the ICLR-2021 paper: SEED: Self-supervised Distillation For Visual Representation.

Implementations for the ICLR-2021 paper: SEED: Self-supervised Distillation For Visual Representation.

PyFlow is a general purpose visual scripting framework for python

PyFlow is a general purpose visual scripting framework for python. State Base structure of program implemented, such things as packages disco

Reproducing-BowNet: Learning Representations by Predicting Bags of Visual Words

Reproducing-BowNet Our reproducibility effort based on the 2020 ML Reproducibility Challenge. We are reproducing the results of this CVPR 2020 paper:

Source codes for Improved Few-Shot Visual Classification (CVPR 2020), Enhancing Few-Shot Image Classification with Unlabelled Examples

Source codes for Improved Few-Shot Visual Classification (CVPR 2020), Enhancing Few-Shot Image Classification with Unlabelled Examples (WACV 2022) and Beyond Simple Meta-Learning: Multi-Purpose Models for Multi-Domain, Active and Continual Few-Shot Learning (TPAMI 2022 - in submission)

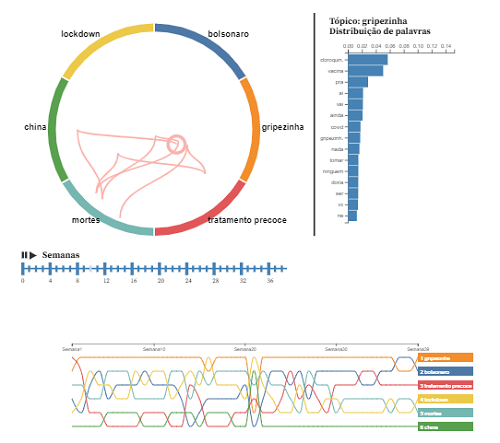

Project for the discipline of Visual Data Analysis at EMAp FGV.

Analysis of the dissemination of fake news about COVID-19 on Twitter This project was the final work for the discipline of Visual Data Analysis of the

VL-LTR: Learning Class-wise Visual-Linguistic Representation for Long-Tailed Visual Recognition

VL-LTR: Learning Class-wise Visual-Linguistic Representation for Long-Tailed Visual Recognition Usage First, install PyTorch 1.7.1+, torchvision 0.8.2

PyTorch implementation of Lip to Speech Synthesis with Visual Context Attentional GAN (NeurIPS2021)

Lip to Speech Synthesis with Visual Context Attentional GAN This repository contains the PyTorch implementation of the following paper: Lip to Speech

Multi-Stage Episodic Control for Strategic Exploration in Text Games

XTX: eXploit - Then - eXplore Requirements First clone this repo using git clone https://github.com/princeton-nlp/XTX.git Please create two conda envi

RARA: Zero-shot Sim2Real Visual Navigation with Following Foreground Cues

RARA: Zero-shot Sim2Real Visual Navigation with Following Foreground Cues FGBG (foreground-background) pytorch package for defining and training model

Housing Price Prediction Using Machine Learning.

HOUSING PRICE PREDICTION USING MACHINE LEARNING DESCRIPTION Housing Price Prediction Using Machine Learning is to predict the data of housings. Here I

Which Apple Keeps Which Doctor Away? Colorful Word Representations with Visual Oracles

Which Apple Keeps Which Doctor Away? Colorful Word Representations with Visual Oracles (TASLP 2022)

PyTorch implementaton of our CVPR 2021 paper "Bridging the Visual Gap: Wide-Range Image Blending"

Bridging the Visual Gap: Wide-Range Image Blending PyTorch implementaton of our CVPR 2021 paper "Bridging the Visual Gap: Wide-Range Image Blending".

A self-supervised learning framework for audio-visual speech

AV-HuBERT (Audio-Visual Hidden Unit BERT) Learning Audio-Visual Speech Representation by Masked Multimodal Cluster Prediction Robust Self-Supervised A

We propose the adversarial blur attack (ABA) against visual object tracking.

ABA We propose the adversarial blur attack (ABA) against visual object tracking. The ICCV link: https://arxiv.org/abs/2107.12085 and, https://openacce

Train Scene Graph Generation for Visual Genome and GQA in PyTorch = 1.2 with improved zero and few-shot generalization.

Scene Graph Generation Object Detections Ground truth Scene Graph Generated Scene Graph In this visualization, woman sitting on rock is a zero-shot tr

Code and Experiments for ACL-IJCNLP 2021 Paper Mind Your Outliers! Investigating the Negative Impact of Outliers on Active Learning for Visual Question Answering.

Code and Experiments for ACL-IJCNLP 2021 Paper Mind Your Outliers! Investigating the Negative Impact of Outliers on Active Learning for Visual Question Answering.

Bottom-up attention model for image captioning and VQA, based on Faster R-CNN and Visual Genome

bottom-up-attention This code implements a bottom-up attention model, based on multi-gpu training of Faster R-CNN with ResNet-101, using object and at

Go from graph data to a secure and interactive visual graph app in 15 minutes. Batteries-included self-hosting of graph data apps with Streamlit, Graphistry, RAPIDS, and more!

✔️ Linux ✔️ OS X ❌ Windows (#39) Welcome to graph-app-kit Turn your graph data into a secure and interactive visual graph app in 15 minutes! Why This

Referring Video Object Segmentation

Awesome-Referring-Video-Object-Segmentation Welcome to starts ⭐ & comments 💹 & sharing 😀 !! - 2021.12.12: Recent papers (from 2021) - welcome to ad

PyTorch code for: Learning to Generate Grounded Visual Captions without Localization Supervision

Learning to Generate Grounded Visual Captions without Localization Supervision This is the PyTorch implementation of our paper: Learning to Generate G

Character Grounding and Re-Identification in Story of Videos and Text Descriptions

Character in Story Identification Network (CiSIN) This project hosts the code for our paper. Youngjae Yu, Jongseok Kim, Heeseung Yun, Jiwan Chung and

Meshed-Memory Transformer for Image Captioning. CVPR 2020

M²: Meshed-Memory Transformer This repository contains the reference code for the paper Meshed-Memory Transformer for Image Captioning (CVPR 2020). Pl

This repository focus on Image Captioning & Video Captioning & Seq-to-Seq Learning & NLP

Awesome-Visual-Captioning Table of Contents ACL-2021 CVPR-2021 AAAI-2021 ACMMM-2020 NeurIPS-2020 ECCV-2020 CVPR-2020 ACL-2020 AAAI-2020 ACL-2019 NeurI

Image captioning - Tensorflow implementation of Show, Attend and Tell: Neural Image Caption Generation with Visual Attention

Introduction This neural system for image captioning is roughly based on the paper "Show, Attend and Tell: Neural Image Caption Generation with Visual

NFT-Price-Prediction-CNN - Using visual feature extraction, prices of NFTs are predicted via CNN (Alexnet and Resnet) architectures.

NFT-Price-Prediction-CNN - Using visual feature extraction, prices of NFTs are predicted via CNN (Alexnet and Resnet) architectures.

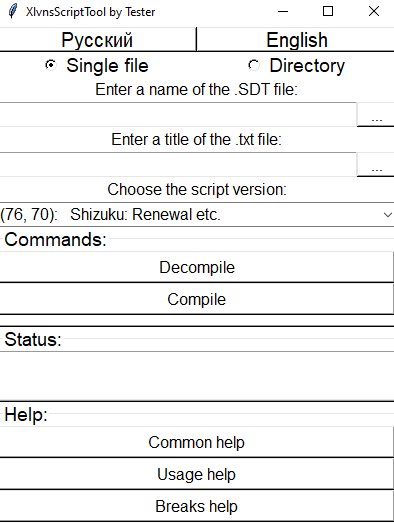

XlvnsScriptTool - Tool for decompilation and compilation of scripts .SDT from the visual novel's engine xlvns

XlvnsScriptTool English Dual languaged (rus+eng) tool for decompiling and compiling (actually, this tool is more than just (dis)assenbler, but less th

Pseudo lidar - (CVPR 2019) Pseudo-LiDAR from Visual Depth Estimation: Bridging the Gap in 3D Object Detection for Autonomous Driving

Pseudo-LiDAR from Visual Depth Estimation: Bridging the Gap in 3D Object Detection for Autonomous Driving This paper has been accpeted by Conference o

SPT_LSA_ViT - Implementation for Visual Transformer for Small-size Datasets

Vision Transformer for Small-Size Datasets Seung Hoon Lee and Seunghyun Lee and Byung Cheol Song | Paper Inha University Abstract Recently, the Vision

Scaling and Benchmarking Self-Supervised Visual Representation Learning

FAIR Self-Supervision Benchmark is deprecated. Please see VISSL, a ground-up rewrite of benchmark in PyTorch. FAIR Self-Supervision Benchmark This cod

Official implementation of CATs: Cost Aggregation Transformers for Visual Correspondence NeurIPS'21

CATs: Cost Aggregation Transformers for Visual Correspondence NeurIPS'21 For more information, check out the paper on [arXiv]. Training with different

🔀 Visual Room Rearrangement

AI2-THOR Rearrangement Challenge Welcome to the 2021 AI2-THOR Rearrangement Challenge hosted at the CVPR'21 Embodied-AI Workshop. The goal of this cha

For storing the complete exploration of Visual Question Answering for our B.Tech Project

Multi-Image vqa @authors: Akhilesh, Janhavi, Harsh Paper summary, Ideas tried and their corresponding results: on wiki Other discussions: on discussio

Code to generate datasets used in "How Useful is Self-Supervised Pretraining for Visual Tasks?"

Synthetic dataset rendering Framework for producing the synthetic datasets used in: How Useful is Self-Supervised Pretraining for Visual Tasks? Alejan

PyTorch implementation of MoCo: Momentum Contrast for Unsupervised Visual Representation Learning

MoCo: Momentum Contrast for Unsupervised Visual Representation Learning This is a PyTorch implementation of the MoCo paper: @Article{he2019moco, aut

![[arXiv 2020] Video Representation Learning with Visual Tempo Consistency](https://github.com/decisionforce/VTHCL/raw/master/docs/figures/pipeline.png)

[arXiv 2020] Video Representation Learning with Visual Tempo Consistency

Video Representation Learning with Visual Tempo Consistency [Paper] [Project Page] News Full codebae is coming soon Pretained Models For now, we provi

An unsupervised learning framework for depth and ego-motion estimation from monocular videos

SfMLearner This codebase implements the system described in the paper: Unsupervised Learning of Depth and Ego-Motion from Video Tinghui Zhou, Matthew

Code for the paper: Audio-Visual Scene Analysis with Self-Supervised Multisensory Features

[Paper] [Project page] This repository contains code for the paper: Andrew Owens, Alexei A. Efros. Audio-Visual Scene Analysis with Self-Supervised Mu

Percy visual testing for Python Selenium

percy-selenium-python Percy visual testing for Python Selenium. Installation npm install @percy/cli: $ npm install --save-dev @percy/cli pip install P

A small script written in Python3 that generates a visual representation of the Mandelbrot set.

Mandelbrot Set Generator A small script written in Python3 that generates a visual representation of the Mandelbrot set. Abstract The colors in the ou

![[ACMMM 2021, Oral] Code release for](https://github.com/yikaiw/EIP/raw/main/assets/intro.png)

[ACMMM 2021, Oral] Code release for "Elastic Tactile Simulation Towards Tactile-Visual Perception"

EIP: Elastic Interaction of Particles Code release for "Elastic Tactile Simulation Towards Tactile-Visual Perception", in ACMMM (Oral) 2021. By Yikai

Visual odometry package based on hardware-accelerated NVIDIA Elbrus library with world class quality and performance.

Isaac ROS Visual Odometry This repository provides a ROS2 package that estimates stereo visual inertial odometry using the Isaac Elbrus GPU-accelerate

Python package for analyzing behavioral data for Brain Observatory: Visual Behavior

Allen Institute Visual Behavior Analysis package This repository contains code for analyzing behavioral data from the Allen Brain Observatory: Visual

Weak-supervised Visual Geo-localization via Attention-based Knowledge Distillation

Weak-supervised Visual Geo-localization via Attention-based Knowledge Distillation Introduction WAKD is a PyTorch implementation for our ICPR-2022 pap

Cilantropy: a Python Package Manager interface created to provide an "easy-to-use" visual and also a command-line interface for Pythonistas.

Cilantropy Cilantropy is a Python Package Manager interface created to provide an "easy-to-use" visual and also a command-line interface for Pythonist

Dual languaged (rus+eng) tool for packing and unpacking archives of Silky Engine.

SilkyArcTool English Dual languaged (rus+eng) GUI tool for packing and unpacking archives of Silky Engine. It is not the same arc as used in Ai6WIN. I

This repository provides data for the VAW dataset as described in the CVPR 2021 paper titled "Learning to Predict Visual Attributes in the Wild"

Visual Attributes in the Wild (VAW) This repository provides data for the VAW dataset as described in the CVPR 2021 Paper: Learning to Predict Visual

[AAAI-2022] Official implementations of MCL: Mutual Contrastive Learning for Visual Representation Learning

Mutual Contrastive Learning for Visual Representation Learning This project provides source code for our Mutual Contrastive Learning for Visual Repres

Depth image based mouse cursor visual haptic

Depth image based mouse cursor visual haptic How to run it. Install pyqt5. Install python modules pip install Pillow pip install numpy For illustrati

![[CoRL 21'] TANDEM: Tracking and Dense Mapping in Real-time using Deep Multi-view Stereo](https://github.com/tum-vision/tandem/raw/master/assets/tandem_poster.jpg)

[CoRL 21'] TANDEM: Tracking and Dense Mapping in Real-time using Deep Multi-view Stereo

TANDEM: Tracking and Dense Mapping in Real-time using Deep Multi-view Stereo Lukas Koestler1* Nan Yang1,2*,† Niclas Zeller2,3 Daniel Cremers1