PocketNet

This is the official repository of the paper:

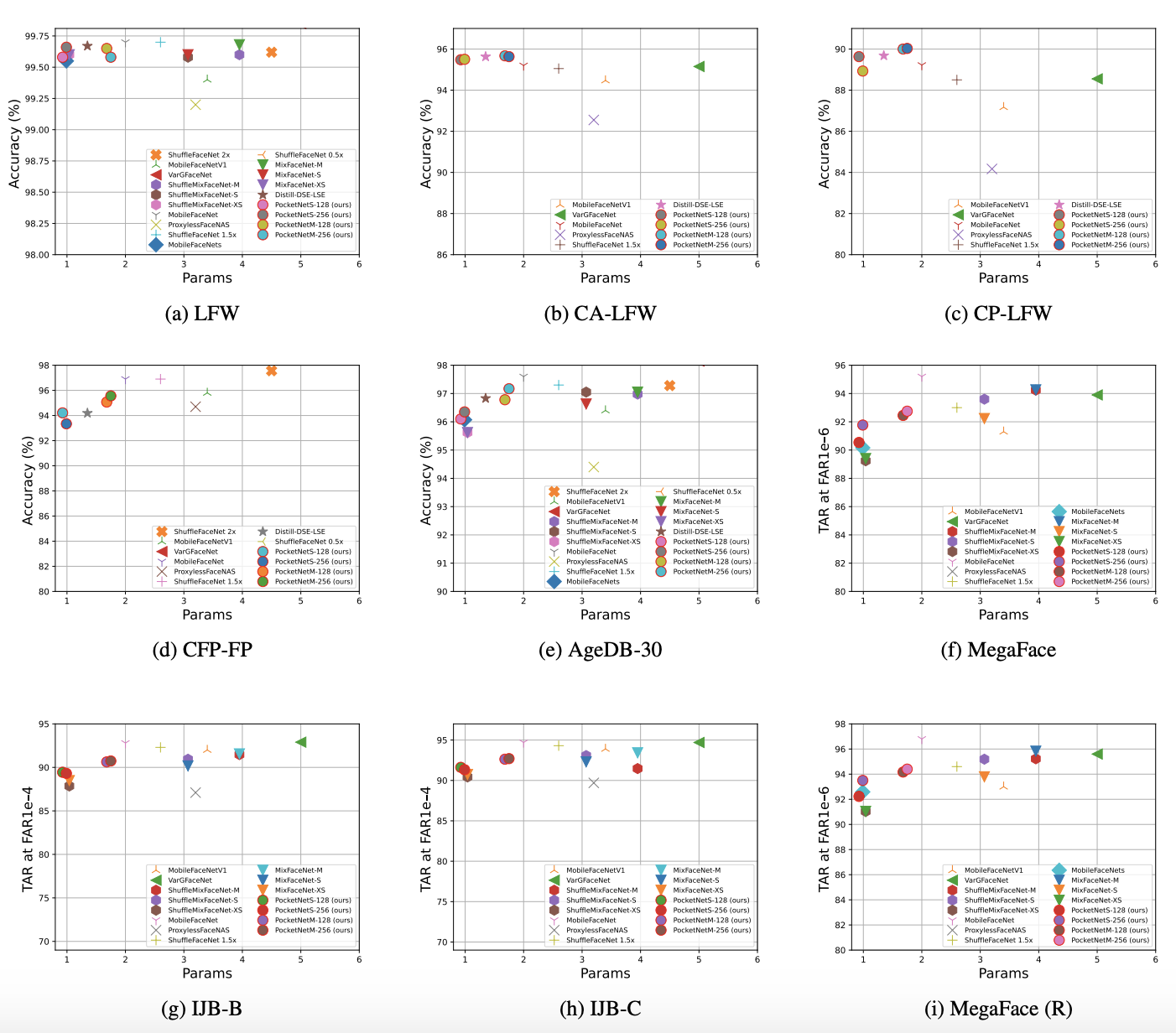

PocketNet: Extreme Lightweight Face Recognition Network using Neural Architecture Search and Multi-Step Knowledge Distillation

Paper on arXiv: arXiv:2108.10710

Face recognition model training

Download the MS1MV2 dataset from insightface and strictly follow the license terms of its distribution

Extract the dataset and place it in the data folder

Rename config/config_xxxxxx.py to config/config.py
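The configuration step above can also be scripted. The following is a minimal sketch, not part of the repository: the helper name `activate_config` and its `template_name` argument are hypothetical, and it copies the template instead of renaming it so that the original file stays available.

```python
import shutil
from pathlib import Path


def activate_config(repo_root: Path, template_name: str) -> Path:
    """Copy config/<template_name> to config/config.py and return the new path.

    Hypothetical helper: template_name is whichever config_*.py file
    you want to train with; it is not a name defined by the repository.
    """
    config_dir = repo_root / "config"
    source = config_dir / template_name
    if not source.is_file():
        raise FileNotFoundError(f"No such config template: {source}")
    target = config_dir / "config.py"
    # Copy rather than rename, so the template is kept for later runs.
    shutil.copyfile(source, target)
    return target
```

Calling `activate_config(Path("."), template_name)` from the repository root, with `template_name` set to the chosen config file, would leave an active `config/config.py` in place before running the training scripts.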

- Train PocketNet with ArcFace loss
  - `./train.sh`
- Train PocketNet with template knowledge distillation
  - `./train_kd.sh`
- Train PocketNet with multi-step template knowledge distillation
  - `./train_kd.sh`
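To give a rough idea of what the training objectives above compute, here is a small pure-Python sketch for a single sample. It is not the code run by `train.sh` or `train_kd.sh`. It assumes the usual ArcFace formulation (an additive angular margin on the target-class cosine, followed by scaling and softmax cross-entropy) and assumes that template knowledge distillation adds a mean squared error term between the student and teacher embeddings. The function names, the `kd_weight` parameter, and the default `scale` and `margin` values are illustrative assumptions.

```python
import math


def arcface_logits(cosines, target, scale=64.0, margin=0.5):
    """Add an angular margin to the target-class cosine, then scale all logits.

    Simplified: real implementations also handle the case theta + margin > pi.
    """
    logits = []
    for index, cosine in enumerate(cosines):
        if index == target:
            clipped = max(-1.0, min(1.0, cosine))
            cosine = math.cos(math.acos(clipped) + margin)
        logits.append(scale * cosine)
    return logits


def cross_entropy(logits, target):
    """Numerically stable softmax cross-entropy for one sample."""
    peak = max(logits)
    log_sum = peak + math.log(sum(math.exp(v - peak) for v in logits))
    return log_sum - logits[target]


def template_mse(student, teacher):
    """Mean squared error between a student and a teacher embedding."""
    return sum((s - t) ** 2 for s, t in zip(student, teacher)) / len(student)


def kd_loss(cosines, target, student, teacher, kd_weight=1.0):
    """ArcFace loss plus a weighted template-matching term (kd_weight is assumed)."""
    classification = cross_entropy(arcface_logits(cosines, target), target)
    return classification + kd_weight * template_mse(student, teacher)
```

Here `cosines` stands for the cosine similarities between one normalized embedding and each class center. When the student embedding already matches the teacher embedding, the distillation term is zero and `kd_loss` reduces to the plain ArcFace loss.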

| Model | Parameters (M) | Configuration | Log | Pretrained model |
|---|---|---|---|---|
| PocketNetS-128 | 0.92 | Config | log | Pretrained-model |
| PocketNetS-256 | 0.99 | Config | log | Pretrained-model |
| PocketNetM-128 | 1.68 | Config | log | Pretrained-model |
| PocketNetM-256 | 1.75 | Config | log | Pretrained-model |
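As a back-of-the-envelope illustration of what the parameter counts in the table mean for storage, the sketch below converts them to an approximate weight size. It assumes 32-bit floating-point weights (4 bytes per parameter) and ignores any file-format overhead or non-parameter buffers, so the numbers are estimates and not measured sizes of the released checkpoints. The function name `estimated_size_mb` is hypothetical.

```python
# Parameter counts in millions, copied from the table above.
PARAMETERS_IN_MILLIONS = {
    "PocketNetS-128": 0.92,
    "PocketNetS-256": 0.99,
    "PocketNetM-128": 1.68,
    "PocketNetM-256": 1.75,
}


def estimated_size_mb(parameters_in_millions, bytes_per_parameter=4):
    """Approximate weight storage in megabytes (10**6 bytes).

    Millions of parameters times bytes per parameter gives millions of bytes.
    The default of 4 bytes assumes float32 weights.
    """
    return parameters_in_millions * bytes_per_parameter


for name, millions in PARAMETERS_IN_MILLIONS.items():
    print(f"{name}: about {estimated_size_mb(millions):.2f} MB of weights")
```

Passing `bytes_per_parameter=2` gives the corresponding estimate for half-precision storage.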

Differentiable architecture search training

To-do

- Add pretrained model

- Training configuration

- Add NAS code

- Add evaluation results

- Add requirements

If you use any of the provided code in this repository, please cite the following paper:

    @misc{boutros2021pocketnet,
      title={PocketNet: Extreme Lightweight Face Recognition Network using Neural Architecture Search and Multi-Step Knowledge Distillation},
      author={Fadi Boutros and Patrick Siebke and Marcel Klemt and Naser Damer and Florian Kirchbuchner and Arjan Kuijper},
      year={2021},
      eprint={2108.10710},
      archivePrefix={arXiv},
      primaryClass={cs.CV}
    }