306 Repositories

Python knowledge-distillation Libraries

Official code for our CVPR '22 paper "Dataset Distillation by Matching Training Trajectories"

Dataset Distillation by Matching Training Trajectories Project Page | Paper This repo contains code for training expert trajectories and distilling sy

🐤 Nix-TTS: An Incredibly Lightweight End-to-End Text-to-Speech Model via Non End-to-End Distillation

🐤 Nix-TTS An Incredibly Lightweight End-to-End Text-to-Speech Model via Non End-to-End Distillation Rendi Chevi, Radityo Eko Prasojo, Alham Fikri Aji

![[ACL 2022] LinkBERT: A Knowledgeable Language Model 😎 Pretrained with Document Links](https://github.com/michiyasunaga/LinkBERT/raw/main/figs/overview.png)

[ACL 2022] LinkBERT: A Knowledgeable Language Model 😎 Pretrained with Document Links

LinkBERT: A Knowledgeable Language Model Pretrained with Document Links This repo provides the model, code & data of our paper: LinkBERT: Pretraining

Learn the basics of Python. These tutorials are for Python beginners. so even if you have no prior knowledge of Python, you won’t face any difficulty understanding these tutorials.

01_Python_Introduction Introduction 👋 Python is a modern, robust, high level programming language. It is very easy to pick up even if you are complet

I've demonstrated the working of the decision tree-based ID3 algorithm. Use an appropriate data set for building the decision tree and apply this knowledge to classify a new sample. All the steps have been explained in detail with graphics for better understanding.

Python Decision Tree and Random Forest Decision Tree A Decision Tree is one of the popular and powerful machine learning algorithms that I have learne

Anomaly Detection via Reverse Distillation from One-Class Embedding

Anomaly Detection via Reverse Distillation from One-Class Embedding Implementation (Official Code ⭐️ ⭐️ ⭐️ ) Environment pytorch == 1.91 torchvision =

(CVPR 2022) A minimalistic mapless end-to-end stack for joint perception, prediction, planning and control for self driving.

LAV Learning from All Vehicles Dian Chen, Philipp Krähenbühl CVPR 2022 (also arXiV 2203.11934) This repo contains code for paper Learning from all veh

TigerLily: Finding drug interactions in silico with the Graph.

Drug Interaction Prediction with Tigerlily Documentation | Example Notebook | Youtube Video | Project Report Tigerlily is a TigerGraph based system de

Precision Medicine Knowledge Graph (PrimeKG)

PrimeKG Website | bioRxiv Paper | Harvard Dataverse Precision Medicine Knowledge Graph (PrimeKG) presents a holistic view of diseases. PrimeKG integra

ACL 2022: CAKE: A Scalable Commonsense-Aware Framework For Multi-View Knowledge Graph Completion

CAKE ACL 2022: CAKE: A Scalable Commonsense-Aware Framework For Multi-View Knowledge Graph Completion Introduction This is the PyTorch implementation

The implementation our EMNLP 2021 paper "Enhanced Language Representation with Label Knowledge for Span Extraction".

LEAR The implementation our EMNLP 2021 paper "Enhanced Language Representation with Label Knowledge for Span Extraction". See below for an overview of

LiDAR Distillation: Bridging the Beam-Induced Domain Gap for 3D Object Detection

LiDAR Distillation Paper | Model LiDAR Distillation: Bridging the Beam-Induced Domain Gap for 3D Object Detection Yi Wei, Zibu Wei, Yongming Rao, Jiax

Code for the SIGIR 2022 paper "Hybrid Transformer with Multi-level Fusion for Multimodal Knowledge Graph Completion"

MKGFormer Code for the SIGIR 2022 paper "Hybrid Transformer with Multi-level Fusion for Multimodal Knowledge Graph Completion" Model Architecture Illu

Python/Rust implementations and notes from Proofs Arguments and Zero Knowledge

What is this? This is where I'll be collecting resources related to the Study Group on Dr. Justin Thaler's Proofs Arguments And Zero Knowledge Book. T

The code is for the paper "A Self-Distillation Embedded Supervised Affinity Attention Model for Few-Shot Segmentation"

SD-AANet The code is for the paper "A Self-Distillation Embedded Supervised Affinity Attention Model for Few-Shot Segmentation" [arxiv] Overview confi

Codes for SIGIR'22 Paper 'On-Device Next-Item Recommendation with Self-Supervised Knowledge Distillation'

OD-Rec Codes for SIGIR'22 Paper 'On-Device Next-Item Recommendation with Self-Supervised Knowledge Distillation' Paper, saved teacher models and Andro

Author: Wenhao Yu ([email protected]). ACL 2022. Commonsense Reasoning on Knowledge Graph for Text Generation

Diversifying Commonsense Reasoning Generation on Knowledge Graph Introduction -- This is the pytorch implementation of our ACL 2022 paper "Diversifyin

Ensemble Knowledge Guided Sub-network Search and Fine-tuning for Filter Pruning

Ensemble Knowledge Guided Sub-network Search and Fine-tuning for Filter Pruning This repository is official Tensorflow implementation of paper: Ensemb

source code for 'Finding Valid Adjustments under Non-ignorability with Minimal DAG Knowledge' by A. Shah, K. Shanmugam, K. Ahuja

Source code for "Finding Valid Adjustments under Non-ignorability with Minimal DAG Knowledge" Reference: Abhin Shah, Karthikeyan Shanmugam, Kartik Ahu

Locally Differentially Private Distributed Deep Learning via Knowledge Distillation (LDP-DL)

Locally Differentially Private Distributed Deep Learning via Knowledge Distillation (LDP-DL) A preprint version of our paper: Link here This is a samp

Kglab - an abstraction layer in Python for building knowledge graphs

Graph Data Science: an abstraction layer in Python for building knowledge graphs, integrated with popular graph libraries – atop Pandas, RDFlib, pySHACL, RAPIDS, NetworkX, iGraph, PyVis, pslpython, pyarrow, etc.

Create a semantic search engine with a neural network (i.e. BERT) whose knowledge base can be updated

Create a semantic search engine with a neural network (i.e. BERT) whose knowledge base can be updated. This engine can later be used for downstream tasks in NLP such as Q&A, summarization, generation, and natural language understanding (NLU).

Rank-One Model Editing for Locating and Editing Factual Knowledge in GPT

Rank-One Model Editing (ROME) This repository provides an implementation of Rank-One Model Editing (ROME) on auto-regressive transformers (GPU-only).

Large-scale Knowledge Graph Construction with Prompting

Large-scale Knowledge Graph Construction with Prompting across tasks (predictive and generative), and modalities (language, image, vision + language, etc.)

Source code for "Understanding Knowledge Integration in Language Models with Graph Convolutions"

Graph Convolution Simulator (GCS) Source code for "Understanding Knowledge Integration in Language Models with Graph Convolutions" Requirements: PyTor

Espial is an engine for automated organization and discovery of personal knowledge

Live Demo (currently not running, on it) Espial is an engine for automated organization and discovery in knowledge bases. It can be adapted to run wit

Tracking Progress in Question Answering over Knowledge Graphs

Tracking Progress in Question Answering over Knowledge Graphs Table of contents Question Answering Systems with Descriptions The QA Systems Table cont

OntoProtein: Protein Pretraining With Ontology Embedding

OntoProtein This is the implement of the paper "OntoProtein: Protein Pretraining With Ontology Embedding". OntoProtein is an effective method that mak

Author Disambiguation using Knowledge Graph Embeddings with Literals

Author Name Disambiguation with Knowledge Graph Embeddings using Literals This is the repository for the master thesis project on Knowledge Graph Embe

HistoKT: Cross Knowledge Transfer in Computational Pathology

HistoKT: Cross Knowledge Transfer in Computational Pathology Exciting News! HistoKT has been accepted to ICASSP 2022. HistoKT: Cross Knowledge Transfe

Improving Object Detection by Label Assignment Distillation

Improving Object Detection by Label Assignment Distillation This is the official implementation of the WACV 2022 paper Improving Object Detection by L

This repo provides the source code & data of our paper "GreaseLM: Graph REASoning Enhanced Language Models"

GreaseLM: Graph REASoning Enhanced Language Models This repo provides the source code & data of our paper "GreaseLM: Graph REASoning Enhanced Language

"Structure-Augmented Text Representation Learning for Efficient Knowledge Graph Completion"(WWW 2021)

STAR_KGC This repo contains the source code of the paper accepted by WWW'2021. "Structure-Augmented Text Representation Learning for Efficient Knowled

CSKG is a commonsense knowledge graph that combines seven popular sources into a consolidated representation

CSKG: The CommonSense Knowledge Graph CSKG is a commonsense knowledge graph that combines seven popular sources into a consolidated representation: AT

PyTorch source code for Distilling Knowledge by Mimicking Features

LSHFM.detection This is the PyTorch source code for Distilling Knowledge by Mimicking Features. And this project contains code for object detection wi

STonKGs is a Sophisticated Transformer that can be jointly trained on biomedical text and knowledge graphs

STonKGs STonKGs is a Sophisticated Transformer that can be jointly trained on biomedical text and knowledge graphs. This multimodal Transformer combin

Pytorch implementation of ICASSP 2022 paper Attention Probe: Vision Transformer Distillation in the Wild

Attention Probe: Vision Transformer Distillation in the Wild Jiahao Wang, Mingdeng Cao, Shuwei Shi, Baoyuan Wu, Yujiu Yang In ICASSP 2022 This code is

Attention Probe: Vision Transformer Distillation in the Wild

Attention Probe: Vision Transformer Distillation in the Wild Jiahao Wang, Mingdeng Cao, Shuwei Shi, Baoyuan Wu, Yujiu Yang In ICASSP 2022 This code is

Language Models as Zero-Shot Planners: Extracting Actionable Knowledge for Embodied Agents

Language Models as Zero-Shot Planners: Extracting Actionable Knowledge for Embodied Agents [Project Page] [Paper] [Video] Wenlong Huang1, Pieter Abbee

The goal of the exercises below is to evaluate the candidate knowledge and problem solving expertise regarding the main development focuses for the iFood ML Platform team: MLOps and Feature Store development.

The goal of the exercises below is to evaluate the candidate knowledge and problem solving expertise regarding the main development focuses for the iFood ML Platform team: MLOps and Feature Store development.

🚀 PyTorch Implementation of "Progressive Distillation for Fast Sampling of Diffusion Models(v-diffusion)"

PyTorch Implementation of "Progressive Distillation for Fast Sampling of Diffusion Models(v-diffusion)" Unofficial PyTorch Implementation of Progressi

CKD - Collaborative Knowledge Distillation for Heterogeneous Information Network Embedding

Collaborative Knowledge Distillation for Heterogeneous Information Network Embed

A Unified Framework and Analysis for Structured Knowledge Grounding

UnifiedSKG 📚 : Unifying and Multi-Tasking Structured Knowledge Grounding with Text-to-Text Language Models Code for paper UnifiedSKG: Unifying and Mu

Implementations for the ICLR-2021 paper: SEED: Self-supervised Distillation For Visual Representation.

Implementations for the ICLR-2021 paper: SEED: Self-supervised Distillation For Visual Representation.

A Deep Learning Based Knowledge Extraction Toolkit for Knowledge Base Population

DeepKE is a knowledge extraction toolkit supporting low-resource and document-level scenarios for entity, relation and attribute extraction. We provide comprehensive documents, Google Colab tutorials, and online demo for beginners.

Source code for the BMVC-2021 paper "SimReg: Regression as a Simple Yet Effective Tool for Self-supervised Knowledge Distillation".

SimReg: A Simple Regression Based Framework for Self-supervised Knowledge Distillation Source code for the paper "SimReg: Regression as a Simple Yet E

VisionKG: Vision Knowledge Graph

VisionKG: Vision Knowledge Graph Official Repository of VisionKG by Anh Le-Tuan, Trung-Kien Tran, Manh Nguyen-Duc, Jicheng Yuan, Manfred Hauswirth and

Repo for the paper Extrapolating from a Single Image to a Thousand Classes using Distillation

Extrapolating from a Single Image to a Thousand Classes using Distillation by Yuki M. Asano* and Aaqib Saeed* (*Equal Contribution) Extrapolating from

TransferNet: Learning Transferrable Knowledge for Semantic Segmentation with Deep Convolutional Neural Network

TransferNet: Learning Transferrable Knowledge for Semantic Segmentation with Deep Convolutional Neural Network Created by Seunghoon Hong, Junhyuk Oh,

Code release for "MERLOT Reserve: Neural Script Knowledge through Vision and Language and Sound"

merlot_reserve Code release for "MERLOT Reserve: Neural Script Knowledge through Vision and Language and Sound" MERLOT Reserve (in submission) is a mo

Intel® Neural Compressor is an open-source Python library running on Intel CPUs and GPUs

Intel® Neural Compressor targeting to provide unified APIs for network compression technologies, such as low precision quantization, sparsity, pruning, knowledge distillation, across different deep learning frameworks to pursue optimal inference performance.

Using knowledge-informed machine learning on the PRONOSTIA (FEMTO) and IMS bearing data sets. Predict remaining-useful-life (RUL).

Knowledge Informed Machine Learning using a Weibull-based Loss Function Exploring the concept of knowledge-informed machine learning with the use of a

Demonstration of transfer of knowledge and generalization with distillation

Distilling-the-Knowledge-in-a-Neural-Network This is an implementation of a part of the paper "Distilling the Knowledge in a Neural Network" (https://

Teaches a student network from the knowledge obtained via training of a larger teacher network

Distilling-the-knowledge-in-neural-network Teaches a student network from the knowledge obtained via training of a larger teacher network This is an i

A PyTorch implementation of the continual learning experiments with deep neural networks

Brain-Inspired Replay A PyTorch implementation of the continual learning experiments with deep neural networks described in the following paper: Brain

Paper Title: Heterogeneous Knowledge Distillation for Simultaneous Infrared-Visible Image Fusion and Super-Resolution

HKDnet Paper Title: "Heterogeneous Knowledge Distillation for Simultaneous Infrared-Visible Image Fusion and Super-Resolution" Email: 18186470991@163.

Predico Disease Prediction system based on symptoms provided by patient- using Python-Django & Machine Learning

Predico Disease Prediction system based on symptoms provided by patient- using Python-Django & Machine Learning

A next-generation curated knowledge sharing platform for data scientists and other technical professions.

Knowledge Repo The Knowledge Repo project is focused on facilitating the sharing of knowledge between data scientists and other technical roles using

An Extendible (General) Continual Learning Framework based on Pytorch - official codebase of Dark Experience for General Continual Learning

Mammoth - An Extendible (General) Continual Learning Framework for Pytorch NEWS STAY TUNED: We are working on an update of this repository to include

Sparse Progressive Distillation: Resolving Overfitting under Pretrain-and-Finetune Paradigm

Sparse Progressive Distillation: Resolving Overfitting under Pretrain-and-Finetu

Pure-python-server - A blogging platform written in pure python for developer to share their coding knowledge

Pure Python web server - PyProject A blogging platform written in pure python (n

Pytorch implementation of paper Semi-supervised Knowledge Transfer for Deep Learning from Private Training Data

Pytorch implementation of paper Semi-supervised Knowledge Transfer for Deep Learning from Private Training Data

CCCL: Contrastive Cascade Graph Learning.

CCGL: Contrastive Cascade Graph Learning This repo provides a reference implementation of Contrastive Cascade Graph Learning (CCGL) framework as descr

Code for hyperboloid embeddings for knowledge graph entities

Implementation for the papers: Self-Supervised Hyperboloid Representations from Logical Queries over Knowledge Graphs, Nurendra Choudhary, Nikhil Rao,

PyTorch code for training MM-DistillNet for multimodal knowledge distillation

There is More than Meets the Eye: Self-Supervised Multi-Object Detection and Tracking with Sound by Distilling Multimodal Knowledge MM-DistillNet is a

Official implementation for paper Knowledge Bridging for Empathetic Dialogue Generation (AAAI 2021).

Knowledge Bridging for Empathetic Dialogue Generation This is the official implementation for paper Knowledge Bridging for Empathetic Dialogue Generat

The code for our paper "AutoSF: Searching Scoring Functions for Knowledge Graph Embedding"

AutoSF The code for our paper "AutoSF: Searching Scoring Functions for Knowledge Graph Embedding" and this paper has been accepted by ICDE2020. News:

Build a medical knowledge graph based on Unified Language Medical System (UMLS)

UMLS-Graph Build a medical knowledge graph based on Unified Language Medical System (UMLS) Requisite Install MySQL Server 5.6 and import UMLS data int

Weak-supervised Visual Geo-localization via Attention-based Knowledge Distillation

Weak-supervised Visual Geo-localization via Attention-based Knowledge Distillation Introduction WAKD is a PyTorch implementation for our ICPR-2022 pap

Build an Amazon SageMaker Pipeline to Transform Raw Texts to A Knowledge Graph

Build an Amazon SageMaker Pipeline to Transform Raw Texts to A Knowledge Graph This repository provides a pipeline to create a knowledge graph from ra

A pipeline for making highlighted text stand-alone.

title emoji colorFrom colorTo sdk app_file pinned decontextualizer 📤 green gray streamlit main.py false Decontextualizer As a second step in improvin

A knowledge base construction engine for richly formatted data

Fonduer is a Python package and framework for building knowledge base construction (KBC) applications from richly formatted data. Note that Fonduer is

This code provides a PyTorch implementation for OTTER (Optimal Transport distillation for Efficient zero-shot Recognition), as described in the paper.

Data Efficient Language-Supervised Zero-Shot Recognition with Optimal Transport Distillation This repository contains PyTorch evaluation code, trainin

Pretrained language model and its related optimization techniques developed by Huawei Noah's Ark Lab.

Pretrained Language Model This repository provides the latest pretrained language models and its related optimization techniques developed by Huawei N

LUKE -- Language Understanding with Knowledge-based Embeddings

LUKE (Language Understanding with Knowledge-based Embeddings) is a new pre-trained contextualized representation of words and entities based on transf

Large-scale open domain KNOwledge grounded conVERsation system based on PaddlePaddle

Knover Knover is a toolkit for knowledge grounded dialogue generation based on PaddlePaddle. Knover allows researchers and developers to carry out eff

PyContinual (An Easy and Extendible Framework for Continual Learning)

PyContinual (An Easy and Extendible Framework for Continual Learning) Easy to Use You can sumply change the baseline, backbone and task, and then read

A computational block to solve entity alignment over textual attributes in a knowledge graph creation pipeline.

How to apply? Create your config.ini file following the example provided in config.ini Choose one of the options below to run: Run with Python3 pip in

Sleep staging from ECG, assisted with EEG

Sleep_Staging_Knowledge Distillation This codebase implements knowledge distillation approach for ECG based sleep staging assisted by EEG based sleep

Official implementation of the paper "Lightweight Deep CNN for Natural Image Matting via Similarity Preserving Knowledge Distillation"

Lightweight-Deep-CNN-for-Natural-Image-Matting-via-Similarity-Preserving-Knowledge-Distillation Introduction Accepted at IEEE Signal Processing Letter

Mask-invariant Face Recognition through Template-level Knowledge Distillation

Mask-invariant Face Recognition through Template-level Knowledge Distillation This is the official repository of "Mask-invariant Face Recognition thro

In-memory Graph Database and Knowledge Graph with Natural Language Interface, compatible with Pandas

CogniPy for Pandas - In-memory Graph Database and Knowledge Graph with Natural Language Interface Whats in the box Reasoning, exploration of RDF/OWL,

Scripts and outputs related to the paper Prediction of Adverse Biological Effects of Chemicals Using Knowledge Graph Embeddings.

Knowledge Graph Embeddings and Chemical Effect Prediction, 2020. Scripts and outputs related to the paper Prediction of Adverse Biological Effects of

Wikidated : An Evolving Knowledge Graph Dataset of Wikidata’s Revision History

Wikidated Wikidated 1.0 is a dataset of Wikidata’s full revision history, which encodes changes between Wikidata revisions as sets of deletions and ad

K-PLUG: Knowledge-injected Pre-trained Language Model for Natural Language Understanding and Generation in E-Commerce (EMNLP Founding 2021)

Introduction K-PLUG: Knowledge-injected Pre-trained Language Model for Natural Language Understanding and Generation in E-Commerce. Installation PyTor

Code for "Learning From Multiple Experts: Self-paced Knowledge Distillation for Long-tailed Classification", ECCV 2020 Spotlight

Learning From Multiple Experts: Self-paced Knowledge Distillation for Long-tailed Classification Implementation of "Learning From Multiple Experts: Se

Conflict-aware Inference of Python Compatible Runtime Environments with Domain Knowledge Graph, ICSE 2022

PyCRE Conflict-aware Inference of Python Compatible Runtime Environments with Domain Knowledge Graph, ICSE 2022 Dependencies This project is developed

KIDA: Knowledge Inheritance in Data Aggregation

KIDA: Knowledge Inheritance in Data Aggregation This project releases our 1st place solution on NeurIPS2021 ML4CO Dual Task. Slide and model weights a

Interactive Visualization to empower domain experts to align ML model behaviors with their knowledge.

An interactive visualization system designed to helps domain experts responsibly edit Generalized Additive Models (GAMs). For more information, check

Meandering In Networks of Entities to Reach Verisimilar Answers

MINERVA Meandering In Networks of Entities to Reach Verisimilar Answers Code and models for the paper Go for a Walk and Arrive at the Answer - Reasoni

Python implementation of ADD: Frequency Attention and Multi-View based Knowledge Distillation to Detect Low-Quality Compressed Deepfake Images, AAAI2022.

ADD: Frequency Attention and Multi-View based Knowledge Distillation to Detect Low-Quality Compressed Deepfake Images Binh M. Le & Simon S. Woo, "ADD:

Add a help desk or knowledge base to your Django project with only a few lines of boilerplate code.

This project is no longer maintained. If you are interested in taking over the project, email [email protected]. Welcome to django-knowledge! django-

Perturbed Self-Distillation: Weakly Supervised Large-Scale Point Cloud Semantic Segmentation (ICCV2021)

Perturbed Self-Distillation: Weakly Supervised Large-Scale Point Cloud Semantic Segmentation (ICCV2021) This is the implementation of PSD (ICCV 2021),

Federated learning on graph, especially on graph neural networks (GNNs), knowledge graph, and private GNN.

Federated learning on graph, especially on graph neural networks (GNNs), knowledge graph, and private GNN.

The Codebase for Causal Distillation for Language Models.

Causal Distillation for Language Models Zhengxuan Wu*,Atticus Geiger*, Josh Rozner, Elisa Kreiss, Hanson Lu, Thomas Icard, Christopher Potts, Noah D.

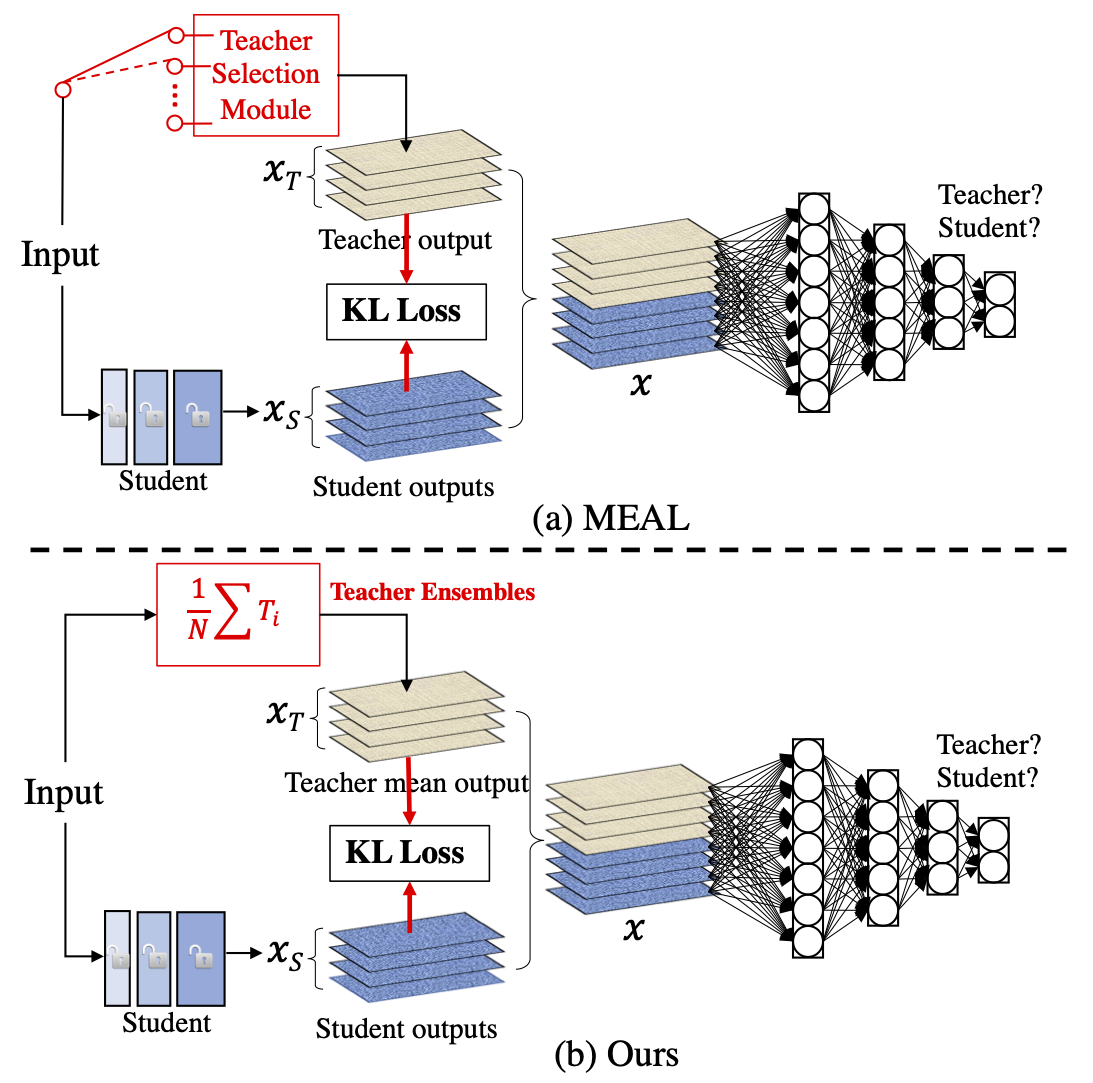

MEAL V2: Boosting Vanilla ResNet-50 to 80%+ Top-1 Accuracy on ImageNet without Tricks

MEAL-V2 This is the official pytorch implementation of our paper: "MEAL V2: Boosting Vanilla ResNet-50 to 80%+ Top-1 Accuracy on ImageNet without Tric

Open-CyKG: An Open Cyber Threat Intelligence Knowledge Graph

Open-CyKG: An Open Cyber Threat Intelligence Knowledge Graph Model Description Open-CyKG is a framework that is constructed using an attenti

![[NeurIPS-2021] Slow Learning and Fast Inference: Efficient Graph Similarity Computation via Knowledge Distillation](https://github.com/canqin001/Efficient_Graph_Similarity_Computation/raw/main/Figs/our-setting.png)

[NeurIPS-2021] Slow Learning and Fast Inference: Efficient Graph Similarity Computation via Knowledge Distillation

Efficient Graph Similarity Computation - (EGSC) This repo contains the source code and dataset for our paper: Slow Learning and Fast Inference: Effici

Code for ACL 2019 Paper: "COMET: Commonsense Transformers for Automatic Knowledge Graph Construction"

To run a generation experiment (either conceptnet or atomic), follow these instructions: First Steps First clone, the repo: git clone https://github.c

Sorce code and datasets for "K-BERT: Enabling Language Representation with Knowledge Graph",

K-BERT Sorce code and datasets for "K-BERT: Enabling Language Representation with Knowledge Graph", which is implemented based on the UER framework. R