Kornia is a differentiable computer vision library for PyTorch.

It consists of a set of routines and differentiable modules to solve generic computer vision problems. At its core, the package uses PyTorch as its main backend both for efficiency and to take advantage of the reverse-mode auto-differentiation to define and compute the gradient of complex functions.
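To make "differentiable" concrete, here is a minimal sketch in plain PyTorch. It is not Kornia's own API: the helper name `sobel_magnitude` and the small `1e-6` stabilizer are assumptions made for illustration. It applies a fixed edge filter to an image tensor and lets autograd propagate a gradient back to the input pixels.

```python
import torch
import torch.nn.functional as F


def sobel_magnitude(image: torch.Tensor) -> torch.Tensor:
    """Edge magnitude of a (B, 1, H, W) image using fixed Sobel kernels."""
    kx = torch.tensor([[-1.0, 0.0, 1.0], [-2.0, 0.0, 2.0], [-1.0, 0.0, 1.0]])
    ky = kx.t()
    # Stack the horizontal and vertical kernels into shape (2, 1, 3, 3).
    kernels = torch.stack([kx, ky]).unsqueeze(1).to(image)
    grads = F.conv2d(image, kernels, padding=1)  # (B, 2, H, W)
    # The small constant keeps sqrt differentiable where the response is zero.
    return torch.sqrt((grads ** 2).sum(dim=1, keepdim=True) + 1e-6)


# The image itself is the variable we differentiate with respect to.
image = torch.rand(1, 1, 8, 8, requires_grad=True)
edges = sobel_magnitude(image)
loss = edges.mean()
loss.backward()  # reverse-mode autodiff fills image.grad
```

After `backward()`, `image.grad` has the same shape as `image`, so an operator like this can sit inside a network and be trained through.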

Overview

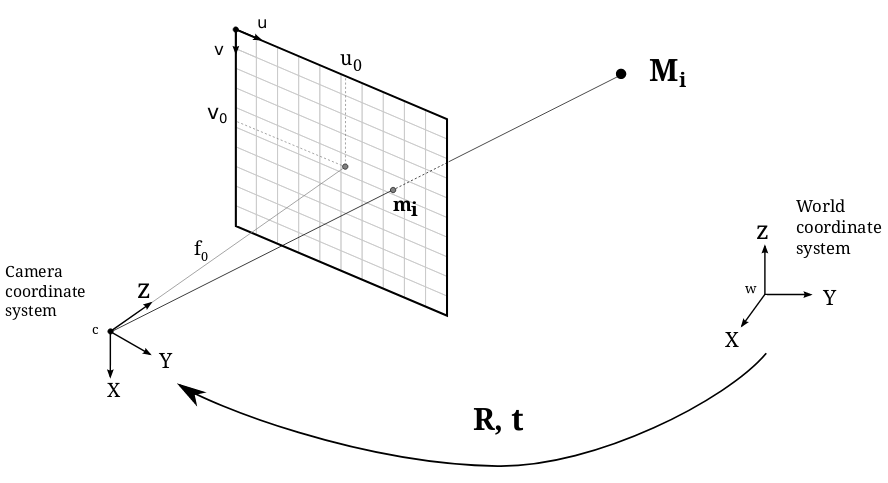

Inspired by existing packages, this library is composed of a set of packages containing operators that work directly on tensors and can be inserted into neural networks. These operators let models be trained to perform image transformations, epipolar geometry, depth estimation, and low-level image processing such as filtering and edge detection.

At a granular level, Kornia is a library that consists of the following components:

| Component | Description |
|---|---|
| kornia | a differentiable computer vision library with strong GPU support |
| kornia.augmentation | a module to perform data augmentation on the GPU |
| kornia.color | a set of routines to perform color space conversions |
| kornia.contrib | a collection of user-contributed and experimental operators |
| kornia.enhance | a module to perform normalization and intensity transformations |
| kornia.feature | a module to perform feature detection |
| kornia.filters | a module to perform image filtering and edge detection |
| kornia.geometry | a geometric computer vision library to perform image transformations, 3D linear algebra and conversions using different camera models |
| kornia.losses | a stack of loss functions to solve different vision tasks |
| kornia.morphology | a module to perform morphological operations |
| kornia.utils | image-to-tensor utilities and metrics for vision problems |

Installation

From pip:

pip install kornia

Other installation options

From source:

python setup.py install

From source with symbolic links:

pip install -e .

From source using pip:

pip install git+https://github.com/kornia/kornia

Examples

Run our Jupyter notebook tutorials to learn how to use the library.

Cite

If you use kornia in your research-related documents, we recommend that you cite the paper. See more in CITATION.

@inproceedings{eriba2019kornia,
  author    = {E. Riba and D. Mishkin and D. Ponsa and E. Rublee and G. Bradski},
  title     = {Kornia: an Open Source Differentiable Computer Vision Library for PyTorch},
  booktitle = {Winter Conference on Applications of Computer Vision},
  year      = {2020},
  url       = {https://arxiv.org/pdf/1910.02190.pdf}
}

Contributing

We appreciate all contributions. If you are planning to contribute bug fixes, please do so without any further discussion. If you plan to contribute new features, utility functions or extensions, please first open an issue and discuss the feature with us. Please consider reading the CONTRIBUTING notes. Participation in this open source project is subject to the Code of Conduct.

Community

- Forums: discuss implementations, research, etc. GitHub Forums

- GitHub Issues: bug reports, feature requests, install issues, RFCs, thoughts, etc. OPEN

- Slack: Join our workspace to keep in touch with our core contributors and be part of our community. JOIN HERE

- For general information, please visit our website at www.kornia.org

The good old Lowe ratio test works well for descriptor matching (implemented as `match_snn` and `match_smnn` in kornia), but it is often not enough: it does not take keypoint positions into account.

With this version we started to add geometry-aware descriptor matchers, starting with [FGINN](https://arxiv.org/abs/1503.02619) and [AdaLAM](https://arxiv.org/abs/2006.04250). Later we plan to add something like SuperGlue (but a free version, of course).
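For readers unfamiliar with the ratio test mentioned above, here is a rough pure-Python sketch of the idea. It is not Kornia's implementation: the function name `ratio_test_matches`, the list-based descriptors and the `0.8` threshold are assumptions for illustration only. A match is kept when the nearest descriptor is clearly closer than the second nearest.

```python
import math
from typing import List, Sequence, Tuple


def ratio_test_matches(
    desc1: Sequence[Sequence[float]],
    desc2: Sequence[Sequence[float]],
    threshold: float = 0.8,  # illustrative value, tune per descriptor
) -> List[Tuple[int, int, float]]:
    """Return (index1, index2, ratio) for matches that pass the ratio test."""
    if len(desc2) < 2:
        raise ValueError("need at least two candidate descriptors")
    matches = []
    for i, d1 in enumerate(desc1):
        # Rank all candidates in desc2 by Euclidean distance to d1.
        ranked = sorted((math.dist(d1, d2), j) for j, d2 in enumerate(desc2))
        (best, j_best), (second, _) = ranked[0], ranked[1]
        # Keep the match only if the best is clearly closer than the runner-up.
        ratio = best / second if second > 0 else 1.0
        if ratio <= threshold:
            matches.append((i, j_best, ratio))
    return matches


desc1 = [[0.0, 0.0], [5.0, 5.0]]
desc2 = [[0.1, 0.0], [4.0, 4.0], [6.0, 6.0]]
matches = ratio_test_matches(desc1, desc2)
```

In this toy input the first descriptor has one unambiguous neighbour and is kept, while the second sits equally far from two candidates and is rejected. Only descriptor distances are used, never keypoint coordinates, which is the limitation the geometry-aware matchers aim to address.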
