🔥 Features

- Monitor running experiments from a mobile phone (or laptop)

- Monitor hardware usage on any computer with a single command

- Integrate with just 2 lines of code (see examples below)

- Keep track of experiments, including information such as git commits, configurations and hyper-parameters

- Keep TensorBoard logs organized

- Save and load checkpoints

- API for custom visualizations

- Pretty logs of training progress

- Change hyper-parameters while the model is training

- Open source! We also have a small hosted server for the mobile web app

Installation

You can install this package using pip.

pip install labml

PyTorch example

from labml import tracker, experiment

with experiment.record(name='sample', exp_conf=conf):

    for i in range(50):

        loss, accuracy = train()

        tracker.save(i, {'loss': loss, 'accuracy': accuracy})
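The example above assumes that `conf` and `train()` already exist. To try it without a real model, a minimal sketch of stand-ins could look like the following. Both names, the keys in `conf`, and the toy loss curve are hypothetical placeholders and not part of labml.

```python
import math
import random

# Hypothetical hyper-parameters; a plain dict of simple values is assumed here.
conf = {'batch_size': 20, 'learning_rate': 0.01}

_rng = random.Random(0)  # fixed seed so the toy numbers are reproducible
_step = 0


def train():
    """Toy stand-in for one training step; returns (loss, accuracy)."""
    global _step
    _step += 1
    noise = _rng.uniform(-0.01, 0.01)
    # Loss decays exponentially with the step count and stays positive.
    loss = math.exp(-conf['learning_rate'] * _step) + 0.02 + noise
    # Fake accuracy, clamped to the range [0, 1].
    accuracy = min(1.0, max(0.0, 1.0 - loss))
    return loss, accuracy
```

With these definitions in scope, the loop in the example has concrete values to pass to `tracker.save`. Replace `train()` with your own training step once the integration works.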

PyTorch Lightning example

import pytorch_lightning as pl

from labml import experiment

from labml.utils.lightening import LabMLLighteningLogger

trainer = pl.Trainer(gpus=1, max_epochs=5, progress_bar_refresh_rate=20, logger=LabMLLighteningLogger())

with experiment.record(name='sample', exp_conf=conf, disable_screen=True):

    trainer.fit(model, data_loader)

TensorFlow 2.X Keras example

from labml import experiment

from labml.utils.keras import LabMLKerasCallback

with experiment.record(name='sample', exp_conf=conf):

    for i in range(50):

        model.fit(x_train, y_train, epochs=conf['epochs'], validation_data=(x_test, y_test),

                  callbacks=[LabMLKerasCallback()], verbose=None)

📚 Documentation

Guides

- API to create experiments

- Track training metrics

- Monitored training loop and other iterators

- API for custom visualizations

- Configurations management API

- Logger for stylized logging

🖥 Screenshots

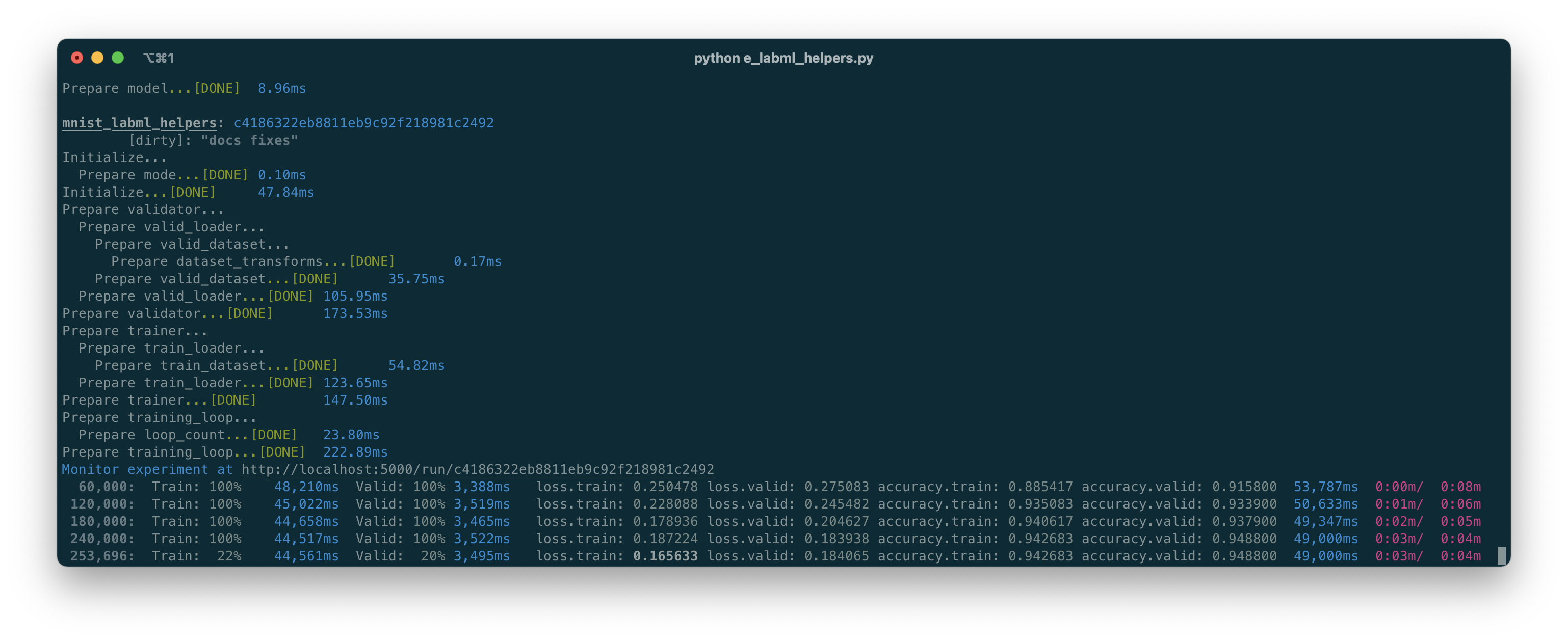

Formatted training loop output

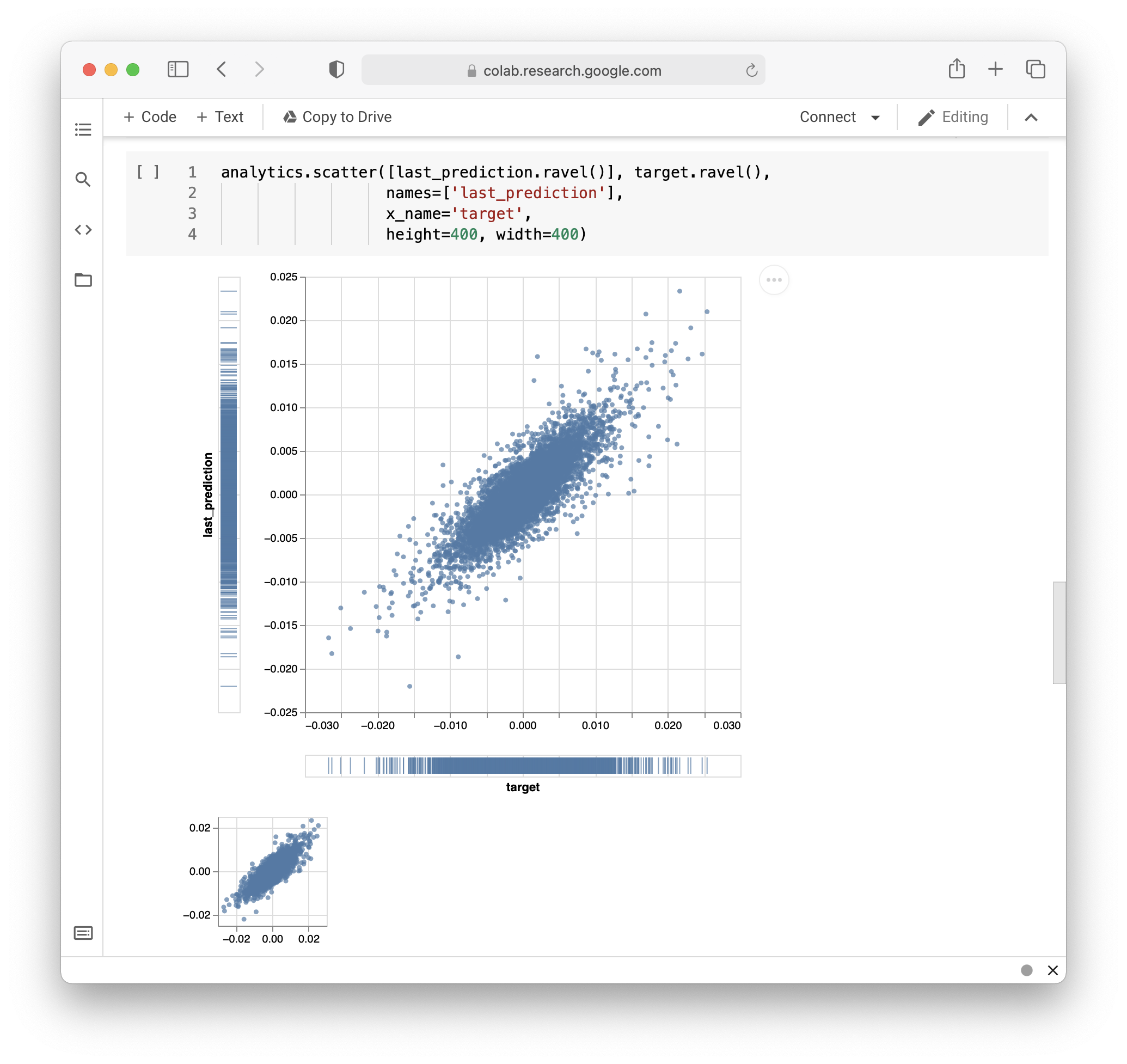

Custom visualizations based on TensorBoard logs

Tools

Hosting your own experiments server

# Install the package

pip install labml-app

# Start the server

labml app-server

Training models on the cloud

# Install the package

pip install labml_remote

# Initialize the project

labml_remote init

# Add cloud server(s) to .remote/configs.yaml

# Prepare the remote server(s)

labml_remote prepare

# Start a PyTorch distributed training job

labml_remote helper-torch-launch --cmd 'train.py' --nproc-per-node 2 --env GLOO_SOCKET_IFNAME enp1s0

Monitoring hardware usage

# Install packages and dependencies

pip install labml psutil py3nvml

# Start monitoring

labml monitor

Other Guides

Setting up a local Ubuntu workstation for deep learning

Setting up a cloud computer for deep learning

Citing

If you use LabML for academic research, please cite the library using the following BibTeX entry.

@misc{labml,

author = {Varuna Jayasiri and Nipun Wijerathne},

title = {labml.ai: A library to organize machine learning experiments},

year = {2020},

url = {https://labml.ai/},

}