BeatNet

A package for music online and offline rhythmic information analysis including music Beat, downbeat, tempo and meter tracking.

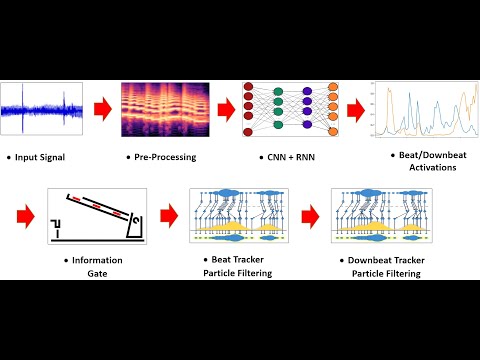

This repository contains the user package and the source code of the Monte Carlo particle flitering inference model of the "BeatNet" music online joint beat/downbeat/tempo/meter tracking system. The arxiv version of the original ISMIR-2021 paper:

In addition to the proposed online inference, we added madmom's DBN beat/downbeat inference model for the offline usages. Note that, the offline model still utilize BeatNet's neural network rather than that of Madmom which leads to better performance and significantly faster results.

Note: All models are trained using pytorch and are included in the models folder. In order to recieve the training script and the datasets data/feature handlers, shoot me an email at mheydari [at] ur.rochester.edu

System Input:

Raw audio waveform

System Output:

A vector including beats and downbeats columns, respectively with the following shape: numpy_array(num_beats, 2).

Installation command:

Approach #1: Installing binaries from the pypi website:

pip install BeatNet

Approach #2: Installing directly from the Git repository:

pip install git+https://github.com/mjhydri/BeatNet

Usage example:

From BeatNet.BeatNet import BeatNet

estimator = BeatNet(1)

Output = estimator.process("music file directory", inference_model= 'PF', plot = True)

A brief video tutorial of the system (Overview):

In order to demonstrate the performance of the system for different beat/donbeat tracking difficulties, here are three video demo examples :

1: Song Difficulty: Easy

2: Song difficulty: Medium

3: Song difficulty: Veteran

Acknowledgements:

For the input feature extraction and implementing of the beat state space, Librosa and Madmom libraries are ustilzed. Many thanks for their great jobs. This work has been partially supported by the National Science Foundation grants 1846184 and DGE-1922591.

References:

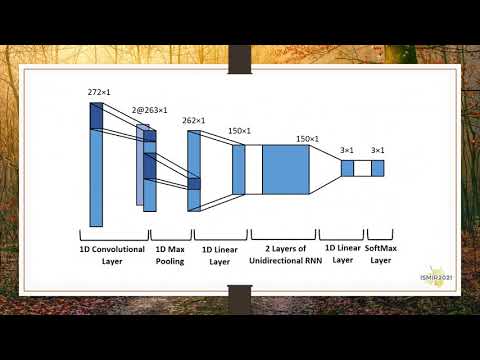

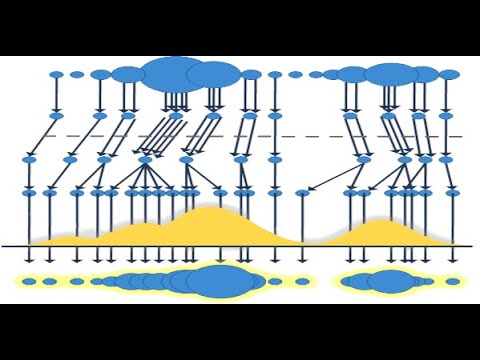

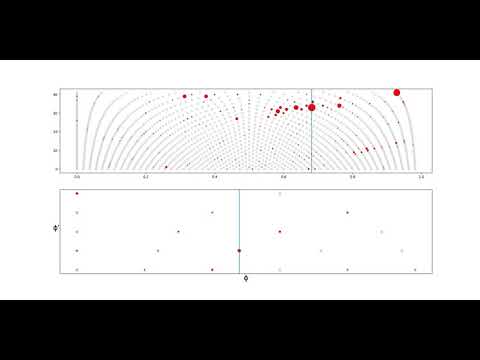

M. Heydari, F. Cwitkowitz, and Z. Duan, “BeatNet:CRNN and particle filtering for online joint beat down-beat and meter tracking,” in Proc. of the 22th Intl. Conf.on Music Information Retrieval (ISMIR), 2021.

M. Heydari and Z. Duan, “Don’t Look Back: An online beat tracking method using RNN and enhanced particle filtering,” in Proc. IEEE Int. Conf. Acoust. Speech Signal Process. (ICASSP), 2021.

I test the model on the same wav but they get different result.

I test the model on the same wav but they get different result.