Energy-based GFlowNets

Code for the paper "Generative Flow Networks for Discrete Probabilistic Modeling" by Dinghuai Zhang, Nikolay Malkin, Zhen Liu, Alexandra Volokhova, Aaron Courville, and Yoshua Bengio.

Example

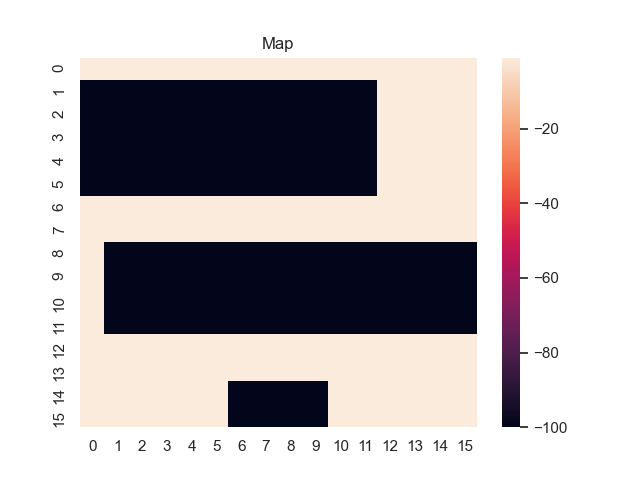

Synthetic tasks

python -m synthetic.train --data checkerboard --lr 1e-3 --type tblb --hid_layer 3 --hid 256 --print_every 100 --glr 1e-3 --zlr 1 --rand_coef 0 --back_ratio 0.5 --lin_k 1 --warmup_k 1e5 --with_mh 1

Discrete image modeling

python -m deepebm.ebm --model mlp-256 --lr 1e-4 --type tblb --hid_layer 3 --hid 256 --glr 1e-3 --zlr 1 --rand_coef 0 --back_ratio 0.5 --lin_k 1 --warmup_k 5e4 --with_mh 1 --print_every 100 --mc_num 5
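
Scripted runs

When sweeping a hyperparameter, it can be convenient to start from one of the commands above and change a single flag from a script. The following is a minimal sketch, not part of this repository: the helper name `with_overrides` is hypothetical, the override values in the usage lines are illustrative only, and it assumes every flag takes exactly one value (true for the two commands shown here) and that the command is launched from the repository root.

```python
import shlex

# Commands copied verbatim from this README.
SYNTHETIC_CMD = (
    "python -m synthetic.train --data checkerboard --lr 1e-3 --type tblb "
    "--hid_layer 3 --hid 256 --print_every 100 --glr 1e-3 --zlr 1 "
    "--rand_coef 0 --back_ratio 0.5 --lin_k 1 --warmup_k 1e5 --with_mh 1"
)
IMAGE_CMD = (
    "python -m deepebm.ebm --model mlp-256 --lr 1e-4 --type tblb "
    "--hid_layer 3 --hid 256 --glr 1e-3 --zlr 1 --rand_coef 0 "
    "--back_ratio 0.5 --lin_k 1 --warmup_k 5e4 --with_mh 1 "
    "--print_every 100 --mc_num 5"
)


def with_overrides(command, **overrides):
    """Return an argv list for `command` with some flag values replaced.

    Hypothetical helper: assumes the command has the form
    `python -m <module> --flag value --flag value ...`.
    """
    argv = shlex.split(command)
    head, rest = argv[:3], argv[3:]  # head is ["python", "-m", <module>]
    # Pair each flag with the value that follows it, preserving order.
    flags = dict(zip(rest[0::2], rest[1::2]))
    for name, value in overrides.items():
        flags[f"--{name}"] = str(value)
    return head + [token for pair in flags.items() for token in pair]


if __name__ == "__main__":
    # Illustrative override values, not recommended settings.
    print(shlex.join(with_overrides(SYNTHETIC_CMD, lr="1e-4")))
    print(shlex.join(with_overrides(IMAGE_CMD, mc_num="10")))
    # To launch a run instead of printing it:
    #   import subprocess
    #   subprocess.run(with_overrides(SYNTHETIC_CMD, lr="1e-4"), check=True)
```

Building an argv list rather than a shell string keeps each flag and value as a separate argument, so the result can be passed to `subprocess.run` without `shell=True`.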