11 Repositories

Python Parameterization-of-Hypercomplex-Multiplications Libraries

CVPR 2022 "Online Convolutional Re-parameterization"

OREPA: Online Convolutional Re-parameterization This repo is the PyTorch implementation of our paper to appear in CVPR2022 on "Online Convolutional Re

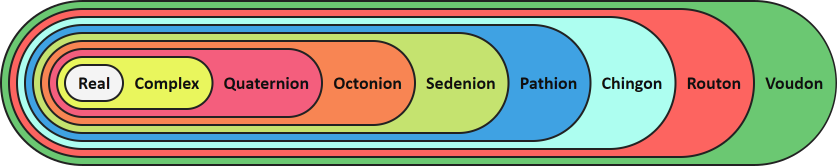

A Python library for working with arbitrary-dimension hypercomplex numbers following the Cayley-Dickson construction of algebras.

Hypercomplex A Python library for working with quaternions, octonions, sedenions, and beyond following the Cayley-Dickson construction of hypercomplex

TensorFlow implementation of PHM (Parameterization of Hypercomplex Multiplication)

Parameterization of Hypercomplex Multiplications (PHM) This repository contains the TensorFlow implementation of PHM (Parameterization of Hypercomplex

Implementation of the Paper: "Parameterized Hypercomplex Graph Neural Networks for Graph Classification" by Tuan Le, Marco Bertolini, Frank Noé and Djork-Arné Clevert

Parameterized Hypercomplex Graph Neural Networks (PHC-GNNs) PHC-GNNs (Le et al., 2021): https://arxiv.org/abs/2103.16584 PHM Linear Layer Illustration

Code for paper " AdderNet: Do We Really Need Multiplications in Deep Learning?"

AdderNet: Do We Really Need Multiplications in Deep Learning? This code is a demo of CVPR 2020 paper AdderNet: Do We Really Need Multiplications in De

Volumetric parameterization of the placenta to a flattened template

placenta-flattening A MATLAB algorithm for volumetric mesh parameterization. Developed for mapping a placenta segmentation derived from an MRI image t

Codes for 'Dual Parameterization of Sparse Variational Gaussian Processes'

Dual Parameterization of Sparse Variational Gaussian Processes Documentation | Notebooks | API reference Introduction This repository is the official

Hypercomplex Neural Networks with PyTorch

HyperNets Hypercomplex Neural Networks with PyTorch: this repository would be a container for hypercomplex neural network modules to facilitate resear

Joint parameterization and fitting of stroke clusters

StrokeStrip: Joint Parameterization and Fitting of Stroke Clusters Dave Pagurek van Mossel1, Chenxi Liu1, Nicholas Vining1,2, Mikhail Bessmeltsev3, Al

Spectral Tensor Train Parameterization of Deep Learning Layers

Spectral Tensor Train Parameterization of Deep Learning Layers This repository is the official implementation of our AISTATS 2021 paper titled "Spectr

Orthogonal Over-Parameterized Training

The inductive bias of a neural network is largely determined by the architecture and the training algorithm. To achieve good generalization, how to effectively train a neural network is of great importance. We propose a novel orthogonal over-parameterized training (OPT) framework that can provably minimize the hyperspherical energy which characterizes the diversity of neurons on a hypersphere. See our previous work -- MHE for an in-depth introduction.