68 Repositories

Python WeakVRD-Captioning Libraries

Language Models Can See: Plugging Visual Controls in Text Generation

Language Models Can See: Plugging Visual Controls in Text Generation Authors: Yixuan Su, Tian Lan, Yahui Liu, Fangyu Liu, Dani Yogatama, Yan Wang, Lin

A Persian Image Captioning model based on Vision Encoder Decoder Models of the transformers🤗.

Persian-Image-Captioning We fine-tuning the Vision Encoder Decoder Model for the task of image captioning on the coco-flickr-farsi dataset. The implem

Official repository of OFA. Paper: Unifying Architectures, Tasks, and Modalities Through a Simple Sequence-to-Sequence Learning Framework

Paper | Blog OFA is a unified multimodal pretrained model that unifies modalities (i.e., cross-modality, vision, language) and tasks (e.g., image gene

Unifying Architectures, Tasks, and Modalities Through a Simple Sequence-to-Sequence Learning Framework

Official repository of OFA. Paper: Unifying Architectures, Tasks, and Modalities Through a Simple Sequence-to-Sequence Learning Framework

End-to-end image captioning with EfficientNet-b3 + LSTM with Attention

Image captioning End-to-end image captioning with EfficientNet-b3 + LSTM with Attention Model is seq2seq model. In the encoder pretrained EfficientNet

PyTorch code for BLIP: Bootstrapping Language-Image Pre-training for Unified Vision-Language Understanding and Generation

BLIP: Bootstrapping Language-Image Pre-training for Unified Vision-Language Understanding and Generation

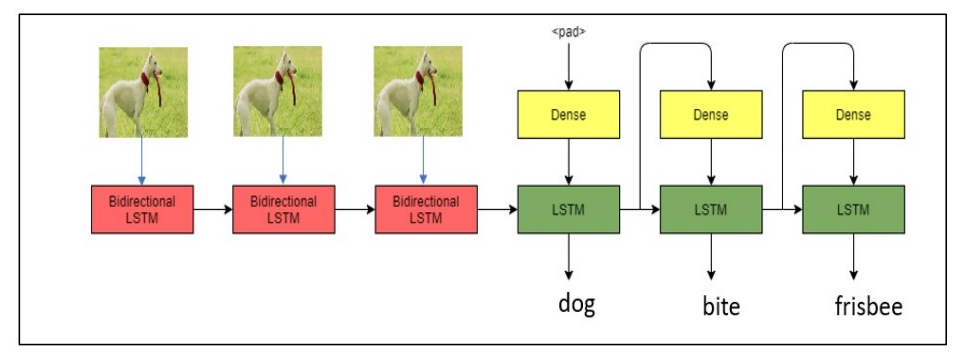

Video-Captioning - A machine Learning project to generate captions for video frames indicating the relationship between the objects in the video

Video-Captioning - A machine Learning project to generate captions for video frames indicating the relationship between the objects in the video

Image Captioning on google cloud platform based on iot

Image-Captioning-on-google-cloud-platform-based-on-iot - Image Captioning on google cloud platform based on iot

Compact Bidirectional Transformer for Image Captioning

Compact Bidirectional Transformer for Image Captioning Requirements Python 3.8 Pytorch 1.6 lmdb h5py tensorboardX Prepare Data Please use git clone --

Compact Bidirectional Transformer for Image Captioning

Compact Bidirectional Transformer for Image Captioning Requirements Python 3.8 Pytorch 1.6 lmdb h5py tensorboardX Prepare Data Please use git clone --

End-to-End Dense Video Captioning with Parallel Decoding (ICCV 2021)

PDVC Official implementation for End-to-End Dense Video Captioning with Parallel Decoding (ICCV 2021) [paper] [valse论文速递(Chinese)] This repo supports:

Bottom-up attention model for image captioning and VQA, based on Faster R-CNN and Visual Genome

bottom-up-attention This code implements a bottom-up attention model, based on multi-gpu training of Faster R-CNN with ResNet-101, using object and at

![[CVPR 2021] Scan2Cap: Context-aware Dense Captioning in RGB-D Scans](https://github.com/daveredrum/Scan2Cap/raw/main/demo/Scan2Cap.gif)

[CVPR 2021] Scan2Cap: Context-aware Dense Captioning in RGB-D Scans

Scan2Cap: Context-aware Dense Captioning in RGB-D Scans Introduction We introduce the task of dense captioning in 3D scans from commodity RGB-D sensor

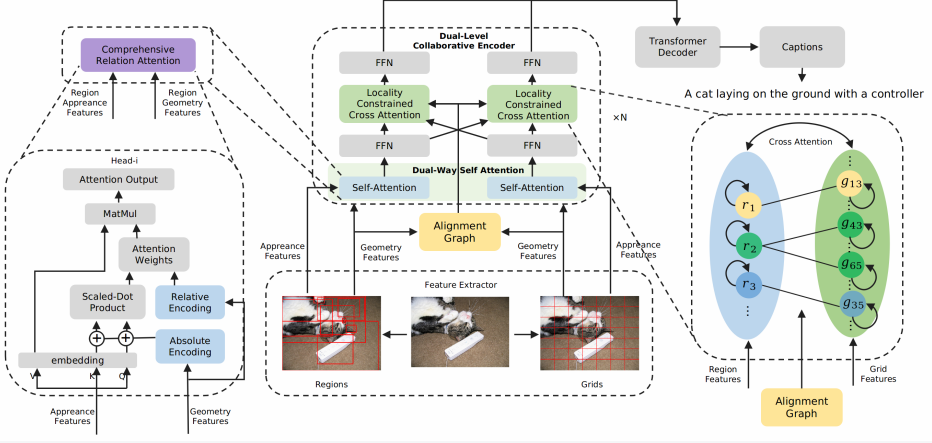

Official pytorch implementation of paper Dual-Level Collaborative Transformer for Image Captioning (AAAI 2021).

Dual-Level Collaborative Transformer for Image Captioning This repository contains the reference code for the paper Dual-Level Collaborative Transform

Official pytorch implementation of the AAAI 2021 paper Semantic Grouping Network for Video Captioning

Semantic Grouping Network for Video Captioning Hobin Ryu, Sunghun Kang, Haeyong Kang, and Chang D. Yoo. AAAI 2021. [arxiv] Environment Ubuntu 16.04 CU

LaBERT - A length-controllable and non-autoregressive image captioning model.

Length-Controllable Image Captioning (ECCV2020) This repo provides the implemetation of the paper Length-Controllable Image Captioning. Install conda

PyTorch code for: Learning to Generate Grounded Visual Captions without Localization Supervision

Learning to Generate Grounded Visual Captions without Localization Supervision This is the PyTorch implementation of our paper: Learning to Generate G

ECCV2020 paper: Fashion Captioning: Towards Generating Accurate Descriptions with Semantic Rewards. Code and Data.

This repo contains some of the codes for the following paper Fashion Captioning: Towards Generating Accurate Descriptions with Semantic Rewards. Code

Moer Grounded Image Captioning by Distilling Image-Text Matching Model

Moer Grounded Image Captioning by Distilling Image-Text Matching Model Requirements Python 3.7 Pytorch 1.2 Prepare data Please use git clone --recurse

Meshed-Memory Transformer for Image Captioning. CVPR 2020

M²: Meshed-Memory Transformer This repository contains the reference code for the paper Meshed-Memory Transformer for Image Captioning (CVPR 2020). Pl

![Implementation of 'X-Linear Attention Networks for Image Captioning' [CVPR 2020]](https://github.com/JDAI-CV/image-captioning/raw/master/images/framework.jpg)

Implementation of 'X-Linear Attention Networks for Image Captioning' [CVPR 2020]

Introduction This repository is for X-Linear Attention Networks for Image Captioning (CVPR 2020). The original paper can be found here. Please cite wi

![[CVPR 2020] Transform and Tell: Entity-Aware News Image Captioning](https://github.com/alasdairtran/transform-and-tell/raw/master/figures/teaser.png)

[CVPR 2020] Transform and Tell: Entity-Aware News Image Captioning

Transform and Tell: Entity-Aware News Image Captioning This repository contains the code to reproduce the results in our CVPR 2020 paper Transform and

WeakVRD-Captioning - Implementation of paper Improving Image Captioning with Better Use of Caption

WeakVRD-Captioning - Implementation of paper Improving Image Captioning with Better Use of Caption

PyTorch code for MART: Memory-Augmented Recurrent Transformer for Coherent Video Paragraph Captioning

MART: Memory-Augmented Recurrent Transformer for Coherent Video Paragraph Captioning PyTorch code for our ACL 2020 paper "MART: Memory-Augmented Recur

Code for paper Adaptively Aligned Image Captioning via Adaptive Attention Time

Adaptively Aligned Image Captioning via Adaptive Attention Time This repository includes the implementation for Adaptively Aligned Image Captioning vi

Implementation of the Object Relation Transformer for Image Captioning

Object Relation Transformer This is a PyTorch implementation of the Object Relation Transformer published in NeurIPS 2019. You can find the paper here

Unsupervised captioning - Code for Unsupervised Image Captioning

Unsupervised Image Captioning by Yang Feng, Lin Ma, Wei Liu, and Jiebo Luo Introduction Most image captioning models are trained using paired image-se

This project provides the code and datasets for 'CapSal: Leveraging Captioning to Boost Semantics for Salient Object Detection', CVPR 2019.

Code-and-Dataset-for-CapSal This project provides the code and datasets for 'CapSal: Leveraging Captioning to Boost Semantics for Salient Object Detec

GoodNews Everyone! Context driven entity aware captioning for news images

This is the code for a CVPR 2019 paper, called GoodNews Everyone! Context driven entity aware captioning for news images. Enjoy! Model preview: Huge T

This repository focus on Image Captioning & Video Captioning & Seq-to-Seq Learning & NLP

Awesome-Visual-Captioning Table of Contents ACL-2021 CVPR-2021 AAAI-2021 ACMMM-2020 NeurIPS-2020 ECCV-2020 CVPR-2020 ACL-2020 AAAI-2020 ACL-2019 NeurI

Show-attend-and-tell - TensorFlow Implementation of "Show, Attend and Tell"

Show, Attend and Tell Update (December 2, 2016) TensorFlow implementation of Show, Attend and Tell: Neural Image Caption Generation with Visual Attent

Deep-Learning-Image-Captioning - Implementing convolutional and recurrent neural networks in Keras to generate sentence descriptions of images

Deep Learning - Image Captioning with Convolutional and Recurrent Neural Nets ========================================================================

Image captioning - Tensorflow implementation of Show, Attend and Tell: Neural Image Caption Generation with Visual Attention

Introduction This neural system for image captioning is roughly based on the paper "Show, Attend and Tell: Neural Image Caption Generation with Visual

Videocaptioning.pytorch - A simple implementation of video captioning

pytorch implementation of video captioning recommend installing pytorch and pyth

Multilingual Image Captioning

Multilingual Image Captioning Authors: Bhavitvya Malik, Gunjan Chhablani Demo Link: https://huggingface.co/spaces/flax-community/multilingual-image-ca

TensorFlow Implementation of "Show, Attend and Tell"

Show, Attend and Tell Update (December 2, 2016) TensorFlow implementation of Show, Attend and Tell: Neural Image Caption Generation with Visual Attent

Code for the TPAMI paper: "Syntax Customized Video Captioning by Imitating Exemplar Sentences"

Syntax-Customized-Video-Captioning Code for the TPAMI paper: "Syntax Customized Video Captioning by Imitating Exemplar Sentences". This is my second w

Code for the paper "Controllable Video Captioning with an Exemplar Sentence"

SMCG Code for the paper "Controllable Video Captioning with an Exemplar Sentence" Introduction We investigate a novel and challenging task, namely con

Vision-Language Pre-training for Image Captioning and Question Answering

VLP This repo hosts the source code for our AAAI2020 work Vision-Language Pre-training (VLP). We have released the pre-trained model on Conceptual Cap

Oscar and VinVL

Oscar: Object-Semantics Aligned Pre-training for Vision-and-Language Tasks VinVL: Revisiting Visual Representations in Vision-Language Models Updates

![[CVPR 2021] VirTex: Learning Visual Representations from Textual Annotations](https://github.com/kdexd/virtex/raw/master/docs/_static/system_figure.jpg)

[CVPR 2021] VirTex: Learning Visual Representations from Textual Annotations

VirTex: Learning Visual Representations from Textual Annotations Karan Desai and Justin Johnson University of Michigan CVPR 2021 arxiv.org/abs/2006.06

Code for the paper "Controllable Video Captioning with an Exemplar Sentence"

SMCG Code for the paper "Controllable Video Captioning with an Exemplar Sentence" Introduction We investigate a novel and challenging task, namely con

Fluency ENhanced Sentence-bert Evaluation (FENSE), metric for audio caption evaluation. And Benchmark dataset AudioCaps-Eval, Clotho-Eval.

FENSE The metric, Fluency ENhanced Sentence-bert Evaluation (FENSE), for audio caption evaluation, proposed in the paper "Can Audio Captions Be Evalua

Image Captioning using CNN ,LSTM and Attention

Image Captioning using CNN ,LSTM and Attention This is a deeplearning model which tries to summarize an image into a text . Installation Install this

Weakly Supervised Dense Event Captioning in Videos, i.e. generating multiple sentence descriptions for a video in a weakly-supervised manner.

WSDEC This is the official repo for our NeurIPS paper Weakly Supervised Dense Event Captioning in Videos. Description Repo directories ./: global conf

Automatic Video Captioning Evaluation Metric --- EMScore

Automatic Video Captioning Evaluation Metric --- EMScore Overview For an illustration, EMScore can be computed as: Installation modify the encode_text

Simple image captioning model

CLIP prefix captioning. Inference Notebook: 🥳 New: 🥳 Our technical papar is finally out! Official implementation for the paper "ClipCap: CLIP Prefix

A transformer-based method for Healthcare Image Captioning in Vietnamese

vieCap4H Challenge 2021: A transformer-based method for Healthcare Image Captioning in Vietnamese This repo GitHub contains our solution for vieCap4H

Machine-in-the-Loop Rewriting for Creative Image Captioning

Machine-in-the-Loop Rewriting for Creative Image Captioning Data Annotated sources of data used in the paper: Data Source URL Mohammed et al. Link Gor

Image captioning service for healthcare domains in Vietnamese using VLP

Image captioning service for healthcare domains in Vietnamese using VLP This service is a web service that provides image captioning services for heal

A modular framework for vision & language multimodal research from Facebook AI Research (FAIR)

MMF is a modular framework for vision and language multimodal research from Facebook AI Research. MMF contains reference implementations of state-of-t

An Image Captioning codebase

An Image Captioning codebase This is a codebase for image captioning research. It supports: Self critical training from Self-critical Sequence Trainin

Simple image captioning model - CLIP prefix captioning.

Simple image captioning model - CLIP prefix captioning.

Optimized code based on M2 for faster image captioning training

Transformer Captioning This repository contains the code for Transformer-based image captioning. Based on meshed-memory-transformer, we further optimi

Image Captioning using CNN and Transformers

Image-Captioning Keras/Tensorflow Image Captioning application using CNN and Transformer as encoder/decoder. In particulary, the architecture consists

X-modaler is a versatile and high-performance codebase for cross-modal analytics.

X-modaler X-modaler is a versatile and high-performance codebase for cross-modal analytics. This codebase unifies comprehensive high-quality modules i

An unreferenced image captioning metric (ACL-21)

UMIC This repository provides an unferenced image captioning metric from our ACL 2021 paper UMIC: An Unreferenced Metric for Image Captioning via Cont

Unofficial pytorch implementation for Self-critical Sequence Training for Image Captioning. and others.

An Image Captioning codebase This is a codebase for image captioning research. It supports: Self critical training from Self-critical Sequence Trainin

VisualGPT: Data-efficient Adaptation of Pretrained Language Models for Image Captioning

VisualGPT Our Paper VisualGPT: Data-efficient Adaptation of Pretrained Language Models for Image Captioning Main Architecture of Our VisualGPT Downloa

improvement of CLIP features over the traditional resnet features on the visual question answering, image captioning, navigation and visual entailment tasks.

CLIP-ViL In our paper "How Much Can CLIP Benefit Vision-and-Language Tasks?", we show the improvement of CLIP features over the traditional resnet fea

Codes for paper "Towards Diverse Paragraph Captioning for Untrimmed Videos". CVPR 2021

Towards Diverse Paragraph Captioning for Untrimmed Videos This repository contains PyTorch implementation of our paper Towards Diverse Paragraph Capti

Semi-Autoregressive Transformer for Image Captioning

Semi-Autoregressive Transformer for Image Captioning Requirements Python 3.6 Pytorch 1.6 Prepare data Please use git clone --recurse-submodules to clo

Source code for "MusCaps: Generating Captions for Music Audio" (IJCNN 2021)

MusCaps: Generating Captions for Music Audio Ilaria Manco1 2, Emmanouil Benetos1, Elio Quinton2, Gyorgy Fazekas1 1 Queen Mary University of London, 2

A modular framework for vision & language multimodal research from Facebook AI Research (FAIR)

MMF is a modular framework for vision and language multimodal research from Facebook AI Research. MMF contains reference implementations of state-of-t

Syntax-Aware Action Targeting for Video Captioning

Syntax-Aware Action Targeting for Video Captioning Code for SAAT from "Syntax-Aware Action Targeting for Video Captioning" (Accepted to CVPR 2020). Th

Diverse Image Captioning with Context-Object Split Latent Spaces (NeurIPS 2020)

Diverse Image Captioning with Context-Object Split Latent Spaces This repository is the PyTorch implementation of the paper: Diverse Image Captioning

An Analysis Toolkit for Natural Language Generation (Translation, Captioning, Summarization, etc.)

VizSeq is a Python toolkit for visual analysis on text generation tasks like machine translation, summarization, image captioning, speech translation

An Analysis Toolkit for Natural Language Generation (Translation, Captioning, Summarization, etc.)

VizSeq is a Python toolkit for visual analysis on text generation tasks like machine translation, summarization, image captioning, speech translation