23 Repositories

Python defense Libraries

This repository contains the code and models necessary to replicate the results of paper: How to Robustify Black-Box ML Models? A Zeroth-Order Optimization Perspective

Black-Box-Defense This repository contains the code and models necessary to replicate the results of our recent paper: How to Robustify Black-Box ML M

This repository contains the code and models necessary to replicate the results of paper: How to Robustify Black-Box ML Models? A Zeroth-Order Optimization Perspective

Black-Box-Defense This repository contains the code and models necessary to replicate the results of our recent paper: How to Robustify Black-Box ML M

Dcf-game-infrastructure-public - Contains all the components necessary to run a DC finals (attack-defense CTF) game from OOO

dcf-game-infrastructure All the components necessary to run a game of the OOO DC

Hierarchical-Bayesian-Defense - Towards Adversarial Robustness of Bayesian Neural Network through Hierarchical Variational Inference (Openreview)

Towards Adversarial Robustness of Bayesian Neural Network through Hierarchical V

Memory Defense: More Robust Classificationvia a Memory-Masking Autoencoder

Memory Defense: More Robust Classificationvia a Memory-Masking Autoencoder Authors: - Eashan Adhikarla - Dan Luo - Dr. Brian D. Davison Abstract Many

Domain abuse scanner covering domainsquatting and phishing keywords.

🦷 monodon 🐋 Domain abuse scanner covering domainsquatting and phishing keywords. Setup Monodon is a Python 3.7+ programm. To setup on a Linux machin

ReplitTD - Replit Tower Defense Game

IMPORTANT: I mean no offense at all in this game, this is only based off of cycl

Learnable Boundary Guided Adversarial Training (ICCV2021)

Learnable Boundary Guided Adversarial Training This repository contains the implementation code for the ICCV2021 paper: Learnable Boundary Guided Adve

Adversarial Self-Defense for Cycle-Consistent GANs

Adversarial Self-Defense for Cycle-Consistent GANs This is the official implementation of the CycleGAN robust to self-adversarial attacks used in pape

Gym Threat Defense

Gym Threat Defense The Threat Defense environment is an OpenAI Gym implementation of the environment defined as the toy example in Optimal Defense Pol

Is RobustBench/AutoAttack a suitable Benchmark for Adversarial Robustness?

Adversrial Machine Learning Benchmarks This code belongs to the papers: Is RobustBench/AutoAttack a suitable Benchmark for Adversarial Robustness? Det

![[NeurIPS 2021] Towards Better Understanding of Training Certifiably Robust Models against Adversarial Examples | ⛰️⚠️](https://github.com/sungyoon-lee/LossLandscapeMatters/raw/master/media/fig.png)

[NeurIPS 2021] Towards Better Understanding of Training Certifiably Robust Models against Adversarial Examples | ⛰️⚠️

Towards Better Understanding of Training Certifiably Robust Models against Adversarial Examples This repository is the official implementation of "Tow

Codes for NeurIPS 2021 paper "Adversarial Neuron Pruning Purifies Backdoored Deep Models"

Adversarial Neuron Pruning Purifies Backdoored Deep Models Code for NeurIPS 2021 "Adversarial Neuron Pruning Purifies Backdoored Deep Models" by Dongx

Code for the paper "SmoothMix: Training Confidence-calibrated Smoothed Classifiers for Certified Robustness" (NeurIPS 2021)

SmoothMix: Training Confidence-calibrated Smoothed Classifiers for Certified Robustness (NeurIPS2021) This repository contains code for the paper "Smo

Defense-GAN: Protecting Classifiers Against Adversarial Attacks Using Generative Models (published in ICLR2018)

Defense-GAN: Protecting Classifiers Against Adversarial Attacks Using Generative Models Pouya Samangouei*, Maya Kabkab*, Rama Chellappa [*: authors co

PyTorch implementation of our method for adversarial attacks and defenses in hyperspectral image classification.

Self-Attention Context Network for Hyperspectral Image Classification PyTorch implementation of our method for adversarial attacks and defenses in hyp

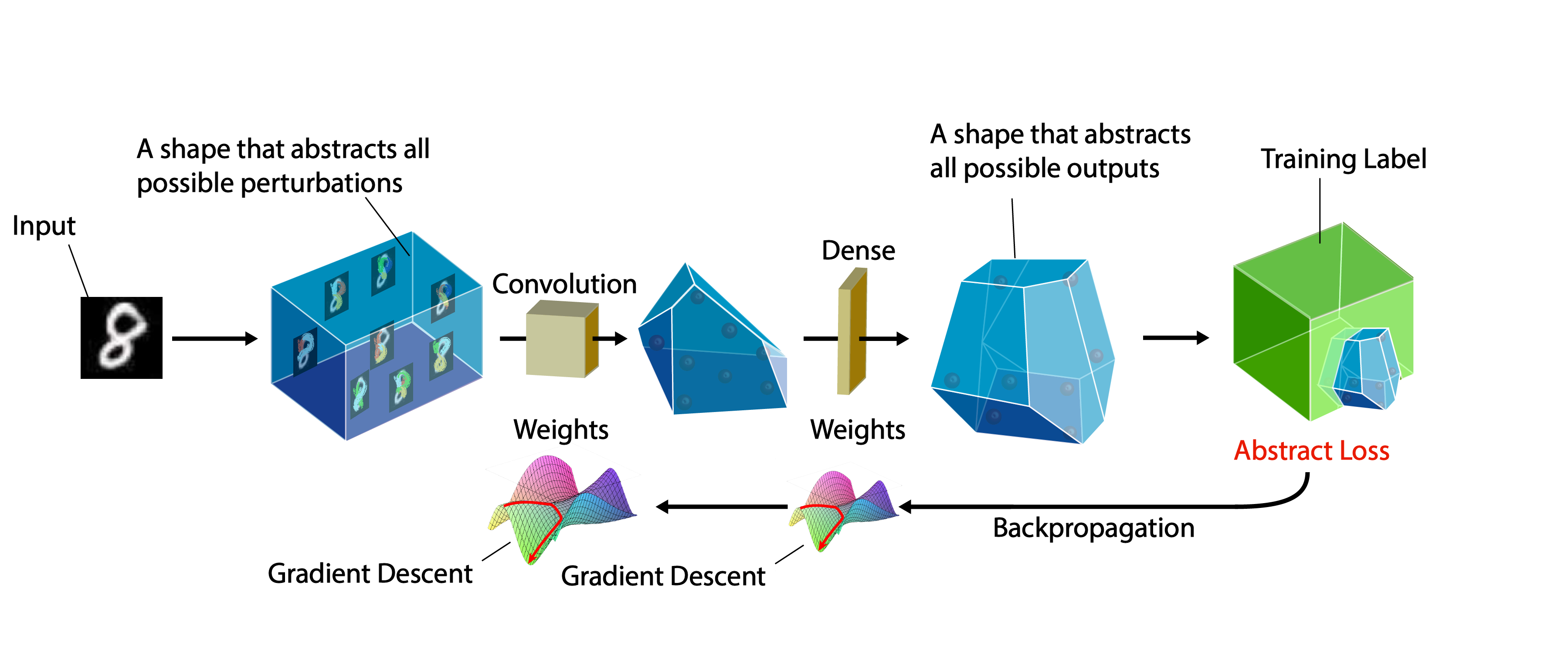

A certifiable defense against adversarial examples by training neural networks to be provably robust

DiffAI v3 DiffAI is a system for training neural networks to be provably robust and for proving that they are robust. The system was developed for the

An implementation demo of the ICLR 2021 paper Neural Attention Distillation: Erasing Backdoor Triggers from Deep Neural Networks in PyTorch.

Neural Attention Distillation This is an implementation demo of the ICLR 2021 paper Neural Attention Distillation: Erasing Backdoor Triggers from Deep

Minimal implementation of Denoised Smoothing: A Provable Defense for Pretrained Classifiers in TensorFlow.

Denoised-Smoothing-TF Minimal implementation of Denoised Smoothing: A Provable Defense for Pretrained Classifiers in TensorFlow. Denoised Smoothing is

SLIDE : In Defense of Smart Algorithms over Hardware Acceleration for Large-Scale Deep Learning Systems

The SLIDE package contains the source code for reproducing the main experiments in this paper. Dataset The Datasets can be downloaded in Amazon-

Code for the paper: Adversarial Training Against Location-Optimized Adversarial Patches. ECCV-W 2020.

Adversarial Training Against Location-Optimized Adversarial Patches arXiv | Paper | Code | Video | Slides Code for the paper: Sukrut Rao, David Stutz,

MozDef: Mozilla Enterprise Defense Platform

MozDef: Documentation: https://mozdef.readthedocs.org/en/latest/ Give MozDef a Try in AWS: The following button will launch the Mozilla Enterprise Def

InfoBERT: Improving Robustness of Language Models from An Information Theoretic Perspective

InfoBERT: Improving Robustness of Language Models from An Information Theoretic Perspective This is the official code base for our ICLR 2021 paper