28 Repositories

Python exponential-smoothing Libraries

Implementation of ETSformer, state of the art time-series Transformer, in Pytorch

ETSformer - Pytorch Implementation of ETSformer, state of the art time-series Transformer, in Pytorch Install $ pip install etsformer-pytorch Usage im

Decoupled Smoothing in Probabilistic Soft Logic

Decoupled Smoothing in Probabilistic Soft Logic Experiments for "Decoupled Smoothing in Probabilistic Soft Logic". Probabilistic Soft Logic Probabilis

Image Smoothing and Blurring Using OpenCV

Image-Smoothing-and-Blurring-Using-OpenCV This repository contains codes for performing image smoothing and blurring using OpenCV. There are different

Supervised Sliding Window Smoothing Loss Function Based on MS-TCN for Video Segmentation

SSWS-loss_function_based_on_MS-TCN Supervised Sliding Window Smoothing Loss Function Based on MS-TCN for Video Segmentation Supervised Sliding Window

Unity Propagation in Bayesian Networks Handling Inconsistency via Unity Smoothing

This repository contains the scripts needed to generate the results from the paper Unity Propagation in Bayesian Networks Handling Inconsistency via U

Natural Posterior Network: Deep Bayesian Predictive Uncertainty for Exponential Family Distributions

Natural Posterior Network This repository provides the official implementation o

Hierarchical Time Series Forecasting with a familiar API

scikit-hts Hierarchical Time Series with a familiar API. This is the result from not having found any good implementations of HTS on-line, and my work

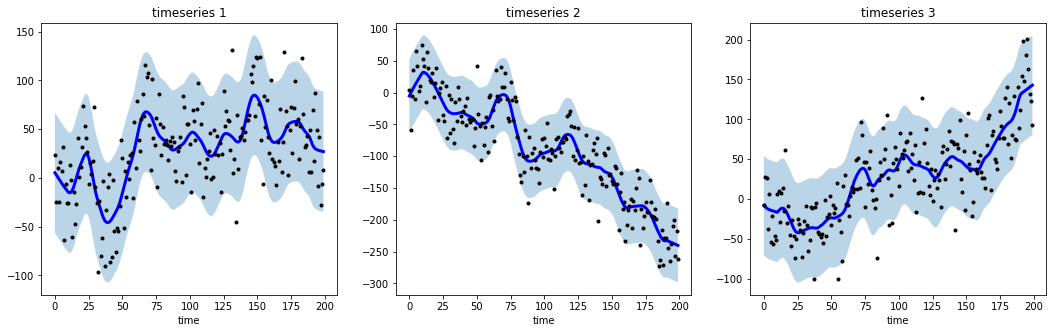

A python library for time-series smoothing and outlier detection in a vectorized way.

tsmoothie A python library for time-series smoothing and outlier detection in a vectorized way. Overview tsmoothie computes, in a fast and efficient w

N-gram models- Unsmoothed, Laplace, Deleted Interpolation

N-gram models- Unsmoothed, Laplace, Deleted Interpolation

Image Processing, Image Smoothing, Edge Detection and Transforms

opevcvdl-hw1 This project uses openCV and Qt to achieve the requirements. Version Python 3.7 opencv-contrib-python 3.4.2.17 Matplotlib 3.1.1 pyqt5 5.1

Delayed iteration for polling and retries.

Does Python need yet another retry / poll library? It needs at least one that isn't coupled to decorators and functions. Decorators prevent the caller

The official PyTorch implementation of Curriculum by Smoothing (NeurIPS 2020, Spotlight).

Curriculum by Smoothing (NeurIPS 2020) The official PyTorch implementation of Curriculum by Smoothing (NeurIPS 2020, Spotlight). For any questions reg

Implementation of parameterized soft-exponential activation function.

Soft-Exponential-Activation-Function: Implementation of parameterized soft-exponential activation function. In this implementation, the parameters are

A Pytorch implementation of SMU: SMOOTH ACTIVATION FUNCTION FOR DEEP NETWORKS USING SMOOTHING MAXIMUM TECHNIQUE

SMU_pytorch A Pytorch Implementation of SMU: SMOOTH ACTIVATION FUNCTION FOR DEEP NETWORKS USING SMOOTHING MAXIMUM TECHNIQUE arXiv https://arxiv.org/ab

Code for the paper "SmoothMix: Training Confidence-calibrated Smoothed Classifiers for Certified Robustness" (NeurIPS 2021)

SmoothMix: Training Confidence-calibrated Smoothed Classifiers for Certified Robustness (NeurIPS2021) This repository contains code for the paper "Smo

Tensorflow Implementation of SMU: SMOOTH ACTIVATION FUNCTION FOR DEEP NETWORKS USING SMOOTHING MAXIMUM TECHNIQUE

SMU A Tensorflow Implementation of SMU: SMOOTH ACTIVATION FUNCTION FOR DEEP NETWORKS USING SMOOTHING MAXIMUM TECHNIQUE arXiv https://arxiv.org/abs/211

exponential adaptive pooling for PyTorch

AdaPool: Exponential Adaptive Pooling for Information-Retaining Downsampling Abstract Pooling layers are essential building blocks of Convolutional Ne

Implicit MLE: Backpropagating Through Discrete Exponential Family Distributions

torch-imle Concise and self-contained PyTorch library implementing the I-MLE gradient estimator proposed in our NeurIPS 2021 paper Implicit MLE: Backp

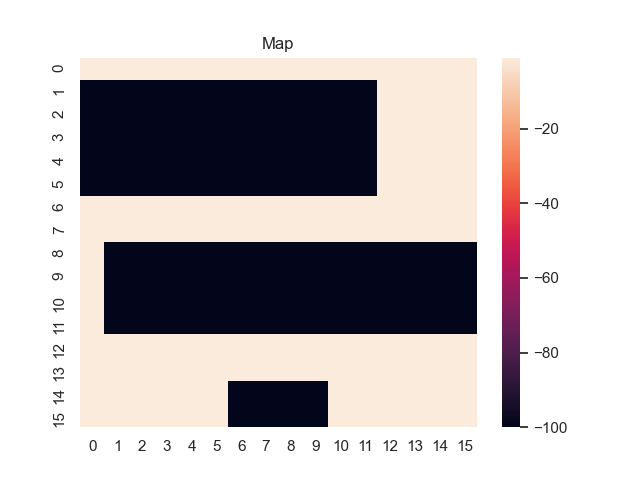

Node Dependent Local Smoothing for Scalable Graph Learning

Node Dependent Local Smoothing for Scalable Graph Learning Requirements Environments: Xeon Gold 5120 (CPU), 384GB(RAM), TITAN RTX (GPU), Ubuntu 16.04

Exponential Graph is Provably Efficient for Decentralized Deep Training

Exponential Graph is Provably Efficient for Decentralized Deep Training This code repository is for the paper Exponential Graph is Provably Efficient

This is our Tensorflow implementation for "Graph-based Embedding Smoothing for Sequential Recommendation" (GES) (TKDE, 2021).

Graph-based Embedding Smoothing (GES) This is our Tensorflow implementation for the paper: Tianyu Zhu, Leilei Sun, and Guoqing Chen. "Graph-based Embe

Instance-based label smoothing for improving deep neural networks generalization and calibration

Instance-based Label Smoothing for Neural Networks Pytorch Implementation of the algorithm. This repository includes a new proposed method for instanc

Implementation of Online Label Smoothing in PyTorch

Online Label Smoothing Pytorch implementation of Online Label Smoothing (OLS) presented in Delving Deep into Label Smoothing. Introduction As the abst

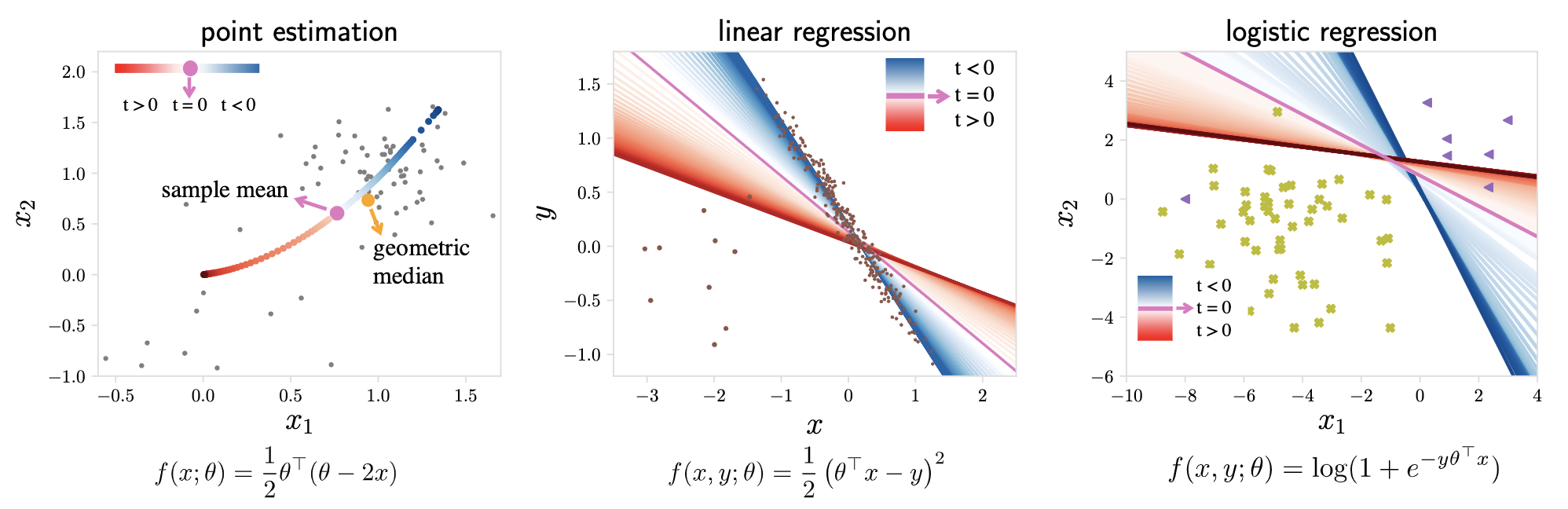

Tilted Empirical Risk Minimization (ICLR '21)

Tilted Empirical Risk Minimization This repository contains the implementation for the paper Tilted Empirical Risk Minimization ICLR 2021 Empirical ri

Minimal implementation of Denoised Smoothing: A Provable Defense for Pretrained Classifiers in TensorFlow.

Denoised-Smoothing-TF Minimal implementation of Denoised Smoothing: A Provable Defense for Pretrained Classifiers in TensorFlow. Denoised Smoothing is

LVI-SAM: Tightly-coupled Lidar-Visual-Inertial Odometry via Smoothing and Mapping

LVI-SAM This repository contains code for a lidar-visual-inertial odometry and mapping system, which combines the advantages of LIO-SAM and Vins-Mono

A Python package for Bayesian forecasting with object-oriented design and probabilistic models under the hood.

Disclaimer This project is stable and being incubated for long-term support. It may contain new experimental code, for which APIs are subject to chang

Machine learning, in numpy

numpy-ml Ever wish you had an inefficient but somewhat legible collection of machine learning algorithms implemented exclusively in NumPy? No? Install