1034 Repositories

Python fast-transformers Libraries

Official implement of Evo-ViT: Slow-Fast Token Evolution for Dynamic Vision Transformer

Evo-ViT: Slow-Fast Token Evolution for Dynamic Vision Transformer This repository contains the PyTorch code for Evo-ViT. This work proposes a slow-fas

Code for evaluating Japanese pretrained models provided by NTT Ltd.

japanese-dialog-transformers 日本語の説明文はこちら This repository provides the information necessary to evaluate the Japanese Transformer Encoder-decoder dialo

An API that renders HTML/CSS content to PNG using Chromium

html_png An API that renders HTML/CSS content to PNG using Chromium Disclaimer I am not responsible if you happen to make your own instance of this AP

Natural Language Processing with transformers

we want to create a repo to illustrate usage of transformers in chinese

Fast and robust certifiable relative pose estimation

Fast and Robust Relative Pose Estimation for Calibrated Cameras This repository contains the code for the relative pose estimation between two central

Search Git commits in natural language

NaLCoS - NAtural Language COmmit Search Search commit messages in your repository in natural language. NaLCoS (NAtural Language COmmit Search) is a co

Implementation of a Transformer, but completely in Triton

Transformer in Triton (wip) Implementation of a Transformer, but completely in Triton. I'm completely new to lower-level neural net code, so this repo

Fast / fuzzy PostgreSQL counts for Django

Created by Stephen McDonald Introduction Up until PostgreSQL 9.2, COUNT queries generally required scanning every row in a database table. With millio

Technical Indicators implemented in Python only using Numpy-Pandas as Magic - Very Very Fast! Very tiny! Stock Market Financial Technical Analysis Python library . Quant Trading automation or cryptocoin exchange

MyTT Technical Indicators implemented in Python only using Numpy-Pandas as Magic - Very Very Fast! to Stock Market Financial Technical Analysis Python

Polyp-PVT: Polyp Segmentation with Pyramid Vision Transformers (arXiv2021)

Polyp-PVT by Bo Dong, Wenhai Wang, Deng-Ping Fan, Jinpeng Li, Huazhu Fu, & Ling Shao. This repo is the official implementation of "Polyp-PVT: Polyp Se

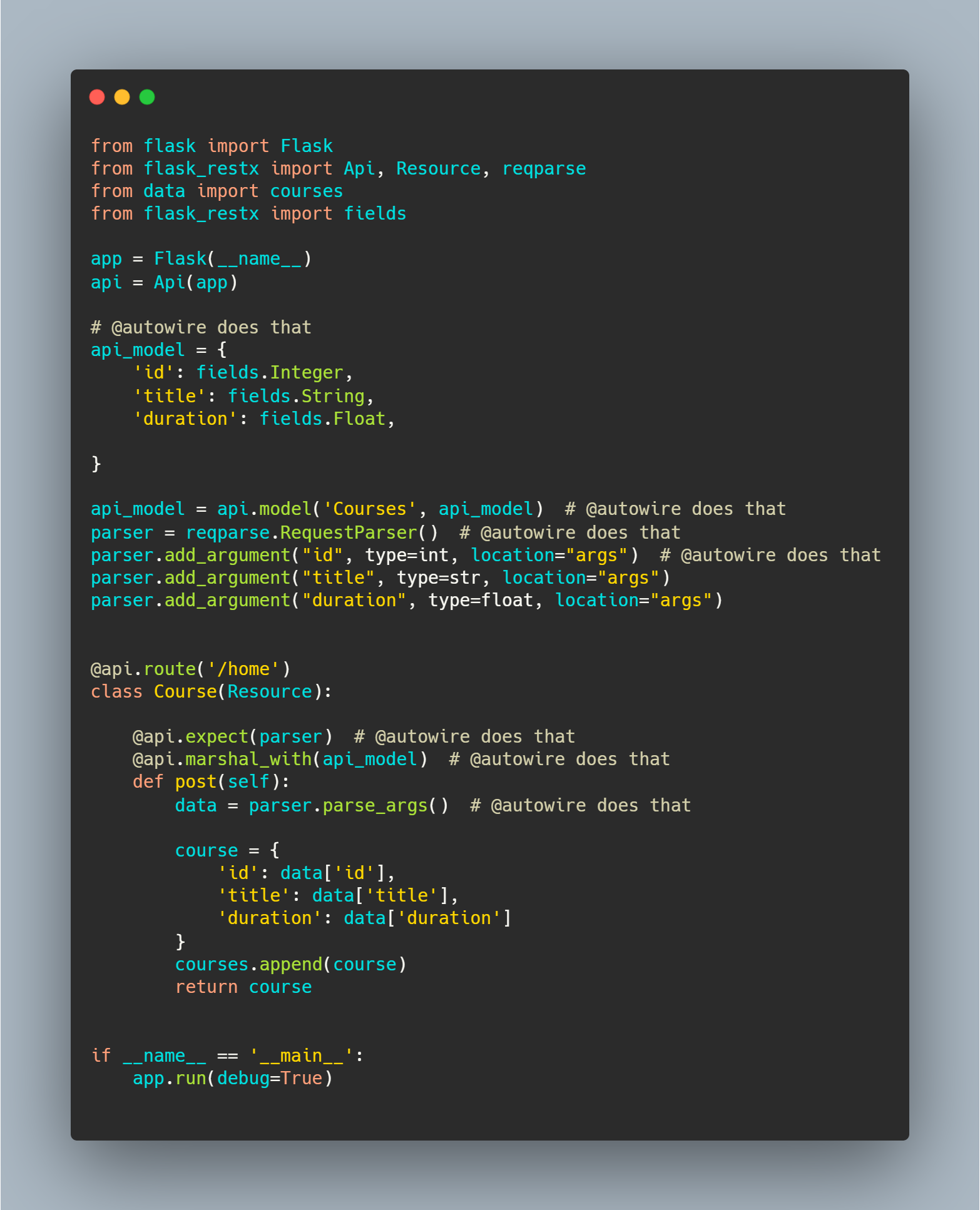

A Fast API style support for Flask. Gives you MyPy types with the flexibility of flask

Flask-Fastx Flask-Fastx is a Fast API style support for Flask. It Gives you MyPy types with the flexibility of flask. Compatibility Flask-Fastx requir

lightweight, fast and robust columnar dataframe for data analytics with online update

streamdf Streamdf is a lightweight data frame library built on top of the dictionary of numpy array, developed for Kaggle's time-series code competiti

🚀 A fast, flexible and lightweight Discord API wrapper for Python.

Krema A fast, flexible and lightweight Discord API wrapper for Python. Installation Unikorn unikorn add kremayard krema -no-confirmation Pip pip insta

Fast, simple API for Apple firmwares.

Loyal Fast, Simple API for fetching Apple Firmwares. The API server is closed due to some reasons. Wait for v2 releases. Features Fetching Signed IPSW

Using Youtube downloader is the fast and easy way to download and save any YouTube video.

Youtube video downloader using Django Using Django as a backend along with pytube module to create Youtbue Video Downloader. https://yt-videos-downloa

PyTorch Implementation of "Light Field Image Super-Resolution with Transformers"

LFT PyTorch implementation of "Light Field Image Super-Resolution with Transformers", arXiv 2021. [pdf]. Contributions: We make the first attempt to a

Send Emails through the terminal , fast and secure

Send Emails through the terminal , fast and secure

Code for lyric-section-to-comment generation based on huggingface transformers.

CommentGeneration Code for lyric-section-to-comment generation based on huggingface transformers. Migrate Guyu model and code (both 12-layers and 24-l

[ICCV 2021] Instance-level Image Retrieval using Reranking Transformers

Instance-level Image Retrieval using Reranking Transformers Fuwen Tan, Jiangbo Yuan, Vicente Ordonez, ICCV 2021. Abstract Instance-level image retriev

Document processing using transformers

Doc Transformers Document processing using transformers. This is still in developmental phase, currently supports only extraction of form data i.e (ke

A PyTorch library for Vision Transformers

VFormer A PyTorch library for Vision Transformers Getting Started Read the contributing guidelines in CONTRIBUTING.rst to learn how to start contribut

Tutorial to pretrain & fine-tune a 🤗 Flax T5 model on a TPUv3-8 with GCP

Pretrain and Fine-tune a T5 model with Flax on GCP This tutorial details how pretrain and fine-tune a FlaxT5 model from HuggingFace using a TPU VM ava

official Pytorch implementation of ICCV 2021 paper FuseFormer: Fusing Fine-Grained Information in Transformers for Video Inpainting.

FuseFormer: Fusing Fine-Grained Information in Transformers for Video Inpainting By Rui Liu, Hanming Deng, Yangyi Huang, Xiaoyu Shi, Lewei Lu, Wenxiu

Code for EMNLP 2021 paper Contrastive Out-of-Distribution Detection for Pretrained Transformers.

Contra-OOD Code for EMNLP 2021 paper Contrastive Out-of-Distribution Detection for Pretrained Transformers. Requirements PyTorch Transformers datasets

CMT: Convolutional Neural Networks Meet Vision Transformers

CMT: Convolutional Neural Networks Meet Vision Transformers [arxiv] 1. Introduction This repo is the CMT model which impelement with pytorch, no refer

A Python multilingual toolkit for Sentiment Analysis and Social NLP tasks

pysentimiento: A Python toolkit for Sentiment Analysis and Social NLP tasks A Transformer-based library for SocialNLP classification tasks. Currently

"3D Human Texture Estimation from a Single Image with Transformers", ICCV 2021

Texformer: 3D Human Texture Estimation from a Single Image with Transformers This is the official implementation of "3D Human Texture Estimation from

Example scripts for the detection of lanes using the ultra fast lane detection model in Tensorflow Lite.

TFlite Ultra Fast Lane Detection Inference Example scripts for the detection of lanes using the ultra fast lane detection model in Tensorflow Lite. So

Image Captioning using CNN and Transformers

Image-Captioning Keras/Tensorflow Image Captioning application using CNN and Transformer as encoder/decoder. In particulary, the architecture consists

⚡ A really fast and powerful Discord Token Checker

discord-token-checker ⚡ A really fast and powerful Discord Token Checker How To Use? Do pip install -r requirements.txt in your command prompt Make to

Implementation of fast algorithms for Maximum Spanning Tree (MST) parsing that includes fast ArcMax+Reweighting+Tarjan algorithm for single-root dependency parsing.

Fast MST Algorithm Implementation of fast algorithms for (Maximum Spanning Tree) MST parsing that includes fast ArcMax+Reweighting+Tarjan algorithm fo

pysentimiento: A Python toolkit for Sentiment Analysis and Social NLP tasks

A Python multilingual toolkit for Sentiment Analysis and Social NLP tasks

Code for "Searching for Efficient Multi-Stage Vision Transformers"

Searching for Efficient Multi-Stage Vision Transformers This repository contains the official Pytorch implementation of "Searching for Efficient Multi

Collection of NLP model explanations and accompanying analysis tools

Thermostat is a large collection of NLP model explanations and accompanying analysis tools. Combines explainability methods from the captum library wi

A fast tool to scan prototype pollution vulnerability

proto A fast tool to scan prototype pollution vulnerability Syntax python3 proto.py -l alive.txt Requirements Selenium Google Chrome Webdriver Note :

An implementation of Fastformer: Additive Attention Can Be All You Need in TensorFlow

Fast Transformer This repo implements Fastformer: Additive Attention Can Be All You Need by Wu et al. in TensorFlow. Fast Transformer is a Transformer

Ptorch NLU, a Chinese text classification and sequence annotation toolkit, supports multi class and multi label classification tasks of Chinese long text and short text, and supports sequence annotation tasks such as Chinese named entity recognition, part of speech tagging and word segmentation.

Pytorch-NLU,一个中文文本分类、序列标注工具包,支持中文长文本、短文本的多类、多标签分类任务,支持中文命名实体识别、词性标注、分词等序列标注任务。 Ptorch NLU, a Chinese text classification and sequence annotation toolkit, supports multi class and multi label classification tasks of Chinese long text and short text, and supports sequence annotation tasks such as Chinese named entity recognition, part of speech tagging and word segmentation.

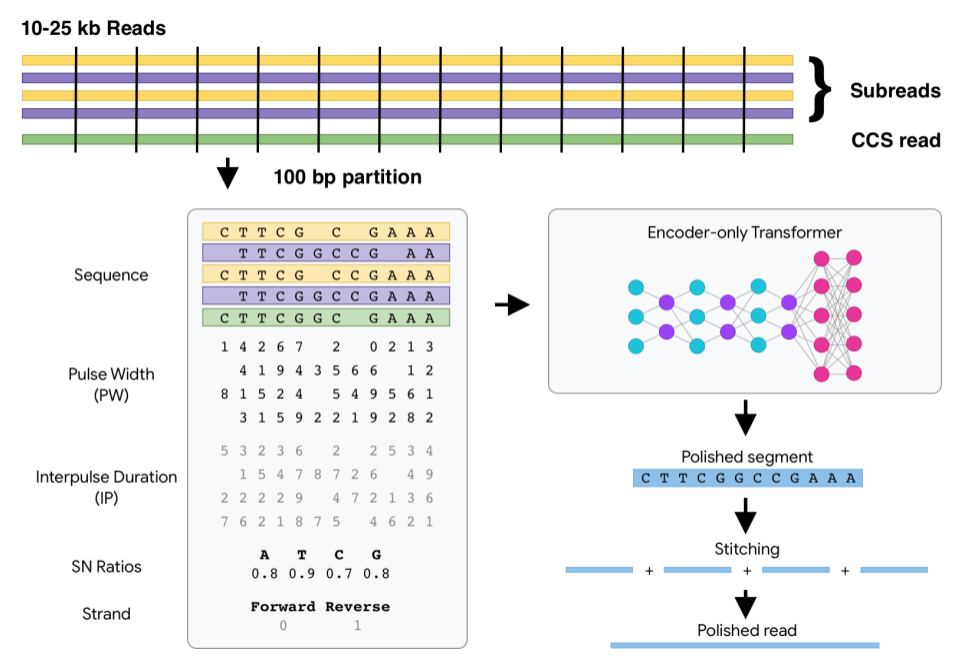

DeepConsensus uses gap-aware sequence transformers to correct errors in Pacific Biosciences (PacBio) Circular Consensus Sequencing (CCS) data.

DeepConsensus DeepConsensus uses gap-aware sequence transformers to correct errors in Pacific Biosciences (PacBio) Circular Consensus Sequencing (CCS)

WarpDrive: Extremely Fast End-to-End Deep Multi-Agent Reinforcement Learning on a GPU

WarpDrive is a flexible, lightweight, and easy-to-use open-source reinforcement learning (RL) framework that implements end-to-end multi-agent RL on a single GPU (Graphics Processing Unit).

The official repository for our paper "The Devil is in the Detail: Simple Tricks Improve Systematic Generalization of Transformers". We significantly improve the systematic generalization of transformer models on a variety of datasets using simple tricks and careful considerations.

Codebase for training transformers on systematic generalization datasets. The official repository for our EMNLP 2021 paper The Devil is in the Detail:

Sink is a CLI tool that allows users to synchronize their local folders to their Google Drives. It is similar to the Git CLI and allows fast and reliable syncs with the drive.

Sink is a CLI synchronisation tool that enables a user to synchronise local system files and folders with their Google Drives. It follows a git C

Example scripts for the detection of lanes using the ultra fast lane detection model in ONNX.

Example scripts for the detection of lanes using the ultra fast lane detection model in ONNX.

Implementation of a Transformer that Ponders, using the scheme from the PonderNet paper

Ponder(ing) Transformer Implementation of a Transformer that learns to adapt the number of computational steps it takes depending on the difficulty of

Implementation of Fast Transformer in Pytorch

Fast Transformer - Pytorch Implementation of Fast Transformer in Pytorch. This only work as an encoder. Yannic video AI Epiphany Install $ pip install

Many Class Activation Map methods implemented in Pytorch for CNNs and Vision Transformers. Including Grad-CAM, Grad-CAM++, Score-CAM, Ablation-CAM and XGrad-CAM

Class Activation Map methods implemented in Pytorch pip install grad-cam ⭐ Tested on many Common CNN Networks and Vision Transformers. ⭐ Includes smoo

A fast python implementation of Ray Tracing in One Weekend using python and Taichi

ray-tracing-one-weekend-taichi A fast python implementation of Ray Tracing in One Weekend using python and Taichi. Taichi is a simple "Domain specific

Implementation of Fast Transformer in Pytorch

Fast Transformer - Pytorch Implementation of Fast Transformer in Pytorch. This only work as an encoder. Yannic video AI Epiphany Install $ pip install

Implementation of a Transformer that Ponders, using the scheme from the PonderNet paper

Ponder(ing) Transformer Implementation of a Transformer that learns to adapt the number of computational steps it takes depending on the difficulty of

Simple tool/toolkit for evaluating NLG (Natural Language Generation) offering various automated metrics.

Simple tool/toolkit for evaluating NLG (Natural Language Generation) offering various automated metrics. Jury offers a smooth and easy-to-use interface. It uses datasets for underlying metric computation, and hence adding custom metric is easy as adopting datasets.Metric.

![SOTR: Segmenting Objects with Transformers [ICCV 2021]](https://github.com/easton-cau/SOTR/raw/main/images/overview.png)

SOTR: Segmenting Objects with Transformers [ICCV 2021]

SOTR: Segmenting Objects with Transformers [ICCV 2021] By Ruohao Guo, Dantong Niu, Liao Qu, Zhenbo Li Introduction This is the official implementation

![[ICCV 2021 Oral] PoinTr: Diverse Point Cloud Completion with Geometry-Aware Transformers](https://github.com/yuxumin/PoinTr/raw/master/fig/pointr.gif)

[ICCV 2021 Oral] PoinTr: Diverse Point Cloud Completion with Geometry-Aware Transformers

PoinTr: Diverse Point Cloud Completion with Geometry-Aware Transformers Created by Xumin Yu*, Yongming Rao*, Ziyi Wang, Zuyan Liu, Jiwen Lu, Jie Zhou

Pipeline for fast building text classification TF-IDF + LogReg baselines.

Text Classification Baseline Pipeline for fast building text classification TF-IDF + LogReg baselines. Usage Instead of writing custom code for specif

Official code for "Focal Self-attention for Local-Global Interactions in Vision Transformers"

Focal Transformer This is the official implementation of our Focal Transformer -- "Focal Self-attention for Local-Global Interactions in Vision Transf

the swiss army knife in the hash field. fast, reliable and easy to use

hexxus Hexxus is a fast hash cracking tool which checks more than 30 thousand passwords in under 4 seconds and can crack the following types bcrypt sh

A Pythonic Data Catalog powered by Ray that brings exabyte-level scalability and fast, ACID-compliant, change-data-capture to your big data workloads.

DeltaCAT DeltaCAT is a Pythonic Data Catalog powered by Ray. Its data storage model allows you to define and manage fast, scalable, ACID-compliant dat

Composed Image Retrieval using Pretrained LANguage Transformers (CIRPLANT)

CIRPLANT This repository contains the code and pre-trained models for Composed Image Retrieval using Pretrained LANguage Transformers (CIRPLANT) For d

FPS, fast pluggable server, is a framework designed to compose and run a web-server based on plugins.

FPS, fast pluggable server, is a framework designed to compose and run a web-server based on plugins. It is based on top of fastAPI, uvicorn, typer, and pluggy.

Official implementation of the ICCV 2021 paper "Conditional DETR for Fast Training Convergence".

The DETR approach applies the transformer encoder and decoder architecture to object detection and achieves promising performance. In this paper, we handle the critical issue, slow training convergence, and present a conditional cross-attention mechanism for fast DETR training. Our approach is motivated by that the cross-attention in DETR relies highly on the content embeddings and that the spatial embeddings make minor contributions, increasing the need for high-quality content embeddings and thus increasing the training difficulty.

Fast as FUCK nvim completion. SQLite, concurrent scheduler, hundreds of hours of optimization.

Fast as FUCK nvim completion. SQLite, concurrent scheduler, hundreds of hours of optimization.

The tl;dr on a few notable transformer/language model papers + other papers (alignment, memorization, etc).

The tl;dr on a few notable transformer/language model papers + other papers (alignment, memorization, etc).

Learn meanings behind words is a key element in NLP. This project concentrates on the disambiguation of preposition senses. Therefore, we train a bert-transformer model and surpass the state-of-the-art.

New State-of-the-Art in Preposition Sense Disambiguation Supervisor: Prof. Dr. Alexander Mehler Alexander Henlein Institutions: Goethe University TTLa

Refactoring dalle-pytorch and taming-transformers for TPU VM

Text-to-Image Translation (DALL-E) for TPU in Pytorch Refactoring Taming Transformers and DALLE-pytorch for TPU VM with Pytorch Lightning Requirements

SlotRefine: A Fast Non-Autoregressive Model forJoint Intent Detection and Slot Filling

SlotRefine: A Fast Non-Autoregressive Model for Joint Intent Detection and Slot Filling Reference Main paper to be cited (Di Wu et al., 2020) @article

This is the official implementation for "Do Transformers Really Perform Bad for Graph Representation?".

Graphormer By Chengxuan Ying, Tianle Cai, Shengjie Luo, Shuxin Zheng*, Guolin Ke, Di He*, Yanming Shen and Tie-Yan Liu. This repo is the official impl

Source code for "Progressive Transformers for End-to-End Sign Language Production" (ECCV 2020)

Progressive Transformers for End-to-End Sign Language Production Source code for "Progressive Transformers for End-to-End Sign Language Production" (B

Sign Language Translation with Transformers (COLING'2020, ECCV'20 SLRTP Workshop)

transformer-slt This repository gathers data and code supporting the experiments in the paper Better Sign Language Translation with STMC-Transformer.

Sign Language Transformers (CVPR'20)

Sign Language Transformers (CVPR'20) This repo contains the training and evaluation code for the paper Sign Language Transformers: Sign Language Trans

This is an official implementation for "Self-Supervised Learning with Swin Transformers".

Self-Supervised Learning with Vision Transformers By Zhenda Xie*, Yutong Lin*, Zhuliang Yao, Zheng Zhang, Qi Dai, Yue Cao and Han Hu This repo is the

Text-to-Image generation

Generate vivid Images for Any (Chinese) text CogView is a pretrained (4B-param) transformer for text-to-image generation in general domain. Read our p

DeLighT: Very Deep and Light-Weight Transformers

DeLighT: Very Deep and Light-weight Transformers This repository contains the source code of our work on building efficient sequence models: DeFINE (I

A library for fast import of Windows NT Registry(REGF) into Elasticsearch.

A library for fast import of Windows NT Registry(REGF) into Elasticsearch.

Piccolo - A fast, user friendly ORM and query builder which supports asyncio.

A fast, user friendly ORM and query builder which supports asyncio.

Code for "Finetuning Pretrained Transformers into Variational Autoencoders"

transformers-into-vaes Code for Finetuning Pretrained Transformers into Variational Autoencoders (our submission to NLP Insights Workshop 2021). Gathe

Zero-Shot Text-to-Image Generation VQGAN+CLIP Dockerized

VQGAN-CLIP-Docker About Zero-Shot Text-to-Image Generation VQGAN+CLIP Dockerized This is a stripped and minimal dependency repository for running loca

Official implementation of the paper Visual Parser: Representing Part-whole Hierarchies with Transformers

Visual Parser (ViP) This is the official implementation of the paper Visual Parser: Representing Part-whole Hierarchies with Transformers. Key Feature

Scenic: A Jax Library for Computer Vision and Beyond

Scenic Scenic is a codebase with a focus on research around attention-based models for computer vision. Scenic has been successfully used to develop c

[ICCV, 2021] Cloud Transformers: A Universal Approach To Point Cloud Processing Tasks

Cloud Transformers: A Universal Approach To Point Cloud Processing Tasks This is an official PyTorch code repository of the paper "Cloud Transformers:

Study of human inductive biases in CNNs and Transformers.

Are Convolutional Neural Networks or Transformers more like human vision? This repository contains the code and fine-tuned models of popular Convoluti

This repository contains data used in the NAACL 2021 Paper - Proteno: Text Normalization with Limited Data for Fast Deployment in Text to Speech Systems

Proteno This is the data release associated with the corresponding NAACL 2021 Paper - Proteno: Text Normalization with Limited Data for Fast Deploymen

FPGA: Fast Patch-Free Global Learning Framework for Fully End-to-End Hyperspectral Image Classification

FPGA & FreeNet Fast Patch-Free Global Learning Framework for Fully End-to-End Hyperspectral Image Classification by Zhuo Zheng, Yanfei Zhong, Ailong M

A library for finding knowledge neurons in pretrained transformer models.

knowledge-neurons An open source repository replicating the 2021 paper Knowledge Neurons in Pretrained Transformers by Dai et al., and extending the t

Implementation of Multistream Transformers in Pytorch

Multistream Transformers Implementation of Multistream Transformers in Pytorch. This repository deviates slightly from the paper, where instead of usi

Dense Prediction Transformers

Vision Transformers for Dense Prediction This repository contains code and models for our paper: Vision Transformers for Dense Prediction René Ranftl,

An official implementation of the paper Exploring Sequence Feature Alignment for Domain Adaptive Detection Transformers

Sequence Feature Alignment (SFA) By Wen Wang, Yang Cao, Jing Zhang, Fengxiang He, Zheng-jun Zha, Yonggang Wen, and Dacheng Tao This repository is an o

A library for finding knowledge neurons in pretrained transformer models.

knowledge-neurons An open source repository replicating the 2021 paper Knowledge Neurons in Pretrained Transformers by Dai et al., and extending the t

This project is a sample demo of Arxiv search related to AI/ML Papers built using Streamlit, sentence-transformers and Faiss.

This project is a sample demo of Arxiv search related to AI/ML Papers built using Streamlit, sentence-transformers and Faiss.

Active learning for text classification in Python

Active Learning allows you to efficiently label training data in a small-data scenario.

GlokyPortScannar is a really fast tool to scan TCP ports implemented in Python.

GlokyPortScannar is a really fast tool to scan TCP ports implemented in Python. Installation: This program requires Python 3.9. Linux

Implementation of Multistream Transformers in Pytorch

Multistream Transformers Implementation of Multistream Transformers in Pytorch. This repository deviates slightly from the paper, where instead of usi

Easily turn single threaded command line applications into a fast, multi-threaded application with CIDR and glob support.

Easily turn single threaded command line applications into a fast, multi-threaded application with CIDR and glob support.

![[ICCV'21] PlaneTR: Structure-Guided Transformers for 3D Plane Recovery](https://github.com/IceTTTb/PlaneTR3D/raw/master/misc/network.jpg)

[ICCV'21] PlaneTR: Structure-Guided Transformers for 3D Plane Recovery

PlaneTR: Structure-Guided Transformers for 3D Plane Recovery This is the official implementation of our ICCV 2021 paper News There maybe some bugs in

Fast and scalable uncertainty quantification for neural molecular property prediction, accelerated optimization, and guided virtual screening.

Evidential Deep Learning for Guided Molecular Property Prediction and Discovery Ava Soleimany*, Alexander Amini*, Samuel Goldman*, Daniela Rus, Sangee

Learned Token Pruning for Transformers

LTP: Learned Token Pruning for Transformers Check our paper for more details. Installation We follow the same installation procedure as the original H

RapidFuzz is a fast string matching library for Python and C++

RapidFuzz is a fast string matching library for Python and C++, which is using the string similarity calculations from FuzzyWuzzy

Relative Positional Encoding for Transformers with Linear Complexity

Stochastic Positional Encoding (SPE) This is the source code repository for the ICML 2021 paper Relative Positional Encoding for Transformers with Lin

A PyTorch implementation of ViTGAN based on paper ViTGAN: Training GANs with Vision Transformers.

ViTGAN: Training GANs with Vision Transformers A PyTorch implementation of ViTGAN based on paper ViTGAN: Training GANs with Vision Transformers. Refer

A Fast Sequence Transducer Implementation with PyTorch Bindings

transducer A Fast Sequence Transducer Implementation with PyTorch Bindings. The corresponding publication is Sequence Transduction with Recurrent Neur

🤗 Transformers: State-of-the-art Natural Language Processing for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 State-of-the-art Natural Language Processing for Jax, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained mo

A PyTorch implementation of "SimGNN: A Neural Network Approach to Fast Graph Similarity Computation" (WSDM 2019).

SimGNN ⠀⠀⠀ A PyTorch implementation of SimGNN: A Neural Network Approach to Fast Graph Similarity Computation (WSDM 2019). Abstract Graph similarity s

RoBERTa Marathi Language model trained from scratch during huggingface 🤗 x flax community week

RoBERTa base model for Marathi Language (मराठी भाषा) Pretrained model on Marathi language using a masked language modeling (MLM) objective. RoBERTa wa