49 Repositories

Python kapre-layers Libraries

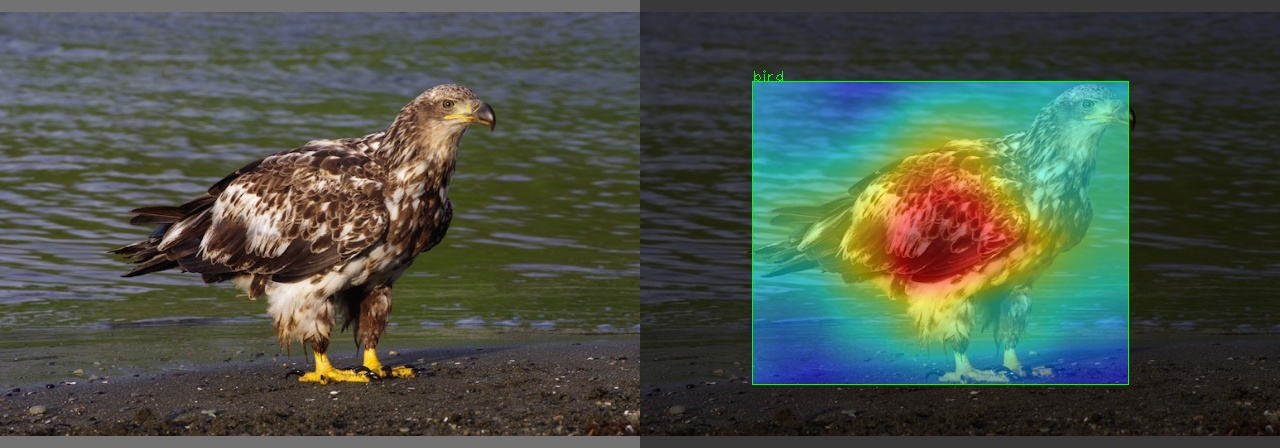

Visualizing Yolov5's layers using GradCam

YOLO-V5 GRADCAM I constantly desired to know to which part of an object the object-detection models pay more attention. So I searched for it, but I di

A library to inspect itermediate layers of PyTorch models.

A library to inspect itermediate layers of PyTorch models. Why? It's often the case that we want to inspect intermediate layers of a model without mod

Learning Features with Parameter-Free Layers, ICLR 2022

Learning Features with Parameter-Free Layers (ICLR 2022) Dongyoon Han, YoungJoon Yoo, Beomyoung Kim, Byeongho Heo | Paper NAVER AI Lab, NAVER CLOVA Up

Learning Features with Parameter-Free Layers (ICLR 2022)

Learning Features with Parameter-Free Layers (ICLR 2022) Dongyoon Han, YoungJoon Yoo, Beomyoung Kim, Byeongho Heo | Paper NAVER AI Lab, NAVER CLOVA Up

The unified machine learning framework, enabling framework-agnostic functions, layers and libraries.

The unified machine learning framework, enabling framework-agnostic functions, layers and libraries. Contents Overview In a Nutshell Where Next? Overv

Automatic generation of crypto-arts based on image layers

NFT Generator Автоматическая генерация крипто-артов на основе слоев изображения. Установка pip3 install -r requirements.txt rm -rf result/* Как это ра

Simple mathematical operations on image, point and surface layers.

napari-math This package provides a GUI interfrace for simple mathematical operations on image, point and surface layers. addition subtraction multipl

PyTorch implementation of Histogram Layers from DeepHist: Differentiable Joint and Color Histogram Layers for Image-to-Image Translation

deep-hist PyTorch implementation of Histogram Layers from DeepHist: Differentiable Joint and Color Histogram Layers for Image-to-Image Translation PyT

HAR-stacked-residual-bidir-LSTMs - Deep stacked residual bidirectional LSTMs for HAR

HAR-stacked-residual-bidir-LSTM The project is based on this repository which is presented as a tutorial. It consists of Human Activity Recognition (H

Lamblayer: a minimal deployment tool for AWS Lambda layers

lamblayer lamblayer is a minimal deployment tool for AWS Lambda layers. lamblayer does, Create a Layers of built pip-installable python packages. Crea

Pytorch implementation of Bert and Pals: Projected Attention Layers for Efficient Adaptation in Multi-Task Learning

PyTorch implementation of BERT and PALs Introduction Work by Asa Cooper Stickland and Iain Murray, University of Edinburgh. Code for BERT and PALs; mo

Equivariant layers for RC-complement symmetry in DNA sequence data

Equi-RC Equivariant layers for RC-complement symmetry in DNA sequence data This is a repository that implements the layers as described in "Reverse-Co

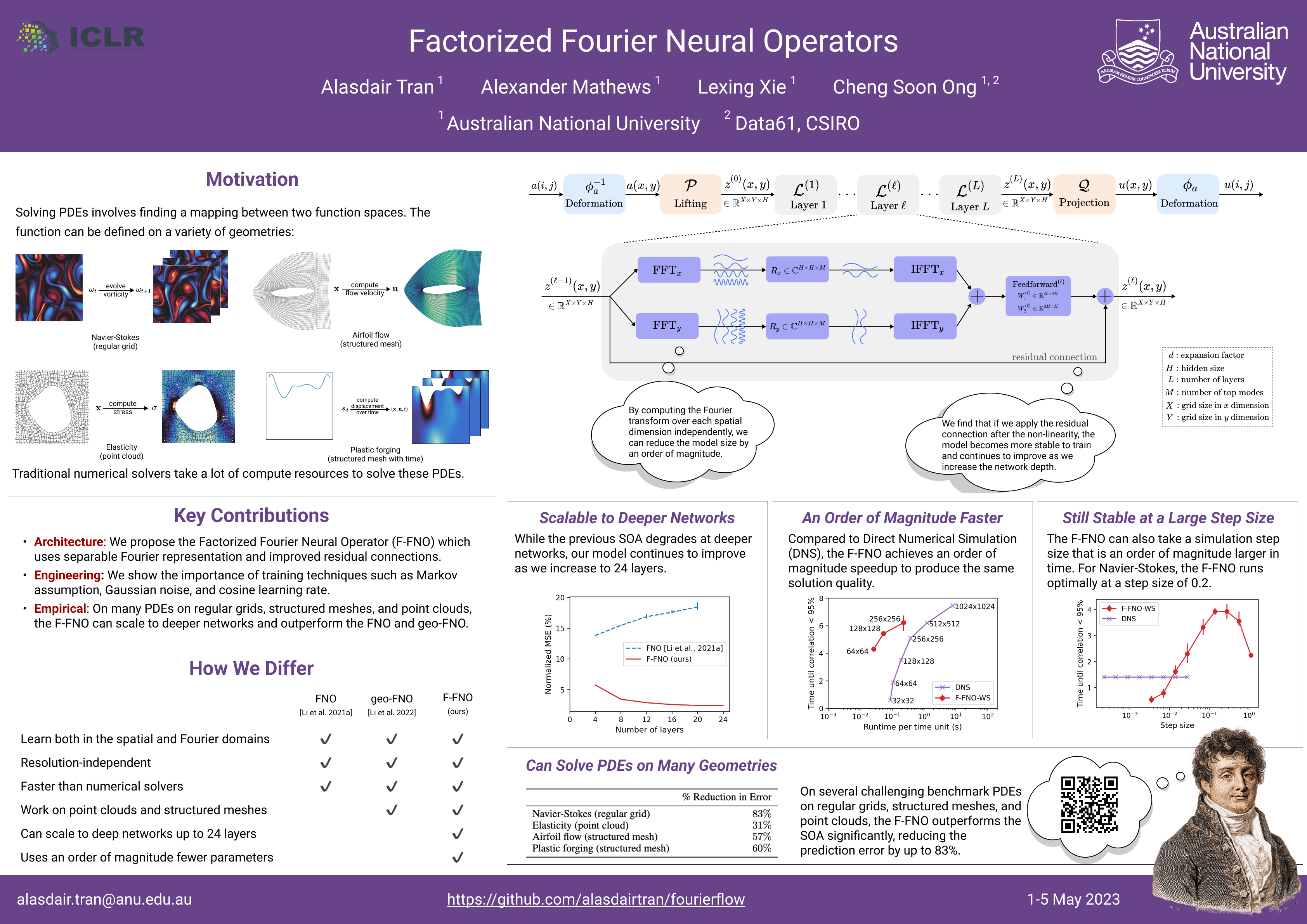

Experiments with Fourier layers on simulation data.

Factorized Fourier Neural Operators This repository contains the code to reproduce the results in our NeurIPS 2021 ML4PS workshop paper, Factorized Fo

A simple and extensible library to create Bayesian Neural Network layers on PyTorch.

Blitz - Bayesian Layers in Torch Zoo BLiTZ is a simple and extensible library to create Bayesian Neural Network Layers (based on whats proposed in Wei

inklayers is a command line program that exports layers from an SVG file.

inklayers is a command line program that exports layers from an SVG file. It can be used to create slide shows by editing a single SVG file.

A concept I came up which ditches the idea of "layers" in a neural network.

Dynet A concept I came up which ditches the idea of "layers" in a neural network. Install Copy Dynet.py to your project. Run the example Install matpl

This is the official repository for our paper: ''Pruning Self-attentions into Convolutional Layers in Single Path''.

Pruning Self-attentions into Convolutional Layers in Single Path This is the official repository for our paper: Pruning Self-attentions into Convoluti

This is the official repository for our paper: ''Pruning Self-attentions into Convolutional Layers in Single Path''.

Pruning Self-attentions into Convolutional Layers in Single Path This is the official repository for our paper: Pruning Self-attentions into Convoluti

Keras Implementation of The One Hundred Layers Tiramisu: Fully Convolutional DenseNets for Semantic Segmentation by (Simon Jégou, Michal Drozdzal, David Vazquez, Adriana Romero, Yoshua Bengio)

The One Hundred Layers Tiramisu: Fully Convolutional DenseNets for Semantic Segmentation: Work In Progress, Results can't be replicated yet with the m

Open source single image super-resolution toolbox containing various functionality for training a diverse number of state-of-the-art super-resolution models. Also acts as the companion code for the IEEE signal processing letters paper titled 'Improving Super-Resolution Performance using Meta-Attention Layers’.

Deep-FIR Codebase - Super Resolution Meta Attention Networks About This repository contains the main coding framework accompanying our work on meta-at

The official repository for "Intermediate Layers Matter in Momentum Contrastive Self Supervised Learning" paper.

Intermdiate layer matters - SSL The official repository for "Intermediate Layers Matter in Momentum Contrastive Self Supervised Learning" paper. Downl

a reccurrent neural netowrk that when trained on a peice of text and fed a starting prompt will write its on 250 character text using LSTM layers

RNN-Playwrite a reccurrent neural netowrk that when trained on a peice of text and fed a starting prompt will write its on 250 character text using LS

Building and deploying AWS Lambda Shared Layers

AWS Lambda Shared Layers This repository is hosting the code from the following blog post: AWS Lambda & Shared layers for Python. The goal of this rep

ConformalLayers: A non-linear sequential neural network with associative layers

ConformalLayers: A non-linear sequential neural network with associative layers ConformalLayers is a conformal embedding of sequential layers of Convo

PyTorch Implementation of Unsupervised Depth Completion with Calibrated Backprojection Layers (ORAL, ICCV 2021)

Unsupervised Depth Completion with Calibrated Backprojection Layers PyTorch implementation of Unsupervised Depth Completion with Calibrated Backprojec

PyTorch Implementation of Unsupervised Depth Completion with Calibrated Backprojection Layers (ORAL, ICCV 2021)

PyTorch Implementation of Unsupervised Depth Completion with Calibrated Backprojection Layers (ORAL, ICCV 2021)

Modeling CNN layers activity with Gaussian mixture model

GMM-CNN This code package implements the modeling of CNN layers activity with Gaussian mixture model and Inference Graphs visualization technique from

Deep Residual Networks with 1K Layers

Deep Residual Networks with 1K Layers By Kaiming He, Xiangyu Zhang, Shaoqing Ren, Jian Sun. Microsoft Research Asia (MSRA). Table of Contents Introduc

A (very dirty) experiment to remove layers from a Docker image.

Surgically remove layers from a Docker image (with a chainsaw)

Dear PyGui Extensions is a collection of useful tools, abstractions, and simplification layers built with/for Dear PyGui users.

Dear PyGui Extensions: A collection of useful tools, abstractions, and simplification layers built with/for Dear PyGui users.

Accelerate Neural Net Training by Progressively Freezing Layers

FreezeOut A simple technique to accelerate neural net training by progressively freezing layers. This repository contains code for the extended abstra

DEMix Layers for Modular Language Modeling

DEMix This repository contains modeling utilities for "DEMix Layers: Disentangling Domains for Modular Language Modeling" (Gururangan et. al, 2021). T

RepMLP: Re-parameterizing Convolutions into Fully-connected Layers for Image Recognition

RepMLP: Re-parameterizing Convolutions into Fully-connected Layers for Image Recognition (PyTorch) Paper: https://arxiv.org/abs/2105.01883 Citation: @

TensorFlow, PyTorch and Numpy layers for generating Orthogonal Polynomials

OrthNet TensorFlow, PyTorch and Numpy layers for generating multi-dimensional Orthogonal Polynomials 1. Installation 2. Usage 3. Polynomials 4. Base C

🍀 Pytorch implementation of various Attention Mechanisms, MLP, Re-parameter, Convolution, which is helpful to further understand papers.⭐⭐⭐

🍀 Pytorch implementation of various Attention Mechanisms, MLP, Re-parameter, Convolution, which is helpful to further understand papers.⭐⭐⭐

An implementation of "MixHop: Higher-Order Graph Convolutional Architectures via Sparsified Neighborhood Mixing" (ICML 2019).

MixHop and N-GCN ⠀ A PyTorch implementation of "MixHop: Higher-Order Graph Convolutional Architectures via Sparsified Neighborhood Mixing" (ICML 2019)

Compare outputs between layers written in Tensorflow and layers written in Pytorch

Compare outputs of Wasserstein GANs between TensorFlow vs Pytorch This is our testing module for the implementation of improved WGAN in Pytorch Prereq

Spectral Tensor Train Parameterization of Deep Learning Layers

Spectral Tensor Train Parameterization of Deep Learning Layers This repository is the official implementation of our AISTATS 2021 paper titled "Spectr

Unofficial PyTorch implementation of Attention Free Transformer (AFT) layers by Apple Inc.

aft-pytorch Unofficial PyTorch implementation of Attention Free Transformer's layers by Zhai, et al. [abs, pdf] from Apple Inc. Installation You can i

Improving Deep Network Debuggability via Sparse Decision Layers

Improving Deep Network Debuggability via Sparse Decision Layers This repository contains the code for our paper: Leveraging Sparse Linear Layers for D

RepMLP: Re-parameterizing Convolutions into Fully-connected Layers for Image Recognition

RepMLP RepMLP: Re-parameterizing Convolutions into Fully-connected Layers for Image Recognition Released the code of RepMLP together with an example o

Meta Language-Specific Layers in Multilingual Language Models

Meta Language-Specific Layers in Multilingual Language Models This repo contains the source codes for our paper On Negative Interference in Multilingu

Bayesian-Torch is a library of neural network layers and utilities extending the core of PyTorch to enable the user to perform stochastic variational inference in Bayesian deep neural networks

Bayesian-Torch is a library of neural network layers and utilities extending the core of PyTorch to enable the user to perform stochastic variational inference in Bayesian deep neural networks. Bayesian-Torch is designed to be flexible and seamless in extending a deterministic deep neural network architecture to corresponding Bayesian form by simply replacing the deterministic layers with Bayesian layers.

WebGL2 powered geospatial visualization layers

deck.gl | Website WebGL2-powered, highly performant large-scale data visualization deck.gl is designed to simplify high-performance, WebGL-based visua

uMap lets you create maps with OpenStreetMap layers in a minute and embed them in your site.

uMap project About uMap lets you create maps with OpenStreetMap layers in a minute and embed them in your site. Because we think that the more OSM wil

Code for "Infinitely Deep Bayesian Neural Networks with Stochastic Differential Equations"

Infinitely Deep Bayesian Neural Networks with SDEs This library contains JAX and Pytorch implementations of neural ODEs and Bayesian layers for stocha

🎆 A visualization of the CapsNet layers to better understand how it works

CapsNet-Visualization For more information on capsule networks check out my Medium articles here and here. Setup Use pip to install the required pytho

kapre: Keras Audio Preprocessors

Kapre Keras Audio Preprocessors - compute STFT, ISTFT, Melspectrogram, and others on GPU real-time. Tested on Python 3.6 and 3.7 Why Kapre? vs. Pre-co

kapre: Keras Audio Preprocessors

Kapre Keras Audio Preprocessors - compute STFT, ISTFT, Melspectrogram, and others on GPU real-time. Tested on Python 3.6 and 3.7 Why Kapre? vs. Pre-co