259 Repositories

Python nas-benchmark Libraries

Open source implementation of AceNAS: Learning to Rank Ace Neural Architectures with Weak Supervision of Weight Sharing

AceNAS This repo is the experiment code of AceNAS, and is not considered as an official release. We are working on integrating AceNAS as a built-in st

HDR Video Reconstruction: A Coarse-to-fine Network and A Real-world Benchmark Dataset (ICCV 2021)

Code for HDR Video Reconstruction HDR Video Reconstruction: A Coarse-to-fine Network and A Real-world Benchmark Dataset (ICCV 2021) Guanying Chen, Cha

FedScale: Benchmarking Model and System Performance of Federated Learning

FedScale: Benchmarking Model and System Performance of Federated Learning (Paper) This repository contains scripts and instructions of building FedSca

ALFRED - A Benchmark for Interpreting Grounded Instructions for Everyday Tasks

ALFRED A Benchmark for Interpreting Grounded Instructions for Everyday Tasks Mohit Shridhar, Jesse Thomason, Daniel Gordon, Yonatan Bisk, Winson Han,

MQBench: Towards Reproducible and Deployable Model Quantization Benchmark

MQBench: Towards Reproducible and Deployable Model Quantization Benchmark We propose a benchmark to evaluate different quantization algorithms on vari

Disfl-QA: A Benchmark Dataset for Understanding Disfluencies in Question Answering

Disfl-QA is a targeted dataset for contextual disfluencies in an information seeking setting, namely question answering over Wikipedia passages. Disfl-QA builds upon the SQuAD-v2 (Rajpurkar et al., 2018) dataset, where each question in the dev set is annotated to add a contextual disfluency using the paragraph as a source of distractors.

The dataset and source code for our paper: "Did You Ask a Good Question? A Cross-Domain Question IntentionClassification Benchmark for Text-to-SQL"

TriageSQL The dataset and source code for our paper: "Did You Ask a Good Question? A Cross-Domain Question Intention Classification Benchmark for Text

Benchmark for evaluating open-ended generation

OpenMEVA Contributed by Jian Guan, Zhexin Zhang. Thank Jiaxin Wen for DeBugging. OpenMEVA is a benchmark for evaluating open-ended story generation me

ManiSkill-Learn is a framework for training agents on SAPIEN Open-Source Manipulation Skill Challenge (ManiSkill Challenge), a large-scale learning-from-demonstrations benchmark for object manipulation.

ManiSkill-Learn ManiSkill-Learn is a framework for training agents on SAPIEN Open-Source Manipulation Skill Challenge, a large-scale learning-from-dem

KLUE-baseline contains the baseline code for the Korean Language Understanding Evaluation (KLUE) benchmark.

KLUE Baseline Korean(한국어) KLUE-baseline contains the baseline code for the Korean Language Understanding Evaluation (KLUE) benchmark. See our paper fo

SAPIEN Manipulation Skill Benchmark

ManiSkill Benchmark SAPIEN Manipulation Skill Benchmark (abbreviated as ManiSkill, pronounced as "Many Skill") is a large-scale learning-from-demonstr

Few-shot NLP benchmark for unified, rigorous eval

FLEX FLEX is a benchmark and framework for unified, rigorous few-shot NLP evaluation. FLEX enables: First-class NLP support Support for meta-training

Few-shot Neural Architecture Search

One-shot Neural Architecture Search uses a single supernet to approximate the performance each architecture. However, this performance estimation is super inaccurate because of co-adaption among operations in supernet.

![[IJCAI-2021] A benchmark of data-free knowledge distillation from paper](https://github.com/zju-vipa/DataFree/raw/main/assets/cmi.png)

[IJCAI-2021] A benchmark of data-free knowledge distillation from paper "Contrastive Model Inversion for Data-Free Knowledge Distillation"

DataFree A benchmark of data-free knowledge distillation from paper "Contrastive Model Inversion for Data-Free Knowledge Distillation" Authors: Gongfa

Code for ViTAS_Vision Transformer Architecture Search

Vision Transformer Architecture Search This repository open source the code for ViTAS: Vision Transformer Architecture Search. ViTAS aims to search fo

An open-access benchmark and toolbox for electricity price forecasting

epftoolbox The epftoolbox is the first open-access library for driving research in electricity price forecasting. Its main goal is to make available a

MVP Benchmark for Multi-View Partial Point Cloud Completion and Registration

MVP Benchmark: Multi-View Partial Point Clouds for Completion and Registration [NEWS] 2021-07-12 [NEW 🎉 ] The submission on Codalab starts! 2021-07-1

Generic Event Boundary Detection: A Benchmark for Event Segmentation

Generic Event Boundary Detection: A Benchmark for Event Segmentation We release our data annotation & baseline codes for detecting generic event bound

Official Implement of CVPR 2021 paper “Cross-Modal Collaborative Representation Learning and a Large-Scale RGBT Benchmark for Crowd Counting”

RGBT Crowd Counting Lingbo Liu, Jiaqi Chen, Hefeng Wu, Guanbin Li, Chenglong Li, Liang Lin. "Cross-Modal Collaborative Representation Learning and a L

This is the official repository for evaluation on the NoW Benchmark Dataset. The goal of the NoW benchmark is to introduce a standard evaluation metric to measure the accuracy and robustness of 3D face reconstruction methods from a single image under variations in viewing angle, lighting, and common occlusions.

NoW Evaluation This is the official repository for evaluation on the NoW Benchmark Dataset. The goal of the NoW benchmark is to introduce a standard e

Baseline model for "GraspNet-1Billion: A Large-Scale Benchmark for General Object Grasping" (CVPR 2020)

GraspNet Baseline Baseline model for "GraspNet-1Billion: A Large-Scale Benchmark for General Object Grasping" (CVPR 2020). [paper] [dataset] [API] [do

NAS Benchmark in "Prioritized Architecture Sampling with Monto-Carlo Tree Search", CVPR2021

NAS-Bench-Macro This repository includes the benchmark and code for NAS-Bench-Macro in paper "Prioritized Architecture Sampling with Monto-Carlo Tree

A Neural Language Style Transfer framework to transfer natural language text smoothly between fine-grained language styles like formal/casual, active/passive, and many more. Created by Prithiviraj Damodaran. Open to pull requests and other forms of collaboration.

Styleformer A Neural Language Style Transfer framework to transfer natural language text smoothly between fine-grained language styles like formal/cas

modelvshuman is a Python library to benchmark the gap between human and machine vision

modelvshuman is a Python library to benchmark the gap between human and machine vision. Using this library, both PyTorch and TensorFlow models can be evaluated on 17 out-of-distribution datasets with high-quality human comparison data.

中文医疗信息处理基准CBLUE: A Chinese Biomedical LanguageUnderstanding Evaluation Benchmark

English | 中文说明 CBLUE AI (Artificial Intelligence) is playing an indispensabe role in the biomedical field, helping improve medical technology. For fur

3D AffordanceNet is a 3D point cloud benchmark consisting of 23k shapes from 23 semantic object categories, annotated with 56k affordance annotations and covering 18 visual affordance categories.

3D AffordanceNet This repository is the official experiment implementation of 3D AffordanceNet benchmark. 3D AffordanceNet is a 3D point cloud benchma

A list of hyperspectral image super-solution resources collected by Junjun Jiang

A list of hyperspectral image super-resolution resources collected by Junjun Jiang. If you find that important resources are not included, please feel free to contact me.

[CVPR2021] UAV-Human: A Large Benchmark for Human Behavior Understanding with Unmanned Aerial Vehicles

UAV-Human Official repository for CVPR2021: UAV-Human: A Large Benchmark for Human Behavior Understanding with Unmanned Aerial Vehicle Paper arXiv Res

RoboDesk A Multi-Task Reinforcement Learning Benchmark

RoboDesk A Multi-Task Reinforcement Learning Benchmark If you find this open source release useful, please reference in your paper: @misc{kannan2021ro

![[CVPR21] LightTrack: Finding Lightweight Neural Network for Object Tracking via One-Shot Architecture Search](https://github.com/researchmm/LightTrack/raw/main/Archs.gif)

[CVPR21] LightTrack: Finding Lightweight Neural Network for Object Tracking via One-Shot Architecture Search

LightTrack: Finding Lightweight Neural Networks for Object Tracking via One-Shot Architecture Search The official implementation of the paper LightTra

A Research-oriented Federated Learning Library and Benchmark Platform for Graph Neural Networks. Accepted to ICLR'2021 - DPML and MLSys'21 - GNNSys workshops.

FedGraphNN: A Federated Learning System and Benchmark for Graph Neural Networks A Research-oriented Federated Learning Library and Benchmark Platform

"NAS-Bench-301 and the Case for Surrogate Benchmarks for Neural Architecture Search".

NAS-Bench-301 This repository containts code for the paper: "NAS-Bench-301 and the Case for Surrogate Benchmarks for Neural Architecture Search". The

![[CVPR 2021 Oral] ForgeryNet: A Versatile Benchmark for Comprehensive Forgery Analysis](https://github.com/yinanhe/ForgeryNet/raw/main/images/abstract.png)

[CVPR 2021 Oral] ForgeryNet: A Versatile Benchmark for Comprehensive Forgery Analysis

ForgeryNet: A Versatile Benchmark for Comprehensive Forgery Analysis ForgeryNet: A Versatile Benchmark for Comprehensive Forgery Analysis [arxiv|pdf|v

OpenMMLab Pose Estimation Toolbox and Benchmark.

Introduction English | 简体中文 MMPose is an open-source toolbox for pose estimation based on PyTorch. It is a part of the OpenMMLab project. The master b

OpenMMLab's Next Generation Video Understanding Toolbox and Benchmark

Introduction English | 简体中文 MMAction2 is an open-source toolbox for video understanding based on PyTorch. It is a part of the OpenMMLab project. The m

OpenMMLab Semantic Segmentation Toolbox and Benchmark.

Documentation: https://mmsegmentation.readthedocs.io/ English | 简体中文 Introduction MMSegmentation is an open source semantic segmentation toolbox based

Fast, modular reference implementation of Instance Segmentation and Object Detection algorithms in PyTorch.

Faster R-CNN and Mask R-CNN in PyTorch 1.0 maskrcnn-benchmark has been deprecated. Please see detectron2, which includes implementations for all model

[ICLR2021oral] Rethinking Architecture Selection in Differentiable NAS

DARTS-PT Code accompanying the paper ICLR'2021: Rethinking Architecture Selection in Differentiable NAS Ruochen Wang, Minhao Cheng, Xiangning Chen, Xi

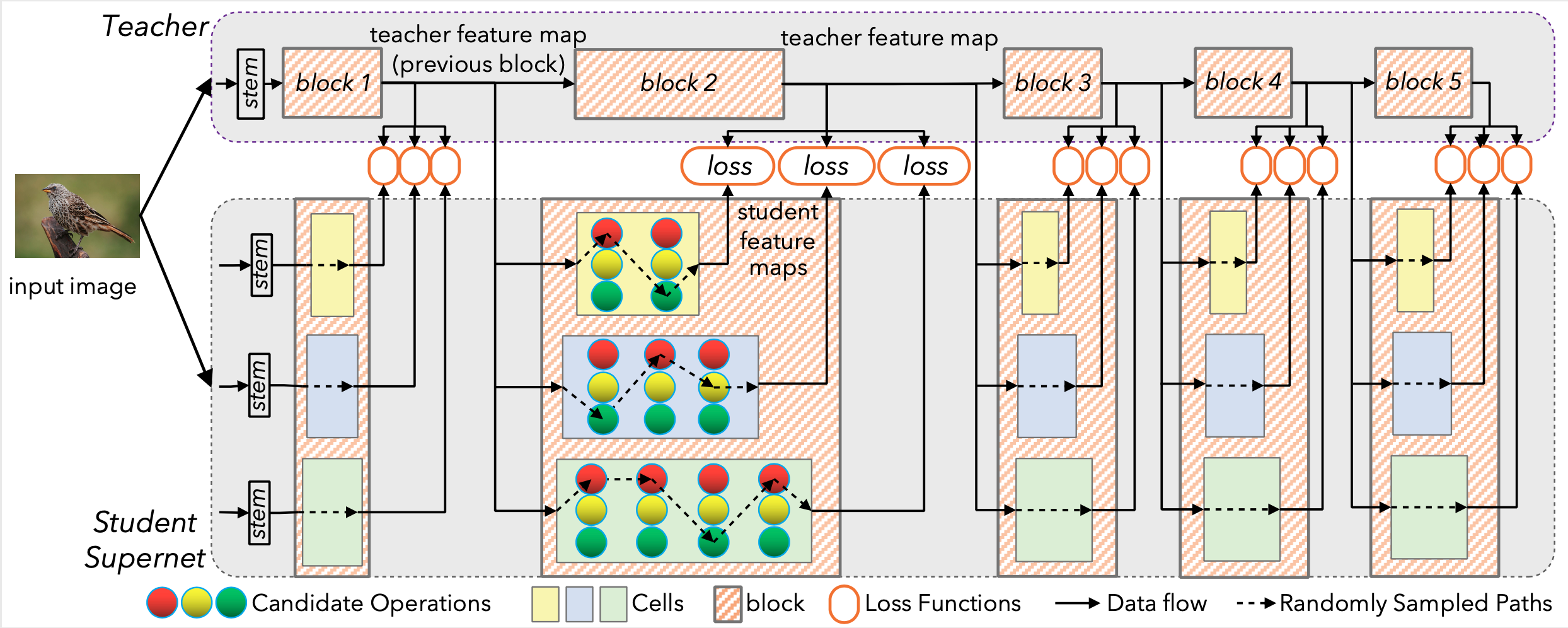

Block-wisely Supervised Neural Architecture Search with Knowledge Distillation (CVPR 2020)

DNA This repository provides the code of our paper: Blockwisely Supervised Neural Architecture Search with Knowledge Distillation. Illustration of DNA

Pretrained EfficientNet, EfficientNet-Lite, MixNet, MobileNetV3 / V2, MNASNet A1 and B1, FBNet, Single-Path NAS

(Generic) EfficientNets for PyTorch A 'generic' implementation of EfficientNet, MixNet, MobileNetV3, etc. that covers most of the compute/parameter ef

A code repository associated with the paper A Benchmark for Rough Sketch Cleanup by Chuan Yan, David Vanderhaeghe, and Yotam Gingold from SIGGRAPH Asia 2020.

A Benchmark for Rough Sketch Cleanup This is the code repository associated with the paper A Benchmark for Rough Sketch Cleanup by Chuan Yan, David Va

Open-L2O: A Comprehensive and Reproducible Benchmark for Learning to Optimize Algorithms

Open-L2O This repository establishes the first comprehensive benchmark efforts of existing learning to optimize (L2O) approaches on a number of proble

TableBank: A Benchmark Dataset for Table Detection and Recognition

TableBank TableBank is a new image-based table detection and recognition dataset built with novel weak supervision from Word and Latex documents on th

Repository for Traffic Accident Benchmark for Causality Recognition (ECCV 2020)

Causality In Traffic Accident (Under Construction) Repository for Traffic Accident Benchmark for Causality Recognition (ECCV 2020) Overview Data Prepa

![[ICLR 2021] HW-NAS-Bench: Hardware-Aware Neural Architecture Search Benchmark](https://github.com/RICE-EIC/HW-NAS-Bench/raw/main/devices.jpg?raw=true)

[ICLR 2021] HW-NAS-Bench: Hardware-Aware Neural Architecture Search Benchmark

HW-NAS-Bench: Hardware-Aware Neural Architecture Search Benchmark Accepted as a spotlight paper at ICLR 2021. Table of content File structure Prerequi

OpenMMLab Detection Toolbox and Benchmark

MMDetection is an open source object detection toolbox based on PyTorch. It is a part of the OpenMMLab project.

![[CVPR 2021] 'Searching by Generating: Flexible and Efficient One-Shot NAS with Architecture Generator'](https://github.com/eric8607242/SGNAS/raw/master/resource/SGNAS_framework.png)

[CVPR 2021] 'Searching by Generating: Flexible and Efficient One-Shot NAS with Architecture Generator'

[CVPR2021] Searching by Generating: Flexible and Efficient One-Shot NAS with Architecture Generator Overview This is the entire codebase for the paper

Baselines for TrajNet++

TrajNet++ : The Trajectory Forecasting Framework PyTorch implementation of Human Trajectory Forecasting in Crowds: A Deep Learning Perspective TrajNet

Beyond the Imitation Game collaborative benchmark for enormous language models

BIG-bench 🪑 The Beyond the Imitation Game Benchmark (BIG-bench) will be a collaborative benchmark intended to probe large language models, and extrap

Simulation-Based Inference Benchmark

This repository contains a simulation-based inference benchmark framework, sbibm, which we describe in the associated manuscript "Benchmarking Simulation-based Inference".

DeepMind Alchemy task environment: a meta-reinforcement learning benchmark

The DeepMind Alchemy environment is a meta-reinforcement learning benchmark that presents tasks sampled from a task distribution with deep underlying structure.

Airspeed Velocity: A simple Python benchmarking tool with web-based reporting

airspeed velocity airspeed velocity (asv) is a tool for benchmarking Python packages over their lifetime. It is primarily designed to benchmark a sing

py.test fixture for benchmarking code

Overview docs tests package A pytest fixture for benchmarking code. It will group the tests into rounds that are calibrated to the chosen timer. See c

Load and performance benchmark tool

Yandex Tank Yandextank has been moved to Python 3. Latest stable release for Python 2 here. Yandex.Tank is an extensible open source load testing tool

A machine learning benchmark of in-the-wild distribution shifts, with data loaders, evaluators, and default models.

WILDS is a benchmark of in-the-wild distribution shifts spanning diverse data modalities and applications, from tumor identification to wildlife monitoring to poverty mapping.

[ICLR 2021] "Neural Architecture Search on ImageNet in Four GPU Hours: A Theoretically Inspired Perspective" by Wuyang Chen, Xinyu Gong, Zhangyang Wang

Neural Architecture Search on ImageNet in Four GPU Hours: A Theoretically Inspired Perspective [PDF] Wuyang Chen, Xinyu Gong, Zhangyang Wang In ICLR 2

An open source robotics benchmark for meta- and multi-task reinforcement learning

Meta-World Meta-World is an open-source simulated benchmark for meta-reinforcement learning and multi-task learning consisting of 50 distinct robotic

Modular Deep Reinforcement Learning framework in PyTorch. Companion library of the book "Foundations of Deep Reinforcement Learning".

SLM Lab Modular Deep Reinforcement Learning framework in PyTorch. Documentation: https://slm-lab.gitbook.io/slm-lab/ BeamRider Breakout KungFuMaster M