Super-BPD for Fast Image Segmentation (CVPR 2020)

Introduction

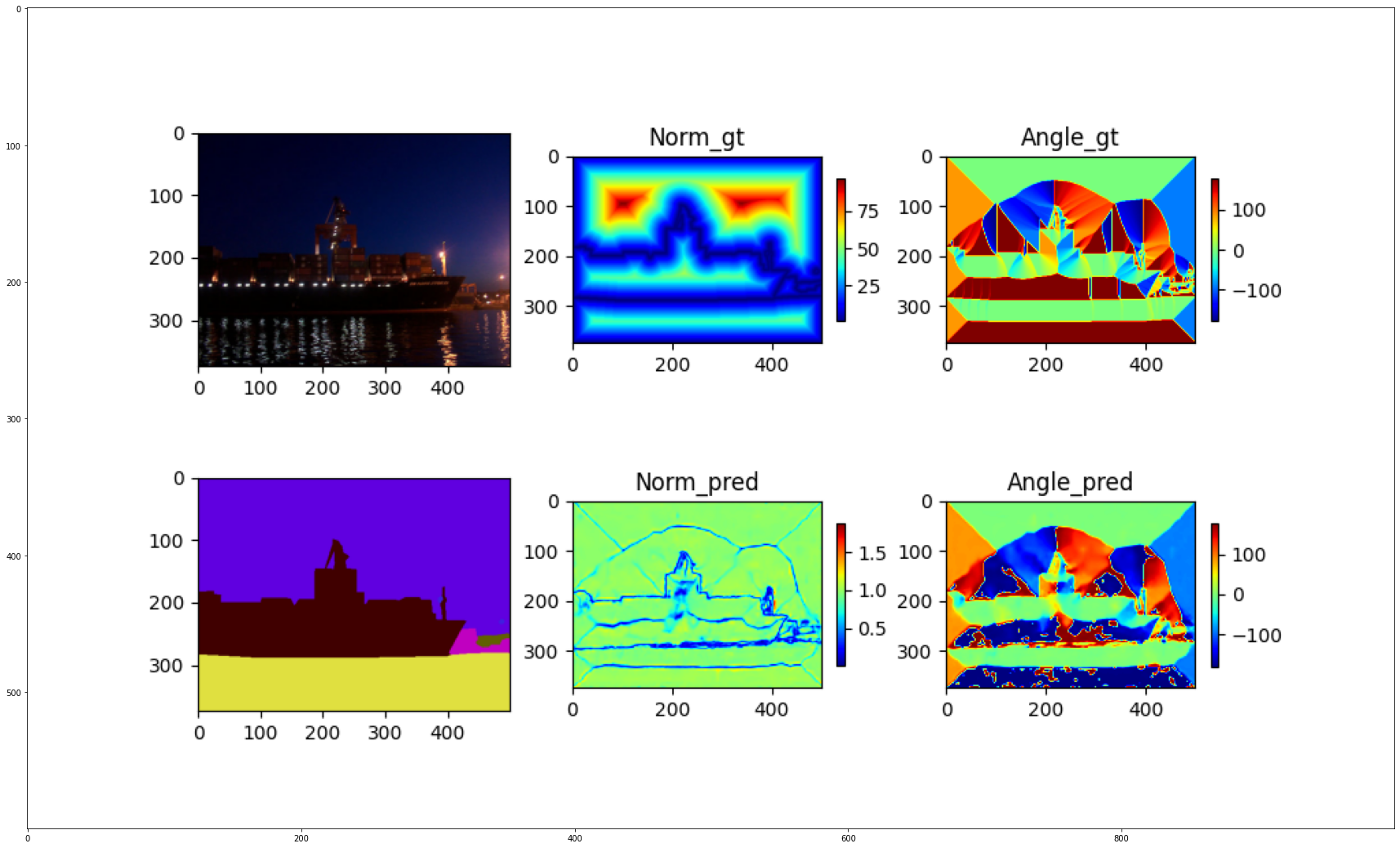

We propose direction-based super-BPD, an alternative to superpixels, for fast generic image segmentation, achieving state-of-the-art real-time results.

Citation

Please cite the related work in your publications if it helps your research:

@InProceedings{Wan_2020_CVPR,
  author = {Wan, Jianqiang and Liu, Yang and Wei, Donglai and Bai, Xiang and Xu, Yongchao},
  title = {Super-BPD: Super Boundary-to-Pixel Direction for Fast Image Segmentation},
  booktitle = {The IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  month = {June},
  year = {2020}
}

Prerequisite

- pytorch >= 1.3.0

- g++ 7
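The two prerequisites above can be checked before compiling anything. The following is a minimal sketch, not part of the repository: the helper names `parse_version`, `version_at_least`, and `check_environment` are hypothetical, and it assumes `g++` is on the PATH and that "g++ 7" means major version 7.

```python
import re
import shutil
import subprocess


def parse_version(text):
    """Return the leading numeric components of a version string as a tuple of ints."""
    match = re.match(r"(\d+(?:\.\d+)*)", text.strip())
    if match is None:
        raise ValueError(f"no version number found in {text!r}")
    return tuple(int(part) for part in match.group(1).split("."))


def version_at_least(found, required):
    """True if `found` (e.g. '1.3.0+cu100') is at least `required` (e.g. '1.3.0')."""
    a, b = parse_version(found), parse_version(required)
    # Pad with zeros so that '1.3' and '1.3.0' compare as equal.
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


def check_environment():
    """Print whether PyTorch >= 1.3.0 and a g++ with major version 7 are available."""
    try:
        import torch
        ok = version_at_least(torch.__version__, "1.3.0")
        print(f"pytorch {torch.__version__}: {'ok' if ok else 'too old'}")
    except ImportError:
        print("pytorch: not installed")

    gxx = shutil.which("g++")
    if gxx is None:
        print("g++: not found on PATH")
        return
    # `g++ -dumpversion` prints the compiler version, e.g. "7" or "7.5.0".
    out = subprocess.run([gxx, "-dumpversion"], capture_output=True, text=True).stdout
    try:
        major = parse_version(out)[0]
    except ValueError:
        print("g++: could not read version")
        return
    print(f"g++ {out.strip()}: {'ok' if major == 7 else 'differs from the listed g++ 7'}")


if __name__ == "__main__":
    check_environment()
```

A different g++ major version may still work; the script only reports the difference from what is listed here.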

Dataset

- Download the BSDS500 & PascalContext datasets, and unzip them into the Super-BPD/ folder.

Testing

- Compile the CUDA code for post-processing.

cd post_process

python setup.py install

- Download the pre-trained PascalContext model and put it in the saved folder.

- Test the model; the results will be saved in the test_pred_flux/PascalContext folder.

- SEISM is used for evaluation of image segmentation.
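After the test step, it can be useful to confirm that results were actually written before running an evaluation. The sketch below is an illustration only: `summarize_predictions` is a hypothetical helper, and because this README does not state the output file format, it simply counts whatever files it finds by extension.

```python
from pathlib import Path


def summarize_predictions(pred_dir="test_pred_flux/PascalContext"):
    """Count the files under `pred_dir`, grouped by file extension."""
    root = Path(pred_dir)
    if not root.is_dir():
        raise FileNotFoundError(f"{root} does not exist; run the test step first")
    counts = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            key = path.suffix or "<none>"  # files without an extension
            counts[key] = counts.get(key, 0) + 1
    return counts


if __name__ == "__main__":
    try:
        print(summarize_predictions())
    except FileNotFoundError as err:
        print(err)
```

An empty dictionary means the folder exists but contains no files, which usually indicates that the test run did not complete.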

Training

- Download the VGG-16 pretrained model, then start training:

python train.py --dataset PascalContext