Label Studio for Hugging Face's Transformers


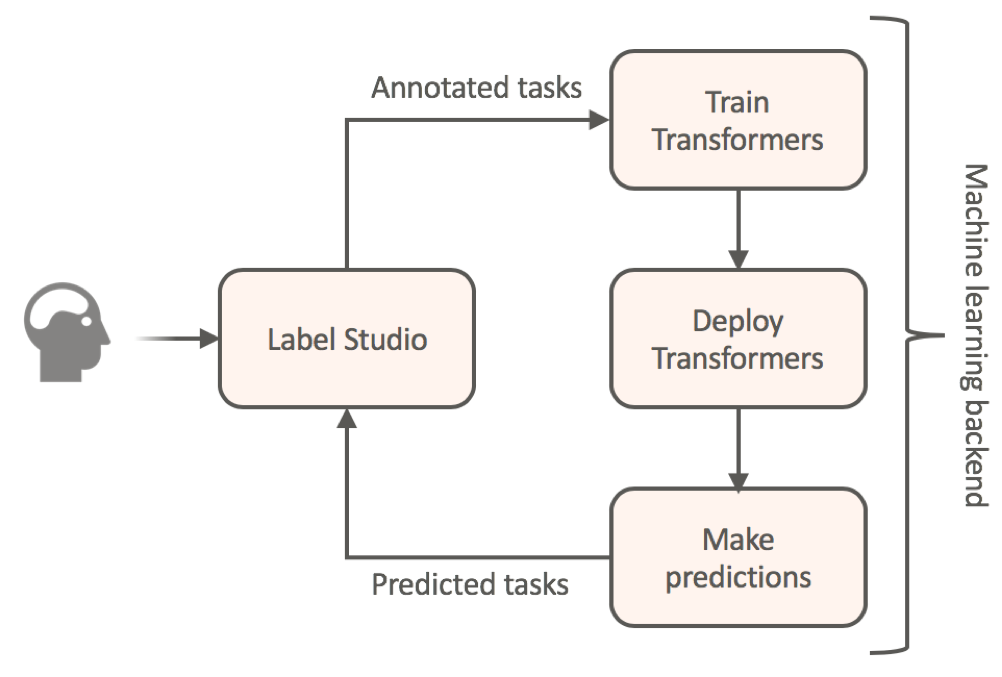

Transfer learning for NLP models by annotating your textual data without any additional coding.

This package provides a ready-to-use container that links together:

- Label Studio as annotation frontend

- Hugging Face's transformers as machine learning backend for NLP

Quick Usage

Install Label Studio and other dependencies

pip install -r requirements.txt

Create ML backend with BERT classifier

label-studio-ml init my-ml-backend --script models/bert_classifier.py

cp models/utils.py my-ml-backend/utils.py

# Start ML backend at http://localhost:9090

label-studio-ml start my-ml-backend

# Start Label Studio in a new terminal with the same Python environment

label-studio start

- Create a project with Choices and Text tags in the labeling config.

- Connect the ML backend in the Project settings with http://localhost:9090
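For illustration, a labeling config with Choices and Text tags might look like the one embedded in the sketch below. The tag names (`text`, `sentiment`), the `$text` task key, and the choice values are placeholders, not taken from this repository, and `check_config` is a hypothetical helper that only checks that the config is well-formed XML and that the Choices tag points at the Text tag.

```python
import xml.etree.ElementTree as ET

# Hypothetical labeling config for text classification.
# "text", "sentiment", "$text" and the choice values are placeholders.
CLASSIFICATION_CONFIG = """
<View>
  <Text name="text" value="$text"/>
  <Choices name="sentiment" toName="text" choice="single">
    <Choice value="Positive"/>
    <Choice value="Negative"/>
  </Choices>
</View>
"""


def check_config(config, control_tag):
    """Parse the config and return the label values under the control tag."""
    root = ET.fromstring(config.strip())
    text_tag = root.find(".//Text")
    control = root.find(".//" + control_tag)
    if text_tag is None or control is None:
        raise ValueError("config needs both a Text and a %s tag" % control_tag)
    if control.get("toName") != text_tag.get("name"):
        raise ValueError("toName must match the name of the Text tag")
    # Each child element (Choice or Label) carries one class name.
    return [child.get("value") for child in control]


if __name__ == "__main__":
    print(check_config(CLASSIFICATION_CONFIG, "Choices"))
```

Paste the XML part into the project's labeling config; the Python wrapper is only a quick local sanity check.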

Create ML backend with BERT named entity recognizer

label-studio-ml init my-ml-backend --script models/ner.py

cp models/utils.py my-ml-backend/utils.py

# Start ML backend at http://localhost:9090

label-studio-ml start my-ml-backend

# Start Label Studio in a new terminal with the same Python environment

label-studio start

- Create a project with Labels and Text tags in the labeling config.

- Connect the ML backend in the Project settings with http://localhost:9090
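As a rough sketch of the named entity setup, the snippet below embeds a possible labeling config with Labels and Text tags and builds one span region in the shape Label Studio generally uses for `labels` results (character offsets `start` and `end`). The tag names, the entity labels, and the `make_span` helper are assumptions made for this example; the output actually produced by models/ner.py may differ.

```python
import xml.etree.ElementTree as ET

# Hypothetical labeling config for named entity recognition.
# "label", "text", "$text" and the entity names are placeholders.
NER_CONFIG = """
<View>
  <Labels name="label" toName="text">
    <Label value="Person"/>
    <Label value="Organization"/>
  </Labels>
  <Text name="text" value="$text"/>
</View>
"""


def make_span(text, substring, label, config=NER_CONFIG):
    """Build one span region for the first occurrence of substring in text."""
    labels_tag = ET.fromstring(config.strip()).find(".//Labels")
    allowed = [child.get("value") for child in labels_tag]
    if label not in allowed:
        raise ValueError("label %r is not declared in the config" % label)
    start = text.index(substring)  # raises ValueError if substring is absent
    return {
        "from_name": labels_tag.get("name"),
        "to_name": labels_tag.get("toName"),
        "type": "labels",
        "value": {
            "start": start,
            "end": start + len(substring),
            "text": substring,
            "labels": [label],
        },
    }


if __name__ == "__main__":
    print(make_span("Heartex builds Label Studio", "Heartex", "Organization"))
```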

Training and inference

The browser opens at http://localhost:8080. Upload your data on the Import page, then annotate it on the Labeling page. Once you've annotated a sufficient amount of data, go to the Model page and press the Start Training button. When training finishes, the model automatically starts serving predictions to Label Studio, and you'll find all model checkpoints inside the my-ml-backend/ directory.
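One way to prepare data for the Import page is a JSON file containing a list of tasks, each a small object whose key matches the variable used in the labeling config. The sketch below assumes the config reads `$text`, so every task has a `text` key; the `write_tasks` helper and the `tasks.json` file name are hypothetical.

```python
import json
from pathlib import Path


def write_tasks(texts, path="tasks.json"):
    """Write non-empty texts as a JSON list of {"text": ...} tasks."""
    # The "text" key is an assumption: it must match value="$text" in the config.
    tasks = [{"text": t.strip()} for t in texts if t.strip()]
    Path(path).write_text(
        json.dumps(tasks, ensure_ascii=False, indent=2), encoding="utf-8"
    )
    return tasks


if __name__ == "__main__":
    samples = [
        "The battery lasts all day.",
        "",  # blank entries are skipped
        "The screen cracked after a week.",
    ]
    written = write_tasks(samples)
    print("wrote %d tasks" % len(written))
```

The resulting file can then be uploaded on the Import page before annotating.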

See the Label Studio documentation to read more about how to use the Machine Learning backend and build Human-in-the-Loop pipelines with Label Studio.

License

This software is licensed under the Apache 2.0 LICENSE © Heartex, 2020.