fds is a tool for Data Scientists made by DAGsHub to version control data and code at once.

Fast Data Science, AKA fds, is a CLI for Data Scientists to version control data and code at once, by conveniently wrapping git and dvc

Tuplex is a parallel big data processing framework that runs data science pipelines written in Python at the speed of compiled code

Tuplex is a parallel big data processing framework that runs data science pipelines written in Python at the speed of compiled code. Tuplex has similar Python APIs to Apache Spark or Dask, but rather than invoking the Python interpreter, Tuplex generates optimized LLVM bytecode for the given pipeline and input data set.

A data parser for the internal syncing data format used by Fog of World.

A data parser for the internal syncing data format used by Fog of World. The parser is not designed to be a well-coded library with good performance, it is more like a demo for showing the data structure.

Fancy data functions that will make your life as a data scientist easier.

WhiteBox Utilities Toolkit: Tools to make your life easier Fancy data functions that will make your life as a data scientist easier. Installing To ins

A Big Data ETL project in PySpark on the historical NYC Taxi Rides data

Processing NYC Taxi Data using PySpark ETL pipeline Description This is an project to extract, transform, and load large amount of data from NYC Taxi

Utilize data analytics skills to solve real-world business problems using Humana’s big data

Humana-Mays-2021-HealthCare-Analytics-Case-Competition- The goal of the project is to utilize data analytics skills to solve real-world business probl

Python data processing, analysis, visualization, and data operations

Python This is a Python data processing, analysis, visualization and data operations of the source code warehouse, book ISBN: 9787115527592 Descriptio

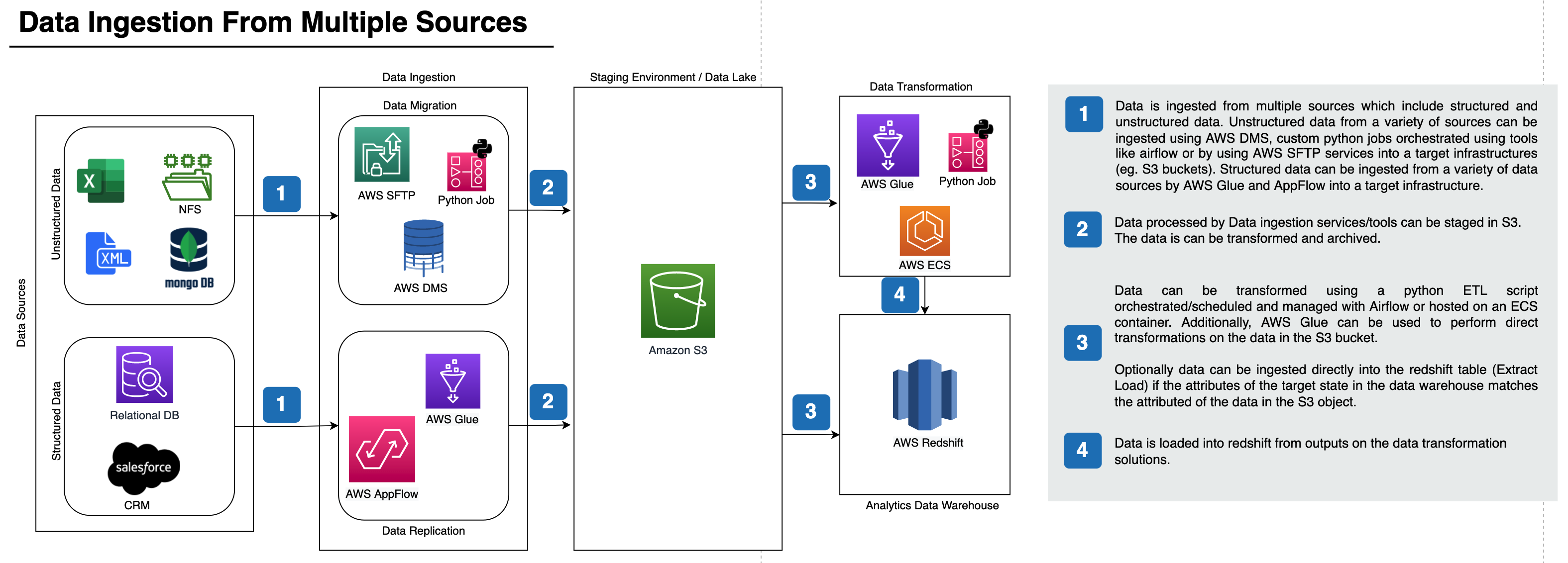

PrimaryBid - Transform application Lifecycle Data and Design and ETL pipeline architecture for ingesting data from multiple sources to redshift

Transform application Lifecycle Data and Design and ETL pipeline architecture for ingesting data from multiple sources to redshift This project is composed of two parts: Part1 and Part2