PL-LOW

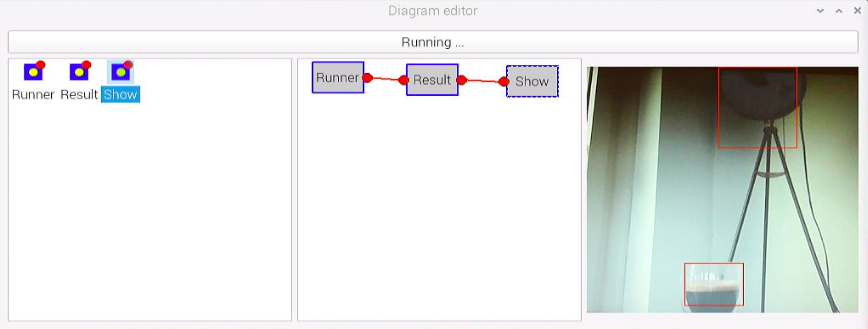

PL-LOW is a lightweighting pipeline for high-performance deep learning models. It progressively compresses deep neural networks so that they can run on low-power systems with limited computational resources, such as mobile and embedded devices.

PL-LOW comprises the following three lightweighting techniques, which reduce a deep learning model's size, the amount of computation required for inference, and its memory usage.

- Parameter lightweighting that maintains the model's high expressive power.

- Knowledge distillation that effectively learns high-level information.

- Lightweight inference for fast computation and high accuracy.
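To make the three ideas above concrete, here is a minimal pure-Python sketch. It uses generic textbook stand-ins (magnitude pruning, a temperature-softened KL distillation loss, and symmetric int8 quantization) and is not PL-LOW's actual implementation. The function names, the sparsity level, and the temperature are all assumptions chosen for illustration.

```python
import math

# NOTE: illustrative stand-ins only; PL-LOW's real methods may differ.

def magnitude_prune(weights, sparsity):
    """Parameter lightweighting: zero the smallest-magnitude fraction of weights."""
    k = int(len(weights) * sparsity)
    if k == 0:
        return list(weights)
    threshold = sorted(abs(w) for w in weights)[k - 1]
    pruned, removed = [], 0
    for w in weights:
        if abs(w) <= threshold and removed < k:
            pruned.append(0.0)  # drop this weight
            removed += 1
        else:
            pruned.append(w)
    return pruned

def softmax(logits, temperature=1.0):
    """Numerically stable softmax over temperature-scaled logits."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """Knowledge distillation: KL(teacher || student) on softened outputs, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2

def quantize_int8(weights):
    """Lightweight inference: symmetric per-tensor int8 quantization."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Map int8 values back to approximate float weights."""
    return [v * scale for v in q]

# Hypothetical toy weights and logits, chosen only for demonstration.
weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
pruned = magnitude_prune(weights, sparsity=0.5)
q_weights, scale = quantize_int8(pruned)
restored = dequantize(q_weights, scale)
loss = distillation_loss([1.0, 0.5, -0.2], [2.0, 0.1, -1.0])
```

In this sketch the steps are applied one after another (prune, then quantize), while the distillation loss would be minimized during training of the smaller model. Each step trades a small amount of accuracy for a smaller or faster model, which is why a gradual pipeline can be easier to tune than a single aggressive compression step.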

Authors

Principal Investigator (PI)

- Professor Hwanjo Yu ([email protected])

License

The code is licensed under the MIT License.