Release Highlights

Ray 2.0 is an exciting release with enhancements to all libraries in the Ray ecosystem. With this major release, we take strides towards our goal of making distributed computing scalable, unified, and open.

Towards these goals, Ray 2.0 features new capabilities for unifying the machine learning (ML) ecosystem, improving Ray's production support, and making it easier than ever for ML practitioners to use Ray's libraries.

Highlights:

- Ray AIR, a scalable and unified toolkit for ML applications, is now in Beta.

- Ray now natively supports shuffling 100 TB or more of data with the Ray Datasets library.

- KubeRay, a toolkit for running Ray on Kubernetes, is now in Beta. This replaces the legacy Python-based Ray operator.

- Ray Serve’s Deployment Graph API is a new and easier way to build, test, and deploy an inference graph of deployments. It is released as Beta in 2.0.

A migration guide for all the different libraries can be found here: Ray 2.0 Migration Guide.

Ray Libraries

Ray AIR

Ray AIR is now in beta. Ray AIR builds upon Ray’s libraries to enable end-to-end machine learning workflows and applications on Ray. You can install all dependencies needed for Ray AIR via pip install -U "ray[air]".

🎉 New Features:

- Predictors:

  - BatchPredictors now have support for scalable inference on GPUs.

  - All Predictors can now be constructed from pre-trained models, allowing you to easily scale batch inference with trained models from common ML frameworks.

  - ray.ml.predictors has been moved to the Ray Train namespace (ray.train).

- Preprocessing: New preprocessors and API changes on Ray Datasets now make feature processing easier to do on AIR. See the Ray Data release notes for more details.

- New features for Datasets/Train/Tune/Serve can be found in the corresponding library release notes for more details.

💫 Enhancements:

- Major package refactoring is included in this release.

  - ray.ml is renamed to ray.air.

  - ray.ml.preprocessors have been moved to ray.data (ray.data.preprocessors).

  - train_test_split is now a new method of ray.data.Dataset (#27065).

  - ray.ml.trainers have been moved to ray.train (#25570).

  - ray.ml.predictors has been moved to ray.train.

  - ray.ml.config has been moved to ray.air.config (#25712).

- Checkpoints are now framework-specific: each Trainer generates its own framework-specific Checkpoint class. See Ray Train for more details.

- ModelWrappers have been renamed to PredictorDeployments.

- API stability annotations have been added (#25485)

- Train/Tune now have the same reporting and checkpointing API -- see the Train notes for more details (#26303)

- ScalingConfig is now a dataclass instead of a dict.

- Many AIR examples, benchmarks, and documentation pages were added in this release. The Ray AIR documentation covers breadth of usage (end-to-end workflows across different libraries), while library-specific documentation covers depth (specific features of a specific library).

🔨 Fixes:

- Many documentation examples were previously untested. This release fixes those examples and adds them to the CI.

- Predictors:

  - Torch/Tensorflow Predictors have correctness fixes (#25199, #25190, #25138, #25136)

  - Update KerasCallback to work with TensorflowPredictor (#26089)

  - Add streaming BatchPredictor support (#25693)

  - Add predict_pandas implementation (#25534)

  - Add _predict_arrow interface for Predictor (#25579)

  - Allow creating Predictor directly from a UDF (#26603)

  - Execute GPU inference in a separate stage in BatchPredictor (#26616, #27232, #27398)

  - Accessors for preprocessor in Predictor class (#26600)

  - [AIR] Predictor call_model API for unsupported output types (#26845)

Ray Data Processing

🎉 New Features:

- Add ImageFolderDatasource (#24641)

- Add the NumPy batch format for batch mapping and batch consumption (#24870)

- Add iter_torch_batches() and iter_tf_batches() APIs (#26689)

- Add local shuffling API to iterators (#26094)

- Add drop_columns() API (#26200)

- Add randomize_block_order() API (#25568)

- Add random_sample() API (#24492)

- Add support for len(Dataset) (#25152)

- Add UDF passthrough args to map_batches() (#25613)

- Add Concatenator preprocessor (#26526)

- Change range_arrow() API to range_table() (#24704)
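The local shuffling API (#26094) trades shuffle quality for memory. Instead of a full global shuffle, rows are randomized within a bounded buffer as they stream past. The sketch below is a plain-Python illustration of that buffer-based idea, not Ray Datasets' implementation. The function name local_shuffle and its parameters are hypothetical.

```python
import random
from typing import Iterable, Iterator, List, Optional, TypeVar

T = TypeVar("T")


def local_shuffle(
    items: Iterable[T], buffer_size: int, seed: Optional[int] = None
) -> Iterator[T]:
    """Yield `items` in a locally shuffled order using a bounded buffer.

    At most `buffer_size` items are held in memory at once, so this is a
    partial (local) shuffle, not a full global shuffle.
    """
    if buffer_size < 1:
        raise ValueError("buffer_size must be >= 1")
    rng = random.Random(seed)
    buffer: List[T] = []
    for item in items:
        buffer.append(item)
        if len(buffer) >= buffer_size:
            # Move a randomly chosen element to the end, then pop it in O(1).
            idx = rng.randrange(len(buffer))
            buffer[idx], buffer[-1] = buffer[-1], buffer[idx]
            yield buffer.pop()
    # Input exhausted: emit the remaining buffered items in random order.
    rng.shuffle(buffer)
    yield from buffer


# Usage: stream 20 rows through a 5-row shuffle buffer.
print(list(local_shuffle(range(20), buffer_size=5, seed=42)))
```

With buffer_size=1 the order is unchanged. Larger buffers mix rows over a wider window, but they hold more rows in memory. An item can move at most buffer_size - 1 positions earlier than its input position.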

💫 Enhancements:

- Autodetect dataset parallelism based on available resources and data size (#25883)

- Use polars for sorting (#25454)

- Support tensor columns in to_tf() and to_torch() (#24752)

- Add explicit resource allocation option via a top-level scheduling strategy (#24438)

- Spread actor pool actors evenly across the cluster by default (#25705)

- Add ray_remote_args to read_text() (#23764)

- Add max_epoch argument to iter_epochs() (#25263)

- Add Pandas-native groupby and sorting (#26313)

- Support push-based shuffle in groupby operations (#25910)

- More aggressive memory releasing for Dataset and DatasetPipeline (#25461, #25820, #26902, #26650)

- Automatically cast tensor columns on Pandas UDF outputs (#26924)

- Better error messages when reading from S3 (#26619, #26669, #26789)

- Make dataset splitting more efficient and stable (#26641, #26768, #26778)

- Use sampling to estimate in-memory data size for Parquet data source (#26868)

- De-experimentalized lazy execution mode (#26934)
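The Parquet change (#26868) estimates in-memory size from a sample rather than by measuring every row. A minimal sketch of that general technique follows. It is not Ray's Parquet datasource code. Using a row's pickled byte count as its "in-memory size" is an assumption made only to keep the example self-contained, and both function names are hypothetical.

```python
import pickle
import random
from typing import Any, Callable, Optional, Sequence


def pickled_size(row: Any) -> int:
    """Stand-in size metric (assumption): the number of bytes in the row's pickle."""
    return len(pickle.dumps(row))


def estimate_in_memory_size(
    rows: Sequence[Any],
    sample_size: int = 5,
    size_of: Callable[[Any], int] = pickled_size,
    seed: Optional[int] = None,
) -> int:
    """Estimate the total size of `rows` by measuring only a random sample."""
    if sample_size < 1:
        raise ValueError("sample_size must be >= 1")
    n = len(rows)
    if n == 0:
        return 0
    k = min(sample_size, n)
    sampled = random.Random(seed).sample(range(n), k)
    avg_row_size = sum(size_of(rows[i]) for i in sampled) / k
    # Extrapolate the sampled average to the full row count.
    return round(avg_row_size * n)
```

The cost is k size measurements instead of n. The estimate is exact only when rows are uniform, and it becomes less reliable as row sizes vary.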

🔨 Fixes:

- Fix pipeline pre-repeat caching (#25265)

- Fix stats construction for from_*() APIs (#25601)

- Fix label tensor squeezing in to_tf() (#25553)

- Fix stage fusion between equivalent resource args (fixes BatchPredictor) (#25706)

- Fix tensor extension string formatting (repr) (#25768)

- Workaround for unserializable Arrow JSON ReadOptions (#25821)

- Make ActorPoolStrategy kill pool of actors if exception is raised (#25803)

- Fix max number of actors for default actor pool strategy (#26266)

- Fix byte size calculation for non-trivial tensors (#25264)

Ray Train

Ray Train has received a major expansion of scope with Ray 2.0.

In particular, the Ray Train module now contains:

- Trainers

- Predictors

- Checkpoints

These cover common ML frameworks, including PyTorch, TensorFlow, XGBoost, LightGBM, HuggingFace, and scikit-learn, and help provide end-to-end usage of Ray libraries in Ray AIR workflows.

🎉 New Features:

- The Trainer API is now deprecated in favor of the new Ray AIR Trainers API. Trainers for PyTorch, TensorFlow, Horovod, XGBoost, and LightGBM are now in Beta. (#25570)

- ML framework-specific Predictors have been moved into the ray.train namespace. This provides a streamlined API for offline and online inference of PyTorch, TensorFlow, XGBoost models, and more. (#25769, #26215, #26251, #26451, #26531, #26600, #26603, #26616, #26845)

- ML framework-specific checkpoints are introduced. Checkpoints are consumed by Predictors to load model weights and information. (#26777, #25940, #26532, #26534)

💫 Enhancements:

- Train and Tune now use the same reporting and checkpointing API (#24772, #25558)

- Add tunable ScalingConfig dataclass (#25712)

- Randomize block order by default to avoid hotspots (#25870)

- Improve checkpoint configurability and extend results (#25943)

- Improve prepare_data_loader to support multiple batch data types (#26386)

- Discard returns of train loops in Trainers (#26448)

- Clean up logs, reprs, warnings (#26259, #26906, #26988, #27228, #27519)
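Randomizing block order (#25870) changes which blocks are read first. It does not move the rows inside those blocks, so it is far cheaper than a full shuffle. The plain-Python sketch below illustrates that distinction. The function name shuffle_block_order is hypothetical, and this is not the Ray Train or Ray Datasets implementation.

```python
import random
from typing import List, Optional, Sequence, TypeVar

T = TypeVar("T")


def shuffle_block_order(
    blocks: Sequence[Sequence[T]], seed: Optional[int] = None
) -> List[Sequence[T]]:
    """Return the blocks in a random order, leaving each block's rows untouched.

    Only the list of block references is permuted. No row data is copied or
    moved between blocks, which is what makes this cheaper than a full shuffle.
    """
    order = list(blocks)  # shallow copy: the caller's sequence is not modified
    random.Random(seed).shuffle(order)
    return order


blocks = [[0, 1], [2, 3], [4, 5], [6, 7]]
print(shuffle_block_order(blocks, seed=0))  # rows stay paired within each block
```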

📖 Documentation:

- Update documentation to use new Train API (#25735)

- Update documentation to use session API (#26051, #26303)

- Add Trainer user guide and update Trainer docs (#27570, #27644, #27685)

- Add Predictor documentation (#25833)

- Replace to_torch with iter_torch_batches (#27656)

- Replace to_tf with iter_tf_batches (#27768)

- Minor doc fixes (#25773, #27955)

🏗 Architecture refactoring:

- Clean up ray.train package (#25566)

- Mark Trainer interfaces as Deprecated (#25573)

🔨 Fixes:

- An issue with GPU ID detection and assignment was fixed. (#26493)

- Fix AMP for models with a custom `__getstate__` method (#25335)

- Fix transformers example for multi-gpu (#24832)

- Fix ScalingConfig key validation (#25549)

- Fix ResourceChangingScheduler integration (#26307)

- Fix auto_transfer cuda device (#26819)

- Fix BatchPredictor.predict_pipelined not working with GPU stage (#27398)

- Remove rllib dependency from tensorflow_predictor (#27688)

Ray Tune

🎉 New Features:

- The Tuner API is the new way of running Ray Tune experiments. (#26987, #26961, #26931, #26884, #26930)

- Ray Tune and Ray Train now have the same API for reporting (#25558)

- Introduce tune.with_resources() to specify function trainable resources (#26830)

- Add Tune benchmark for AIR (#26763, #26564)

- Allow Tuner().restore() from cloud URIs (#26963)

- Add top-level imports for Tuner, TuneConfig, move CheckpointConfig (#26882)

- Add resume experiment options to Tuner.restore() (#26826)

- Add checkpoint_frequency/checkpoint_at_end arguments to CheckpointConfig (#26661)

- Add more config arguments to Tuner (#26656)

- Better error message for Tune nested tasks / actors (#25241)

- Allow iterators in tune.grid_search (#25220)

- Add get_dataframe() method to result grid, fix config flattening (#24686)
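Allowing iterators in tune.grid_search (#25220) matters because a generator can be consumed only once, while a grid search needs every value when it forms combinations. The following hedged sketch of grid expansion materializes each value collection once and then takes the Cartesian product. expand_grid is a hypothetical helper, not Tune's internal code.

```python
import itertools
from typing import Any, Dict, Iterable, List


def expand_grid(space: Dict[str, Iterable[Any]]) -> List[Dict[str, Any]]:
    """Expand a {param: values} search space into every combination.

    Each value collection is materialized with list() first, so one-shot
    iterators (generators, map objects) are consumed exactly once and still
    contribute all their values to the Cartesian product.
    """
    keys = list(space)
    value_lists = [list(space[k]) for k in keys]
    return [dict(zip(keys, combo)) for combo in itertools.product(*value_lists)]


# Usage: a generator-backed learning-rate grid crossed with a range of depths.
lrs = (10 ** -e for e in range(1, 3))  # one-shot generator: 0.1, 0.01
for config in expand_grid({"lr": lrs, "layers": range(1, 4)}):
    print(config)
```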

💫 Enhancements:

- Expose number of errored/terminated trials in ResultGrid (#26655)

- Remove fully_executed from Tune. (#25750)

- Exclude in remote storage upload (#25544)

- Add TempFileLock (#25408)

- Add annotations/set scope for Tune classes (#25077)

📖 Documentation:

- Improve Tune + Datasets documentation (#25389)

- Better navigation for Tune examples, minor fixes (#24733)

🏗 Architecture refactoring:

- Consolidate checkpoint manager 3: Ray Tune (#24430)

- Clean up ray.tune scope (remove stale objects in `__all__`) (#26829)

🔨 Fixes:

- Fix k8s release test + node-to-node syncing (#27365)

- Fix Tune custom syncer example (#27253)

- Fix tune_cloud_aws_durable_upload_rllib_* release tests (#27180)

- Fix test_tune (#26721)

- Larger head node for tune_scalability_network_overhead weekly test (#26742)

- Fix tune-sklearn notebook example (#26470)

- Fix reference to dataset_tune (#25402)

- Fix Tune-Pytorch-CIFAR notebook example (#26474)

- Fix documentation testing (#26409)

- Fix set_tune_experiment (#26298)

- Fix GRPC resource exhausted test for tune trainables (#24467)

Ray Serve

🎉 New Features:

- We are excited to introduce the 2.0 API, centered around a multi-model composition API, an operational API, and production stability. (#26310,#26507,#26217,#25932,#26374,#26901,#27058,#24549,#24616,#27479,#27576,#27433,#24306,#25651,#26682,#26521,#27194,#27206,#26804,#25575,#26574)

- Deployment Graph API is the new API for model composition. It provides a declarative layer on top of the 1.x deployment API to help you author performant inference pipelines easily. (#27417,#27420,#24754,#24435,#24630,#26573,#27349,#24404,#25424,#24418,#27815,#27844,#25453,#24629)

- We introduced a new Kubernetes-native way to deploy Ray Serve, along with a brand-new REST API to perform deployments, updates, and configuration. (#25935,#27063,#24814,#26093,#25213,#26588,#25073,#27000,#27444,#26578,#26652,#25610,#25502,#26096,#24265,#26177,#25861,#25691,#24839,#27498,#27561,#25862,#26347)

- Serve can now survive Ray GCS failure. The GCS used to be a single point of failure in Ray Serve's architecture; now, when the GCS goes down, Serve can continue to serve traffic. We encourage you to try out this feature and give us feedback! (#25633,#26107,#27608,#27763,#27771,#25478,#25637,#27526,#27674,#26753,#26797,#24560,#26685,#26734,#25987,#25091,#24934)

- Autoscaling has been promoted to stable. Additionally, we added support for scaling to zero. (#25770,#25733,#24892,#26393)

- The documentation has been revamped. Check it out at rayserve.org (#24414,#26211,#25786,#25936,#26029,#25830,#24760,#24871,#25243,#25390,#25646,#24657,#24713,#25270,#25808,#24693,#24736,#24524,#24690,#25494)

💫 Enhancements:

- Serve natively supports deploying predictors and checkpoints from Ray AI Runtime (#26026,#25003,#25537,#25609,#25962,#26494,#25688,#24512,#24417)

- Serve now supports scaling Gradio applications (#27560)

- Add the Java Client API, completing the alpha release of the Java API (#22726)

- Improved out-of-box performance by using uvicorn with uvloop (#25027)

RLlib

🎉 New Features:

- In 2.0, RLlib is introducing an object-oriented configuration API instead of using a Python dict for algorithm configuration (#24332, #24374, #24375, #24376, #24433, #24576, #24650, #24577, #24339, #24687, #24775, #24584, #24583, #24853, #25028, #25059, #25065, #25066, #25067, #25256, #25255, #25278, #25279)

- RLlib is introducing a Connectors API (alpha). Connectors are a new component that handles transformations on inputs and outputs of a given RL policy. (#25311, #25007, #25923, #25922, #25954, #26253, #26510, #26645, #26836, #26803, #26998, #27016)

- New improvements to off-policy estimators, including a new Doubly-Robust Off-Policy Estimator implementation (#24384, #25107, #25056, #25899, #25911, #26279, #26893)

- CRR Algorithm (#25459, #25667, #25905, #26142, #26304, #26770, #27161)

- Feature importance evaluation for offline RL (#26412)

- RE3 exploration algorithm TF2 framework support (#25221)

- Unified replay Buffer API (#24212, #24156, #24473, #24506, #24866, #24683, #25841, #25560, #26428)
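For reference, the doubly robust (DR) off-policy estimator combines a learned value model with importance sampling. The sketch below uses the textbook per-decision recursive form for a single logged episode: V_t = V(s_t) + rho_t * (r_t + gamma * V_{t+1} - Q(s_t, a_t)), where rho_t = pi_target(a_t|s_t) / pi_behavior(a_t|s_t). It is not RLlib's estimator class, and the function and argument names are hypothetical.

```python
from typing import Sequence


def doubly_robust_estimate(
    rewards: Sequence[float],
    behavior_probs: Sequence[float],
    target_probs: Sequence[float],
    q_values: Sequence[float],
    v_values: Sequence[float],
    gamma: float = 0.99,
) -> float:
    """Per-decision doubly robust value estimate for one logged episode.

    q_values[t] approximates Q(s_t, a_t) and v_values[t] approximates V(s_t)
    under the target policy; both come from a learned model. The importance
    ratio rho_t corrects the model's error using the logged rewards.
    """
    n = len(rewards)
    lengths = {len(behavior_probs), len(target_probs), len(q_values), len(v_values)}
    if lengths != {n}:
        raise ValueError("all inputs must have the same length")
    v_dr = 0.0  # value estimate beyond the final step
    # Recurse backwards: V_t = V(s_t) + rho_t * (r_t + gamma * V_{t+1} - Q(s_t, a_t))
    for t in reversed(range(n)):
        rho = target_probs[t] / behavior_probs[t]
        v_dr = v_values[t] + rho * (rewards[t] + gamma * v_dr - q_values[t])
    return v_dr
```

If every rho_t equals 1 and v_values[t] equals q_values[t], the model terms cancel and the estimate is exactly the discounted return of the logged episode.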

💫 Enhancements:

- Improvements to RolloutWorker / Env fault tolerance (#24967, #26134, #26276, #26809)

- Upgrade gym to 0.23 (#24171), Bump gym dep to 0.24 (#26190)

- Agents have been renamed to Algorithms (#24511, #24516, #24739, #24797, #24841, #24896, #25014, #24579, #25314, #25346, #25366, #25539, #25869)

- Execution Plan API is now deprecated. Training step function API is the new way of specifying RLlib algorithms (#23454, #24488, #2450, #24212, #24165, #24545, #24507, #25076, #25624, #25924, #25856, #25851, #27344, #24423)

- Policy V2 subclassing implementation migration (#24742, #24746, #24914, #25117, #25203, #25078, #25254, #25384, #25585, #25871, #25956, #26054)

- Allow passing **kwargs to action distribution. (#24692)

- Deprecation: Replace remaining evaluation_num_episodes with evaluation_duration. (#26000)
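For code that still builds RLlib configs as plain dicts, the evaluation_num_episodes deprecation (#26000) amounts to a key rename. The helper below is a hypothetical sketch. It assumes that RLlib's companion evaluation_duration_unit setting accepts "episodes", so verify the key names against the RLlib configuration docs for your version.

```python
from typing import Any, Dict


def migrate_evaluation_config(config: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of a dict-style config with the deprecated
    `evaluation_num_episodes` key replaced by `evaluation_duration`.

    Hypothetical helper; the input dict is not modified.
    """
    new_config = dict(config)
    if "evaluation_num_episodes" not in new_config:
        return new_config
    if "evaluation_duration" in new_config:
        raise ValueError("both evaluation_num_episodes and evaluation_duration are set")
    new_config["evaluation_duration"] = new_config.pop("evaluation_num_episodes")
    # The old key always counted episodes, so make that unit explicit (assumed key).
    new_config["evaluation_duration_unit"] = "episodes"
    return new_config


print(migrate_evaluation_config({"evaluation_interval": 1, "evaluation_num_episodes": 10}))
```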

🔨 Fixes:

- Multi-GPU learner thread key error in MA-scenarios (#24382)

- Add release learning tests for SlateQ (#24429)

- APEX-DQN replay buffer config validation fix. (#24588)

- Automatic sequencing in function timeslice_along_seq_lens_with_overlap (#24561)

- Policy Server/Client metrics reporting fix (#24783)

- Re-establish dashboard performance tests. (#24728)

- Bandit tf2 fix (+ add tf2 to test cases). (#24908)

- Fix estimated buffer size in replay buffers. (#24848)

- Fix RNNSAC example failing on CI + fixes for recurrent models for other Q Learning Algos. (#24923)

- Curiosity bug fix. (#24880)

- Auto-infer different agents' spaces in multi-agent env. (#24649)

- Fix the bug “WorkerSet.stop() will raise error if self._local_worker is None (e.g. in evaluation worker sets)”. (#25332)

- Fix Policy global timesteps being off by init sample batch size. (#25349)

- Disambiguate timestep fragment storage unit in replay buffers. (#25242)

- Fix the bug where on GPU, sample_batch.to_device() only converts the device and does not convert float64 to float32. (#25460)

- Fix faulty usage of get_filter_config in ComplexInputNetwork (#25493)

- Custom resources per worker should get added to default_resource_request (#24463)

- Better default values for training_intensity and target_network_update_freq for R2D2. (#25510)

- Fix multi agent environment checks for observations that contain only some agents' obs each step. (#25506)

- Fixes PyTorch grad clipping logic and adds grad clipping to QMIX. (#25584)

- Discussion 6432: Automatic train_batch_size calculation fix. (#25621)

- Added meaningful error for multi-agent failure of SampleCollector in case no agent steps in episode. (#25596)

- Replace torch.range with torch.arange. (#25640)

- Fix the bug where there is no gradient clipping in QMix. (#25656)

- Fix sample batch concatenation. (#25572)

- Fix action_sampler_fn call in TorchPolicyV2 (obs_batch instead of input_dict arg). (#25877)

- Fixes logging of all of RLlib's Algorithm names as warning messages. (#25840)

- IMPALA/APPO multi-agent mix-in-buffer fixes (plus MA learning tests). (#25848)

- Move offline input into replay buffer using rollout ops in CQL. (#25629)

- Include SampleBatch.T column in all collected batches. (#25926)

- Add timeout to filter synchronization. (#25959)

- SimpleQ PyTorch Multi GPU fix (#26109)

- IMPALA and APPO metrics fixes; remove deprecated async_parallel_requests utility. (#26117)

- Added 'episode.hist_data' to the 'atari_metrics' to ensure that the user's custom metrics are kept in postprocessing when using Atari environments. (#25292)

- Make the dataset and json readers batchable (#26055)

- Fix Issue 25696: Output writers not working w/ multiple workers. (#25722)

- Fix all the erroneous on_trainer_init warnings. (#26433)

- In env check, step only expected agents. (#26425)

- Make DQN update_target use only trainable variables. (#25226)

- Fix FQE Policy call (#26671)

- Make queue placement ops blocking (#26581)

- Fix memory leak in APEX_DQN (#26691)

- Fix MultiDiscrete not being one-hotted correctly (#26558)

- Make IOContext optional for DatasetReader (#26694)

- Make sure we step() after adding init_obs. (#26827)

- Fix ModelCatalog for nested complex inputs (#25620)

- Use compressed observations in tuned examples that use replay buffers and image observations (#26735)

- Fix SampleBatch.split_by_episode to use dones if episode id is not available (#26492)

- Fix torch None conversion in torch_utils.py::convert_to_torch_tensor. (#26863)

- Unify gnorm mixin for tf and torch policies. (#26102)
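The MultiDiscrete fix (#26558) concerns how MultiDiscrete values are one-hot encoded. The notes do not describe the bug itself. For reference, the standard encoding concatenates one one-hot vector per sub-space. This plain-Python sketch uses a hypothetical function name and is not RLlib's preprocessing code.

```python
from typing import List, Sequence


def one_hot_multi_discrete(value: Sequence[int], nvec: Sequence[int]) -> List[float]:
    """One-hot encode a MultiDiscrete value as concatenated per-dimension one-hots.

    A MultiDiscrete space with nvec=[3, 2] has two sub-spaces of sizes 3 and 2,
    so its encoding has 3 + 2 = 5 entries with exactly one 1.0 per sub-space.
    """
    if len(value) != len(nvec):
        raise ValueError("value and nvec must have the same length")
    encoded: List[float] = []
    for v, size in zip(value, nvec):
        if not 0 <= v < size:
            raise ValueError(f"value {v} out of range for sub-space of size {size}")
        block = [0.0] * size
        block[v] = 1.0
        encoded.extend(block)
    return encoded


print(one_hot_multi_discrete([2, 0], [3, 2]))
```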

Ray Workflows

🎉 New Features:

- Support Ray Client (#26702)

- Support HTTP events (#26010)

- Support retry_exceptions (#26913)

- Support queuing in workflow (#24697)

- Make status indexed (#24767)

🔨 Fixes:

- Push logs to drivers correctly (#24490)

- Make resume free of side effects (#26918)

- Align max_retries with Ray (#26350)

🏗 Architecture refactoring:

- Rewrite workflow execution engine (#25618)

- Simplify the resume flow (#24594)

- Deprecate step and use bind (#26232)

- Deprecate virtual actor (#25394)

- Refactor the exception processing (#26398)

Ray Core and Ray Clusters

Ray Core

🎉 New Features:

- Ray State API is now in alpha. You can access live information about tasks, actors, objects, placement groups, and more through the Ray CLI (summary / list / get) and the Python SDK. See the Ray State API documentation for more information.

- Support generators for tasks with multiple return values (#25247)

- Support GCS fault tolerance (#24764, #24813, #24887, #25131, #25126, #24747, #25789, #25975, #25994, #26405, #26421, #26919)

💫 Enhancements:

- Allow failing new tasks immediately while the actor is restarting (#22818)

- Add more accurate worker exit (#24468)

- Allow user to override global default for max_retries (#25189)

- Export additional metrics for workers and Raylet memory (#25418)

- Push message to driver when a Raylet dies (#25516)

- Out of Disk prevention (#25370)

- ray.init defaults to an existing Ray instance if there is one (#26678)

- Reconstruct manually freed objects (#27567)

🔨 Fixes:

- Fix a task cancel hanging bug (#24369)

- Adjust worker OOM scores to prioritize the raylet during memory pressure (#24623)

- Fix pull manager deadlock due to object reconstruction (#24791)

- Fix bugs in data locality aware scheduling (#25092)

- Fix node affinity strategy when resource is empty (#25344)

- Fix object transfer resend protocol (#26349)

🏗 Architecture refactoring:

- Raylet and GCS schedulers share the same code (#23829)

- Remove multiple core workers in one process (#24147, #25159)

Ray Clusters

🎉 New Features:

- The KubeRay operator is now the preferred tool to run Ray on Kubernetes.

- Ray Autoscaler + KubeRay operator integration is now beta.

🔨 Fixes:

- Previously deprecated fields head_node, worker_nodes, head_node_type, default_worker_node_type, autoscaling_mode, and target_utilization_fraction have been removed. Check out the migration guide to learn how to migrate to the new versions.

Ray Client

🎉 New Features:

- Support for configuring request metadata for client gRPC (#24946)

💫 Enhancements:

- Remove 2 GiB size limit on remote function arguments (#24555)

🔨 Fixes:

- Fix excessive memory usage when submitting large remote arguments (#24477)

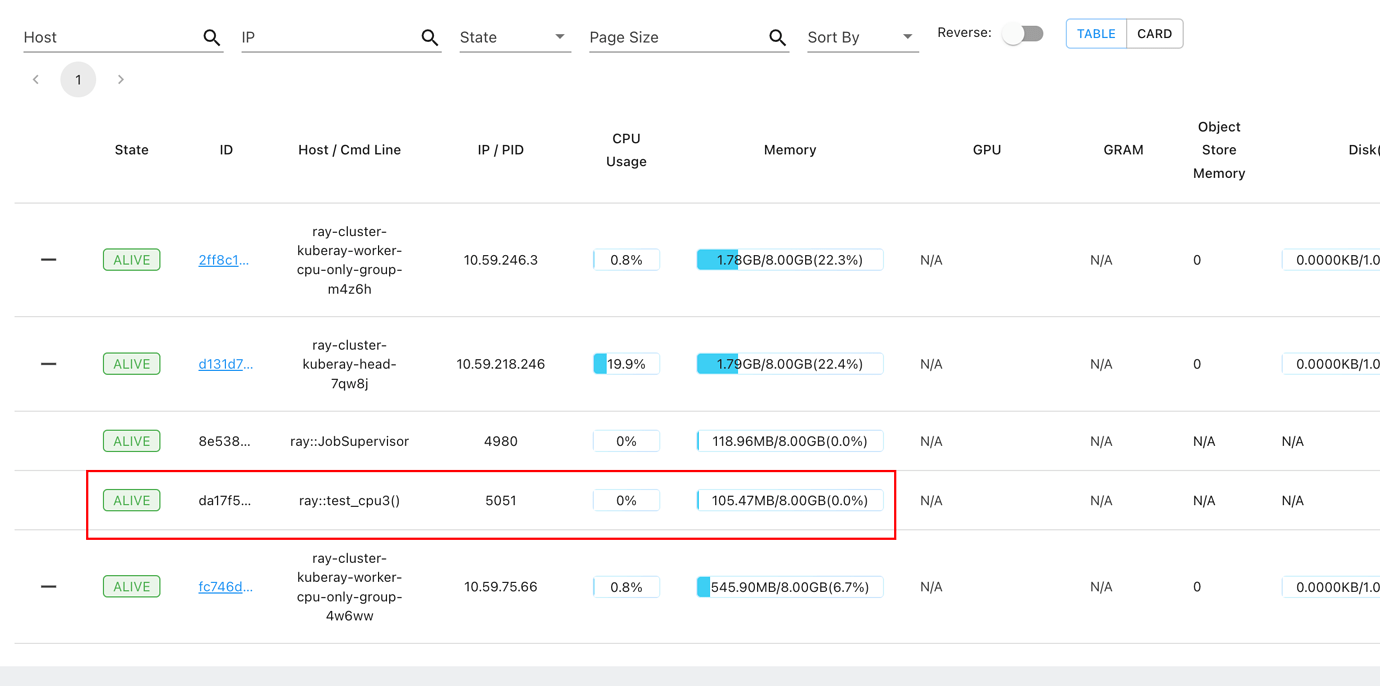

Dashboard

🎉 New Features:

- The new dashboard UI is now the default dashboard. Please leave any feedback about the dashboard on GitHub Issues or Discourse! You can still go to the legacy dashboard UI by clicking “Back to legacy dashboard”.

- The new dashboard UI now shows all Ray jobs. This includes jobs submitted via the job submission API and jobs launched from Python scripts via ray.init().

- New Dashboard UI now shows worker nodes in the main node tab

- New Dashboard UI now shows more information in the actors tab

Breaking changes:

- The job submission list_jobs API endpoint, CLI command, and SDK function now return a list of jobs instead of a dictionary mapping job ID to job.

- The Tune tab is no longer in the new dashboard UI. It is still available in the legacy dashboard UI but will be removed.

- The memory tab is no longer in the new dashboard UI. It is still available in the legacy dashboard UI but will be removed.
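Scripts that must run against both pre-2.0 and 2.0 job servers can normalize the shape of the list_jobs result. The helper below is a hypothetical sketch that relies only on the change described in this section: a dict keyed by job ID before 2.0, and a list from 2.0 onward. The list form no longer carries the ID as a dict key, so read the ID from each job entry instead. Check the job-details fields in the Ray Jobs documentation for the exact attribute name.

```python
from typing import Any, Dict, List, Union


def jobs_as_list(result: Union[Dict[str, Any], List[Any]]) -> List[Any]:
    """Normalize a list_jobs() result to a list of job entries.

    Hypothetical helper: a pre-2.0 dict (job ID -> job info) is reduced to its
    values; a 2.0-style list is returned as a new list unchanged.
    """
    if isinstance(result, dict):
        return list(result.values())
    return list(result)
```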

🔨 Fixes:

- We reduced the memory usage of the dashboard. We are no longer caching logs and we cache a maximum of 1000 actors. As a result of this change, node level logs can no longer be accessed in the legacy dashboard.

- The job status error message now properly truncates logs to 10 lines. We also added a maximum of 20,000 characters to avoid passing too much data.
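The truncation rule above can be sketched as follows. This is an illustration, not the dashboard's code. The release notes do not say which 10 lines are kept, so the sketch assumes the last 10 (the tail, where errors usually appear) and applies the 20,000-character cap afterwards. truncate_job_logs is a hypothetical name.

```python
def truncate_job_logs(logs: str, max_lines: int = 10, max_chars: int = 20000) -> str:
    """Keep the last `max_lines` lines of `logs`, then cap the result at `max_chars`.

    Assumption: the tail is kept for both limits; the real rule may differ.
    """
    if max_lines <= 0 or max_chars <= 0:
        return ""
    tail = "\n".join(logs.splitlines()[-max_lines:])
    # A few very long lines can still exceed the cap, so keep only the end.
    return tail[-max_chars:]
```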

Many thanks to all those who contributed to this release!

@ujvl, @xwjiang2010, @EricCousineau-TRI, @ijrsvt, @waleedkadous, @captain-pool, @olipinski, @danielwen002, @amogkam, @bveeramani, @kouroshHakha, @jjyao, @larrylian, @goswamig, @hanming-lu, @edoakes, @nikitavemuri, @enori, @grechaw, @truelegion47, @alanwguo, @sychen52, @ArturNiederfahrenhorst, @pcmoritz, @mwtian, @vakker, @c21, @rberenguel, @mattip, @robertnishihara, @cool-RR, @iamhatesz, @ofey404, @raulchen, @nmatare, @peterghaddad, @n30111, @fkaleo, @Riatre, @zhe-thoughts, @lchu-ibm, @YoelShoshan, @Catch-Bull, @matthewdeng, @VishDev12, @valtab, @maxpumperla, @tomsunelite, @fwitter, @liuyang-my, @peytondmurray, @clarkzinzow, @VeronikaPolakova, @sven1977, @stephanie-wang, @emjames, @Nintorac, @suquark, @javi-redondo, @xiurobert, @smorad, @brucez-anyscale, @pdames, @jjyyxx, @dmatrix, @nakamasato, @richardliaw, @juliusfrost, @anabranch, @christy, @Rohan138, @cadedaniel, @simon-mo, @mavroudisv, @guidj, @rkooo567, @orcahmlee, @lixin-wei, @neigh80, @yuduber, @JiahaoYao, @simonsays1980, @gjoliver, @jimthompson5802, @lucasalavapena, @zcin, @clarng, @jbn, @DmitriGekhtman, @timgates42, @charlesjsun, @Yard1, @mgelbart, @wumuzi520, @sihanwang41, @ghost, @jovany-wang, @siavash119, @yuanchi2807, @tupui, @jianoaix, @sumanthratna, @code-review-doctor, @Chong-Li, @FedericoGarza, @ckw017, @Makan-Ar, @kfstorm, @flanaman, @WangTaoTheTonic, @franklsf95, @scv119, @kvaithin, @wuisawesome, @jiaodong, @mgerstgrasser, @tiangolo, @architkulkarni, @MyeongKim, @ericl, @SongGuyang, @avnishn, @chengscott, @shrekris-anyscale, @Alyetama, @iycheng, @rickyyx, @krfricke, @sijieamoy, @kimikuri, @czgdp1807, @michalsustr
