sne4onnx

A very simple tool for extracting part of an ONNX model. Use it when optimization with onnx-simplifier would exceed the Protocol Buffers upper file size limit of 2GB, or simply to split onnx files into pieces of any size you want. Simple Network Extraction for ONNX.

https://github.com/PINTO0309/simple-onnx-processing-tools

Key concept

- If INPUT OP names and OUTPUT OP names are specified, the part of the onnx graph between the specified OPs is extracted and a new .onnx file is generated.

- Change backend to onnx.utils.Extractor.extract_model so that onnx.ModelProto can be specified as input.

1. Setup

1-1. HostPC

### option
$ echo 'export PATH="$HOME/.local/bin:$PATH"' >> ~/.bashrc \
&& source ~/.bashrc

### run
$ pip install -U onnx \
&& pip install -U sne4onnx

1-2. Docker

### docker pull

$ docker pull pinto0309/sne4onnx:latest

### docker build

$ docker build -t pinto0309/sne4onnx:latest .

### docker run

$ docker run --rm -it -v `pwd`:/workdir pinto0309/sne4onnx:latest

$ cd /workdir

2. CLI Usage

$ sne4onnx -h

usage:
    sne4onnx [-h]
    --input_onnx_file_path INPUT_ONNX_FILE_PATH
    --input_op_names INPUT_OP_NAMES
    --output_op_names OUTPUT_OP_NAMES
    [--output_onnx_file_path OUTPUT_ONNX_FILE_PATH]

optional arguments:
  -h, --help
        show this help message and exit

  --input_onnx_file_path INPUT_ONNX_FILE_PATH
        Input onnx file path.

  --input_op_names INPUT_OP_NAMES
        List of OP names to specify for the input layer of the model.
        Specify the name of the OP, separated by commas.
        e.g. --input_op_names aaa,bbb,ccc

  --output_op_names OUTPUT_OP_NAMES
        List of OP names to specify for the output layer of the model.
        Specify the name of the OP, separated by commas.
        e.g. --output_op_names ddd,eee,fff

  --output_onnx_file_path OUTPUT_ONNX_FILE_PATH
        Output onnx file path. If not specified, extracted.onnx is output.
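The comma-separated name arguments and the extracted.onnx default described in the help text can be sketched with argparse as follows. This is an illustrative reconstruction, not sne4onnx's actual parser; split_names, build_parser and the sne4onnx-sketch program name are hypothetical.

```python
import argparse
from typing import List


def split_names(value: str) -> List[str]:
    """Turn 'aaa,bbb,ccc' into ['aaa', 'bbb', 'ccc'], dropping empty items."""
    return [name.strip() for name in value.split(",") if name.strip()]


def build_parser() -> argparse.ArgumentParser:
    # Hypothetical parser mirroring the options listed in the help output.
    parser = argparse.ArgumentParser(prog="sne4onnx-sketch")
    parser.add_argument("--input_onnx_file_path", required=True)
    parser.add_argument("--input_op_names", type=split_names, required=True)
    parser.add_argument("--output_op_names", type=split_names, required=True)
    # The help text says extracted.onnx is used when no output path is given.
    parser.add_argument("--output_onnx_file_path", default="extracted.onnx")
    return parser


args = build_parser().parse_args([
    "--input_onnx_file_path", "input.onnx",
    "--input_op_names", "aaa,bbb,ccc",
    "--output_op_names", "ddd,eee,fff",
])
print(args.input_op_names)         # ['aaa', 'bbb', 'ccc']
print(args.output_onnx_file_path)  # extracted.onnx
```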

3. In-script Usage

$ python
>>> from sne4onnx import extraction
>>> help(extraction)

Help on function extraction in module sne4onnx.onnx_network_extraction:

extraction(
    input_op_names: List[str],
    output_op_names: List[str],
    input_onnx_file_path: Union[str, NoneType] = '',
    onnx_graph: Union[onnx.onnx_ml_pb2.ModelProto, NoneType] = None,
    output_onnx_file_path: Union[str, NoneType] = ''
) -> onnx.onnx_ml_pb2.ModelProto

    Parameters
    ----------
    input_op_names: List[str]
        List of OP names to specify for the input layer of the model.
        Specify the name of the OP, separated by commas.
        e.g. ['aaa','bbb','ccc']

    output_op_names: List[str]
        List of OP names to specify for the output layer of the model.
        Specify the name of the OP, separated by commas.
        e.g. ['ddd','eee','fff']

    input_onnx_file_path: Optional[str]
        Input onnx file path.
        Either input_onnx_file_path or onnx_graph must be specified.
        onnx_graph If specified, ignore input_onnx_file_path and process onnx_graph.

    onnx_graph: Optional[onnx.ModelProto]
        onnx.ModelProto.
        Either input_onnx_file_path or onnx_graph must be specified.
        onnx_graph If specified, ignore input_onnx_file_path and process onnx_graph.

    output_onnx_file_path: Optional[str]
        Output onnx file path.
        If not specified, .onnx is not output.
        Default: ''

    Returns
    -------
    extracted_graph: onnx.ModelProto
        Extracted onnx ModelProto
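The rule stated in the docstring (one of input_onnx_file_path or onnx_graph is required, and onnx_graph wins when both are given) can be sketched as below. resolve_model_source is a hypothetical helper written only to illustrate that precedence; it is not part of sne4onnx and does not show how the library implements the check.

```python
from typing import Any, Optional, Tuple


def resolve_model_source(
    input_onnx_file_path: Optional[str] = "",
    onnx_graph: Optional[Any] = None,
) -> Tuple[str, Any]:
    """Return ('graph', model) or ('file', path) following the documented rule."""
    if onnx_graph is not None:
        # An in-memory model takes precedence; the file path is ignored.
        return ("graph", onnx_graph)
    if input_onnx_file_path:
        return ("file", input_onnx_file_path)
    raise ValueError(
        "Either input_onnx_file_path or onnx_graph must be specified."
    )


in_memory_model = object()  # stand-in for an onnx.ModelProto
print(resolve_model_source("input.onnx", in_memory_model)[0])  # graph
print(resolve_model_source("input.onnx")[0])                   # file
```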

4. CLI Execution

$ sne4onnx \
--input_onnx_file_path input.onnx \
--input_op_names aaa,bbb,ccc \
--output_op_names ddd,eee,fff \
--output_onnx_file_path output.onnx
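When the CLI has to be driven from another program, the same command line can be assembled in Python. The sketch below only builds and prints the argument list; build_sne4onnx_command is a hypothetical helper, and the flags are the ones listed in the help output.

```python
import shlex
from typing import List, Optional


def build_sne4onnx_command(
    input_path: str,
    input_names: List[str],
    output_names: List[str],
    output_path: Optional[str] = None,
) -> List[str]:
    """Assemble the argv list for the sne4onnx CLI."""
    cmd = [
        "sne4onnx",
        "--input_onnx_file_path", input_path,
        "--input_op_names", ",".join(input_names),
        "--output_op_names", ",".join(output_names),
    ]
    if output_path:
        cmd += ["--output_onnx_file_path", output_path]
    return cmd


cmd = build_sne4onnx_command(
    "input.onnx", ["aaa", "bbb", "ccc"], ["ddd", "eee", "fff"], "output.onnx"
)
print(shlex.join(cmd))

# To actually run it (requires sne4onnx on PATH and an existing input.onnx):
#   import subprocess
#   subprocess.run(cmd, check=True)
```

Passing the arguments as a list avoids shell quoting problems when OP names contain characters such as slashes or colons.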

5. In-script Execution

5-1. Use ONNX files

from sne4onnx import extraction

extracted_graph = extraction(
    input_op_names=['aaa', 'bbb', 'ccc'],
    output_op_names=['ddd', 'eee', 'fff'],
    input_onnx_file_path='input.onnx',
    output_onnx_file_path='output.onnx',
)

5-2. Use onnx.ModelProto

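import onnx
from sne4onnx import extraction

# Any onnx.ModelProto works here; loading one from a file is just an example.
graph = onnx.load('input.onnx')

extracted_graph = extraction(
    input_op_names=['aaa', 'bbb', 'ccc'],
    output_op_names=['ddd', 'eee', 'fff'],
    onnx_graph=graph,
    output_onnx_file_path='output.onnx',
)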

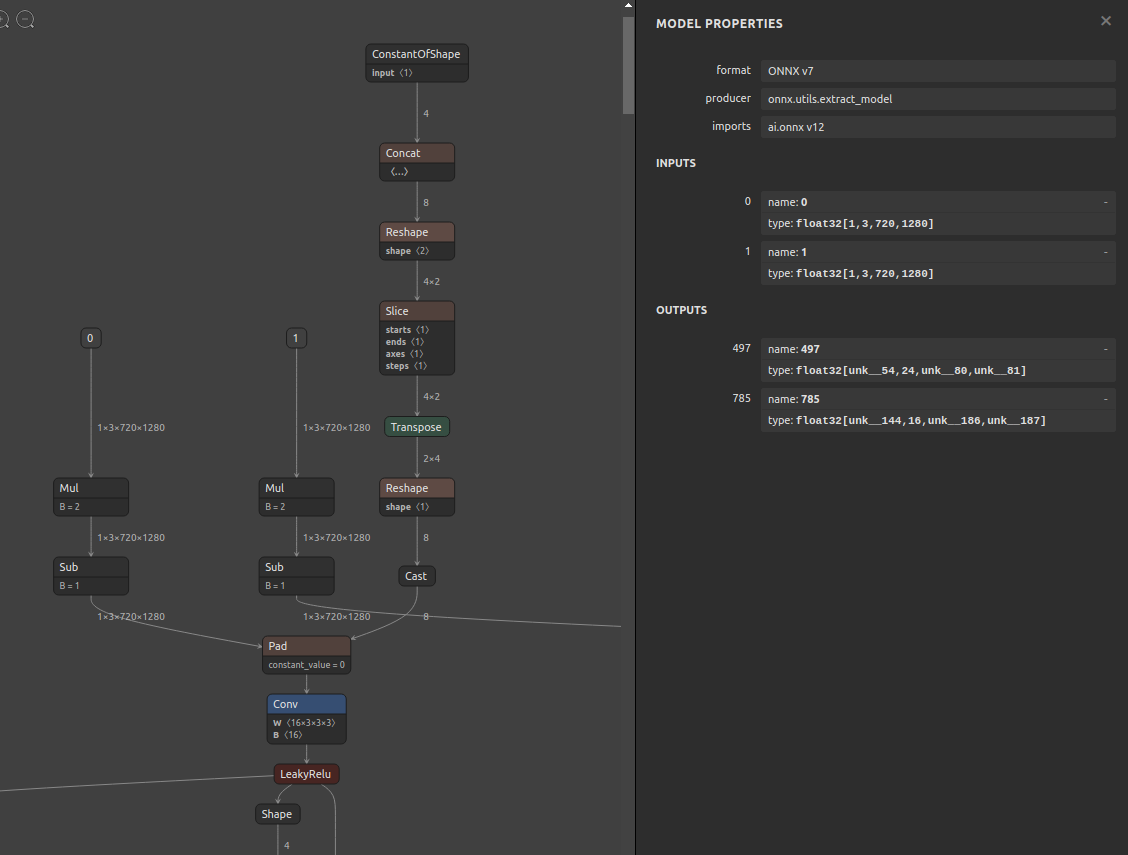

6. Samples

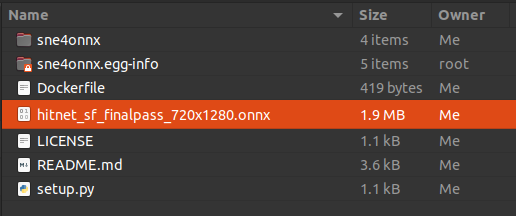

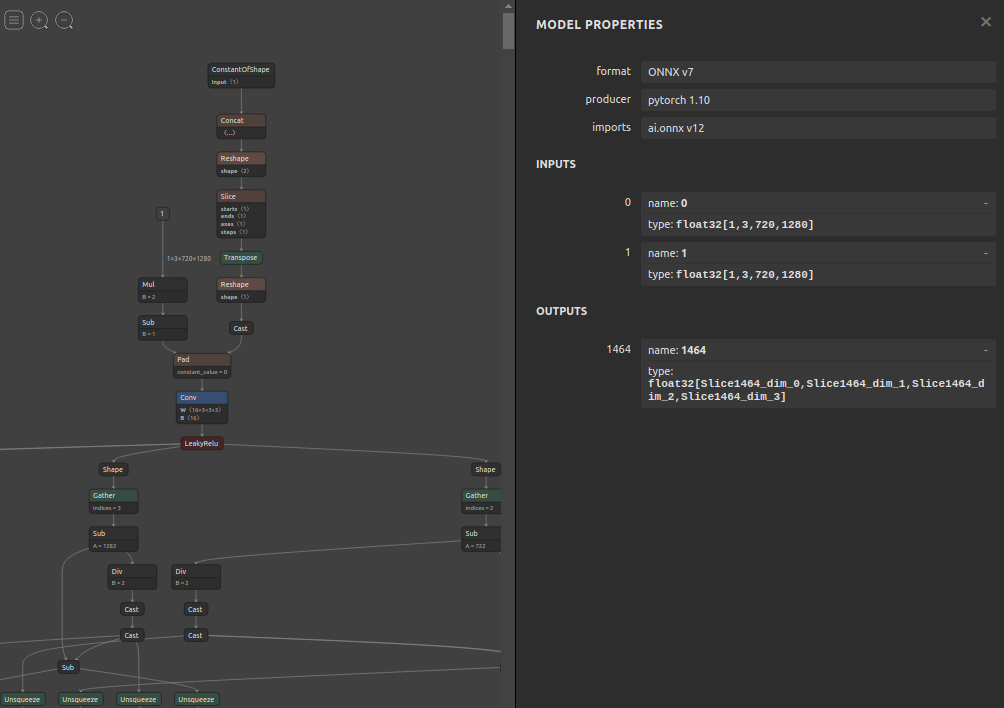

6-1. Pre-extraction

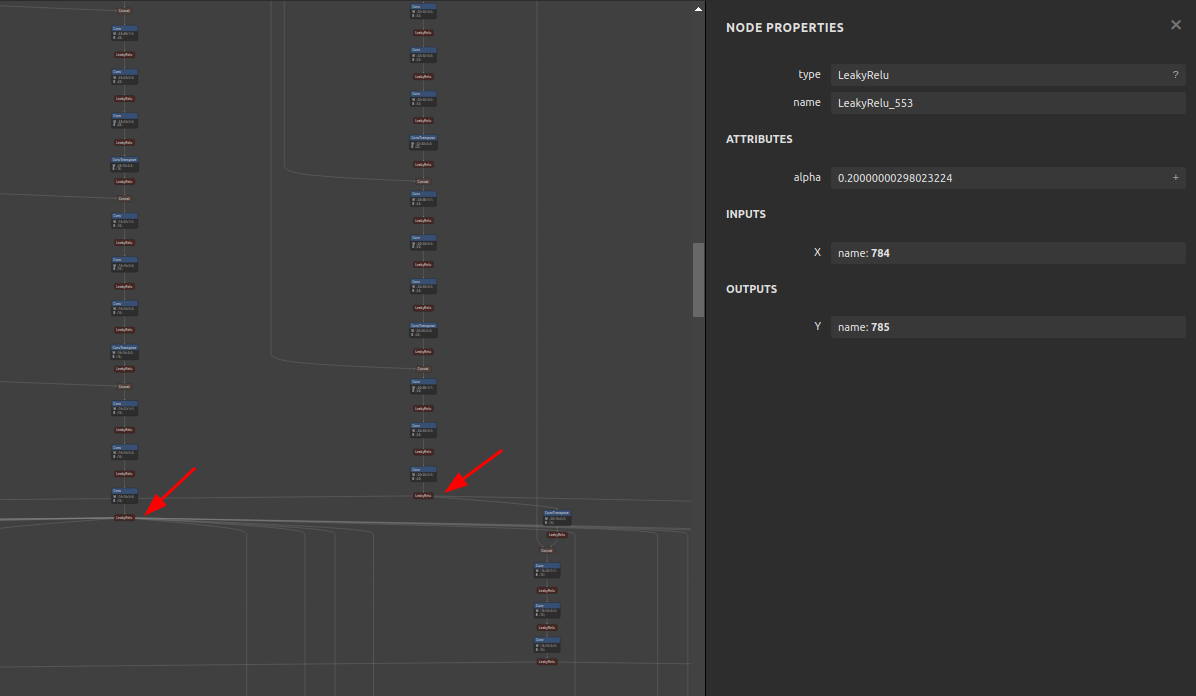

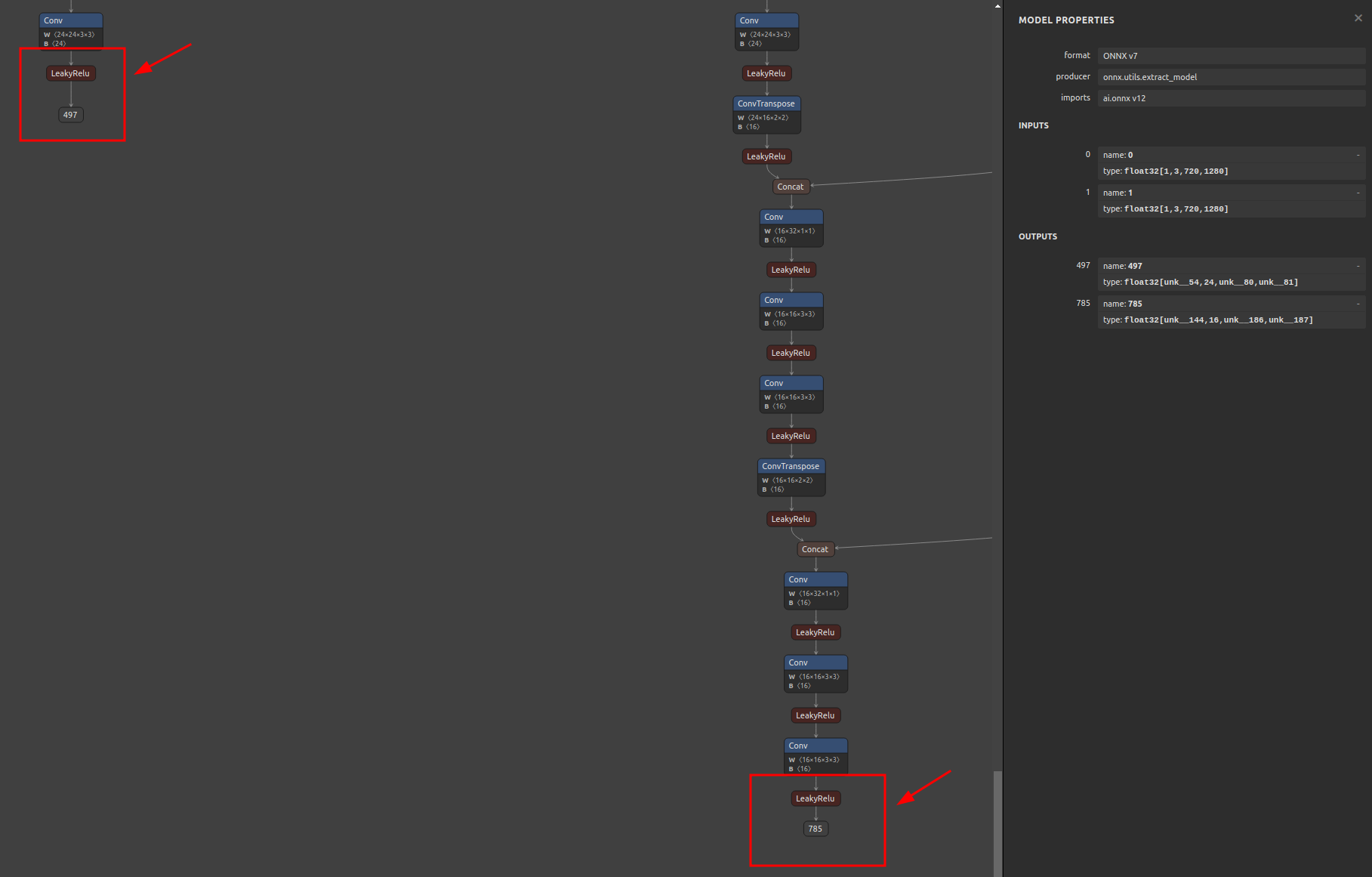

6-2. Extraction

$ sne4onnx \
--input_onnx_file_path hitnet_sf_finalpass_720x1280.onnx \
--input_op_names 0,1 \
--output_op_names 497,785 \
--output_onnx_file_path hitnet_sf_finalpass_720x960_head.onnx

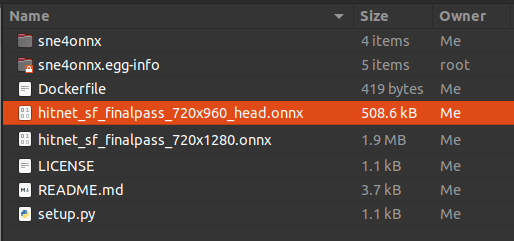

6-3. Extracted

7. Reference

- https://github.com/onnx/onnx/blob/main/docs/PythonAPIOverview.md

- https://docs.nvidia.com/deeplearning/tensorrt/onnx-graphsurgeon/docs/index.html

- https://github.com/NVIDIA/TensorRT/tree/main/tools/onnx-graphsurgeon

- https://github.com/PINTO0309/snd4onnx

- https://github.com/PINTO0309/scs4onnx

- https://github.com/PINTO0309/snc4onnx

- https://github.com/PINTO0309/sog4onnx

- https://github.com/PINTO0309/PINTO_model_zoo

8. Issues

https://github.com/PINTO0309/simple-onnx-processing-tools/issues
