377 Repositories

Python BERT-Attack Libraries

Generate malicious files using recently published bidi-attack (CVE-2021-42574)

CVE-2021-42574 - Code generator Generate malicious files using recently published bidi-attack vulnerability, which was discovered in Unicode Specifica

A lightweight Python module to interact with the Mitre Att&ck Enterprise dataset.

enterpriseattack - Mitre's Enterprise Att&ck A lightweight Python module to interact with the Mitre Att&ck Enterprise dataset. Built to be used in pro

A (completely native) python3 wifi brute-force attack using the 100k most common passwords (2021)

wifi-bf [LINUX ONLY] A (completely native) python3 wifi brute-force attack using the 100k most common passwords (2021) This script is purely for educa

This is python script that will extract the functions call in all used DLL in an executable and then provide a mapping of those functions to the attack classes defined and curated malapi.io.

F2Amapper This is python script that will extract the functions call in all used DLL in an executable and then provide a mapping of those functions to

FinEAS: Financial Embedding Analysis of Sentiment 📈

FinEAS: Financial Embedding Analysis of Sentiment 📈 (SentenceBERT for Financial News Sentiment Regression) This repository contains the code for gene

Generate malicious files using recently published homoglyphic-attack (CVE-2021-42694)

CVE-2021-42694 Generate malicious files using recently published homoglyph-attack vulnerability, which was discovered at least in C, C++, C#, Go, Pyth

Code for "Adversarial Attack Generation Empowered by Min-Max Optimization", NeurIPS 2021

Min-Max Adversarial Attacks [Paper] [arXiv] [Video] [Slide] Adversarial Attack Generation Empowered by Min-Max Optimization Jingkang Wang, Tianyun Zha

Use Google's BERT for named entity recognition (CoNLL-2003 as the dataset).

For better performance, you can try NLPGNN, see NLPGNN for more details. BERT-NER Version 2 Use Google's BERT for named entity recognition (CoNLL-2003

Chinese NER(Named Entity Recognition) using BERT(Softmax, CRF, Span)

Chinese NER(Named Entity Recognition) using BERT(Softmax, CRF, Span)

Repository for the paper titled: "When is BERT Multilingual? Isolating Crucial Ingredients for Cross-lingual Transfer"

When is BERT Multilingual? Isolating Crucial Ingredients for Cross-lingual Transfer This repository contains code for our paper titled "When is BERT M

D-dos attack GUI tool written in python using tkinter module

ddos D-dos attack GUI tool written in python using tkinter module #to use this tool on android, do the following on termux. *. apt update *. apt upgra

Brute Force Attack On Facebook Accounts

Brute Force Attack On Facebook Accounts For Install: pkg install update && pkg upgrade -y pkg install python pip install requests pip install mechani

A repository to spoof ARP table of any devices and successfully establish Man in the Middle(MITM) attack using Python3 in Linux

arp_spoofer A repository to spoof ARP table of any devices and successfully establish Man in the Middle(MITM) attack using Python3 in Linux Usage: git

A repository to detect the ARP spoofing in any devices and prevent Man in the Middle(MITM) attack using Python3

arp_spoof_detector A repository to detect the ARP spoofing in any devices and prevent Man in the Middle(MITM) attack using Python3 Usage: git clone ht

PatrickStar enables Larger, Faster, Greener Pretrained Models for NLP. Democratize AI for everyone.

PatrickStar: Parallel Training of Large Language Models via a Chunk-based Memory Management Meeting PatrickStar Pre-Trained Models (PTM) are becoming

PatrickStar enables Larger, Faster, Greener Pretrained Models for NLP. Democratize AI for everyone.

PatrickStar enables Larger, Faster, Greener Pretrained Models for NLP. Democratize AI for everyone.

NLP Text Classification

多标签文本分类任务 近年来随着深度学习的发展,模型参数的数量飞速增长。为了训练这些参数,需要更大的数据集来避免过拟合。然而,对于大部分NLP任务来说,构建大规模的标注数据集非常困难(成本过高),特别是对于句法和语义相关的任务。相比之下,大规模的未标注语料库的构建则相对容易。为了利用这些数据,我们可以

Attack on Confidence Estimation algorithm from the paper "Disrupting Deep Uncertainty Estimation Without Harming Accuracy"

Attack on Confidence Estimation (ACE) This repository is the official implementation of "Disrupting Deep Uncertainty Estimation Without Harming Accura

command injection vulnerability in the web server of some Hikvision product. Due to the insufficient input validation, attacker can exploit the vulnerability to launch a command injection attack by sending some messages with malicious commands.

CVE-2021-36260 CVE-2021-36260 POC command injection vulnerability in the web server of some Hikvision product. Due to the insufficient input validatio

🤗 Transformers: State-of-the-art Natural Language Processing for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 | 한국어 State-of-the-art Natural Language Processing for Jax, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrai

PyABSA - Open & Efficient for Framework for Aspect-based Sentiment Analysis

PyABSA - Open & Efficient for Framework for Aspect-based Sentiment Analysis

Example Of Fine-Tuning BERT For Named-Entity Recognition Task And Preparing For Cloud Deployment Using Flask, React, And Docker

Example Of Fine-Tuning BERT For Named-Entity Recognition Task And Preparing For Cloud Deployment Using Flask, React, And Docker This repository contai

Solución al reto BBVA Contigo, Hack BBVA 2021

Solution Solución propuesta para el reto BBVA Contigo del Hackathon BBVA 2021. Equipo Mexdapy. Integrantes: David Pedroza Segoviano Regina Priscila Ba

A Proof-of-Concept Layer 2 Denial of Service Attack that disrupts low level operations of Programmable Logic Controllers within industrial environments. Utilizing multithreaded processing, Automator-Terminator delivers a powerful wave of spoofed ethernet packets to a null MAC address.

Automator-Terminator A Proof-of-Concept Layer 2 Denial of Service Attack that disrupts low level operations of Programmable Logic Controllers (PLCs) w

D(HE)ater is a security tool can perform DoS attack by enforcing the DHE key exchange.

D(HE)ater D(HE)ater is an attacking tool based on CPU heating in that it forces the ephemeral variant of Diffie-Hellman key exchange (DHE) in given cr

Bootstrapped Unsupervised Sentence Representation Learning (ACL 2021)

Install first pip3 install -e . Training python3 training/unsupervised_tuning.py python3 training/supervised_tuning.py python3 training/multilingual_

BERT model training impelmentation using 1024 A100 GPUs for MLPerf Training v1.1

Pre-trained checkpoint and bert config json file Location of checkpoint and bert config json file This MLCommons members Google Drive location contain

Bark Toolkit is a toolkit wich provides Denial-of-service attacks, SMS attacks and more.

Bark Toolkit About Bark Toolkit Bark Toolkit is a set of tools that provides denial of service attacks. Bark Toolkit includes SMS attack tool, HTTP

Dos attack a Bluetooth connection!

Bluetooth Denial of service Script made for attacking Bluetooth Devices By Samrat Katwal. Warning This project was created only for fun purposes and p

![Moiré Attack (MA): A New Potential Risk of Screen Photos [NeurIPS 2021]](https://github.com/Dantong88/Moire_Attack/raw/main/Images/Pipeline.png)

Moiré Attack (MA): A New Potential Risk of Screen Photos [NeurIPS 2021]

Moiré Attack (MA): A New Potential Risk of Screen Photos [NeurIPS 2021] This repository is the official implementation of Moiré Attack (MA): A New Pot

A Word Level Transformer layer based on PyTorch and 🤗 Transformers.

Transformer Embedder A Word Level Transformer layer based on PyTorch and 🤗 Transformers. How to use Install the library from PyPI: pip install transf

[EMNLP 2021] Mirror-BERT: Converting Pretrained Language Models to universal text encoders without labels.

[EMNLP 2021] Mirror-BERT: Converting Pretrained Language Models to universal text encoders without labels.

An NLP library with Awesome pre-trained Transformer models and easy-to-use interface, supporting wide-range of NLP tasks from research to industrial applications.

简体中文 | English News [2021-10-12] PaddleNLP 2.1版本已发布!新增开箱即用的NLP任务能力、Prompt Tuning应用示例与生成任务的高性能推理! 🎉 更多详细升级信息请查看Release Note。 [2021-08-22]《千言:面向事实一致性的生

Mengzi Pretrained Models

中文 | English Mengzi 尽管预训练语言模型在 NLP 的各个领域里得到了广泛的应用,但是其高昂的时间和算力成本依然是一个亟需解决的问题。这要求我们在一定的算力约束下,研发出各项指标更优的模型。 我们的目标不是追求更大的模型规模,而是轻量级但更强大,同时对部署和工业落地更友好的模型。

pytorch bert intent classification and slot filling

pytorch_bert_intent_classification_and_slot_filling 基于pytorch的中文意图识别和槽位填充 说明 基本思路就是:分类+序列标注(命名实体识别)同时训练。 使用的预训练模型:hugging face上的chinese-bert-wwm-ext 依

Open & Efficient for Framework for Aspect-based Sentiment Analysis

PyABSA - Open & Efficient for Framework for Aspect-based Sentiment Analysis Fast & Low Memory requirement & Enhanced implementation of Local Context F

Membership Inference Attack against Graph Neural Networks

MIA GNN Project Starter If you meet the version mismatch error for Lasagne library, please use following command to upgrade Lasagne library. pip insta

SciBERT is a BERT model trained on scientific text.

SciBERT is a BERT model trained on scientific text.

Converting CPT to bert form for use

cpt-encoder 将CPT转成bert形式使用 说明 刚刚刷到又出了一种模型:CPT,看论文显示,在很多中文任务上性能比mac bert还好,就迫不及待想把它用起来。 根据对源码的研究,发现该模型在做nlu建模时主要用的encoder部分,也就是bert,因此我将这部分权重转为bert权重类型

Brute force a JWT token. Script uses multithreading.

JWT BF Brute force a JWT token. Script uses multithreading. Tested on Kali Linux v2021.4 (64-bit). Made for educational purposes. I hope it will help!

Implementation of BI-RADS-BERT & The Advantages of Section Tokenization.

BI-RADS BERT Implementation of BI-RADS-BERT & The Advantages of Section Tokenization. This implementation could be used on other radiology in house co

this is demo of tool dosploit for test and dos in network with python

this tool for dos and pentest vul SKILLS: syn flood udp flood $ git clone https://github.com/amicheh/demo_dosploit/ $ cd demo_dosploit $ python3 -m pi

This is the repository for paper NEEDLE: Towards Non-invertible Backdoor Attack to Deep Learning Models.

This is the repository for paper NEEDLE: Towards Non-invertible Backdoor Attack to Deep Learning Models.

Pre-training BERT masked language models with custom vocabulary

Pre-training BERT Masked Language Models (MLM) This repository contains the method to pre-train a BERT model using custom vocabulary. It was used to p

An easy-to-use Python module that helps you to extract the BERT embeddings for a large text dataset (Bengali/English) efficiently.

An easy-to-use Python module that helps you to extract the BERT embeddings for a large text dataset (Bengali/English) efficiently.

compact and speedy hash cracker for md5, sha1, and sha256 hashes

hash-cracker hash cracker is a multi-functional and compact...hash cracking tool...that supports dictionary attacks against three kinds of hashes: md5

Google and Stanford University released a new pre-trained model called ELECTRA

Google and Stanford University released a new pre-trained model called ELECTRA, which has a much compact model size and relatively competitive performance compared to BERT and its variants. For further accelerating the research of the Chinese pre-trained model, the Joint Laboratory of HIT and iFLYTEK Research (HFL) has released the Chinese ELECTRA models based on the official code of ELECTRA. ELECTRA-small could reach similar or even higher scores on several NLP tasks with only 1/10 parameters compared to BERT and its variants.

Transformers4Rec is a flexible and efficient library for sequential and session-based recommendation, available for both PyTorch and Tensorflow.

Transformers4Rec is a flexible and efficient library for sequential and session-based recommendation, available for both PyTorch and Tensorflow.

Pre-trained BERT Models for Ancient and Medieval Greek, and associated code for LaTeCH 2021 paper titled - "A Pilot Study for BERT Language Modelling and Morphological Analysis for Ancient and Medieval Greek"

Ancient Greek BERT The first and only available Ancient Greek sub-word BERT model! State-of-the-art post fine-tuning on Part-of-Speech Tagging and Mor

This is the official code for the paper "Ad2Attack: Adaptive Adversarial Attack for Real-Time UAV Tracking".

Ad^2Attack:Adaptive Adversarial Attack on Real-Time UAV Tracking Demo video 📹 Our video on bilibili demonstrates the test results of Ad^2Attack on se

Code and data form the paper BERT Got a Date: Introducing Transformers to Temporal Tagging

BERT Got a Date: Introducing Transformers to Temporal Tagging Satya Almasian*, Dennis Aumiller*, and Michael Gertz Heidelberg University Contact us vi

🤗 Transformers: State-of-the-art Natural Language Processing for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 State-of-the-art Natural Language Processing for Jax, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained mo

Transformers4Rec is a flexible and efficient library for sequential and session-based recommendation, available for both PyTorch and Tensorflow.

Transformers4Rec is a flexible and efficient library for sequential and session-based recommendation, available for both PyTorch and Tensorflow.

A model library for exploring state-of-the-art deep learning topologies and techniques for optimizing Natural Language Processing neural networks

A Deep Learning NLP/NLU library by Intel® AI Lab Overview | Models | Installation | Examples | Documentation | Tutorials | Contributing NLP Architect

Applying "Load What You Need: Smaller Versions of Multilingual BERT" to LaBSE

smaller-LaBSE LaBSE(Language-agnostic BERT Sentence Embedding) is a very good method to get sentence embeddings across languages. But it is hard to fi

Learnable Multi-level Frequency Decomposition and Hierarchical Attention Mechanism for Generalized Face Presentation Attack Detection

LMFD-PAD Note This is the official repository of the paper: LMFD-PAD: Learnable Multi-level Frequency Decomposition and Hierarchical Attention Mechani

Natural Language Processing with transformers

we want to create a repo to illustrate usage of transformers in chinese

DirBruter is a Python based CLI tool. It looks for hidden or existing directories/files using brute force method. It basically works by launching a dictionary based attack against a webserver and analyse its response.

DirBruter DirBruter is a Python based CLI tool. It looks for hidden or existing directories/files using brute force method. It basically works by laun

Pytorch-version BERT-flow: One can apply BERT-flow to any PLM within Pytorch framework.

Pytorch-version BERT-flow: One can apply BERT-flow to any PLM within Pytorch framework.

G-NIA model from "Single Node Injection Attack against Graph Neural Networks" (CIKM 2021)

Single Node Injection Attack against Graph Neural Networks This repository is our Pytorch implementation of our paper: Single Node Injection Attack ag

Demonstrates iterative FGSM on Apple's NeuralHash model.

apple-neuralhash-attack Demonstrates iterative FGSM on Apple's NeuralHash model. TL;DR: It is possible to apply noise to CSAM images and make them loo

This repository contains the official release of the model "BanglaBERT" and associated downstream finetuning code and datasets introduced in the paper titled "BanglaBERT: Combating Embedding Barrier in Multilingual Models for Low-Resource Language Understanding".

BanglaBERT This repository contains the official release of the model "BanglaBERT" and associated downstream finetuning code and datasets introduced i

The code for our paper "NSP-BERT: A Prompt-based Zero-Shot Learner Through an Original Pre-training Task —— Next Sentence Prediction"

The code for our paper "NSP-BERT: A Prompt-based Zero-Shot Learner Through an Original Pre-training Task —— Next Sentence Prediction"

multi-label,classifier,text classification,多标签文本分类,文本分类,BERT,ALBERT,multi-label-classification,seq2seq,attention,beam search

multi-label,classifier,text classification,多标签文本分类,文本分类,BERT,ALBERT,multi-label-classification,seq2seq,attention,beam search

Ptorch NLU, a Chinese text classification and sequence annotation toolkit, supports multi class and multi label classification tasks of Chinese long text and short text, and supports sequence annotation tasks such as Chinese named entity recognition, part of speech tagging and word segmentation.

Pytorch-NLU,一个中文文本分类、序列标注工具包,支持中文长文本、短文本的多类、多标签分类任务,支持中文命名实体识别、词性标注、分词等序列标注任务。 Ptorch NLU, a Chinese text classification and sequence annotation toolkit, supports multi class and multi label classification tasks of Chinese long text and short text, and supports sequence annotation tasks such as Chinese named entity recognition, part of speech tagging and word segmentation.

超轻量级bert的pytorch版本,大量中文注释,容易修改结构,持续更新

bert4pytorch 2021年8月27更新: 感谢大家的star,最近有小伙伴反映了一些小的bug,我也注意到了,奈何这个月工作上实在太忙,更新不及时,大约会在9月中旬集中更新一个只需要pip一下就完全可用的版本,然后会新添加一些关键注释。 再增加对抗训练的内容,更新一个完整的finetune

Ongoing research training transformer language models at scale, including: BERT & GPT-2

Megatron (1 and 2) is a large, powerful transformer developed by the Applied Deep Learning Research team at NVIDIA.

Abusing Microsoft 365 OAuth Authorization Flow for Phishing Attack

Microsoft365_devicePhish Abusing Microsoft 365 OAuth Authorization Flow for Phishing Attack This is a simple proof-of-concept script that allows an at

A BERT-based reverse dictionary of Korean proverbs

Wisdomify A BERT-based reverse-dictionary of Korean proverbs. 김유빈 : 모델링 / 데이터 수집 / 프로젝트 설계 / back-end 김종윤 : 데이터 수집 / 프로젝트 설계 / front-end / back-end 임용

Named Entity Recognition with Small Strongly Labeled and Large Weakly Labeled Data

Named Entity Recognition with Small Strongly Labeled and Large Weakly Labeled Data arXiv This is the code base for weakly supervised NER. We provide a

The tl;dr on a few notable transformer/language model papers + other papers (alignment, memorization, etc).

The tl;dr on a few notable transformer/language model papers + other papers (alignment, memorization, etc).

Learn meanings behind words is a key element in NLP. This project concentrates on the disambiguation of preposition senses. Therefore, we train a bert-transformer model and surpass the state-of-the-art.

New State-of-the-Art in Preposition Sense Disambiguation Supervisor: Prof. Dr. Alexander Mehler Alexander Henlein Institutions: Goethe University TTLa

Ongoing research training transformer language models at scale, including: BERT & GPT-2

What is this fork of Megatron-LM and Megatron-DeepSpeed This is a detached fork of https://github.com/microsoft/Megatron-DeepSpeed, which in itself is

Using VideoBERT to tackle video prediction

VideoBERT This repo reproduces the results of VideoBERT (https://arxiv.org/pdf/1904.01766.pdf). Inspiration was taken from https://github.com/MDSKUL/M

(CVPR2021) Kaleido-BERT: Vision-Language Pre-training on Fashion Domain

Kaleido-BERT: Vision-Language Pre-training on Fashion Domain Mingchen Zhuge*, Dehong Gao*, Deng-Ping Fan#, Linbo Jin, Ben Chen, Haoming Zhou, Minghui

LightSeq is a high performance training and inference library for sequence processing and generation implemented in CUDA

LightSeq: A High Performance Library for Sequence Processing and Generation

Punctuation Restoration using Transformer Models for High-and Low-Resource Languages

Punctuation Restoration using Transformer Models This repository contins official implementation of the paper Punctuation Restoration using Transforme

This demo showcase the use of onnxruntime-rs with a GPU on CUDA 11 to run Bert in a data pipeline with Rust.

Demo BERT ONNX pipeline written in rust This demo showcase the use of onnxruntime-rs with a GPU on CUDA 11 to run Bert in a data pipeline with Rust. R

A curated list of amazingly awesome Cybersecurity datasets

A curated list of amazingly awesome Cybersecurity datasets

Implementing SimCSE(paper, official repository) using TensorFlow 2 and KR-BERT.

KR-BERT-SimCSE Implementing SimCSE(paper, official repository) using TensorFlow 2 and KR-BERT. Training Unsupervised python train_unsupervised.py --mi

REST API for sentence tokenization and embedding using Multilingual Universal Sentence Encoder.

MUSE stands for Multilingual Universal Sentence Encoder - multilingual extension (supports 16 languages) of Universal Sentence Encoder (USE).

Learned Token Pruning for Transformers

LTP: Learned Token Pruning for Transformers Check our paper for more details. Installation We follow the same installation procedure as the original H

🤗 Transformers: State-of-the-art Natural Language Processing for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 State-of-the-art Natural Language Processing for Jax, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained mo

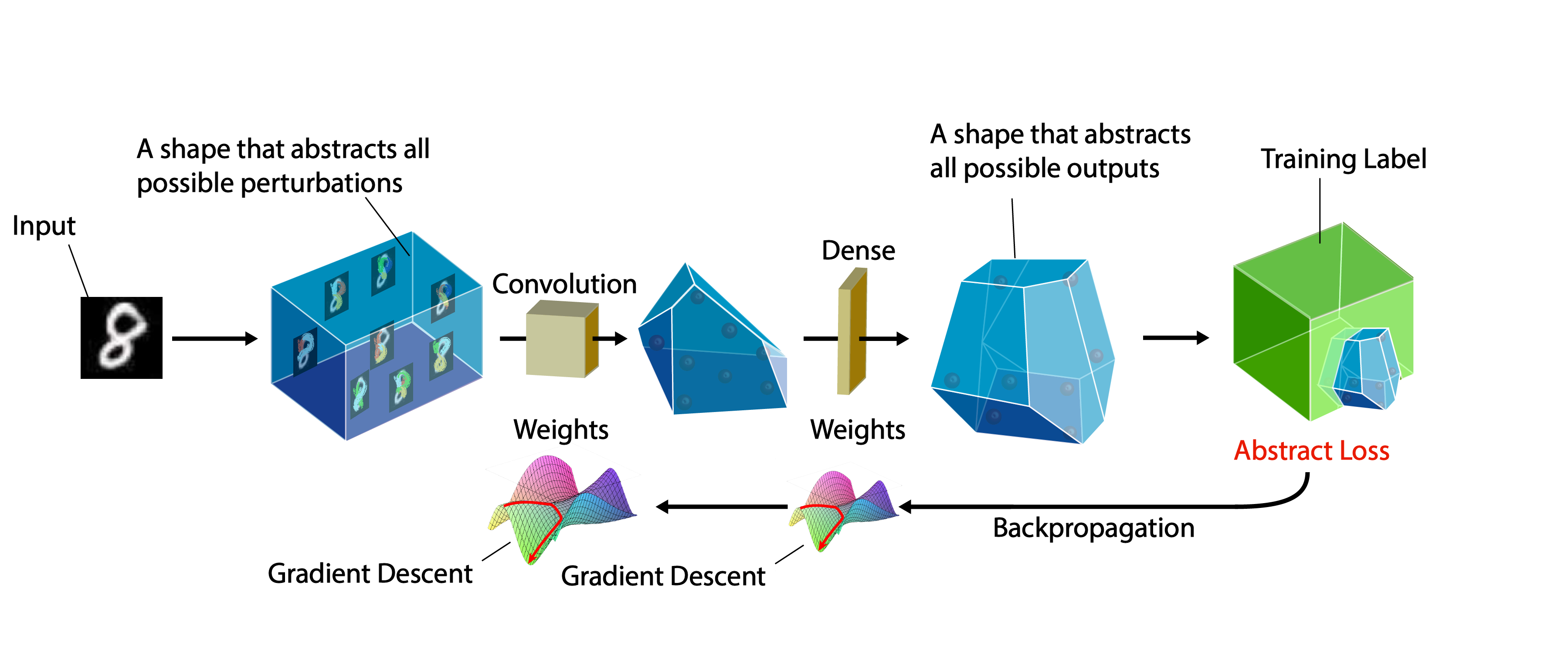

A certifiable defense against adversarial examples by training neural networks to be provably robust

DiffAI v3 DiffAI is a system for training neural networks to be provably robust and for proving that they are robust. The system was developed for the

A heterogeneous entity-augmented academic language model based on Open Academic Graph (OAG)

Library | Paper | Slack We released two versions of OAG-BERT in CogDL package. OAG-BERT is a heterogeneous entity-augmented academic language model wh

Pytorch code for ICRA'21 paper: "Hierarchical Cross-Modal Agent for Robotics Vision-and-Language Navigation"

Hierarchical Cross-Modal Agent for Robotics Vision-and-Language Navigation This repository is the pytorch implementation of our paper: Hierarchical Cr

Official repository for the paper, MidiBERT-Piano: Large-scale Pre-training for Symbolic Music Understanding.

MidiBERT-Piano Authors: Yi-Hui (Sophia) Chou, I-Chun (Bronwin) Chen Introduction This is the official repository for the paper, MidiBERT-Piano: Large-

VD-BERT: A Unified Vision and Dialog Transformer with BERT

VD-BERT: A Unified Vision and Dialog Transformer with BERT PyTorch Code for the following paper at EMNLP2020: Title: VD-BERT: A Unified Vision and Dia

VD-BERT: A Unified Vision and Dialog Transformer with BERT

VD-BERT: A Unified Vision and Dialog Transformer with BERT PyTorch Code for the following paper at EMNLP2020: Title: VD-BERT: A Unified Vision and Dia

Huggingface Transformers + Adapters = ❤️

adapter-transformers A friendly fork of HuggingFace's Transformers, adding Adapters to PyTorch language models adapter-transformers is an extension of

The source codes for ACL 2021 paper 'BoB: BERT Over BERT for Training Persona-based Dialogue Models from Limited Personalized Data'

BoB: BERT Over BERT for Training Persona-based Dialogue Models from Limited Personalized Data This repository provides the implementation details for

PyTorch impelementations of BERT-based Spelling Error Correction Models

PyTorch impelementations of BERT-based Spelling Error Correction Models

PyTorch impelementations of BERT-based Spelling Error Correction Models.

PyTorch impelementations of BERT-based Spelling Error Correction Models. 基于BERT的文本纠错模型,使用PyTorch实现。

A BERT-based reverse-dictionary of Korean proverbs

Wisdomify A BERT-based reverse-dictionary of Korean proverbs. 김유빈 : 모델링 / 데이터 수집 / 프로젝트 설계 / back-end 김종윤 : 데이터 수집 / 프로젝트 설계 / front-end Quick Start C

LV-BERT: Exploiting Layer Variety for BERT (Findings of ACL 2021)

LV-BERT Introduction In this repo, we introduce LV-BERT by exploiting layer variety for BERT. For detailed description and experimental results, pleas

LV-BERT: Exploiting Layer Variety for BERT (Findings of ACL 2021)

LV-BERT Introduction In this repo, we introduce LV-BERT by exploiting layer variety for BERT. For detailed description and experimental results, pleas

Incident Response Process and Playbooks | Goal: Playbooks to be Mapped to MITRE Attack Techniques

PURPOSE OF PROJECT That this project will be created by the SOC/Incident Response Community Develop a Catalog of Incident Response Playbook for every

TunBERT is the first release of a pre-trained BERT model for the Tunisian dialect using a Tunisian Common-Crawl-based dataset.

TunBERT is the first release of a pre-trained BERT model for the Tunisian dialect using a Tunisian Common-Crawl-based dataset. TunBERT was applied to three NLP downstream tasks: Sentiment Analysis (SA), Tunisian Dialect Identification (TDI) and Reading Comprehension Question-Answering (RCQA)

Adversarial Robustness Toolbox (ART) - Python Library for Machine Learning Security - Evasion, Poisoning, Extraction, Inference - Red and Blue Teams

Adversarial Robustness Toolbox (ART) is a Python library for Machine Learning Security. ART provides tools that enable developers and researchers to defend and evaluate Machine Learning models and applications against the adversarial threats of Evasion, Poisoning, Extraction, and Inference. ART supports all popular machine learning frameworks (TensorFlow, Keras, PyTorch, MXNet, scikit-learn, XGBoost, LightGBM, CatBoost, GPy, etc.), all data types (images, tables, audio, video, etc.) and machine learning tasks (classification, object detection, speech recognition, generation, certification, etc.).

We will see a basic program that is basically a hint to brute force attack to crack passwords. In other words, we will make a program to Crack Any Password Using Python. Show some ❤️ by starring this repository!

Crack Any Password Using Python We will see a basic program that is basically a hint to brute force attack to crack passwords. In other words, we will