51 Repositories

Python boosting Libraries

This repo presents you the official code of "VISTA: Boosting 3D Object Detection via Dual Cross-VIew SpaTial Attention"

VISTA VISTA: Boosting 3D Object Detection via Dual Cross-VIew SpaTial Attention Shengheng Deng, Zhihao Liang, Lin Sun and Kui Jia* (*) Corresponding a

TigerLily: Finding drug interactions in silico with the Graph.

Drug Interaction Prediction with Tigerlily Documentation | Example Notebook | Youtube Video | Project Report Tigerlily is a TigerGraph based system de

My Implementation for the paper EDA: Easy Data Augmentation Techniques for Boosting Performance on Text Classification Tasks using Tensorflow

Easy Data Augmentation Implementation This repository contains my Implementation for the paper EDA: Easy Data Augmentation Techniques for Boosting Per

🌶️ Give real chat boosting to your discord server.

Chat-Booster Give real chat boosting to your discord server. ✅ Setup: - Add token to scrape messages on server that you whant. - Put the token in

LightGBM + Optuna: no brainer

AutoLGBM LightGBM + Optuna: no brainer auto train lightgbm directly from CSV files auto tune lightgbm using optuna auto serve best lightgbm model usin

Built various Machine Learning algorithms (Logistic Regression, Random Forest, KNN, Gradient Boosting and XGBoost. etc)

Built various Machine Learning algorithms (Logistic Regression, Random Forest, KNN, Gradient Boosting and XGBoost. etc). Structured a custom ensemble model and a neural network. Found a outperformed model for heart failure prediction accuracy of 88 percent.

Code for You Only Cut Once: Boosting Data Augmentation with a Single Cut

You Only Cut Once (YOCO) YOCO is a simple method/strategy of performing augmenta

hgboost - Hyperoptimized Gradient Boosting

hgboost is short for Hyperoptimized Gradient Boosting and is a python package for hyperparameter optimization for xgboost, catboost and lightboost using cross-validation, and evaluating the results on an independent validation set. hgboost can be applied for classification and regression tasks.

A boosting-based Multiple Instance Learning (MIL) package that includes MIL-Boost and MCIL-Boost

A boosting-based Multiple Instance Learning (MIL) package that includes MIL-Boost and MCIL-Boost

EloGGs 🎮 is a 1v1.LOL Trophy Boosting Program (PATCHED)

EloGGs 🎮 is an old patched 1v1.LOL boosting program I developed months ago, My team made around $1000 total off of this, but now it's been patched by the developers.

Towards Boosting the Accuracy of Non-Latin Scene Text Recognition

Convolutional Recurrent Neural Network + CTCLoss | STAR-Net Code for paper "Towards Boosting the Accuracy of Non-Latin Scene Text Recognition" Depende

LRBoost is a scikit-learn compatible approach to performing linear residual based stacking/boosting.

LRBoost is a sckit-learn compatible package for linear residual boosting. LRBoost combines a linear estimator and a non-linear estimator to leverage t

Code, pre-trained models and saliency results for the paper "Boosting RGB-D Saliency Detection by Leveraging Unlabeled RGB Images".

Boosting RGB-D Saliency Detection by Leveraging Unlabeled RGB This repository is the official implementation of the paper. Our results comming soon in

PickMush - A mini study/project on boosting algorithm

PickMush A mini project implementing Boosting Author Shashwat Vaibhav What does it do? Classifies whether Mushroom is edible or is non-edible (binary

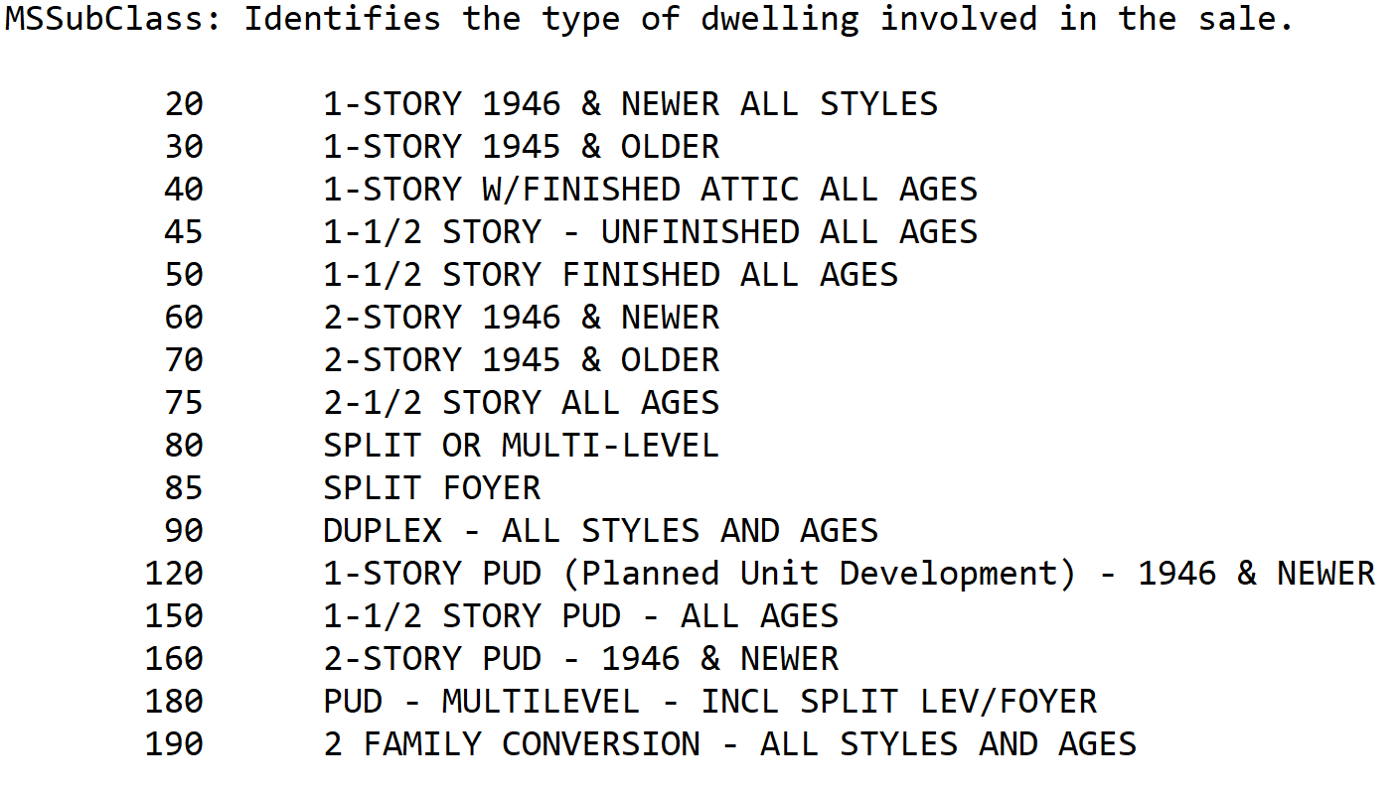

House_prices_kaggle - Predict sales prices and practice feature engineering, RFs, and gradient boosting

House Prices - Advanced Regression Techniques Predicting House Prices with Machine Learning This project is build to enhance my knowledge about machin

Houseprices - Predict sales prices and practice feature engineering, RFs, and gradient boosting

House Prices - Advanced Regression Techniques Predicting House Prices with Machine Learning This project is build to enhance my knowledge about machin

Code of paper Interact, Embed, and EnlargE (IEEE): Boosting Modality-specific Representations for Multi-Modal Person Re-identification.

Interact, Embed, and EnlargE (IEEE): Boosting Modality-specific Representations for Multi-Modal Person Re-identification We provide the codes for repr

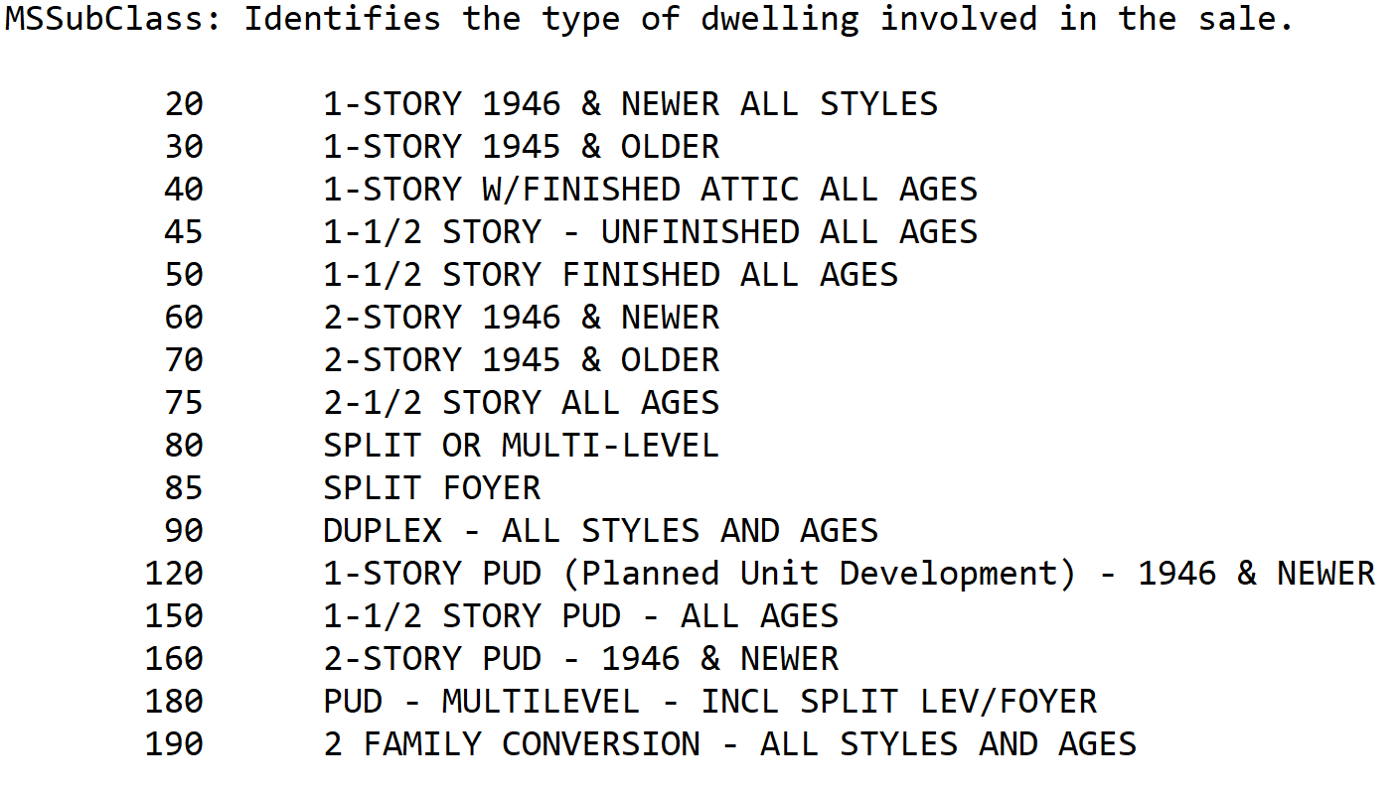

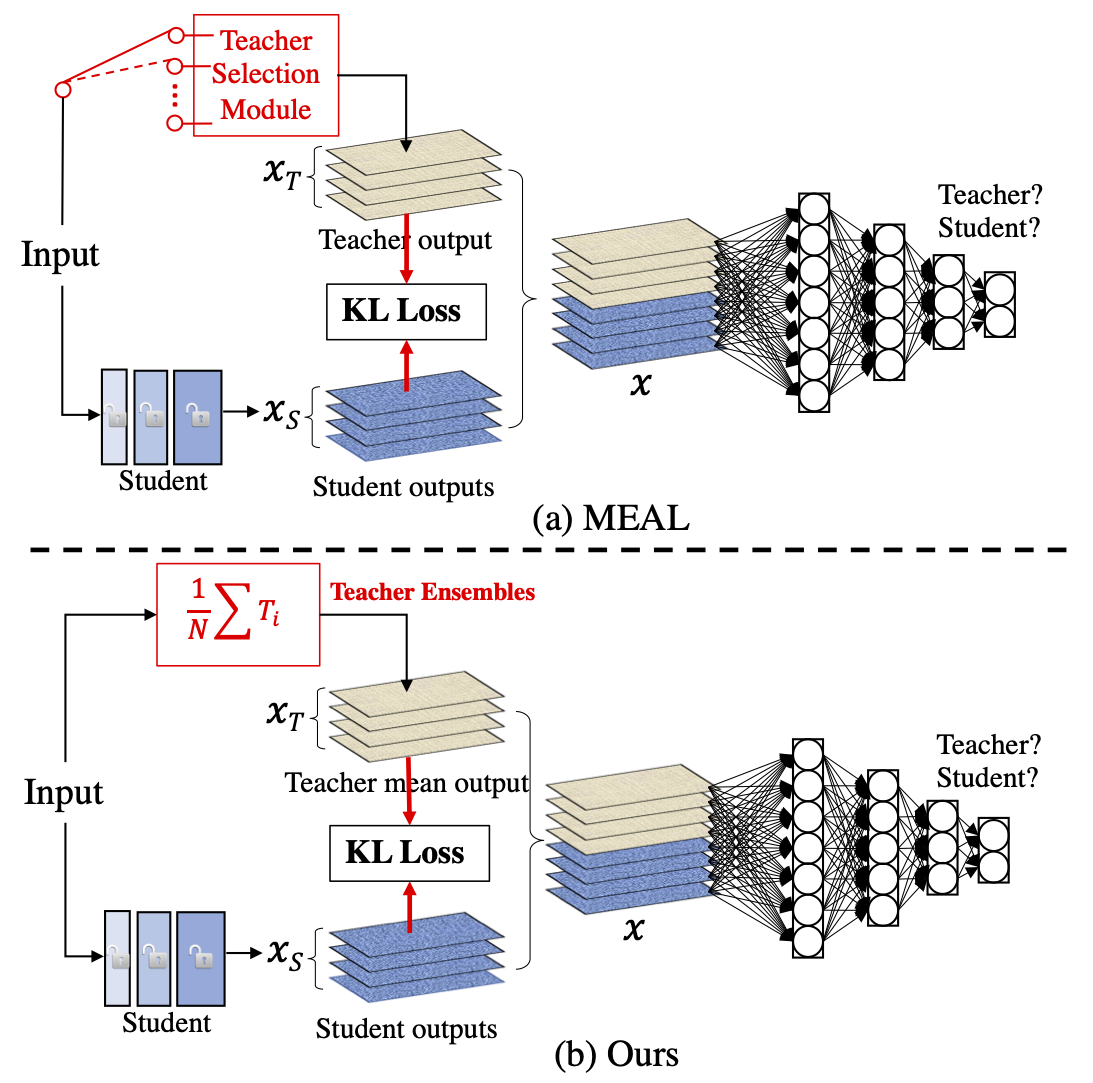

MEAL V2: Boosting Vanilla ResNet-50 to 80%+ Top-1 Accuracy on ImageNet without Tricks

MEAL-V2 This is the official pytorch implementation of our paper: "MEAL V2: Boosting Vanilla ResNet-50 to 80%+ Top-1 Accuracy on ImageNet without Tric

LSTC: Boosting Atomic Action Detection with Long-Short-Term Context

LSTC: Boosting Atomic Action Detection with Long-Short-Term Context This Repository contains the code on AVA of our ACM MM 2021 paper: LSTC: Boosting

Accommodating supervised learning algorithms for the historical prices of the world's favorite cryptocurrency and boosting it through LightGBM.

Accommodating supervised learning algorithms for the historical prices of the world's favorite cryptocurrency and boosting it through LightGBM.

LLVM-based compiler for LightGBM gradient-boosted trees. Speeds up prediction by ≥10x.

LLVM-based compiler for LightGBM gradient-boosted trees. Speeds up prediction by ≥10x.

InfiniteBoost: building infinite ensembles with gradient descent

InfiniteBoost Code for a paper InfiniteBoost: building infinite ensembles with gradient descent (arXiv:1706.01109). A. Rogozhnikov, T. Likhomanenko De

Responsible Machine Learning with Python

Examples of techniques for training interpretable ML models, explaining ML models, and debugging ML models for accuracy, discrimination, and security.

Fit interpretable models. Explain blackbox machine learning.

InterpretML - Alpha Release In the beginning machines learned in darkness, and data scientists struggled in the void to explain them. Let there be lig

The codes of paper 'Active-LATHE: An Active Learning Algorithm for Boosting the Error exponent for Learning Homogeneous Ising Trees'

Active-LATHE: An Active Learning Algorithm for Boosting the Error exponent for Learning Homogeneous Ising Trees This project contains the codes of pap

Apply our monocular depth boosting to your own network!

MergeNet - Boost Your Own Depth Boost custom or edited monocular depth maps using MergeNet Input Original result After manual editing of base You can

Official PyTorch implementation of "Edge Rewiring Goes Neural: Boosting Network Resilience via Policy Gradient".

Edge Rewiring Goes Neural: Boosting Network Resilience via Policy Gradient This repository is the official PyTorch implementation of "Edge Rewiring Go

LSTC: Boosting Atomic Action Detection with Long-Short-Term Context

LSTC: Boosting Atomic Action Detection with Long-Short-Term Context This Repository contains the code on AVA of our ACM MM 2021 paper: LSTC: Boosting

Boosting Adversarial Attacks with Enhanced Momentum (BMVC 2021)

EMI-FGSM This repository contains code to reproduce results from the paper: Boosting Adversarial Attacks with Enhanced Momentum (BMVC 2021) Xiaosen Wa

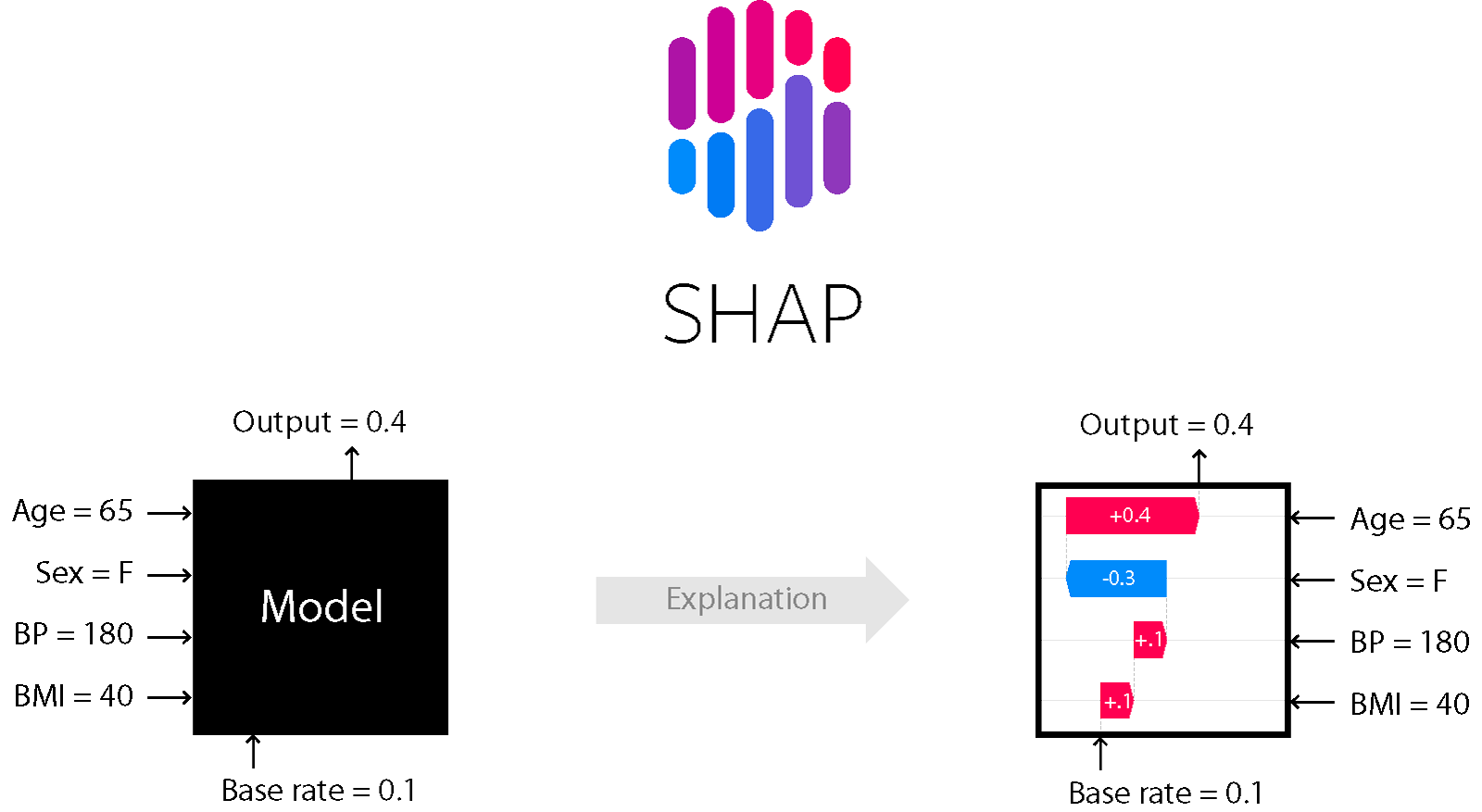

A game theoretic approach to explain the output of any machine learning model.

SHAP (SHapley Additive exPlanations) is a game theoretic approach to explain the output of any machine learning model. It connects optimal credit allo

Python版OpenCVのTracking APIのサンプルです。DaSiamRPNアルゴリズムまで対応しています。

OpenCV-Object-Tracker-Sample Python版OpenCVのTracking APIのサンプルです。 Requirement opencv-contrib-python 4.5.3.56 or later Algorithm 2021/07/16時点でOpenCVには以

Instagram boosting

instagram boosting bot This bot can boost your instagram account! Rules and Instruction Use git clone to download this repository Open cmd/terminal an

Probabilistic Gradient Boosting Machines

PGBM Probabilistic Gradient Boosting Machines (PGBM) is a probabilistic gradient boosting framework in Python based on PyTorch/Numba, developed by Air

Boosting Monocular Depth Estimation Models to High-Resolution via Content-Adaptive Multi-Resolution Merging

Boosting Monocular Depth Estimation Models to High-Resolution via Content-Adaptive Multi-Resolution Merging This repository contains an implementation

TensorFlow Decision Forests (TF-DF) is a collection of state-of-the-art algorithms for the training, serving and interpretation of Decision Forest models.

TensorFlow Decision Forests (TF-DF) is a collection of state-of-the-art algorithms for the training, serving and interpretation of Decision Forest models. The library is a collection of Keras models and supports classification, regression and ranking. TF-DF is a TensorFlow wrapper around the Yggdrasil Decision Forests C++ libraries. Models trained with TF-DF are compatible with Yggdrasil Decision Forests' models, and vice versa.

(L2ID@CVPR2021) Boosting Co-teaching with Compression Regularization for Label Noise

Nested-Co-teaching (L2ID@CVPR2021) Pytorch implementation of paper "Boosting Co-teaching with Compression Regularization for Label Noise" [PDF] If our

A game theoretic approach to explain the output of any machine learning model.

SHAP (SHapley Additive exPlanations) is a game theoretic approach to explain the output of any machine learning model. It connects optimal credit allo

A fast, scalable, high performance Gradient Boosting on Decision Trees library, used for ranking, classification, regression and other machine learning tasks for Python, R, Java, C++. Supports computation on CPU and GPU.

Website | Documentation | Tutorials | Installation | Release Notes CatBoost is a machine learning method based on gradient boosting over decision tree

A fast, distributed, high performance gradient boosting (GBT, GBDT, GBRT, GBM or MART) framework based on decision tree algorithms, used for ranking, classification and many other machine learning tasks.

Light Gradient Boosting Machine LightGBM is a gradient boosting framework that uses tree based learning algorithms. It is designed to be distributed a

Scalable, Portable and Distributed Gradient Boosting (GBDT, GBRT or GBM) Library, for Python, R, Java, Scala, C++ and more. Runs on single machine, Hadoop, Spark, Dask, Flink and DataFlow

eXtreme Gradient Boosting Community | Documentation | Resources | Contributors | Release Notes XGBoost is an optimized distributed gradient boosting l

A Python Automated Machine Learning tool that optimizes machine learning pipelines using genetic programming.

Master status: Development status: Package information: TPOT stands for Tree-based Pipeline Optimization Tool. Consider TPOT your Data Science Assista

A fast, scalable, high performance Gradient Boosting on Decision Trees library, used for ranking, classification, regression and other machine learning tasks for Python, R, Java, C++. Supports computation on CPU and GPU.

Website | Documentation | Tutorials | Installation | Release Notes CatBoost is a machine learning method based on gradient boosting over decision tree

A fast, distributed, high performance gradient boosting (GBT, GBDT, GBRT, GBM or MART) framework based on decision tree algorithms, used for ranking, classification and many other machine learning tasks.

Light Gradient Boosting Machine LightGBM is a gradient boosting framework that uses tree based learning algorithms. It is designed to be distributed a

Scalable, Portable and Distributed Gradient Boosting (GBDT, GBRT or GBM) Library, for Python, R, Java, Scala, C++ and more. Runs on single machine, Hadoop, Spark, Dask, Flink and DataFlow

eXtreme Gradient Boosting Community | Documentation | Resources | Contributors | Release Notes XGBoost is an optimized distributed gradient boosting l

A fast, distributed, high performance gradient boosting (GBT, GBDT, GBRT, GBM or MART) framework based on decision tree algorithms, used for ranking, classification and many other machine learning tasks.

Light Gradient Boosting Machine LightGBM is a gradient boosting framework that uses tree based learning algorithms. It is designed to be distributed a

Machine learning, in numpy

numpy-ml Ever wish you had an inefficient but somewhat legible collection of machine learning algorithms implemented exclusively in NumPy? No? Install

A fast, scalable, high performance Gradient Boosting on Decision Trees library, used for ranking, classification, regression and other machine learning tasks for Python, R, Java, C++. Supports computation on CPU and GPU.

Website | Documentation | Tutorials | Installation | Release Notes CatBoost is a machine learning method based on gradient boosting over decision tree

A Python Automated Machine Learning tool that optimizes machine learning pipelines using genetic programming.

Master status: Development status: Package information: TPOT stands for Tree-based Pipeline Optimization Tool. Consider TPOT your Data Science Assista

[UNMAINTAINED] Automated machine learning for analytics & production

auto_ml Automated machine learning for production and analytics Installation pip install auto_ml Getting started from auto_ml import Predictor from au

Scalable, Portable and Distributed Gradient Boosting (GBDT, GBRT or GBM) Library, for Python, R, Java, Scala, C++ and more. Runs on single machine, Hadoop, Spark, Dask, Flink and DataFlow

eXtreme Gradient Boosting Community | Documentation | Resources | Contributors | Release Notes XGBoost is an optimized distributed gradient boosting l

H2O is an Open Source, Distributed, Fast & Scalable Machine Learning Platform: Deep Learning, Gradient Boosting (GBM) & XGBoost, Random Forest, Generalized Linear Modeling (GLM with Elastic Net), K-Means, PCA, Generalized Additive Models (GAM), RuleFit, Support Vector Machine (SVM), Stacked Ensembles, Automatic Machine Learning (AutoML), etc.

H2O H2O is an in-memory platform for distributed, scalable machine learning. H2O uses familiar interfaces like R, Python, Scala, Java, JSON and the Fl