174 Repositories

Python gradient-boosted-trees Libraries

Making decision trees competitive with neural networks on CIFAR10, CIFAR100, TinyImagenet200, Imagenet

Neural-Backed Decision Trees · Site · Paper · Blog · Video Alvin Wan, *Lisa Dunlap, *Daniel Ho, Jihan Yin, Scott Lee, Henry Jin, Suzanne Petryk, Sarah

Pytorch implementation of convolutional neural network visualization techniques

Convolutional Neural Network Visualizations This repository contains a number of convolutional neural network visualization techniques implemented in

Tutorial for surrogate gradient learning in spiking neural networks

SpyTorch A tutorial on surrogate gradient learning in spiking neural networks Version: 0.4 This repository contains tutorial files to get you started

A PyTorch implementation of Learning to learn by gradient descent by gradient descent

Intro PyTorch implementation of Learning to learn by gradient descent by gradient descent. Run python main.py TODO Initial implementation Toy data LST

A PyTorch implementation of Learning to learn by gradient descent by gradient descent

Intro PyTorch implementation of Learning to learn by gradient descent by gradient descent. Run python main.py TODO Initial implementation Toy data LST

Implementation of algorithms for continuous control (DDPG and NAF).

DEPRECATION This repository is deprecated and is no longer maintaned. Please see a more recent implementation of RL for continuous control at jax-sac.

Audits Python environments and dependency trees for known vulnerabilities

pip-audit pip-audit is a prototype tool for scanning Python environments for packages with known vulnerabilities. It uses the Python Packaging Advisor

决策树分类与回归模型的实现和可视化

DecisionTree 决策树分类与回归模型,以及可视化 DecisionTree ID3 C4.5 CART 分类 回归 决策树绘制 分类树 回归树 调参 剪枝 ID3 ID3决策树是最朴素的决策树分类器: 无剪枝 只支持离散属性 采用信息增益准则 在data.py中,我们记录了一个小的西瓜数据

Code for the paper "TadGAN: Time Series Anomaly Detection Using Generative Adversarial Networks"

TadGAN: Time Series Anomaly Detection Using Generative Adversarial Networks This is a Python3 / Pytorch implementation of TadGAN paper. The associated

Continuum Learning with GEM: Gradient Episodic Memory

Gradient Episodic Memory for Continual Learning Source code for the paper: @inproceedings{GradientEpisodicMemory, title={Gradient Episodic Memory

Pytorch Implementation of paper "Noisy Natural Gradient as Variational Inference"

Noisy Natural Gradient as Variational Inference PyTorch implementation of Noisy Natural Gradient as Variational Inference. Requirements Python 3 Pytor

Proximal Backpropagation - a neural network training algorithm that takes implicit instead of explicit gradient steps

Proximal Backpropagation Proximal Backpropagation (ProxProp) is a neural network training algorithm that takes implicit instead of explicit gradient s

This is the pytorch implementation of the paper - Axiomatic Attribution for Deep Networks.

Integrated Gradients This is the pytorch implementation of "Axiomatic Attribution for Deep Networks". The original tensorflow version could be found h

Bonsai: Gradient Boosted Trees + Bayesian Optimization

Bonsai is a wrapper for the XGBoost and Catboost model training pipelines that leverages Bayesian optimization for computationally efficient hyperparameter tuning.

Official implementation of paper Gradient Matching for Domain Generalization

Gradient Matching for Domain Generalisation This is the official PyTorch implementation of Gradient Matching for Domain Generalisation. In our paper,

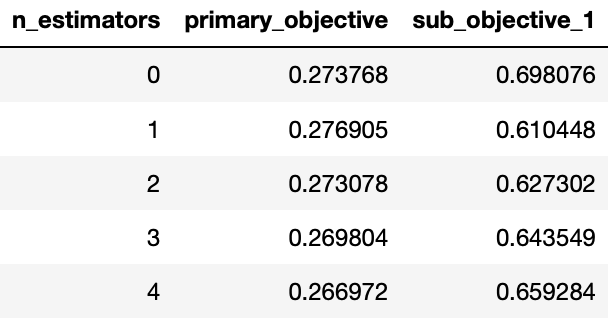

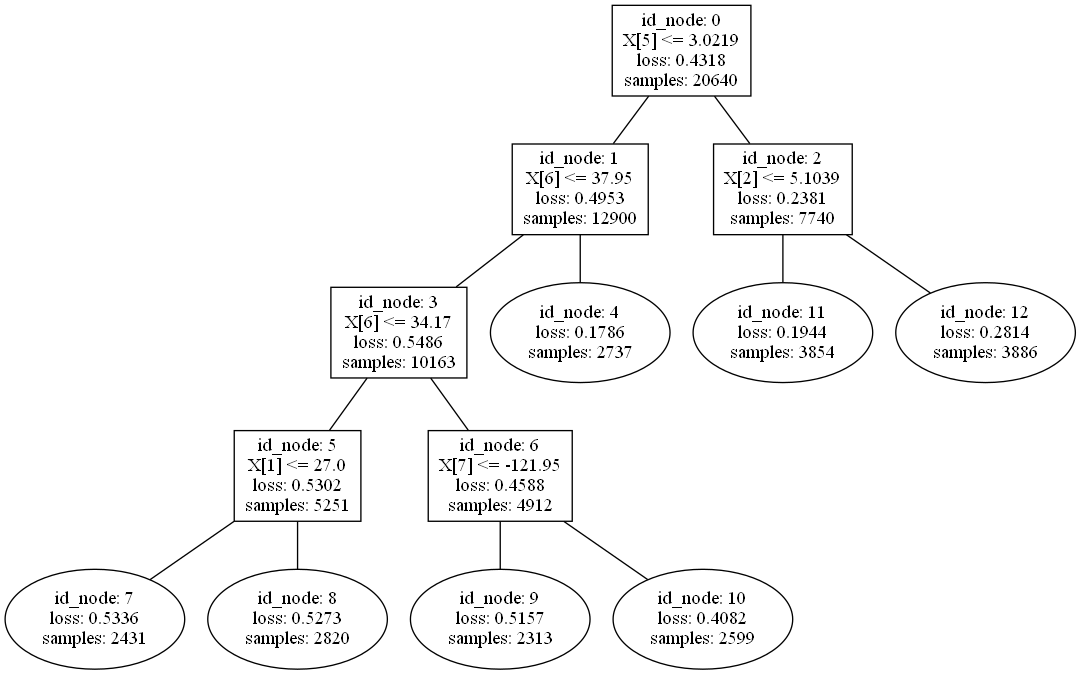

MooGBT is a library for Multi-objective optimization in Gradient Boosted Trees.

MooGBT is a library for Multi-objective optimization in Gradient Boosted Trees. MooGBT optimizes for multiple objectives by defining constraints on sub-objective(s) along with a primary objective. The constraints are defined as upper bounds on sub-objective loss function. MooGBT uses a Augmented Lagrangian(AL) based constrained optimization framework with Gradient Boosted Trees, to optimize for multiple objectives.

A python library to build Model Trees with Linear Models at the leaves.

A python library to build Model Trees with Linear Models at the leaves.

30 Days Of Machine Learning Using Pytorch

Objective of the repository is to learn and build machine learning models using Pytorch. 30DaysofML Using Pytorch

Implementation of Learning Gradient Fields for Molecular Conformation Generation (ICML 2021).

[PDF] | [Slides] The official implementation of Learning Gradient Fields for Molecular Conformation Generation (ICML 2021 Long talk) Installation Inst

Custom TensorFlow2 implementations of forward and backward computation of soft-DTW algorithm in batch mode.

Batch Soft-DTW(Dynamic Time Warping) in TensorFlow2 including forward and backward computation Custom TensorFlow2 implementations of forward and backw

ML Optimizers from scratch using JAX

Toy implementations of some popular ML optimizers using Python/JAX

On the model-based stochastic value gradient for continuous reinforcement learning

On the model-based stochastic value gradient for continuous reinforcement learning This repository is by Brandon Amos, Samuel Stanton, Denis Yarats, a

Multivariate Boosted TRee

Multivariate Boosted TRee What is MBTR MBTR is a python package for multivariate boosted tree regressors trained in parameter space. The package can h

MBTR is a python package for multivariate boosted tree regressors trained in parameter space.

MBTR is a python package for multivariate boosted tree regressors trained in parameter space.

Intruder detection systems are common place now, and readily available in industry, but how do they work? They must detect people and large animals, but not generate false alarms in the presence of small animals, changes in lighting, environmental motion such as trees, or melting snow. To work correctly, the system must learn the background, in order to differentiate foreground objects.

Intruder-Detection Intruder detection systems are common place now, and readily available in industry, but how do they work? They must detect people a

Probabilistic Gradient Boosting Machines

PGBM Probabilistic Gradient Boosting Machines (PGBM) is a probabilistic gradient boosting framework in Python based on PyTorch/Numba, developed by Air

The official implementation of You Only Compress Once: Towards Effective and Elastic BERT Compression via Exploit-Explore Stochastic Nature Gradient.

You Only Compress Once: Towards Effective and Elastic BERT Compression via Exploit-Explore Stochastic Nature Gradient (paper) @misc{zhang2021compress,

Boosted neural network for tabular data

XBNet - Xtremely Boosted Network Boosted neural network for tabular data XBNet is an open source project which is built with PyTorch which tries to co

Code release to accompany paper "Geometry-Aware Gradient Algorithms for Neural Architecture Search."

Geometry-Aware Gradient Algorithms for Neural Architecture Search This repository contains the code required to run the experiments for the DARTS sear

TensorFlow Decision Forests (TF-DF) is a collection of state-of-the-art algorithms for the training, serving and interpretation of Decision Forest models.

TensorFlow Decision Forests (TF-DF) is a collection of state-of-the-art algorithms for the training, serving and interpretation of Decision Forest models. The library is a collection of Keras models and supports classification, regression and ranking. TF-DF is a TensorFlow wrapper around the Yggdrasil Decision Forests C++ libraries. Models trained with TF-DF are compatible with Yggdrasil Decision Forests' models, and vice versa.

![[ECCV 2020] Gradient-Induced Co-Saliency Detection](https://github.com/zzhanghub/gicd/raw/master/img/GICD_LOGO.png)

[ECCV 2020] Gradient-Induced Co-Saliency Detection

Gradient-Induced Co-Saliency Detection Zhao Zhang*, Wenda Jin*, Jun Xu, Ming-Ming Cheng ⭐ Project Home » The official repo of the ECCV 2020 paper Grad

Paddle-RLBooks is a reinforcement learning code study guide based on pure PaddlePaddle.

Paddle-RLBooks Welcome to Paddle-RLBooks which is a reinforcement learning code study guide based on pure PaddlePaddle. 欢迎来到Paddle-RLBooks,该仓库主要是针对强化学

A collection of various RL algorithms like policy gradients, DQN and PPO. The goal of this repo will be to make it a go-to resource for learning about RL. How to visualize, debug and solve RL problems. I've additionally included playground.py for learning more about OpenAI gym, etc.

Reinforcement Learning (PyTorch) 🤖 + 🍰 = ❤️ This repo will contain PyTorch implementation of various fundamental RL algorithms. It's aimed at making

Objective of the repository is to learn and build machine learning models using Pytorch. 30DaysofML Using Pytorch

30 Days Of Machine Learning Using Pytorch Objective of the repository is to learn and build machine learning models using Pytorch. List of Algorithms

A Closer Look at Invalid Action Masking in Policy Gradient Algorithms

A Closer Look at Invalid Action Masking in Policy Gradient Algorithms This repo contains the source code to reproduce the results in the paper A Close

![[CVPRW 21]](https://github.com/VITA-Group/BNN_NoBN/raw/main/Figs/res.png)

[CVPRW 21] "BNN - BN = ? Training Binary Neural Networks without Batch Normalization", Tianlong Chen, Zhenyu Zhang, Xu Ouyang, Zechun Liu, Zhiqiang Shen, Zhangyang Wang

BNN - BN = ? Training Binary Neural Networks without Batch Normalization Codes for this paper BNN - BN = ? Training Binary Neural Networks without Bat

PGPortfolio: Policy Gradient Portfolio, the source code of "A Deep Reinforcement Learning Framework for the Financial Portfolio Management Problem"(https://arxiv.org/pdf/1706.10059.pdf).

This is the original implementation of our paper, A Deep Reinforcement Learning Framework for the Financial Portfolio Management Problem (arXiv:1706.1

A resource for learning about deep learning techniques from regression to LSTM and Reinforcement Learning using financial data and the fitness functions of algorithmic trading

A tour through tensorflow with financial data I present several models ranging in complexity from simple regression to LSTM and policy networks. The s

This project provides a stock market environment using OpenGym with Deep Q-learning and Policy Gradient.

Stock Trading Market OpenAI Gym Environment with Deep Reinforcement Learning using Keras Overview This project provides a general environment for stoc

Scalable, event-driven, deep-learning-friendly backtesting library

...Minimizing the mean square error on future experience. - Richard S. Sutton BTGym Scalable event-driven RL-friendly backtesting library. Build on

Code for: Gradient-based Hierarchical Clustering using Continuous Representations of Trees in Hyperbolic Space. Nicholas Monath, Manzil Zaheer, Daniel Silva, Andrew McCallum, Amr Ahmed. KDD 2019.

gHHC Code for: Gradient-based Hierarchical Clustering using Continuous Representations of Trees in Hyperbolic Space. Nicholas Monath, Manzil Zaheer, D

Official implementation of "GS-WGAN: A Gradient-Sanitized Approach for Learning Differentially Private Generators" (NeurIPS 2020)

GS-WGAN This repository contains the implementation for GS-WGAN: A Gradient-Sanitized Approach for Learning Differentially Private Generators (NeurIPS

This is the source code of RPG (Reward-Randomized Policy Gradient)

RPG (Reward-Randomized Policy Gradient) Zhenggang Tang*, Chao Yu*, Boyuan Chen, Huazhe Xu, Xiaolong Wang, Fei Fang, Simon Shaolei Du, Yu Wang, Yi Wu (

🎯 A comprehensive gradient-free optimization framework written in Python

Solid is a Python framework for gradient-free optimization. It contains basic versions of many of the most common optimization algorithms that do not

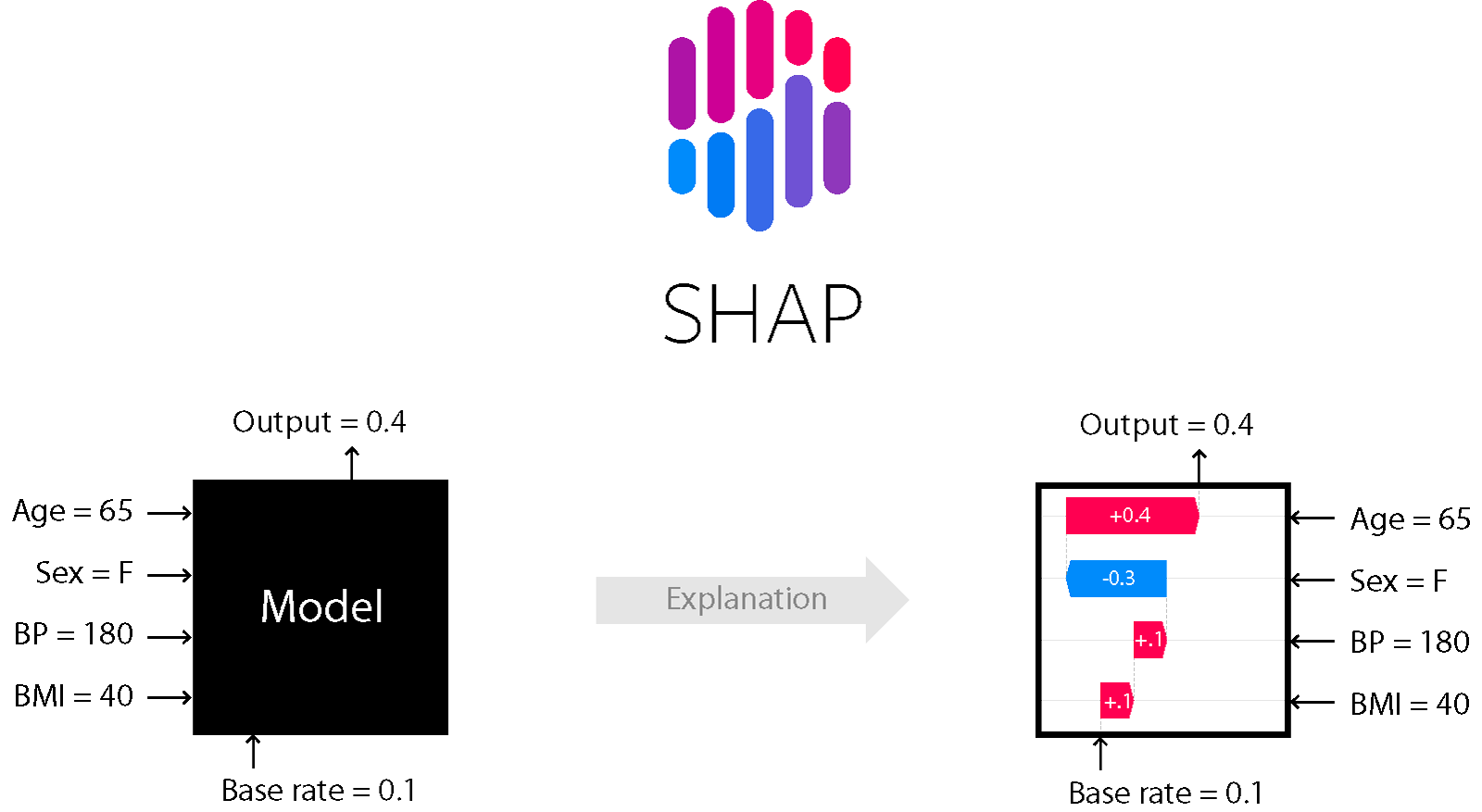

A game theoretic approach to explain the output of any machine learning model.

SHAP (SHapley Additive exPlanations) is a game theoretic approach to explain the output of any machine learning model. It connects optimal credit allo

Contrastive Explanation (Foil Trees), developed at TNO/Utrecht University

Contrastive Explanation (Foil Trees) Contrastive and counterfactual explanations for machine learning (ML) Marcel Robeer (2018-2020), TNO/Utrecht Univ

A fast, scalable, high performance Gradient Boosting on Decision Trees library, used for ranking, classification, regression and other machine learning tasks for Python, R, Java, C++. Supports computation on CPU and GPU.

Website | Documentation | Tutorials | Installation | Release Notes CatBoost is a machine learning method based on gradient boosting over decision tree

A fast, distributed, high performance gradient boosting (GBT, GBDT, GBRT, GBM or MART) framework based on decision tree algorithms, used for ranking, classification and many other machine learning tasks.

Light Gradient Boosting Machine LightGBM is a gradient boosting framework that uses tree based learning algorithms. It is designed to be distributed a

Scalable, Portable and Distributed Gradient Boosting (GBDT, GBRT or GBM) Library, for Python, R, Java, Scala, C++ and more. Runs on single machine, Hadoop, Spark, Dask, Flink and DataFlow

eXtreme Gradient Boosting Community | Documentation | Resources | Contributors | Release Notes XGBoost is an optimized distributed gradient boosting l

Home repository for the Regularized Greedy Forest (RGF) library. It includes original implementation from the paper and multithreaded one written in C++, along with various language-specific wrappers.

Regularized Greedy Forest Regularized Greedy Forest (RGF) is a tree ensemble machine learning method described in this paper. RGF can deliver better r

It is a forest of random projection trees

rpforest rpforest is a Python library for approximate nearest neighbours search: finding points in a high-dimensional space that are close to a given

A Python Automated Machine Learning tool that optimizes machine learning pipelines using genetic programming.

Master status: Development status: Package information: TPOT stands for Tree-based Pipeline Optimization Tool. Consider TPOT your Data Science Assista

🍊 :bar_chart: :bulb: Orange: Interactive data analysis

Orange Data Mining Orange is a data mining and visualization toolbox for novice and expert alike. To explore data with Orange, one requires no program

Implements Gradient Centralization and allows it to use as a Python package in TensorFlow

Gradient Centralization TensorFlow This Python package implements Gradient Centralization in TensorFlow, a simple and effective optimization technique

Storchastic is a PyTorch library for stochastic gradient estimation in Deep Learning

Storchastic is a PyTorch library for stochastic gradient estimation in Deep Learning

NFNets and Adaptive Gradient Clipping for SGD implemented in PyTorch

PyTorch implementation of Normalizer-Free Networks and SGD - Adaptive Gradient Clipping Paper: https://arxiv.org/abs/2102.06171.pdf Original code: htt

Keras implementation of Normalizer-Free Networks and SGD - Adaptive Gradient Clipping

Keras implementation of Normalizer-Free Networks and SGD - Adaptive Gradient Clipping

Fast and Easy Infinite Neural Networks in Python

Neural Tangents ICLR 2020 Video | Paper | Quickstart | Install guide | Reference docs | Release notes Overview Neural Tangents is a high-level neural

A fast, scalable, high performance Gradient Boosting on Decision Trees library, used for ranking, classification, regression and other machine learning tasks for Python, R, Java, C++. Supports computation on CPU and GPU.

Website | Documentation | Tutorials | Installation | Release Notes CatBoost is a machine learning method based on gradient boosting over decision tree

A fast, distributed, high performance gradient boosting (GBT, GBDT, GBRT, GBM or MART) framework based on decision tree algorithms, used for ranking, classification and many other machine learning tasks.

Light Gradient Boosting Machine LightGBM is a gradient boosting framework that uses tree based learning algorithms. It is designed to be distributed a

Scalable, Portable and Distributed Gradient Boosting (GBDT, GBRT or GBM) Library, for Python, R, Java, Scala, C++ and more. Runs on single machine, Hadoop, Spark, Dask, Flink and DataFlow

eXtreme Gradient Boosting Community | Documentation | Resources | Contributors | Release Notes XGBoost is an optimized distributed gradient boosting l

Image morphing without reference points by applying warp maps and optimizing over them.

Differentiable Morphing Image morphing without reference points by applying warp maps and optimizing over them. Differentiable Morphing is machine lea

Modular Deep Reinforcement Learning framework in PyTorch. Companion library of the book "Foundations of Deep Reinforcement Learning".

SLM Lab Modular Deep Reinforcement Learning framework in PyTorch. Documentation: https://slm-lab.gitbook.io/slm-lab/ BeamRider Breakout KungFuMaster M

A fast, distributed, high performance gradient boosting (GBT, GBDT, GBRT, GBM or MART) framework based on decision tree algorithms, used for ranking, classification and many other machine learning tasks.

Light Gradient Boosting Machine LightGBM is a gradient boosting framework that uses tree based learning algorithms. It is designed to be distributed a

Python interface for converting Penn Treebank trees to Stanford Dependencies and Universal Depenencies

PyStanfordDependencies Python interface for converting Penn Treebank trees to Universal Dependencies and Stanford Dependencies. Example usage Start by

Machine learning, in numpy

numpy-ml Ever wish you had an inefficient but somewhat legible collection of machine learning algorithms implemented exclusively in NumPy? No? Install

A fast, scalable, high performance Gradient Boosting on Decision Trees library, used for ranking, classification, regression and other machine learning tasks for Python, R, Java, C++. Supports computation on CPU and GPU.

Website | Documentation | Tutorials | Installation | Release Notes CatBoost is a machine learning method based on gradient boosting over decision tree

Simple machine learning library / 簡單易用的機器學習套件

FukuML Simple machine learning library / 簡單易用的機器學習套件 Installation $ pip install FukuML Tutorial Lesson 1: Perceptron Binary Classification Learning Al

Home repository for the Regularized Greedy Forest (RGF) library. It includes original implementation from the paper and multithreaded one written in C++, along with various language-specific wrappers.

Regularized Greedy Forest Regularized Greedy Forest (RGF) is a tree ensemble machine learning method described in this paper. RGF can deliver better r

A Python Automated Machine Learning tool that optimizes machine learning pipelines using genetic programming.

Master status: Development status: Package information: TPOT stands for Tree-based Pipeline Optimization Tool. Consider TPOT your Data Science Assista

Python implementation of cover trees, near-drop-in replacement for scipy.spatial.kdtree

This is a Python implementation of cover trees, a data structure for finding nearest neighbors in a general metric space (e.g., a 3D box with periodic

[UNMAINTAINED] Automated machine learning for analytics & production

auto_ml Automated machine learning for production and analytics Installation pip install auto_ml Getting started from auto_ml import Predictor from au

Scalable, Portable and Distributed Gradient Boosting (GBDT, GBRT or GBM) Library, for Python, R, Java, Scala, C++ and more. Runs on single machine, Hadoop, Spark, Dask, Flink and DataFlow

eXtreme Gradient Boosting Community | Documentation | Resources | Contributors | Release Notes XGBoost is an optimized distributed gradient boosting l

H2O is an Open Source, Distributed, Fast & Scalable Machine Learning Platform: Deep Learning, Gradient Boosting (GBM) & XGBoost, Random Forest, Generalized Linear Modeling (GLM with Elastic Net), K-Means, PCA, Generalized Additive Models (GAM), RuleFit, Support Vector Machine (SVM), Stacked Ensembles, Automatic Machine Learning (AutoML), etc.

H2O H2O is an in-memory platform for distributed, scalable machine learning. H2O uses familiar interfaces like R, Python, Scala, Java, JSON and the Fl