44 Repositories

Python md-benchmarks-rs Libraries

Code for testing various M1 Chip benchmarks with TensorFlow.

M1, M1 Pro, M1 Max Machine Learning Speed Test Comparison This repo contains some sample code to benchmark the new M1 MacBooks (M1 Pro and M1 Max) aga

Theory-inspired Parameter Control Benchmarks for Dynamic Algorithm Configuration

This repo is for the paper: Theory-inspired Parameter Control Benchmarks for Dynamic Algorithm Configuration The DAC environment is based on the Dynam

All public open-source implementations of convnets benchmarks

convnet-benchmarks Easy benchmarking of all public open-source implementations of convnets. A summary is provided in the section below. Machine: 6-cor

Best practices for segmentation of the corporate network of any company

Best-practice-for-network-segmentation What is this? This project was created to publish the best practices for segmentation of the corporate network

Code examples and benchmarks from the paper "Understanding Entropy Coding With Asymmetric Numeral Systems (ANS): a Statistician's Perspective"

Code For the Paper "Understanding Entropy Coding With Asymmetric Numeral Systems (ANS): a Statistician's Perspective" Author: Robert Bamler Date: 22 D

A benchmark framework for Tensorflow

TensorFlow benchmarks This repository contains various TensorFlow benchmarks. Currently, it consists of two projects: PerfZero: A benchmark framework

Datasets, tools, and benchmarks for representation learning of code.

The CodeSearchNet challenge has been concluded We would like to thank all participants for their submissions and we hope that this challenge provided

Benchmarks for Model-Based Optimization

Design-Bench Design-Bench is a benchmarking framework for solving automatic design problems that involve choosing an input that maximizes a black-box

Benchmark spaces - Benchmarks of how well different two dimensional spaces work for clustering algorithms

benchmark_spaces Benchmarks of how well different two dimensional spaces work fo

Rust Markdown Parsing Benchmarks

Rust Markdown Parsing Benchmarks This repo tries to assess Rust markdown parsing

Raster processing benchmarks for Python and R packages

Raster processing benchmarks This repository contains a collection of raster processing benchmarks for Python and R packages. The tests cover the most

Benchmarks for Object Detection in Aerial Images

Benchmarks for Object Detection in Aerial Images

PyTorch implementation of a collections of scalable Video Transformer Benchmarks.

PyTorch implementation of Video Transformer Benchmarks This repository is mainly built upon Pytorch and Pytorch-Lightning. We wish to maintain a colle

A benchmark dataset for emulating atmospheric radiative transfer in weather and climate models with machine learning (NeurIPS 2021 Datasets and Benchmarks Track)

ClimART - A Benchmark Dataset for Emulating Atmospheric Radiative Transfer in Weather and Climate Models Official PyTorch Implementation Using deep le

Benchmarks for the Optimal Power Flow Problem

Power Grid Lib - Optimal Power Flow This benchmark library is curated and maintained by the IEEE PES Task Force on Benchmarks for Validation of Emergi

Training code and evaluation benchmarks for the "Self-Supervised Policy Adaptation during Deployment" paper.

Self-Supervised Policy Adaptation during Deployment PyTorch implementation of PAD and evaluation benchmarks from Self-Supervised Policy Adaptation dur

Just a little benchmark for scrapper PC's

PopMark Just a little benchmark for scrapper PC's This benchmark is for old computer that dont support other benchmark because of support. Like lack o

NeurIPS 2021 Datasets and Benchmarks Track

AP-10K: A Benchmark for Animal Pose Estimation in the Wild Introduction | Updates | Overview | Download | Training Code | Key Questions | License Intr

Source code and notebooks to reproduce experiments and benchmarks on Bias Faces in the Wild (BFW).

Face Recognition: Too Bias, or Not Too Bias? Robinson, Joseph P., Gennady Livitz, Yann Henon, Can Qin, Yun Fu, and Samson Timoner. "Face recognition:

QED-C: The Quantum Economic Development Consortium provides these computer programs and software for use in the fields of quantum science and engineering.

Application-Oriented Performance Benchmarks for Quantum Computing This repository contains a collection of prototypical application- or algorithm-cent

Official source for spanish Language Models and resources made @ BSC-TEMU within the "Plan de las Tecnologías del Lenguaje" (Plan-TL).

Spanish Language Models 💃🏻 A repository part of the MarIA project. Corpora 📃 Corpora Number of documents Number of tokens Size (GB) BNE 201,080,084

![[NeurIPS 2021] Large Scale Learning on Non-Homophilous Graphs: New Benchmarks and Strong Simple Methods](https://user-images.githubusercontent.com/58995473/120717799-27fd9200-c496-11eb-940f-b16d4d528e0f.png)

[NeurIPS 2021] Large Scale Learning on Non-Homophilous Graphs: New Benchmarks and Strong Simple Methods

Large Scale Learning on Non-Homophilous Graphs: New Benchmarks and Strong Simple Methods Large Scale Learning on Non-Homophilous Graphs: New Benchmark

Source code for the paper: Variance-Aware Machine Translation Test Sets (NeurIPS 2021 Datasets and Benchmarks Track)

Variance-Aware-MT-Test-Sets Variance-Aware Machine Translation Test Sets License See LICENSE. We follow the data licensing plan as the same as the WMT

SustainBench: Benchmarks for Monitoring the Sustainable Development Goals with Machine Learning

Datasets | Website | Raw Data | OpenReview SustainBench: Benchmarks for Monitoring the Sustainable Development Goals with Machine Learning Christopher

SW components and demos for visual kinship recognition. An emphasis is put on the FIW dataset-- data loaders, benchmarks, results in summary.

FIW Data Development Kit Table of Contents Introduction Families In the Wild Database Publications Organization To Do License Getting Involved Introdu

![[NeurIPS 2021] Large Scale Learning on Non-Homophilous Graphs: New Benchmarks and Strong Simple Methods](https://user-images.githubusercontent.com/58995473/120717799-27fd9200-c496-11eb-940f-b16d4d528e0f.png)

[NeurIPS 2021] Large Scale Learning on Non-Homophilous Graphs: New Benchmarks and Strong Simple Methods

Large Scale Learning on Non-Homophilous Graphs: New Benchmarks and Strong Simple Methods Large Scale Learning on Non-Homophilous Graphs: New Benchmark

Simple data balancing baselines for worst-group-accuracy benchmarks.

BalancingGroups Code to replicate the experimental results from Simple data balancing baselines achieve competitive worst-group-accuracy. Replicating

A suite of benchmarks for CPU and GPU performance of the most popular high-performance libraries for Python :rocket:

A suite of benchmarks for CPU and GPU performance of the most popular high-performance libraries for Python :rocket:

Simple data balancing baselines for worst-group-accuracy benchmarks.

BalancingGroups Code to replicate the experimental results from Simple data balancing baselines achieve competitive worst-group-accuracy. Replicating

Repo for "Physion: Evaluating Physical Prediction from Vision in Humans and Machines" submission to NeurIPS 2021 (Datasets & Benchmarks track)

Physion: Evaluating Physical Prediction from Vision in Humans and Machines This repo contains code and data to reproduce the results in our paper, Phy

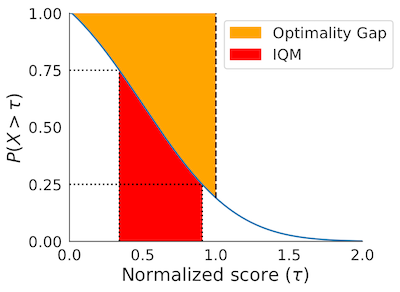

rliable is an open-source Python library for reliable evaluation, even with a handful of runs, on reinforcement learning and machine learnings benchmarks.

Open-source library for reliable evaluation on reinforcement learning and machine learning benchmarks. See NeurIPS 2021 oral for details.

This repository contains a set of benchmarks of different implementations of Parquet (storage format) - Arrow (in-memory format).

Parquet benchmarks This repository contains a set of benchmarks of different implementations of Parquet (storage format) - Arrow (in-memory format).

Reproducible nvim completion framework benchmarks.

Nvim.Bench Reproducible nvim completion framework benchmarks. Runs inside Docker. Fair and balanced Methodology Note: for all "randomness", they are g

Sequence modeling benchmarks and temporal convolutional networks

Sequence Modeling Benchmarks and Temporal Convolutional Networks (TCN) This repository contains the experiments done in the work An Empirical Evaluati

Silero Models: pre-trained speech-to-text, text-to-speech models and benchmarks made embarrassingly simple

Silero Models: pre-trained speech-to-text, text-to-speech models and benchmarks made embarrassingly simple

wireguard-config-benchmark is a python script that benchmarks the download speeds for the connections defined in one or more wireguard config files

wireguard-config-benchmark is a python script that benchmarks the download speeds for the connections defined in one or more wireguard config files. If multiple configs are benchmarked it will output a file ranking them from fastest to slowest.

Standard implementations of FedLab and its provided benchmarks.

FedLab-benchmarks This repo contains standard implementations of FedLab and its provided benchmarks. Currently, following algorithms or benchrmarks ar

Sequence modeling benchmarks and temporal convolutional networks

Sequence Modeling Benchmarks and Temporal Convolutional Networks (TCN) This repository contains the experiments done in the work An Empirical Evaluati

Official source for spanish Language Models and resources made @ BSC-TEMU within the "Plan de las Tecnologías del Lenguaje" (Plan-TL).

Spanish Language Models 💃🏻 Corpora 📃 Corpora Number of documents Size (GB) BNE 201,080,084 570GB Models 🤖 RoBERTa-base BNE: https://huggingface.co

Benchmarks for semi-supervised domain generalization.

Semi-Supervised Domain Generalization This code is the official implementation of the following paper: Semi-Supervised Domain Generalization with Stoc

This repository contains the implementations related to the experiments of a set of publicly available datasets that are used in the time series forecasting research space.

TSForecasting This repository contains the implementations related to the experiments of a set of publicly available datasets that are used in the tim

"NAS-Bench-301 and the Case for Surrogate Benchmarks for Neural Architecture Search".

NAS-Bench-301 This repository containts code for the paper: "NAS-Bench-301 and the Case for Surrogate Benchmarks for Neural Architecture Search". The

![[WWW 2021 GLB] New Benchmarks for Learning on Non-Homophilous Graphs](https://user-images.githubusercontent.com/58995473/113487776-f3665d80-9487-11eb-8035-fbf5126f85df.png)

[WWW 2021 GLB] New Benchmarks for Learning on Non-Homophilous Graphs

New Benchmarks for Learning on Non-Homophilous Graphs Here are the codes and datasets accompanying the paper: New Benchmarks for Learning on Non-Homop

Code and model benchmarks for "SEVIR : A Storm Event Imagery Dataset for Deep Learning Applications in Radar and Satellite Meteorology"

NeurIPS 2020 SEVIR Code for paper: SEVIR : A Storm Event Imagery Dataset for Deep Learning Applications in Radar and Satellite Meteorology Requirement