36 Repositories

Python mixture-of-experts Libraries

Rich Prosody Diversity Modelling with Phone-level Mixture Density Network

Phone Level Mixture Density Network for TTS This repo contains pytorch implementation of paper Rich Prosody Diversity Modelling with Phone-level Mixtu

![[ICLR 2022] Pretraining Text Encoders with Adversarial Mixture of Training Signal Generators](https://github.com/microsoft/AMOS/raw/main/AMOS.png)

[ICLR 2022] Pretraining Text Encoders with Adversarial Mixture of Training Signal Generators

AMOS This repository contains the scripts for fine-tuning AMOS pretrained models on GLUE and SQuAD 2.0 benchmarks. Paper: Pretraining Text Encoders wi

Pytorch implementation of paper: "NeurMiPs: Neural Mixture of Planar Experts for View Synthesis"

NeurMips: Neural Mixture of Planar Experts for View Synthesis This is the official repo for PyTorch implementation of paper "NeurMips: Neural Mixture

This PyTorch package implements MoEBERT: from BERT to Mixture-of-Experts via Importance-Guided Adaptation (NAACL 2022).

MoEBERT This PyTorch package implements MoEBERT: from BERT to Mixture-of-Experts via Importance-Guided Adaptation (NAACL 2022). Installation Create an

Audio Source Separation is the process of separating a mixture into isolated sounds from individual sources

Audio Source Separation is the process of separating a mixture into isolated sounds from individual sources (e.g. just the lead vocals).

This repository contains code to train and render Mixture of Volumetric Primitives (MVP) models

Mixture of Volumetric Primitives -- Training and Evaluation This repository contains code to train and render Mixture of Volumetric Primitives (MVP) m

To provide 100 JAX exercises over different sections structured as a course or tutorials to teach and learn for beginners, intermediates as well as experts

JaxTon 💯 JAX exercises Mission 🚀 To provide 100 JAX exercises over different sections structured as a course or tutorials to teach and learn for beg

Scaling Vision with Sparse Mixture of Experts

Scaling Vision with Sparse Mixture of Experts This repository contains the code for training and fine-tuning Sparse MoE models for vision (V-MoE) on I

Code for paper [ACE: Ally Complementary Experts for Solving Long-Tailed Recognition in One-Shot] (ICCV 2021, oral))

ACE: Ally Complementary Experts for Solving Long-Tailed Recognition in One-Shot This repository is the official PyTorch implementation of ICCV-21 pape

The implementation of the lifelong infinite mixture model

Lifelong infinite mixture model 📋 This is the implementation of the Lifelong infinite mixture model 📋 Accepted by ICCV 2021 Title : Lifelong Infinit

Machine learning library for fast and efficient Gaussian mixture models

This repository contains code which implements the Stochastic Gaussian Mixture Model (S-GMM) for event-based datasets Dependencies CMake Premake4 Blaz

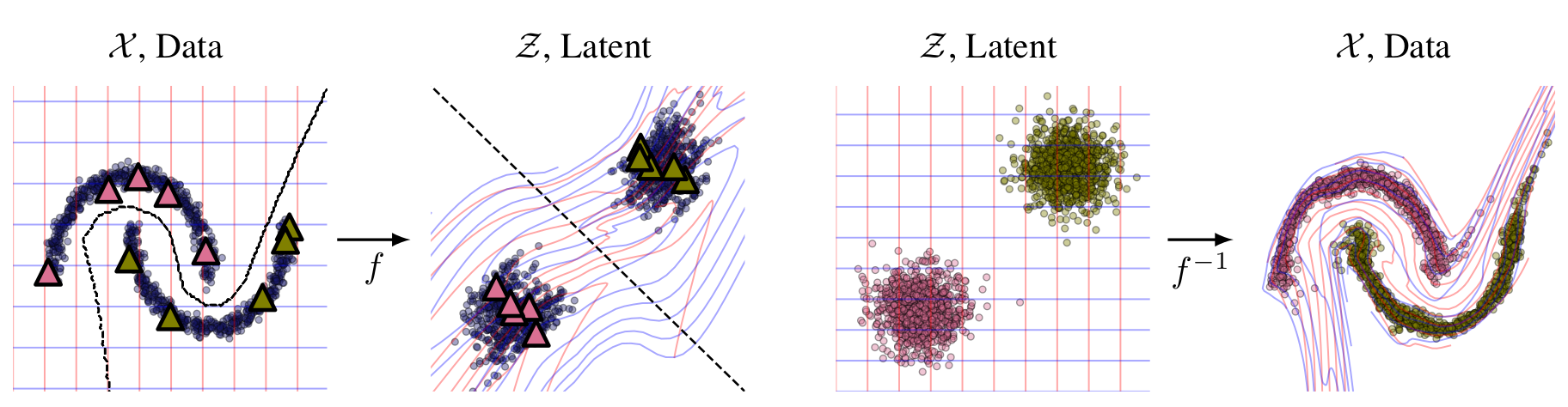

PyTorch implementation of the Flow Gaussian Mixture Model (FlowGMM) model from our paper

Flow Gaussian Mixture Model (FlowGMM) This repository contains a PyTorch implementation of the Flow Gaussian Mixture Model (FlowGMM) model from our pa

Code for "Learning From Multiple Experts: Self-paced Knowledge Distillation for Long-tailed Classification", ECCV 2020 Spotlight

Learning From Multiple Experts: Self-paced Knowledge Distillation for Long-tailed Classification Implementation of "Learning From Multiple Experts: Se

![[ICLR 2021 Spotlight] Pytorch implementation for](https://github.com/frank-xwang/RIDE-LongTailRecognition/raw/main/title-img.png)

[ICLR 2021 Spotlight] Pytorch implementation for "Long-tailed Recognition by Routing Diverse Distribution-Aware Experts."

RIDE: Long-tailed Recognition by Routing Diverse Distribution-Aware Experts. by Xudong Wang, Long Lian, Zhongqi Miao, Ziwei Liu and Stella X. Yu at UC

Interactive Visualization to empower domain experts to align ML model behaviors with their knowledge.

An interactive visualization system designed to helps domain experts responsibly edit Generalized Additive Models (GAMs). For more information, check

Generative Handwriting using LSTM Mixture Density Network with TensorFlow

Generative Handwriting Demo using TensorFlow An attempt to implement the random handwriting generation portion of Alex Graves' paper. See my blog post

'Aligned mixture of latent dynamical systems' (amLDS) for stimulus decoding probabilistic manifold alignment across animals. P. Herrero-Vidal et al. NeurIPS 2021 code.

Across-animal odor decoding by probabilistic manifold alignment (NeurIPS 2021) This repository is the official implementation of aligned mixture of la

This repository uses a mixture of numbers, alphabets, and other symbols found on the computer keyboard

This repository uses a mixture of numbers, alphabets, and other symbols found on the computer keyboard to form a 16-character password which is unpredictable and cannot easily be memorised.

Code and results accompanying our paper titled Mixture Proportion Estimation and PU Learning: A Modern Approach at Neurips 2021 (Spotlight)

Mixture Proportion Estimation and PU Learning: A Modern Approach This repository is the official implementation of Mixture Proportion Estimation and P

Image inpainting using Gaussian Mixture Models

dmfa_inpainting Source code for: MisConv: Convolutional Neural Networks for Missing Data (to be published at WACV 2022) Estimating conditional density

Implementation of several Bayesian multi-target tracking algorithms, including Poisson multi-Bernoulli mixture filters for sets of targets and sets of trajectories. The repository also includes the GOSPA metric and a metric for sets of trajectories to evaluate performance.

This repository contains the Matlab implementations for the following multi-target filtering/tracking algorithms: - Folder PMBM contains the implemen

Modeling CNN layers activity with Gaussian mixture model

GMM-CNN This code package implements the modeling of CNN layers activity with Gaussian mixture model and Inference Graphs visualization technique from

Implementation of ICCV 2021 oral paper -- A Novel Self-Supervised Learning for Gaussian Mixture Model

SS-GMM Implementation of ICCV 2021 oral paper -- Self-Supervised Image Prior Learning with GMM from a Single Noisy Image with supplementary material R

This package implements THOR: Transformer with Stochastic Experts.

THOR: Transformer with Stochastic Experts This PyTorch package implements Taming Sparsely Activated Transformer with Stochastic Experts. Installation

EMNLP 2021: Single-dataset Experts for Multi-dataset Question-Answering

MADE (Multi-Adapter Dataset Experts) This repository contains the implementation of MADE (Multi-adapter dataset experts), which is described in the pa

EMNLP 2021: Single-dataset Experts for Multi-dataset Question-Answering

MADE (Multi-Adapter Dataset Experts) This repository contains the implementation of MADE (Multi-adapter dataset experts), which is described in the pa

Tutel MoE: An Optimized Mixture-of-Experts Implementation

Project Tutel Tutel MoE: An Optimized Mixture-of-Experts Implementation. Supported Framework: Pytorch Supported GPUs: CUDA(fp32 + fp16), ROCm(fp32) Ho

Official Pytorch implementation of Test-Agnostic Long-Tailed Recognition by Test-Time Aggregating Diverse Experts with Self-Supervision.

This repository is the official Pytorch implementation of Test-Agnostic Long-Tailed Recognition by Test-Time Aggregating Diverse Experts with Self-Supervision.

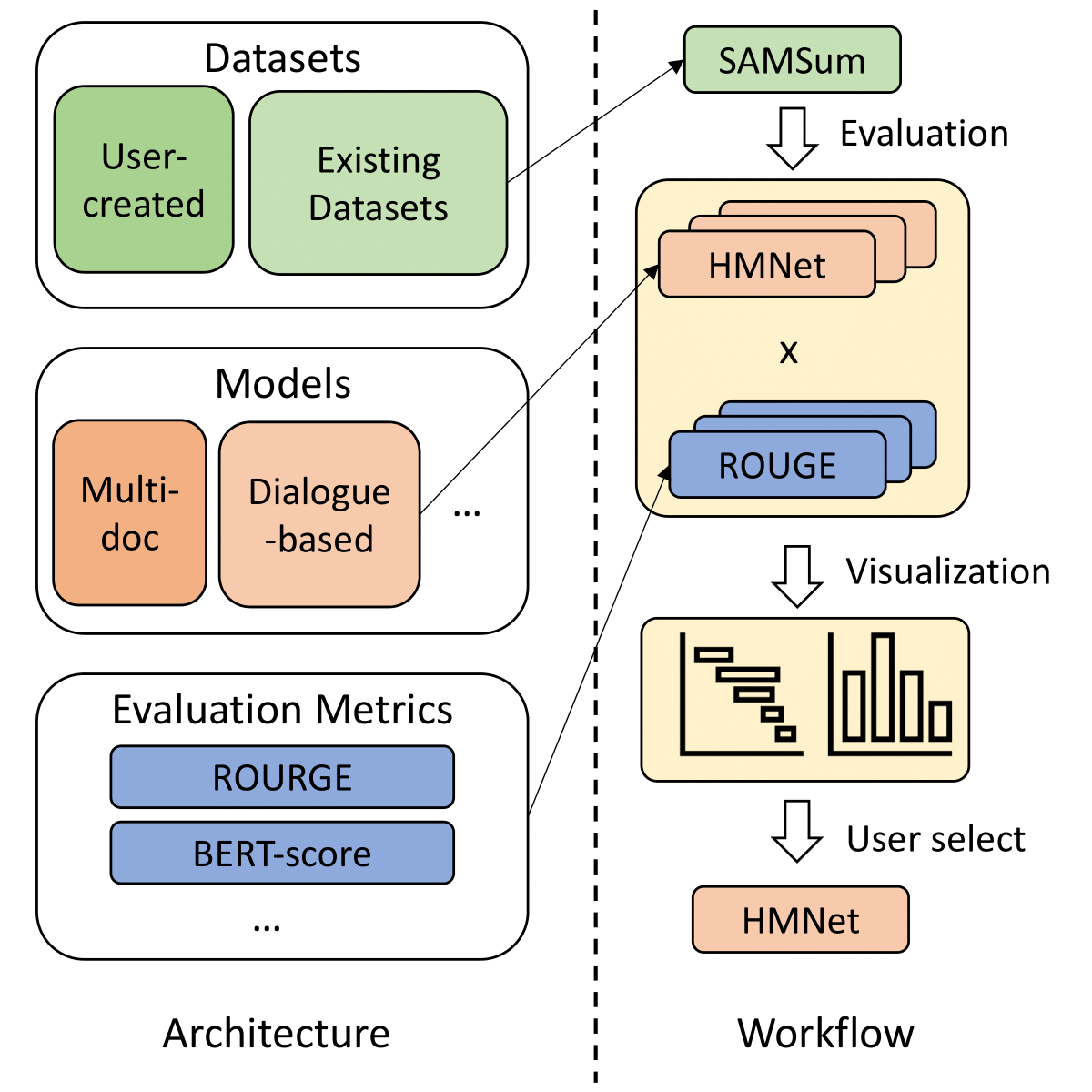

SummerTime - Text Summarization Toolkit for Non-experts

A library to help users choose appropriate summarization tools based on their specific tasks or needs. Includes models, evaluation metrics, and datasets.

SMD-Nets: Stereo Mixture Density Networks

SMD-Nets: Stereo Mixture Density Networks This repository contains a Pytorch implementation of "SMD-Nets: Stereo Mixture Density Networks" (CVPR 2021)

30 Days Of Machine Learning Using Pytorch

Objective of the repository is to learn and build machine learning models using Pytorch. 30DaysofML Using Pytorch

This repository holds the code for the paper "Deep Conditional Gaussian Mixture Model forConstrained Clustering".

Deep Conditional Gaussian Mixture Model for Constrained Clustering. This repository holds the code for the paper Deep Conditional Gaussian Mixture Mod

Objective of the repository is to learn and build machine learning models using Pytorch. 30DaysofML Using Pytorch

30 Days Of Machine Learning Using Pytorch Objective of the repository is to learn and build machine learning models using Pytorch. List of Algorithms

Official PyTorch implementation of MX-Font (Multiple Heads are Better than One: Few-shot Font Generation with Multiple Localized Experts)

Introduction Pytorch implementation of Multiple Heads are Better than One: Few-shot Font Generation with Multiple Localized Expert. | paper Song Park1

Decentralized deep learning in PyTorch. Built to train models on thousands of volunteers across the world.

Hivemind: decentralized deep learning in PyTorch Hivemind is a PyTorch library to train large neural networks across the Internet. Its intended usage

Machine learning, in numpy

numpy-ml Ever wish you had an inefficient but somewhat legible collection of machine learning algorithms implemented exclusively in NumPy? No? Install