1875 Repositories

Python neural-backed-decision-trees Libraries

PyTorch - Python + Nim

Master Release Pytorch - Py + Nim A Nim frontend for pytorch, aiming to be mostly auto-generated and internally using ATen. Because Nim compiles to C+

Kaggle | 9th place single model solution for TGS Salt Identification Challenge

UNet for segmenting salt deposits from seismic images with PyTorch. General We, tugstugi and xuyuan, have participated in the Kaggle competition TGS S

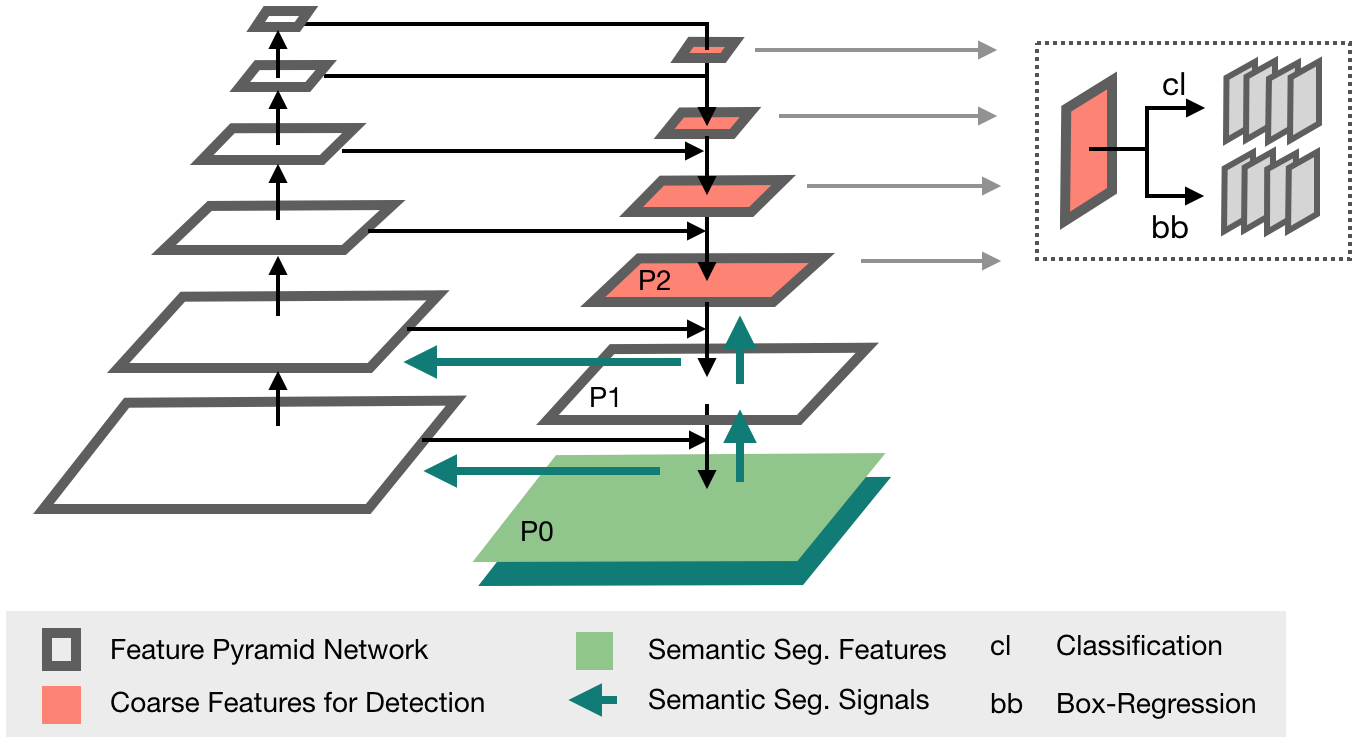

The Medical Detection Toolkit contains 2D + 3D implementations of prevalent object detectors such as Mask R-CNN, Retina Net, Retina U-Net, as well as a training and inference framework focused on dealing with medical images.

The Medical Detection Toolkit contains 2D + 3D implementations of prevalent object detectors such as Mask R-CNN, Retina Net, Retina U-Net, as well as a training and inference framework focused on dealing with medical images.

ocaml-torch provides some ocaml bindings for the PyTorch tensor library.

ocaml-torch provides some ocaml bindings for the PyTorch tensor library. This brings to OCaml NumPy-like tensor computations with GPU acceleration and tape-based automatic differentiation.

Rust bindings for the C++ api of PyTorch.

tch-rs Rust bindings for the C++ api of PyTorch. The goal of the tch crate is to provide some thin wrappers around the C++ PyTorch api (a.k.a. libtorc

🛠 All-in-one web-based IDE specialized for machine learning and data science.

All-in-one web-based development environment for machine learning Getting Started • Features & Screenshots • Support • Report a Bug • FAQ • Known Issu

Geometry-Consistent Neural Shape Representation with Implicit Displacement Fields

Geometry-Consistent Neural Shape Representation with Implicit Displacement Fields [project page][paper][cite] Geometry-Consistent Neural Shape Represe

HyperPose is a library for building high-performance custom pose estimation applications.

HyperPose is a library for building high-performance custom pose estimation applications.

PyTorch implementation of Densely Connected Time Delay Neural Network

Densely Connected Time Delay Neural Network PyTorch implementation of Densely Connected Time Delay Neural Network (D-TDNN) in our paper "Densely Conne

NU-Wave: A Diffusion Probabilistic Model for Neural Audio Upsampling @ INTERSPEECH 2021 Accepted

NU-Wave — Official PyTorch Implementation NU-Wave: A Diffusion Probabilistic Model for Neural Audio Upsampling Junhyeok Lee, Seungu Han @ MINDsLab Inc

Neural Logic Inductive Learning

Neural Logic Inductive Learning This is the implementation of the Neural Logic Inductive Learning model (NLIL) proposed in the ICLR 2020 paper: Learn

Code release for DS-NeRF (Depth-supervised Neural Radiance Fields)

Depth-supervised NeRF: Fewer Views and Faster Training for Free Project | Paper | YouTube Pytorch implementation of our method for learning neural rad

Official PyTorch implementation of "Rapid Neural Architecture Search by Learning to Generate Graphs from Datasets" (ICLR 2021)

Rapid Neural Architecture Search by Learning to Generate Graphs from Datasets This is the official PyTorch implementation for the paper Rapid Neural A

Code for paper "Vocabulary Learning via Optimal Transport for Neural Machine Translation"

**Codebase and data are uploaded in progress. ** VOLT(-py) is a vocabulary learning codebase that allows researchers and developers to automaticaly ge

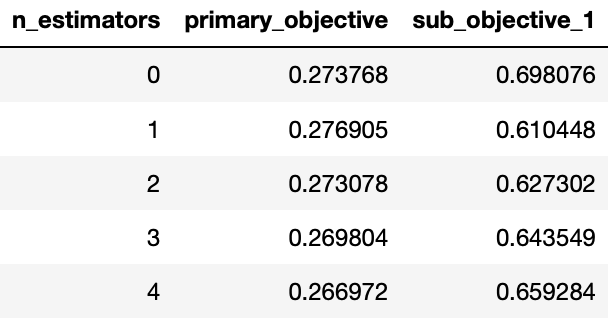

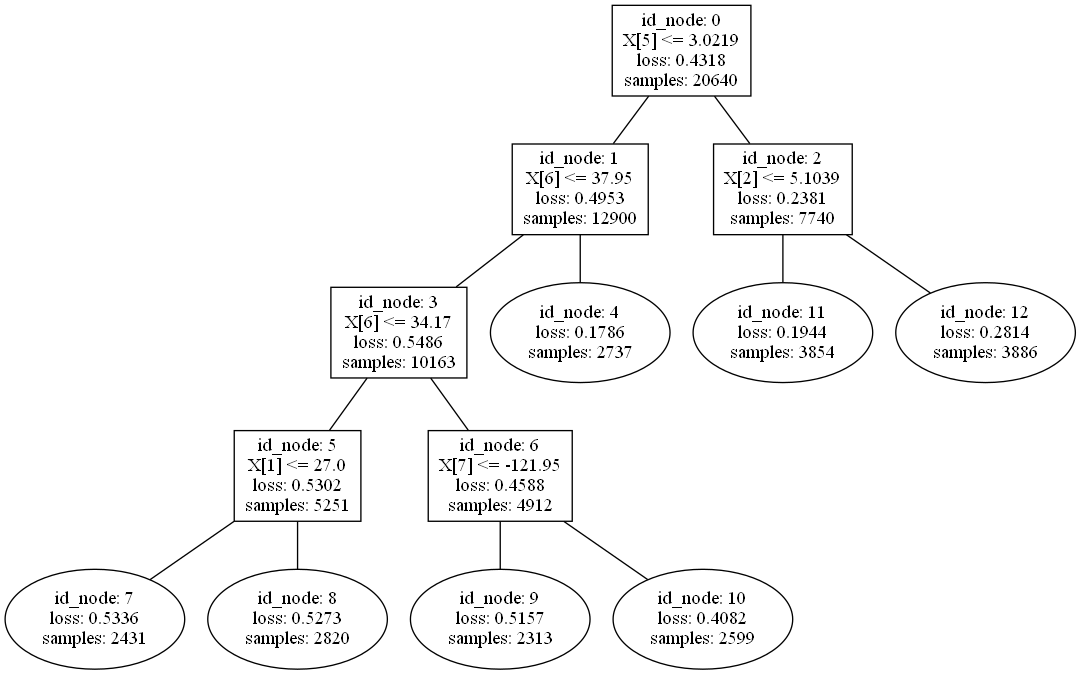

MooGBT is a library for Multi-objective optimization in Gradient Boosted Trees.

MooGBT is a library for Multi-objective optimization in Gradient Boosted Trees. MooGBT optimizes for multiple objectives by defining constraints on sub-objective(s) along with a primary objective. The constraints are defined as upper bounds on sub-objective loss function. MooGBT uses a Augmented Lagrangian(AL) based constrained optimization framework with Gradient Boosted Trees, to optimize for multiple objectives.

Official PyTorch implementation of the preprint paper "Stylized Neural Painting", accepted to CVPR 2021.

Official PyTorch implementation of the preprint paper "Stylized Neural Painting", accepted to CVPR 2021.

Classify bird species based on their songs using SIamese Networks and 1D dilated convolutions.

The goal is to classify different birds species based on their songs/calls. Spectrograms have been extracted from the audio samples and used as features for classification.

This is my reading list for my PhD in AI, NLP, Deep Learning and more.

This is my reading list for my PhD in AI, NLP, Deep Learning and more.

This is the source code for our ICLR2021 paper: Adaptive Universal Generalized PageRank Graph Neural Network.

GPRGNN This is the source code for our ICLR2021 paper: Adaptive Universal Generalized PageRank Graph Neural Network. Hidden state feature extraction i

Code for the paper "Balancing Training for Multilingual Neural Machine Translation, ACL 2020"

Balancing Training for Multilingual Neural Machine Translation Implementation of the paper Balancing Training for Multilingual Neural Machine Translat

[ACL 20] Probing Linguistic Features of Sentence-level Representations in Neural Relation Extraction

REval Table of Contents Introduction Overview Requirements Installation Probing Usage Citation License 🎓 Introduction REval is a simple framework for

Code for the paper "Implicit Representations of Meaning in Neural Language Models"

Implicit Representations of Meaning in Neural Language Models Preliminaries Create and set up a conda environment as follows: conda create -n state-pr

R-Drop: Regularized Dropout for Neural Networks

R-Drop: Regularized Dropout for Neural Networks R-drop is a simple yet very effective regularization method built upon dropout, by minimizing the bidi

PyTorch Implementation of NCSOFT's FastPitchFormant: Source-filter based Decomposed Modeling for Speech Synthesis

FastPitchFormant - PyTorch Implementation PyTorch Implementation of FastPitchFormant: Source-filter based Decomposed Modeling for Speech Synthesis. Qu

An Efficient Implementation of Analytic Mesh Algorithm for 3D Iso-surface Extraction from Neural Networks

AnalyticMesh Analytic Marching is an exact meshing solution from neural networks. Compared to standard methods, it completely avoids geometric and top

Official repository for the paper "Going Beyond Linear Transformers with Recurrent Fast Weight Programmers"

Recurrent Fast Weight Programmers This is the official repository containing the code we used to produce the experimental results reported in the pape

PPLNN is a Primitive Library for Neural Network is a high-performance deep-learning inference engine for efficient AI inferencing

PPLNN is a Primitive Library for Neural Network is a high-performance deep-learning inference engine for efficient AI inferencing

Graph4nlp is the library for the easy use of Graph Neural Networks for NLP

Graph4NLP Graph4NLP is an easy-to-use library for R&D at the intersection of Deep Learning on Graphs and Natural Language Processing (i.e., DLG4NLP).

PyTorch implementation of ARM-Net: Adaptive Relation Modeling Network for Structured Data.

A ready-to-use framework of latest models for structured (tabular) data learning with PyTorch. Applications include recommendation, CRT prediction, healthcare analytics, and etc.

A python library to build Model Trees with Linear Models at the leaves.

A python library to build Model Trees with Linear Models at the leaves.

This is a template for the Non-autoregressive Deep Learning-Based TTS model (in PyTorch).

Non-autoregressive Deep Learning-Based TTS Template This is a template for the Non-autoregressive TTS model. It contains Data Preprocessing Pipeline D

EdMIPS: Rethinking Differentiable Search for Mixed-Precision Neural Networks

EdMIPS is an efficient algorithm to search the optimal mixed-precision neural network directly without proxy task on ImageNet given computation budgets. It can be applied to many popular network architectures, including ResNet, GoogLeNet, and Inception-V3.

Pytorch Implementation of Spiking Neural Networks Calibration, ICML 2021

SNN_Calibration Pytorch Implementation of Spiking Neural Networks Calibration, ICML 2021 Feature Comparison of SNN calibration: Features SNN Direct Tr

This repository contains a PyTorch implementation of "AD-NeRF: Audio Driven Neural Radiance Fields for Talking Head Synthesis".

AD-NeRF: Audio Driven Neural Radiance Fields for Talking Head Synthesis | Project Page | Paper | PyTorch implementation for the paper "AD-NeRF: Audio

30 Days Of Machine Learning Using Pytorch

Objective of the repository is to learn and build machine learning models using Pytorch. 30DaysofML Using Pytorch

PyTorch implementation of Soft-DTW: a Differentiable Loss Function for Time-Series in CUDA

Soft DTW Loss Function for PyTorch in CUDA This is a Pytorch Implementation of Soft-DTW: a Differentiable Loss Function for Time-Series which is batch

Orthogonal Over-Parameterized Training

The inductive bias of a neural network is largely determined by the architecture and the training algorithm. To achieve good generalization, how to effectively train a neural network is of great importance. We propose a novel orthogonal over-parameterized training (OPT) framework that can provably minimize the hyperspherical energy which characterizes the diversity of neurons on a hypersphere. See our previous work -- MHE for an in-depth introduction.

DeepProbLog is an extension of ProbLog that integrates Probabilistic Logic Programming with deep learning by introducing the neural predicate.

DeepProbLog DeepProbLog is an extension of ProbLog that integrates Probabilistic Logic Programming with deep learning by introducing the neural predic

Grapheme-to-phoneme (G2P) conversion is the process of generating pronunciation for words based on their written form.

Neural G2P to portuguese language Grapheme-to-phoneme (G2P) conversion is the process of generating pronunciation for words based on their written for

PyTorch Implementation of Google Brain's WaveGrad 2: Iterative Refinement for Text-to-Speech Synthesis

WaveGrad2 - PyTorch Implementation PyTorch Implementation of Google Brain's WaveGrad 2: Iterative Refinement for Text-to-Speech Synthesis. Status (202

MetaBalance: High-Performance Neural Networks for Class-Imbalanced Data

This repository is the official PyTorch implementation of Meta-Balance. Find the paper on arxiv MetaBalance: High-Performance Neural Networks for Clas

Learning Neural Network Subspaces

Learning Neural Network Subspaces Welcome to the codebase for Learning Neural Network Subspaces by Mitchell Wortsman, Maxwell Horton, Carlos Guestrin,

Random Walk Graph Neural Networks

Random Walk Graph Neural Networks This repository is the official implementation of Random Walk Graph Neural Networks. Requirements Code is written in

JittorVis is a deep neural network computational graph visualization library based on Jittor.

JittorVis - Visual understanding of deep learning model.

The `rtdl` library + The official implementation of the paper

The `rtdl` library + The official implementation of the paper "Revisiting Deep Learning Models for Tabular Data"

PyTorch implementation of "ContextNet: Improving Convolutional Neural Networks for Automatic Speech Recognition with Global Context" (INTERSPEECH 2020)

ContextNet ContextNet has CNN-RNN-transducer architecture and features a fully convolutional encoder that incorporates global context information into

Continuous Diffusion Graph Neural Network

We present Graph Neural Diffusion (GRAND) that approaches deep learning on graphs as a continuous diffusion process and treats Graph Neural Networks (GNNs) as discretisations of an underlying PDE.

Jina allows you to build deep learning-powered search-as-a-service in just minutes

Cloud-native neural search framework for any kind of data

In this repository, I have developed an end to end Automatic speech recognition project. I have developed the neural network model for automatic speech recognition with PyTorch and used MLflow to manage the ML lifecycle, including experimentation, reproducibility, deployment, and a central model registry.

End to End Automatic Speech Recognition In this repository, I have developed an end to end Automatic speech recognition project. I have developed the

Segmentation and Identification of Vertebrae in CT Scans using CNN, k-means Clustering and k-NN

Segmentation and Identification of Vertebrae in CT Scans using CNN, k-means Clustering and k-NN If you use this code for your research, please cite ou

Neural Surface Maps

Neural Surface Maps Official implementation of Neural Surface Maps - Luca Morreale, Noam Aigerman, Vladimir Kim, Niloy J. Mitra [Paper] [Project Page]

Unofficial PyTorch implementation of Neural Additive Models (NAM) by Agarwal, et al.

nam-pytorch Unofficial PyTorch implementation of Neural Additive Models (NAM) by Agarwal, et al. [abs, pdf] Installation You can access nam-pytorch vi

The code for the CVPR 2021 paper Neural Deformation Graphs, a novel approach for globally-consistent deformation tracking and 3D reconstruction of non-rigid objects.

Neural Deformation Graphs Project Page | Paper | Video Neural Deformation Graphs for Globally-consistent Non-rigid Reconstruction Aljaž Božič, Pablo P

![A PyTorch implementation of EventProp [https://arxiv.org/abs/2009.08378], a method to train Spiking Neural Networks](https://github.com/lolemacs/pytorch-eventprop/raw/master/images/forward.png)

A PyTorch implementation of EventProp [https://arxiv.org/abs/2009.08378], a method to train Spiking Neural Networks

Spiking Neural Network training with EventProp This is an unofficial PyTorch implemenation of EventProp, a method to compute exact gradients for Spiki

NBEATSx: Neural basis expansion analysis with exogenous variables

NBEATSx: Neural basis expansion analysis with exogenous variables We extend the NBEATS model to incorporate exogenous factors. The resulting method, c

![[ICML 2021]](https://github.com/Shen-Lab/GraphCL_Automated/raw/master/joao.png)

[ICML 2021] "Graph Contrastive Learning Automated" by Yuning You, Tianlong Chen, Yang Shen, Zhangyang Wang

Graph Contrastive Learning Automated PyTorch implementation for Graph Contrastive Learning Automated [talk] [poster] [appendix] Yuning You, Tianlong C

NAS Benchmark in "Prioritized Architecture Sampling with Monto-Carlo Tree Search", CVPR2021

NAS-Bench-Macro This repository includes the benchmark and code for NAS-Bench-Macro in paper "Prioritized Architecture Sampling with Monto-Carlo Tree

🔮 Execution time predictions for deep neural network training iterations across different GPUs.

Habitat: A Runtime-Based Computational Performance Predictor for Deep Neural Network Training Habitat is a tool that predicts a deep neural network's

UnivNet: A Neural Vocoder with Multi-Resolution Spectrogram Discriminators for High-Fidelity Waveform Generation

UnivNet UnivNet: A Neural Vocoder with Multi-Resolution Spectrogram Discriminators for High-Fidelity Waveform Generation. Training python train.py --c

CVPR '21: In the light of feature distributions: Moment matching for Neural Style Transfer

In the light of feature distributions: Moment matching for Neural Style Transfer (CVPR 2021) This repository provides code to recreate results present

This is the official repository of XVFI (eXtreme Video Frame Interpolation)

XVFI This is the official repository of XVFI (eXtreme Video Frame Interpolation), https://arxiv.org/abs/2103.16206 Last Update: 20210607 We provide th

Advances in Neural Information Processing Systems (NeurIPS), 2020.

What is being transferred in transfer learning? This repo contains the code for the following paper: Behnam Neyshabur*, Hanie Sedghi*, Chiyuan Zhang*.

A Neural Language Style Transfer framework to transfer natural language text smoothly between fine-grained language styles like formal/casual, active/passive, and many more. Created by Prithiviraj Damodaran. Open to pull requests and other forms of collaboration.

Styleformer A Neural Language Style Transfer framework to transfer natural language text smoothly between fine-grained language styles like formal/cas

Deep reinforcement learning library built on top of Neural Network Libraries

Deep Reinforcement Learning Library built on top of Neural Network Libraries NNablaRL is a deep reinforcement learning library built on top of Neural

Yet Another Neural Machine Translation Toolkit

YANMTT YANMTT is short for Yet Another Neural Machine Translation Toolkit. For a backstory how I ended up creating this toolkit scroll to the bottom o

EPSANet:An Efficient Pyramid Split Attention Block on Convolutional Neural Network

EPSANet:An Efficient Pyramid Split Attention Block on Convolutional Neural Network This repo contains the official Pytorch implementaion code and conf

PyTorch Implementation of Meta-StyleSpeech : Multi-Speaker Adaptive Text-to-Speech Generation

StyleSpeech - PyTorch Implementation PyTorch Implementation of Meta-StyleSpeech : Multi-Speaker Adaptive Text-to-Speech Generation. Status (2021.06.13

Neural Ensemble Search for Performant and Calibrated Predictions

Neural Ensemble Search Introduction This repo contains the code accompanying the paper: Neural Ensemble Search for Performant and Calibrated Predictio

Code for Deterministic Neural Networks with Appropriate Inductive Biases Capture Epistemic and Aleatoric Uncertainty

Deep Deterministic Uncertainty This repository contains the code for Deterministic Neural Networks with Appropriate Inductive Biases Capture Epistemic

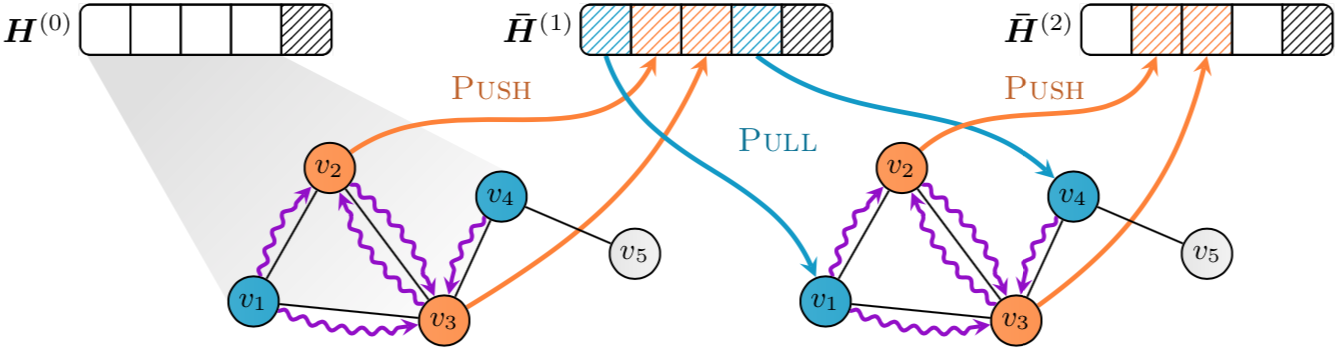

Implementation of "GNNAutoScale: Scalable and Expressive Graph Neural Networks via Historical Embeddings" in PyTorch

PyGAS: Auto-Scaling GNNs in PyG PyGAS is the practical realization of our G NN A uto S cale (GAS) framework, which scales arbitrary message-passing GN

Implementation of Segformer, Attention + MLP neural network for segmentation, in Pytorch

Segformer - Pytorch Implementation of Segformer, Attention + MLP neural network for segmentation, in Pytorch. Install $ pip install segformer-pytorch

MERLOT: Multimodal Neural Script Knowledge Models

merlot MERLOT: Multimodal Neural Script Knowledge Models MERLOT is a model for learning what we are calling "neural script knowledge" -- representatio

The official PyTorch implementation of recent paper - SAINT: Improved Neural Networks for Tabular Data via Row Attention and Contrastive Pre-Training

This repository is the official PyTorch implementation of SAINT. Find the paper on arxiv SAINT: Improved Neural Networks for Tabular Data via Row Atte

banditml is a lightweight contextual bandit & reinforcement learning library designed to be used in production Python services.

banditml is a lightweight contextual bandit & reinforcement learning library designed to be used in production Python services. This library is developed by Bandit ML and ex-authors of Facebook's applied reinforcement learning platform, Reagent.

small collection of functions for neural networks

neurobiba other languages: RU small collection of functions for neural networks. very easy to use! Installation: pip install neurobiba See examples h

Intruder detection systems are common place now, and readily available in industry, but how do they work? They must detect people and large animals, but not generate false alarms in the presence of small animals, changes in lighting, environmental motion such as trees, or melting snow. To work correctly, the system must learn the background, in order to differentiate foreground objects.

Intruder-Detection Intruder detection systems are common place now, and readily available in industry, but how do they work? They must detect people a

PyTorch Implementation of Meta-StyleSpeech : Multi-Speaker Adaptive Text-to-Speech Generation

StyleSpeech - PyTorch Implementation PyTorch Implementation of Meta-StyleSpeech : Multi-Speaker Adaptive Text-to-Speech Generation. Status (2021.06.09

Handwritten Text Recognition (HTR) system implemented with TensorFlow (TF) and trained on the IAM off-line HTR dataset. This Neural Network (NN) model recognizes the text contained in the images of segmented words.

Handwritten-Text-Recognition Handwritten Text Recognition (HTR) system implemented with TensorFlow (TF) and trained on the IAM off-line HTR dataset. T

Unofficial TensorFlow implementation of Protein Interface Prediction using Graph Convolutional Networks.

[TensorFlow] Protein Interface Prediction using Graph Convolutional Networks Unofficial TensorFlow implementation of Protein Interface Prediction usin

The source code of the paper "Understanding Graph Neural Networks from Graph Signal Denoising Perspectives"

GSDN-F and GSDN-EF This repository provides a reference implementation of GSDN-F and GSDN-EF as described in the paper "Understanding Graph Neural Net

Sharpness-Aware Minimization for Efficiently Improving Generalization

Sharpness-Aware-Minimization-TensorFlow This repository provides a minimal implementation of sharpness-aware minimization (SAM) (Sharpness-Aware Minim

Pytorch implementation of MaskFlownet

MaskFlownet-Pytorch Unofficial PyTorch implementation of MaskFlownet (https://github.com/microsoft/MaskFlownet). Tested with: PyTorch 1.5.0 CUDA 10.1

Benchmarks for semi-supervised domain generalization.

Semi-Supervised Domain Generalization This code is the official implementation of the following paper: Semi-Supervised Domain Generalization with Stoc

Neural Factorization of Shape and Reflectance Under An Unknown Illumination

NeRFactor [Paper] [Video] [Project] This is the authors' code release for: NeRFactor: Neural Factorization of Shape and Reflectance Under an Unknown I

Degree-Quant: Quantization-Aware Training for Graph Neural Networks.

Degree-Quant This repo provides a clean re-implementation of the code associated with the paper Degree-Quant: Quantization-Aware Training for Graph Ne

PyTorch Code of "Memory In Memory: A Predictive Neural Network for Learning Higher-Order Non-Stationarity from Spatiotemporal Dynamics"

Memory In Memory Networks It is based on the paper Memory In Memory: A Predictive Neural Network for Learning Higher-Order Non-Stationarity from Spati

Boosted neural network for tabular data

XBNet - Xtremely Boosted Network Boosted neural network for tabular data XBNet is an open source project which is built with PyTorch which tries to co

Pytorch Implementation of DiffSinger: Diffusion Acoustic Model for Singing Voice Synthesis (TTS Extension)

DiffSinger - PyTorch Implementation PyTorch implementation of DiffSinger: Diffusion Acoustic Model for Singing Voice Synthesis (TTS Extension). Status

Deep learning-based approach to discovering Granger causality networks in multivariate time series

Granger causality discovery for neural networks.

Pytorch implementation of Generative Models as Distributions of Functions 🌿

Generative Models as Distributions of Functions This repo contains code to reproduce all experiments in Generative Models as Distributions of Function

Disagreement-Regularized Imitation Learning

Due to a normalization bug the expert trajectories have lower performance than the rl_baseline_zoo reported experts. Please see the following link in

Official codebase for Decision Transformer: Reinforcement Learning via Sequence Modeling.

Decision Transformer Lili Chen*, Kevin Lu*, Aravind Rajeswaran, Kimin Lee, Aditya Grover, Michael Laskin, Pieter Abbeel, Aravind Srinivas†, and Igor M

SparseML is a libraries for applying sparsification recipes to neural networks with a few lines of code, enabling faster and smaller models

SparseML is a toolkit that includes APIs, CLIs, scripts and libraries that apply state-of-the-art sparsification algorithms such as pruning and quantization to any neural network. General, recipe-driven approaches built around these algorithms enable the simplification of creating faster and smaller models for the ML performance community at large.

🔬 A curated list of awesome machine learning strategies & tools in financial market.

🔬 A curated list of awesome machine learning strategies & tools in financial market.

All course materials for the Zero to Mastery Deep Learning with TensorFlow course.

All course materials for the Zero to Mastery Deep Learning with TensorFlow course.

This is the repo for the paper `SumGNN: Multi-typed Drug Interaction Prediction via Efficient Knowledge Graph Summarization'. (published in Bioinformatics'21)

SumGNN: Multi-typed Drug Interaction Prediction via Efficient Knowledge Graph Summarization This is the code for our paper ``SumGNN: Multi-typed Drug

Official code for "Mean Shift for Self-Supervised Learning"

MSF Official code for "Mean Shift for Self-Supervised Learning" Requirements Python = 3.7.6 PyTorch = 1.4 torchvision = 0.5.0 faiss-gpu = 1.6.1 In

Code release to accompany paper "Geometry-Aware Gradient Algorithms for Neural Architecture Search."

Geometry-Aware Gradient Algorithms for Neural Architecture Search This repository contains the code required to run the experiments for the DARTS sear

A supplementary code for Editable Neural Networks, an ICLR 2020 submission.

Editable neural networks A supplementary code for Editable Neural Networks, an ICLR 2020 submission by Anton Sinitsin, Vsevolod Plokhotnyuk, Dmitry Py

An implementation demo of the ICLR 2021 paper Neural Attention Distillation: Erasing Backdoor Triggers from Deep Neural Networks in PyTorch.

Neural Attention Distillation This is an implementation demo of the ICLR 2021 paper Neural Attention Distillation: Erasing Backdoor Triggers from Deep