2838 Repositories

Python neural-topic-models Libraries

An implementation of quantum convolutional neural network with MindQuantum. Huawei, classifying MNIST dataset

关于实现的一点说明 山东大学 2020级 苏博南 www.subonan.com 文件说明 tools.py 这里面主要有两个函数: resize(a, lenb) 这其实是我找同学写的一个小算法hhh。给出一个$28\times 28$的方阵a,返回一个$lenb\times lenb$的方阵。因

A foreign language learning aid using a neural network to predict probability of translating foreign words

Langy Langy is a reading-focused foreign language learning aid orientated towards young children. Reading is an activity that every child knows. It is

Formulae is a Python library that implements Wilkinson's formulas for mixed-effects models.

formulae formulae is a Python library that implements Wilkinson's formulas for mixed-effects models. The main difference with other implementations li

This repository is the official implementation of the Hybrid Self-Attention NEAT algorithm.

This repository is the official implementation of the Hybrid Self-Attention NEAT algorithm. It contains the code to reproduce the results presented in the original paper: https://arxiv.org/abs/2112.03670

Convert onnx models to pytorch.

onnx2torch onnx2torch is an ONNX to PyTorch converter. Our converter: Is easy to use – Convert the ONNX model with the function call convert; Is easy

A web application using [FastAPI + streamlit + Docker] Neural Style Transfer (NST) refers to a class of software algorithms that manipulate digital images

Neural Style Transfer Web App - [FastAPI + streamlit + Docker] NST - application based on the Perceptual Losses for Real-Time Style Transfer and Super

A PaddlePaddle version of Neural Renderer, refer to its PyTorch version

Neural 3D Mesh Renderer in PadddlePaddle A PaddlePaddle version of Neural Renderer, refer to its PyTorch version Install Run: pip install neural-rende

Robbing the FED: Directly Obtaining Private Data in Federated Learning with Modified Models

Robbing the FED: Directly Obtaining Private Data in Federated Learning with Modified Models This repo contains a barebones implementation for the atta

An official source code for paper Deep Graph Clustering via Dual Correlation Reduction, accepted by AAAI 2022

Dual Correlation Reduction Network An official source code for paper Deep Graph Clustering via Dual Correlation Reduction, accepted by AAAI 2022. Any

The python SDK for Eto, the AI focused data platform for teams bringing AI models to production

Eto Labs Python SDK This is the python SDK for Eto, the AI focused data platform for teams bringing AI models to production. The python SDK makes it e

Code for Temporally Abstract Partial Models

Code for Temporally Abstract Partial Models Accompanies the code for the experimental section of the paper: Temporally Abstract Partial Models, Khetar

Code for WECHSEL: Effective initialization of subword embeddings for cross-lingual transfer of monolingual language models.

WECHSEL Code for WECHSEL: Effective initialization of subword embeddings for cross-lingual transfer of monolingual language models. arXiv: https://arx

Experiments on continual learning from a stream of pretrained models.

Ex-model CL Ex-model continual learning is a setting where a stream of experts (i.e. model's parameters) is available and a CL model learns from them

![[AAAI 2022] Sparse Structure Learning via Graph Neural Networks for Inductive Document Classification](https://github.com/qkrdmsghk/TextSSL/raw/master/TextSSL.png)

[AAAI 2022] Sparse Structure Learning via Graph Neural Networks for Inductive Document Classification

Sparse Structure Learning via Graph Neural Networks for inductive document classification Make graph dataset create co-occurrence graph for datasets.

Discovering Explanatory Sentences in Legal Case Decisions Using Pre-trained Language Models.

Statutory Interpretation Data Set This repository contains the data set created for the following research papers: Savelka, Jaromir, and Kevin D. Ashl

A high-performance distributed deep learning system targeting large-scale and automated distributed training.

HETU Documentation | Examples Hetu is a high-performance distributed deep learning system targeting trillions of parameters DL model training, develop

Code for "Typilus: Neural Type Hints" PLDI 2020

Typilus A deep learning algorithm for predicting types in Python. Please find a preprint here. This repository contains its implementation (src/) and

AI4Good project for detecting waste in the environment

Detect waste AI4Good project for detecting waste in environment. www.detectwaste.ml. Our latest results were published in Waste Management journal in

AdamW optimizer for bfloat16 models in pytorch.

Image source AdamW optimizer for bfloat16 models in pytorch. Bfloat16 is currently an optimal tradeoff between range and relative error for deep netwo

The source code of the paper "SHGNN: Structure-Aware Heterogeneous Graph Neural Network"

SHGNN: Structure-Aware Heterogeneous Graph Neural Network The source code and dataset of the paper: SHGNN: Structure-Aware Heterogeneous Graph Neural

Code for WECHSEL: Effective initialization of subword embeddings for cross-lingual transfer of monolingual language models.

WECHSEL Code for WECHSEL: Effective initialization of subword embeddings for cross-lingual transfer of monolingual language models. arXiv: https://arx

Some code of the implements of Geological Modeling Using 3D Pixel-Adaptive and Deformable Convolutional Neural Network

3D-GMPDCNN Geological Modeling Using 3D Pixel-Adaptive and Deformable Convolutional Neural Network PyTorch implementation of "Geological Modeling Usin

MLReef is an open source ML-Ops platform that helps you collaborate, reproduce and share your Machine Learning work with thousands of other users.

The collaboration platform for Machine Learning MLReef is an open source ML-Ops platform that helps you collaborate, reproduce and share your Machine

PyGAD, a Python 3 library for building the genetic algorithm and training machine learning algorithms (Keras & PyTorch).

PyGAD: Genetic Algorithm in Python PyGAD is an open-source easy-to-use Python 3 library for building the genetic algorithm and optimizing machine lear

Selecting Parallel In-domain Sentences for Neural Machine Translation Using Monolingual Texts

DataSelection-NMT Selecting Parallel In-domain Sentences for Neural Machine Translation Using Monolingual Texts Quick update: The paper got accepted o

Programming with Neural Surrogates of Programs

Programming with Neural Surrogates of Programs

Twin-deep neural network for semi-supervised learning of materials properties

Deep Semi-Supervised Teacher-Student Material Synthesizability Prediction Citation: Semi-supervised teacher-student deep neural network for materials

Centroid-UNet is deep neural network model to detect centroids from satellite images.

Centroid UNet - Locating Object Centroids in Aerial/Serial Images Introduction Centroid-UNet is deep neural network model to detect centroids from Aer

Integrate GraphQL with your Pydantic models

graphene-pydantic A Pydantic integration for Graphene. Installation pip install "graphene-pydantic" Examples Here is a simple Pydantic model: import u

PyG (PyTorch Geometric) - A library built upon PyTorch to easily write and train Graph Neural Networks (GNNs)

PyG (PyTorch Geometric) is a library built upon PyTorch to easily write and train Graph Neural Networks (GNNs) for a wide range of applications related to structured data.

Using image super resolution models with vapoursynth and speeding them up with TensorRT

vs-RealEsrganAnime-tensorrt-docker Using image super resolution models with vapoursynth and speeding them up with TensorRT. Also a docker image since

This repository is an individual project made at BME with the topic of self-driving car simulator and control algorithm.

BME individual project - NEAT based self-driving car This repository is an individual project made at BME with the topic of self-driving car simulator

Official implementation of the article "Unsupervised JPEG Domain Adaptation For Practical Digital Forensics"

Unsupervised JPEG Domain Adaptation for Practical Digital Image Forensics @WIFS2021 (Montpellier, France) Rony Abecidan, Vincent Itier, Jeremie Boulan

Code for paper: "Spinning Language Models for Propaganda-As-A-Service"

Spinning Language Models for Propaganda-As-A-Service This is the source code for the Arxiv version of the paper. You can use this Google Colab to expl

Official Implementation of "LUNAR: Unifying Local Outlier Detection Methods via Graph Neural Networks"

LUNAR Official Implementation of "LUNAR: Unifying Local Outlier Detection Methods via Graph Neural Networks" Adam Goodge, Bryan Hooi, Ng See Kiong and

A FAIR dataset of TCV experimental results for validating edge/divertor turbulence models.

TCV-X21 validation for divertor turbulence simulations Quick links Intro Welcome to TCV-X21. We're glad you've found us! This repository is designed t

Pydantic models for pywttr and aiopywttr.

Pydantic models for pywttr and aiopywttr.

A minimal, standalone viewer for 3D animations stored as stop-motion sequences of individual .obj mesh files.

ObjSequenceViewer V0.5 A minimal, standalone viewer for 3D animations stored as stop-motion sequences of individual .obj mesh files. Installation: pip

PyTorch implementation of normalizing flow models

PyTorch implementation of normalizing flow models

Codes to pre-train T5 (Text-to-Text Transfer Transformer) models pre-trained on Japanese web texts

t5-japanese Codes to pre-train T5 (Text-to-Text Transfer Transformer) models pre-trained on Japanese web texts. The following is a list of models that

A PyTorch library and evaluation platform for end-to-end compression research

CompressAI CompressAI (compress-ay) is a PyTorch library and evaluation platform for end-to-end compression research. CompressAI currently provides: c

PyAbsorp is a python module that has the main focus to help estimate the Sound Absorption Coefficient.

This is a package developed to be use to find the Sound Absorption Coefficient through some implemented models, like Biot-Allard, Johnson-Champoux and

SeqAttack: a framework for adversarial attacks on token classification models

A framework for adversarial attacks against token classification models

A library for researching neural networks compression and acceleration methods.

A library for researching neural networks compression and acceleration methods.

Users can free try their models on SIDD dataset based on this code

SIDD benchmark 1 Train python train.py If you want to train your network, just modify the yaml in the options folder. 2 Validation python validation.p

PyTorch implementation of a collections of scalable Video Transformer Benchmarks.

PyTorch implementation of Video Transformer Benchmarks This repository is mainly built upon Pytorch and Pytorch-Lightning. We wish to maintain a colle

Neural-PIL: Neural Pre-Integrated Lighting for Reflectance Decomposition - NeurIPS2021

Neural-PIL: Neural Pre-Integrated Lighting for Reflectance Decomposition Project Page | Video | Paper Implementation for Neural-PIL. A novel method wh

A `Neural = Symbolic` framework for sound and complete weighted real-value logic

Logical Neural Networks LNNs are a novel Neuro = symbolic framework designed to seamlessly provide key properties of both neural nets (learning) and s

A small library for doing fluid simulation with neural networks.

Neural Fluid Fields This is a small library for doing fluid simulation with neural fields. Check out our review paper, Neural Fields in Visual Computi

easyNeuron is a simple way to create powerful machine learning models, analyze data and research cutting-edge AI.

easyNeuron is a simple way to create powerful machine learning models, analyze data and research cutting-edge AI.

A lightweight library designed to accelerate the process of training PyTorch models by providing a minimal

A lightweight library designed to accelerate the process of training PyTorch models by providing a minimal, but extensible training loop which is flexible enough to handle the majority of use cases, and capable of utilizing different hardware options with no code changes required.

Official implementation of the paper "Light Field Networks: Neural Scene Representations with Single-Evaluation Rendering"

Light Field Networks Project Page | Paper | Data | Pretrained Models Vincent Sitzmann*, Semon Rezchikov*, William Freeman, Joshua Tenenbaum, Frédo Dur

Official code for "Maximum Likelihood Training of Score-Based Diffusion Models", NeurIPS 2021 (spotlight)

Maximum Likelihood Training of Score-Based Diffusion Models This repo contains the official implementation for the paper Maximum Likelihood Training o

Autoregressive Models in PyTorch.

Autoregressive This repository contains all the necessary PyTorch code, tailored to my presentation, to train and generate data from WaveNet-like auto

Models, datasets and tools for Facial keypoints detection

Template for Data Science Project This repo aims to give a robust starting point to any Data Science related project. It contains readymade tools setu

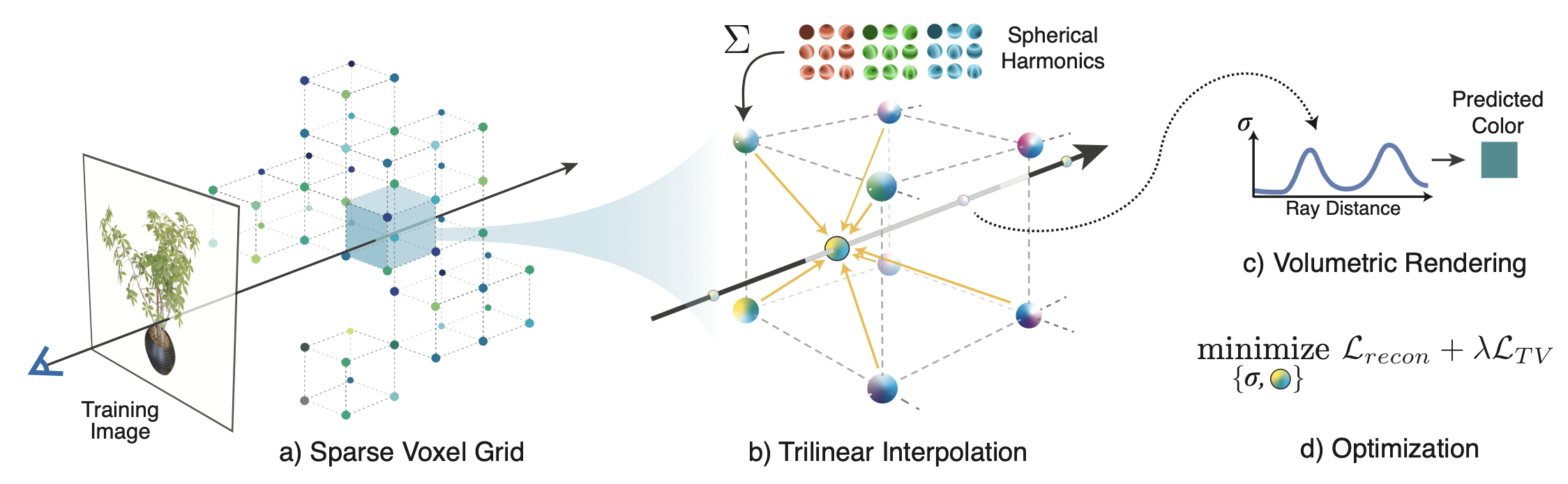

Plenoxels: Radiance Fields without Neural Networks

Plenoxels: Radiance Fields without Neural Networks Alex Yu*, Sara Fridovich-Keil*, Matthew Tancik, Qinhong Chen, Benjamin Recht, Angjoo Kanazawa UC Be

A modular PyTorch library for optical flow estimation using neural networks

A modular PyTorch library for optical flow estimation using neural networks

i-SpaSP: Structured Neural Pruning via Sparse Signal Recovery

i-SpaSP: Structured Neural Pruning via Sparse Signal Recovery This is a public code repository for the publication: i-SpaSP: Structured Neural Pruning

The source code for Adaptive Kernel Graph Neural Network at AAAI2022

AKGNN The source code for Adaptive Kernel Graph Neural Network at AAAI2022. Please cite our paper if you think our work is helpful to you: @inproceedi

Explainability of the Implications of Supervised and Unsupervised Face Image Quality Estimations Through Activation Map Variation Analyses in Face Recognition Models

Explainable_FIQA_WITH_AMVA Note This is the official repository of the paper: Explainability of the Implications of Supervised and Unsupervised Face I

Music Source Separation; Train & Eval & Inference piplines and pretrained models we used for 2021 ISMIR MDX Challenge.

Introduction 1. Usage (For MSS) 1.1 Prepare running environment 1.2 Use pretrained model 1.3 Train new MSS models from scratch 1.3.1 How to train 1.3.

This is the paddle code for SeBoW(Self-Born wiring for neural trees), a kind of neural tree born form a large search space

SeBoW: Self-Born Wiring for neural trees(PaddlePaddle version) This is the paddle code for SeBoW(Self-Born wiring for neural trees), a kind of neural

Implementation of the method proposed in the paper "Neural Descriptor Fields: SE(3)-Equivariant Object Representations for Manipulation"

Neural Descriptor Fields (NDF) PyTorch implementation for training continuous 3D neural fields to represent dense correspondence across objects, and u

Visual Adversarial Imitation Learning using Variational Models (VMAIL)

Visual Adversarial Imitation Learning using Variational Models (VMAIL) This is the official implementation of the NeurIPS 2021 paper. Project website

A collection of models, views, middlewares, and forms to help secure a Django project.

Django-Security This package offers a number of models, views, middlewares and forms to facilitate security hardening of Django applications. Full doc

Supplemental learning materials for "Fourier Feature Networks and Neural Volume Rendering"

Fourier Feature Networks and Neural Volume Rendering This repository is a companion to a lecture given at the University of Cambridge Engineering Depa

Plenoxels: Radiance Fields without Neural Networks, Code release WIP

Plenoxels: Radiance Fields without Neural Networks Alex Yu*, Sara Fridovich-Keil*, Matthew Tancik, Qinhong Chen, Benjamin Recht, Angjoo Kanazawa UC Be

![[KDD 2021, Research Track] DiffMG: Differentiable Meta Graph Search for Heterogeneous Graph Neural Networks](https://user-images.githubusercontent.com/22978940/122879585-a77ed280-d36b-11eb-9b49-6e8fed99faba.png)

[KDD 2021, Research Track] DiffMG: Differentiable Meta Graph Search for Heterogeneous Graph Neural Networks

DiffMG This repository contains the code for our KDD 2021 Research Track paper: DiffMG: Differentiable Meta Graph Search for Heterogeneous Graph Neura

RuleBERT: Teaching Soft Rules to Pre-Trained Language Models

RuleBERT: Teaching Soft Rules to Pre-Trained Language Models (Paper) (Slides) (Video) RuleBERT is a pre-trained language model that has been fine-tune

Experiments for Neural Flows paper

Neural Flows: Efficient Alternative to Neural ODEs [arxiv] TL;DR: We directly model the neural ODE solutions with neural flows, which is much faster a

An MLOps framework to package, deploy, monitor and manage thousands of production machine learning models

Seldon Core: Blazing Fast, Industry-Ready ML An open source platform to deploy your machine learning models on Kubernetes at massive scale. Overview S

Uni-Fold: Training your own deep protein-folding models.

Uni-Fold: Training your own deep protein-folding models. This package provides and implementation of a trainable, Transformer-based deep protein foldi

Torchrecipes provides a set of reproduci-able, re-usable, ready-to-run RECIPES for training different types of models, across multiple domains, on PyTorch Lightning.

Recipes are a standard, well supported set of blueprints for machine learning engineers to rapidly train models using the latest research techniques without significant engineering overhead.Specifically, recipes aims to provide- Consistent access to pre-trained SOTA models ready for production- Reference implementations for SOTA research reproducibility, and infrastructure to guarantee correctness, efficiency, and interoperability.

Neural search engine for AI papers

Papers search Neural search engine for ML papers. Demo Usage is simple: input an abstract, get the matching papers. The following demo also showcases

State-of-the-art NLP through transformer models in a modular design and consistent APIs.

Trapper (Transformers wRAPPER) Trapper is an NLP library that aims to make it easier to train transformer based models on downstream tasks. It wraps h

Official Code for AdvRush: Searching for Adversarially Robust Neural Architectures (ICCV '21)

AdvRush Official Code for AdvRush: Searching for Adversarially Robust Neural Architectures (ICCV '21) Environmental Set-up Python == 3.6.12, PyTorch =

EMNLP 2021 paper Models and Datasets for Cross-Lingual Summarisation.

This repository contains data and code for our EMNLP 2021 paper Models and Datasets for Cross-Lingual Summarisation. Please contact me at [email protected]

A modular application for performing anomaly detection in networks

Deep-Learning-Models-for-Network-Annomaly-Detection The modular app consists for mainly three annomaly detection algorithms. The system supports model

Model Validation Toolkit is a collection of tools to assist with validating machine learning models prior to deploying them to production and monitoring them after deployment to production.

Model Validation Toolkit is a collection of tools to assist with validating machine learning models prior to deploying them to production and monitoring them after deployment to production.

Official Implementation of "LUNAR: Unifying Local Outlier Detection Methods via Graph Neural Networks"

LUNAR Official Implementation of "LUNAR: Unifying Local Outlier Detection Methods via Graph Neural Networks" Adam Goodge, Bryan Hooi, Ng See Kiong and

This repository is an implementation of paper : Improving the Training of Graph Neural Networks with Consistency Regularization

CRGNN Paper : Improving the Training of Graph Neural Networks with Consistency Regularization Environments Implementing environment: GeForce RTX™ 3090

Pairwise learning neural link prediction for ogb link prediction

Pairwise Learning for Neural Link Prediction for OGB (PLNLP-OGB) This repository provides evaluation codes of PLNLP for OGB link property prediction t

Rapid experimentation and scaling of deep learning models on molecular and crystal graphs.

LitMatter A template for rapid experimentation and scaling deep learning models on molecular and crystal graphs. How to use Clone this repository and

Markov Attention Models

Introduction This repo contains code for reproducing the results in the paper Graphical Models with Attention for Context-Specific Independence and an

The best solution of the Weather Prediction track in the Yandex Shifts challenge

yandex-shifts-weather The repository contains information about my solution for the Weather Prediction track in the Yandex Shifts challenge https://re

RID-Noise: Towards Robust Inverse Design under Noisy Environments

This is code of RID-Noise. Reproduce RID-Noise Results Toy tasks Please refer to the notebook ridnoise.ipynb to view experiments on three toy tasks. B

Unsupervised Representation Learning via Neural Activation Coding

Neural Activation Coding This repository contains the code for the paper "Unsupervised Representation Learning via Neural Activation Coding" published

A new framework, collaborative cascade prediction based on graph neural networks (CCasGNN) to jointly utilize the structural characteristics, sequence features, and user profiles.

CCasGNN A new framework, collaborative cascade prediction based on graph neural networks (CCasGNN) to jointly utilize the structural characteristics,

"Graph Neural Controlled Differential Equations for Traffic Forecasting", AAAI 2022

Graph Neural Controlled Differential Equations for Traffic Forecasting Setup Python environment for STG-NCDE Install python environment $ conda env cr

Pytorch code for paper "Image Compressed Sensing Using Non-local Neural Network" TMM 2021.

NL-CSNet-Pytorch Pytorch code for paper "Image Compressed Sensing Using Non-local Neural Network" TMM 2021. Note: this repo only shows the strategy of

SHRIMP: Sparser Random Feature Models via Iterative Magnitude Pruning

SHRIMP: Sparser Random Feature Models via Iterative Magnitude Pruning This repository is the official implementation of "SHRIMP: Sparser Random Featur

S-attack library. Official implementation of two papers "Are socially-aware trajectory prediction models really socially-aware?" and "Vehicle trajectory prediction works, but not everywhere".

S-attack library: A library for evaluating trajectory prediction models This library contains two research projects to assess the trajectory predictio

This repository is an implementation of paper : Improving the Training of Graph Neural Networks with Consistency Regularization

CRGNN Paper : Improving the Training of Graph Neural Networks with Consistency Regularization Environments Implementing environment: GeForce RTX™ 3090

Self-Supervised Learning of Event-based Optical Flow with Spiking Neural Networks

Self-Supervised Learning of Event-based Optical Flow with Spiking Neural Networks Work accepted at NeurIPS'21 [paper, video]. If you use this code in

Machine translation models released by the Gourmet project

Gourmet Models Overview The Gourmet project has released several machine translation models to translate low-resource languages. This repository conta

An Implementation of Transformer in Transformer in TensorFlow for image classification, attention inside local patches

Transformer-in-Transformer An Implementation of the Transformer in Transformer paper by Han et al. for image classification, attention inside local pa

PyTorch implementation for OCT-GAN Neural ODE-based Conditional Tabular GANs (WWW 2021)

OCT-GAN: Neural ODE-based Conditional Tabular GANs (OCT-GAN) Code for reproducing the experiments in the paper: Jayoung Kim*, Jinsung Jeon*, Jaehoon L

Uni-Fold: Training your own deep protein-folding models

Uni-Fold: Training your own deep protein-folding models. This package provides an implementation of a trainable, Transformer-based deep protein foldin

Federated learning on graph, especially on graph neural networks (GNNs), knowledge graph, and private GNN.

Federated learning on graph, especially on graph neural networks (GNNs), knowledge graph, and private GNN.

Papers about explainability of GNNs

Papers about explainability of GNNs