33 Repositories

Python scrapy-x Libraries

Scrapy-soccer-games - Scraping information about soccer games from a few websites

scrapy-soccer-games Esse projeto tem por finalidade pegar informação de tabela d

A scrapy pipeline that provides an easy way to store files and images using various folder structures.

scrapy-folder-tree This is a scrapy pipeline that provides an easy way to store files and images using various folder structures. Supported folder str

Amazon web scraping using Scrapy Framework

Amazon-web-scraping-using-Scrapy-Framework Scrapy Scrapy is an application framework for crawling web sites and extracting structured data which can b

Iptvcrawl - A scrapy project for crawl IPTV playlist

iptvcrawl a scrapy project for crawl IPTV playlist. Dependency Python3 pip insta

Video Games Web Scraper is a project that crawls websites and APIs and extracts video game related data from their pages.

Video Games Web Scraper Video Games Web Scraper is a project that crawls websites and APIs and extracts video game related data from their pages. This

This is a web scraper, using Python framework Scrapy, built to extract data from the Deals of the Day section on Mercado Livre website.

Deals of the Day This is a web scraper, using the Python framework Scrapy, built to extract data such as price and product name from the Deals of the

High available distributed ip proxy pool, powerd by Scrapy and Redis

高可用IP代理池 README | 中文文档 本项目所采集的IP资源都来自互联网,愿景是为大型爬虫项目提供一个高可用低延迟的高匿IP代理池。 项目亮点 代理来源丰富 代理抓取提取精准 代理校验严格合理 监控完备,鲁棒性强 架构灵活,便于扩展 各个组件分布式部署 快速开始 注意,代码请在release

X-news - Pipeline data use scrapy, kafka, spark streaming, spark ML and elasticsearch, Kibana

X-news - Pipeline data use scrapy, kafka, spark streaming, spark ML and elasticsearch, Kibana

Bigdata - This Scrapy project uses Redis and Kafka to create a distributed on demand scraping cluster

Scrapy Cluster This Scrapy project uses Redis and Kafka to create a distributed

This Scrapy project uses Redis and Kafka to create a distributed on demand scraping cluster

This Scrapy project uses Redis and Kafka to create a distributed on demand scraping cluster.

Recommend recipes based on what ingredients you have at home

🌱 MyChef 📦 Overview MyChef is an application that helps you decide what meal to make based on what you have at home. Simply enter in ingredients you

Downloader Middleware to support Playwright in Scrapy & Gerapy

Gerapy Playwright This is a package for supporting Playwright in Scrapy, also this package is a module in Gerapy. Installation pip3 install gerapy-pla

Amazon scraper using scrapy, a python framework for crawling websites.

#Amazon-web-scraper This is a python program, which use scrapy python framework to crawl all pages of the product and scrap products data. This progra

This Spider/Bot is developed using Python and based on Scrapy Framework to Fetch some items information from Amazon

- Hello, This Project Contains Amazon Web-bot. - I've developed this bot for fething some items information on Amazon. - Scrapy Framework in Python is

Chatbot construido com o framework Rasa para responder dúvidas referentes ao COVID-19.

Racom Chatbot Chatbot construido com o framework Rasa. Como executar Necessário instalar Docker e Docker Compose. Para inicializar a aplicação, basta

Creating Scrapy scrapers via the Django admin interface

django-dynamic-scraper Django Dynamic Scraper (DDS) is an app for Django which builds on top of the scraping framework Scrapy and lets you create and

A Django api to display items and their current up-to-date prices from different online retailers in one platform.

A Django api to display items and their current up-to-date prices from different online retailers in one platform. Utilizing scrapy to periodically scrape the latest prices from different online retailers. Store in a PostgreSQL database and make available via an API.

Scrapy uses Request and Response objects for crawling web sites.

Requests and Responses¶ Scrapy uses Request and Response objects for crawling web sites. Typically, Request objects are generated in the spiders and p

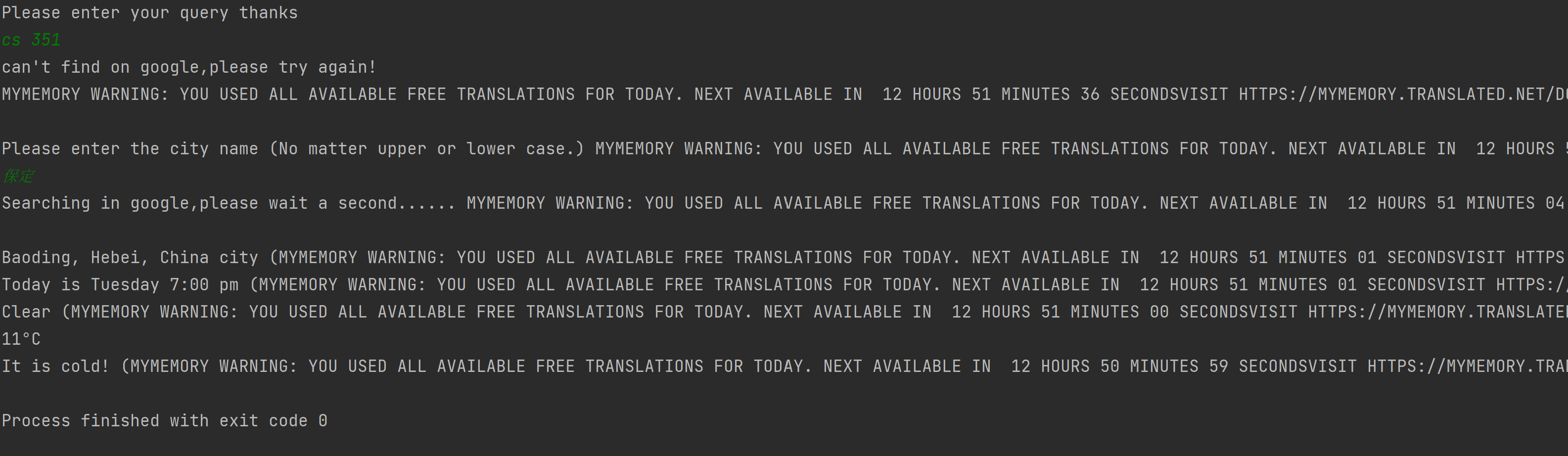

Searching info from Google using Python Scrapy

Python-Search-Engine-Scrapy || Python-爬虫-索引/利用爬虫获取谷歌信息**/ Searching info from Google using Python Scrapy /* 利用 PYTHON 爬虫获取天气信息,以及城市信息和资料**/ translatio

Scrapy-based cyber security news finder

Cyber-Security-News-Scraper Scrapy-based cyber security news finder Goal To keep up to date on the constant barrage of information within the field of

Crawler do site Fundamentus.com com o uso do framework scrapy, tanto da aba detalhada como a de resumo.

Crawler do site Fundamentus.com com o uso do framework scrapy, tanto da aba detalhada como a de resumo. (Todas as infomações)

Fundamentus scrapy

Fundamentus_scrapy Baixa informacões que os outros scrapys do fundamentus não realizam. Para iniciar (python main.py), sera criado um arquivo chamado

a Scrapy spider that utilizes Postgres as a DB, Squid as a proxy server, Redis for de-duplication and Splash to render JavaScript. All in a microservices architecture utilizing Docker and Docker Compose

This is George's Scraping Project To get started cd into the theZoo file and run: chmod +x script.sh then: ./script.sh This will spin up a Postgres co

Scraping news from Ucsal portal with Scrapy.

NewsScraping Esse é um projeto de raspagem das últimas noticias, de 2021, do portal da universidade Ucsal http://noosfero.ucsal.br/institucional Tecno

download NCERT books using scrapy

download_ncert_books download NCERT books using scrapy Downloading Books: You can either use the spider by cloning this repo and following the instruc

Snowflake database loading utility with Scrapy integration

Snowflake Stage Exporter Snowflake database loading utility with Scrapy integration. Meant for streaming ingestion of JSON serializable objects into S

a high-performance, lightweight and human friendly serving engine for scrapy

a high-performance, lightweight and human friendly serving engine for scrapy

An experiment to deploy a serverless infrastructure for a scrapy project.

Serverless Scrapy project This project aims to evaluate the feasibility of an architecture based on serverless technology for a web crawler using scra

feapder 是一款简单、快速、轻量级的爬虫框架。以开发快速、抓取快速、使用简单、功能强大为宗旨。支持分布式爬虫、批次爬虫、多模板爬虫,以及完善的爬虫报警机制。

feapder 是一款简单、快速、轻量级的爬虫框架。起名源于 fast、easy、air、pro、spider的缩写,以开发快速、抓取快速、使用简单、功能强大为宗旨,历时4年倾心打造。支持轻量爬虫、分布式爬虫、批次爬虫、爬虫集成,以及完善的爬虫报警机制。 之

Backend, modern REST API for obtaining match and odds data crawled from multiple sites. Using FastAPI, MongoDB as database, Motor as async MongoDB client, Scrapy as crawler and Docker.

Introduction Apiestas is a project composed of a backend powered by the awesome framework FastAPI and a crawler powered by Scrapy. This project has fo

Distributed Crawler Management Framework Based on Scrapy, Scrapyd, Django and Vue.js

Gerapy Distributed Crawler Management Framework Based on Scrapy, Scrapyd, Scrapyd-Client, Scrapyd-API, Django and Vue.js. Documentation Documentation

Scrapy, a fast high-level web crawling & scraping framework for Python.

Scrapy Overview Scrapy is a fast high-level web crawling and web scraping framework, used to crawl websites and extract structured data from their pag

Visual scraping for Scrapy

Portia Portia is a tool that allows you to visually scrape websites without any programming knowledge required. With Portia you can annotate a web pag