378 Repositories

Python speech-transcription Libraries

In this repository, I have developed an end to end Automatic speech recognition project. I have developed the neural network model for automatic speech recognition with PyTorch and used MLflow to manage the ML lifecycle, including experimentation, reproducibility, deployment, and a central model registry.

End to End Automatic Speech Recognition In this repository, I have developed an end to end Automatic speech recognition project. I have developed the

easySpeech is an open-source Python wrapper for google speech to text API that doesn't require PyAudio(So you especially windows user don't have to deal with the errors while installing PyAudio) and also works with hugging face transformers

easySpeech easySpeech is an open source python wrapper for google speech to text api that doesn't require PyAaudio(So you specially windows user don't

UnivNet: A Neural Vocoder with Multi-Resolution Spectrogram Discriminators for High-Fidelity Waveform Generation

UnivNet UnivNet: A Neural Vocoder with Multi-Resolution Spectrogram Discriminators for High-Fidelity Waveform Generation. Training python train.py --c

PyTorch Implementation of Meta-StyleSpeech : Multi-Speaker Adaptive Text-to-Speech Generation

StyleSpeech - PyTorch Implementation PyTorch Implementation of Meta-StyleSpeech : Multi-Speaker Adaptive Text-to-Speech Generation. Status (2021.06.13

HiFi-GAN: Generative Adversarial Networks for Efficient and High Fidelity Speech Synthesis

HiFi-GAN: Generative Adversarial Networks for Efficient and High Fidelity Speech Synthesis Jungil Kong, Jaehyeon Kim, Jaekyoung Bae In our paper, we p

VITS: Conditional Variational Autoencoder with Adversarial Learning for End-to-End Text-to-Speech

VITS: Conditional Variational Autoencoder with Adversarial Learning for End-to-End Text-to-Speech Jaehyeon Kim, Jungil Kong, and Juhee Son In our rece

Open-Source Toolkit for End-to-End Speech Recognition leveraging PyTorch-Lightning and Hydra.

OpenSpeech provides reference implementations of various ASR modeling papers and three languages recipe to perform tasks on automatic speech recogniti

PyTorch Implementation of Meta-StyleSpeech : Multi-Speaker Adaptive Text-to-Speech Generation

StyleSpeech - PyTorch Implementation PyTorch Implementation of Meta-StyleSpeech : Multi-Speaker Adaptive Text-to-Speech Generation. Status (2021.06.09

Open-Source Toolkit for End-to-End Speech Recognition leveraging PyTorch-Lightning and Hydra.

OpenSpeech provides reference implementations of various ASR modeling papers and three languages recipe to perform tasks on automatic speech recogniti

Pytorch Implementation of DiffSinger: Diffusion Acoustic Model for Singing Voice Synthesis (TTS Extension)

DiffSinger - PyTorch Implementation PyTorch implementation of DiffSinger: Diffusion Acoustic Model for Singing Voice Synthesis (TTS Extension). Status

A PyTorch implementation of paper "Learning Shared Semantic Space for Speech-to-Text Translation", ACL (Findings) 2021

Chimera: Learning Shared Semantic Space for Speech-to-Text Translation This is a Pytorch implementation for the "Chimera" paper Learning Shared Semant

Simple, hackable offline speech to text - using the VOSK-API.

Nerd Dictation Offline Speech to Text for Desktop Linux. This is a utility that provides simple access speech to text for using in Linux without being

Official codes for the paper "Learning Hierarchical Discrete Linguistic Units from Visually-Grounded Speech"

ResDAVEnet-VQ Official PyTorch implementation of Learning Hierarchical Discrete Linguistic Units from Visually-Grounded Speech What is in this repo? M

ERISHA is a mulitilingual multispeaker expressive speech synthesis framework. It can transfer the expressivity to the speaker's voice for which no expressive speech corpus is available.

ERISHA: Multilingual Multispeaker Expressive Text-to-Speech Library ERISHA is a multilingual multispeaker expressive speech synthesis framework. It ca

voice2json is a collection of command-line tools for offline speech/intent recognition on Linux

Command-line tools for speech and intent recognition on Linux

Pytorch Implementation of Google's Parallel Tacotron 2: A Non-Autoregressive Neural TTS Model with Differentiable Duration Modeling

Parallel Tacotron2 Pytorch Implementation of Google's Parallel Tacotron 2: A Non-Autoregressive Neural TTS Model with Differentiable Duration Modeling

Python codes for Lite Audio-Visual Speech Enhancement.

Lite Audio-Visual Speech Enhancement (Interspeech 2020) Introduction This is the PyTorch implementation of Lite Audio-Visual Speech Enhancement (LAVSE

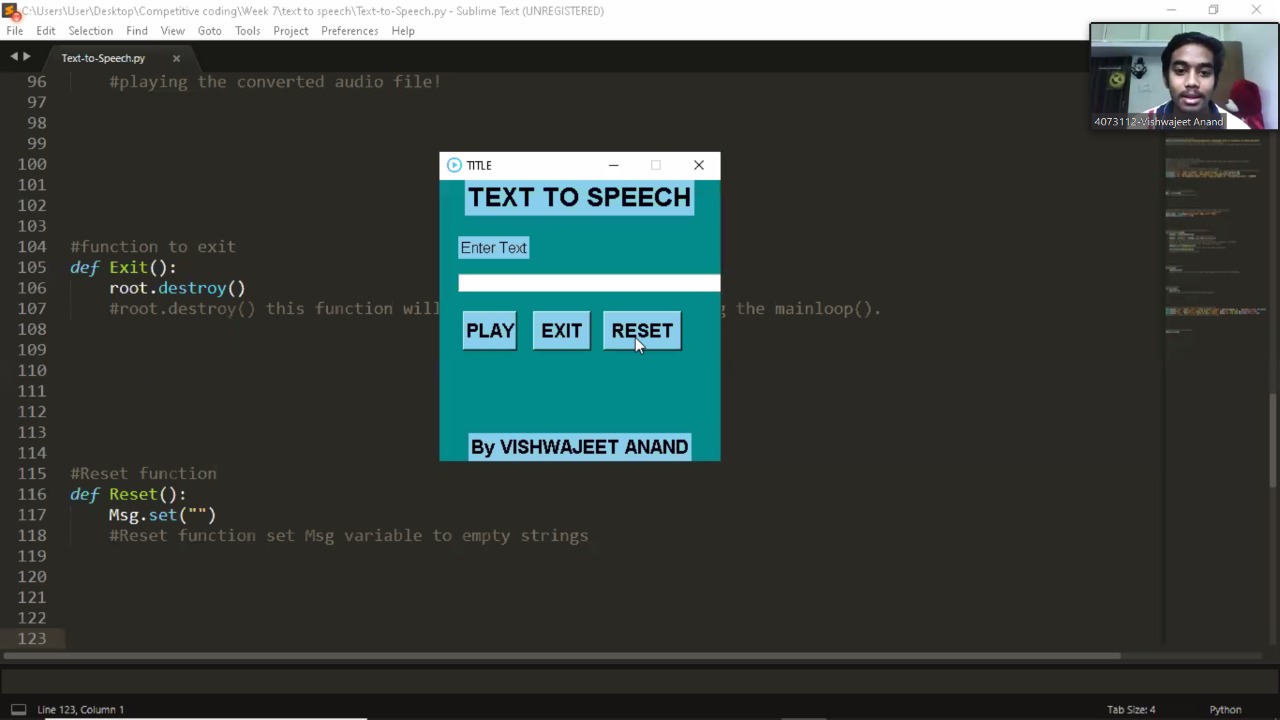

Text to speech is a process to convert any text into voice. Text to speech project takes words on digital devices and convert them into audio. Here I have used Google-text-to-speech library popularly known as gTTS library to convert text file to .mp3 file. Hope you like my project!

Text to speech (using Python) Text to speech is a process to convert any text into voice. Text to speech project takes words on digital devices and co

A fast Text-to-Speech (TTS) model. Work well for English, Mandarin/Chinese, Japanese, Korean, Russian and Tibetan (so far). 快速语音合成模型,适用于英语、普通话/中文、日语、韩语、俄语和藏语(当前已测试)。

简体中文 | English 并行语音合成 [TOC] 新进展 2021/04/20 合并 wavegan 分支到 main 主分支,删除 wavegan 分支! 2021/04/13 创建 encoder 分支用于开发语音风格迁移模块! 2021/04/13 softdtw 分支 支持使用 Sof

This repository describes our reproducible framework for assessing self-supervised representation learning from speech

LeBenchmark: a reproducible framework for assessing SSL from speech Self-Supervised Learning (SSL) using huge unlabeled data has been successfully exp

Modular and extensible speech recognition library leveraging pytorch-lightning and hydra.

Lightning ASR Modular and extensible speech recognition library leveraging pytorch-lightning and hydra What is Lightning ASR • Installation • Get Star

Identify the emotion of multiple speakers in an Audio Segment

MevonAI - Speech Emotion Recognition

Espresso: A Fast End-to-End Neural Speech Recognition Toolkit

Espresso Espresso is an open-source, modular, extensible end-to-end neural automatic speech recognition (ASR) toolkit based on the deep learning libra

pytorch-kaldi is a project for developing state-of-the-art DNN/RNN hybrid speech recognition systems. The DNN part is managed by pytorch, while feature extraction, label computation, and decoding are performed with the kaldi toolkit.

The PyTorch-Kaldi Speech Recognition Toolkit PyTorch-Kaldi is an open-source repository for developing state-of-the-art DNN/HMM speech recognition sys

Unofficial PyTorch implementation of Google AI's VoiceFilter system

VoiceFilter Note from Seung-won (2020.10.25) Hi everyone! It's Seung-won from MINDs Lab, Inc. It's been a long time since I've released this open-sour

End-to-End Speech Processing Toolkit

ESPnet: end-to-end speech processing toolkit system/pytorch ver. 1.0.1 1.1.0 1.2.0 1.3.1 1.4.0 1.5.1 1.6.0 1.7.1 1.8.1 ubuntu18/python3.8/pip ubuntu18

Neural building blocks for speaker diarization: speech activity detection, speaker change detection, overlapped speech detection, speaker embedding

⚠️ Checkout develop branch to see what is coming in pyannote.audio 2.0: a much smaller and cleaner codebase Python-first API (the good old pyannote-au

Pytorch implementation of Tacotron

Tacotron-pytorch A pytorch implementation of Tacotron: A Fully End-to-End Text-To-Speech Synthesis Model. Requirements Install python 3 Install pytorc

Sequence-to-Sequence Framework in PyTorch

nmtpytorch allows training of various end-to-end neural architectures including but not limited to neural machine translation, image captioning and au

A PyTorch Implementation of End-to-End Models for Speech-to-Text

speech Speech is an open-source package to build end-to-end models for automatic speech recognition. Sequence-to-sequence models with attention, Conne

A method to generate speech across multiple speakers

VoiceLoop PyTorch implementation of the method described in the paper VoiceLoop: Voice Fitting and Synthesis via a Phonological Loop. VoiceLoop is a n

Data manipulation and transformation for audio signal processing, powered by PyTorch

torchaudio: an audio library for PyTorch The aim of torchaudio is to apply PyTorch to the audio domain. By supporting PyTorch, torchaudio follows the

AdaSpeech 2: Adaptive Text to Speech with Untranscribed Data

AdaSpeech 2: Adaptive Text to Speech with Untranscribed Data [WIP] Unofficial Pytorch implementation of AdaSpeech 2. Requirements : All code written i

HiFi-GAN: High Fidelity Denoising and Dereverberation Based on Speech Deep Features in Adversarial Networks

HiFiGAN Denoiser This is a Unofficial Pytorch implementation of the paper HiFi-GAN: High Fidelity Denoising and Dereverberation Based on Speech Deep F

Codes for our paper "SentiLARE: Sentiment-Aware Language Representation Learning with Linguistic Knowledge" (EMNLP 2020)

SentiLARE: Sentiment-Aware Language Representation Learning with Linguistic Knowledge Introduction SentiLARE is a sentiment-aware pre-trained language

Speech Algorithms Collections

Speech Algorithms Collections

Binaural Speech Synthesis

Binaural Speech Synthesis This repository contains code to train a mono-to-binaural neural sound renderer. If you use this code or the provided datase

TalkNet 2: Non-Autoregressive Depth-Wise Separable Convolutional Model for Speech Synthesis with Explicit Pitch and Duration Prediction.

TalkNet 2 [WIP] TalkNet 2: Non-Autoregressive Depth-Wise Separable Convolutional Model for Speech Synthesis with Explicit Pitch and Duration Predictio

PyTorch Lightning implementation of Automatic Speech Recognition

lasr Lightening Automatic Speech Recognition An MIT License ASR research library, built on PyTorch-Lightning, for developing end-to-end ASR models. In

SC-GlowTTS: an Efficient Zero-Shot Multi-Speaker Text-To-Speech Model

SC-GlowTTS: an Efficient Zero-Shot Multi-Speaker Text-To-Speech Model Edresson Casanova, Christopher Shulby, Eren Gölge, Nicolas Michael Müller, Frede

A Paper List for Speech Translation

Keyword: Speech Translation, Spoken Language Processing, Natural Language Processing

TTS is a library for advanced Text-to-Speech generation.

TTS is a library for advanced Text-to-Speech generation. It's built on the latest research, was designed to achieve the best trade-off among ease-of-training, speed and quality. TTS comes with pretrained models, tools for measuring dataset quality and already used in 20+ languages for products and research projects.

Chinese Mandarin tts text-to-speech 中文 (普通话) 语音 合成 , by fastspeech 2 , implemented in pytorch, using waveglow as vocoder,

Chinese mandarin text to speech based on Fastspeech2 and Unet This is a modification and adpation of fastspeech2 to mandrin(普通话). Many modifications t

STYLER: Style Factor Modeling with Rapidity and Robustness via Speech Decomposition for Expressive and Controllable Neural Text to Speech

STYLER: Style Factor Modeling with Rapidity and Robustness via Speech Decomposition for Expressive and Controllable Neural Text to Speech Keon Lee, Ky

Some bits of javascript to transcribe scanned pages using PageXML

nashi (nasḫī) Some bits of javascript to transcribe scanned pages using PageXML. Both ltr and rtl languages are supported. Try it! But wait, there's m

kaldi-asr/kaldi is the official location of the Kaldi project.

Kaldi Speech Recognition Toolkit To build the toolkit: see ./INSTALL. These instructions are valid for UNIX systems including various flavors of Linux

中文语音识别系列,读者可以借助它快速训练属于自己的中文语音识别模型,或直接使用预训练模型测试效果。

MASR中文语音识别(pytorch版) 开箱即用 自行训练 使用与训练分离(增量训练) 识别率高 说明:因为每个人电脑机器不同,而且有些安装包安装起来比较麻烦,强烈建议直接用我编译好的docker环境跑 目前docker基础环境为ubuntu-cuda10.1-cudnn7-pytorch1.6.

TensorFlowTTS: Real-Time State-of-the-art Speech Synthesis for Tensorflow 2 (supported including English, Korean, Chinese, German and Easy to adapt for other languages)

🤪 TensorFlowTTS provides real-time state-of-the-art speech synthesis architectures such as Tacotron-2, Melgan, Multiband-Melgan, FastSpeech, FastSpeech2 based-on TensorFlow 2. With Tensorflow 2, we can speed-up training/inference progress, optimizer further by using fake-quantize aware and pruning, make TTS models can be run faster than real-time and be able to deploy on mobile devices or embedded systems.

SpeechBrain is an open-source and all-in-one speech toolkit based on PyTorch.

The goal is to create a single, flexible, and user-friendly toolkit that can be used to easily develop state-of-the-art speech technologies, including systems for speech recognition, speaker recognition, speech enhancement, multi-microphone signal processing and many others.

Speech Enhancement Generative Adversarial Network Based on Asymmetric AutoEncoder

ASEGAN: Speech Enhancement Generative Adversarial Network Based on Asymmetric AutoEncoder 中文版简介 Readme with English Version 介绍 基于SEGAN模型的改进版本,使用自主设计的非

LightSpeech: Lightweight and Fast Text to Speech with Neural Architecture Search

LightSpeech UnOfficial PyTorch implementation of LightSpeech: Lightweight and Fast Text to Speech with Neural Architecture Search.

Conferencing Speech Challenge

ConferencingSpeech 2021 challenge This repository contains the datasets list and scripts required for the ConferencingSpeech challenge. For more detai

Bidirectional LSTM-CRF and ELMo for Named-Entity Recognition, Part-of-Speech Tagging and so on.

anaGo anaGo is a Python library for sequence labeling(NER, PoS Tagging,...), implemented in Keras. anaGo can solve sequence labeling tasks such as nam

DELTA is a deep learning based natural language and speech processing platform.

DELTA - A DEep learning Language Technology plAtform What is DELTA? DELTA is a deep learning based end-to-end natural language and speech processing p

NeMo: a toolkit for conversational AI

NVIDIA NeMo Introduction NeMo is a toolkit for creating Conversational AI applications. NeMo product page. Introductory video. The toolkit comes with

State of the Art Natural Language Processing

Spark NLP: State of the Art Natural Language Processing Spark NLP is a Natural Language Processing library built on top of Apache Spark ML. It provide

Simple, Pythonic, text processing--Sentiment analysis, part-of-speech tagging, noun phrase extraction, translation, and more.

TextBlob: Simplified Text Processing Homepage: https://textblob.readthedocs.io/ TextBlob is a Python (2 and 3) library for processing textual data. It

Speech recognition module for Python, supporting several engines and APIs, online and offline.

SpeechRecognition Library for performing speech recognition, with support for several engines and APIs, online and offline. Speech recognition engine/

Neural building blocks for speaker diarization: speech activity detection, speaker change detection, overlapped speech detection, speaker embedding

⚠️ Checkout develop branch to see what is coming in pyannote.audio 2.0: a much smaller and cleaner codebase Python-first API (the good old pyannote-au

DeepSpeech is an open source embedded (offline, on-device) speech-to-text engine which can run in real time on devices ranging from a Raspberry Pi 4 to high power GPU servers.

Project DeepSpeech DeepSpeech is an open-source Speech-To-Text engine, using a model trained by machine learning techniques based on Baidu's Deep Spee

:speech_balloon: SpeechPy - A Library for Speech Processing and Recognition: http://speechpy.readthedocs.io/en/latest/

SpeechPy Official Project Documentation Table of Contents Documentation Which Python versions are supported Citation How to Install? Local Installatio

This library provides common speech features for ASR including MFCCs and filterbank energies.

python_speech_features This library provides common speech features for ASR including MFCCs and filterbank energies. If you are not sure what MFCCs ar

Data manipulation and transformation for audio signal processing, powered by PyTorch

torchaudio: an audio library for PyTorch The aim of torchaudio is to apply PyTorch to the audio domain. By supporting PyTorch, torchaudio follows the

Python library for handling audio datasets.

AUDIOMATE Audiomate is a library for easy access to audio datasets. It provides the datastructures for accessing/loading different datasets in a gener

AI grand challenge 2020 Repo (Speech Recognition Track)

KorBERT를 활용한 한국어 텍스트 기반 위협 상황인지(2020 인공지능 그랜드 챌린지) 본 프로젝트는 ETRI에서 제공된 한국어 korBERT 모델을 활용하여 폭력 기반 한국어 텍스트를 분류하는 다양한 분류 모델들을 제공합니다. 본 개발자들이 참여한 2020 인공지

Bidirectional LSTM-CRF and ELMo for Named-Entity Recognition, Part-of-Speech Tagging and so on.

anaGo anaGo is a Python library for sequence labeling(NER, PoS Tagging,...), implemented in Keras. anaGo can solve sequence labeling tasks such as nam

DELTA is a deep learning based natural language and speech processing platform.

DELTA - A DEep learning Language Technology plAtform What is DELTA? DELTA is a deep learning based end-to-end natural language and speech processing p

NeMo: a toolkit for conversational AI

NVIDIA NeMo Introduction NeMo is a toolkit for creating Conversational AI applications. NeMo product page. Introductory video. The toolkit comes with

State of the Art Natural Language Processing

Spark NLP: State of the Art Natural Language Processing Spark NLP is a Natural Language Processing library built on top of Apache Spark ML. It provide

Simple, Pythonic, text processing--Sentiment analysis, part-of-speech tagging, noun phrase extraction, translation, and more.

TextBlob: Simplified Text Processing Homepage: https://textblob.readthedocs.io/ TextBlob is a Python (2 and 3) library for processing textual data. It

PORORO: Platform Of neuRal mOdels for natuRal language prOcessing

PORORO: Platform Of neuRal mOdels for natuRal language prOcessing pororo performs Natural Language Processing and Speech-related tasks. It is easy to

Trankit is a Light-Weight Transformer-based Python Toolkit for Multilingual Natural Language Processing

Trankit: A Light-Weight Transformer-based Python Toolkit for Multilingual Natural Language Processing Trankit is a light-weight Transformer-based Pyth

PIKA: a lightweight speech processing toolkit based on Pytorch and (Py)Kaldi

PIKA: a lightweight speech processing toolkit based on Pytorch and (Py)Kaldi PIKA is a lightweight speech processing toolkit based on Pytorch and (Py)

Bidirectional Variational Inference for Non-Autoregressive Text-to-Speech (BVAE-TTS)

Bidirectional Variational Inference for Non-Autoregressive Text-to-Speech (BVAE-TTS) Yoonhyung Lee, Joongbo Shin, Kyomin Jung Abstract: Although early

PhoNLP: A BERT-based multi-task learning toolkit for part-of-speech tagging, named entity recognition and dependency parsing

PhoNLP is a multi-task learning model for joint part-of-speech (POS) tagging, named entity recognition (NER) and dependency parsing. Experiments on Vietnamese benchmark datasets show that PhoNLP produces state-of-the-art results, outperforming a single-task learning approach that fine-tunes the pre-trained Vietnamese language model PhoBERT for each task independently.

Pseudo-Visual Speech Denoising

Pseudo-Visual Speech Denoising This code is for our paper titled: Visual Speech Enhancement Without A Real Visual Stream published at WACV 2021. Autho

PyTorch implementation of "A Full-Band and Sub-Band Fusion Model for Real-Time Single-Channel Speech Enhancement."

FullSubNet This Git repository for the official PyTorch implementation of "A Full-Band and Sub-Band Fusion Model for Real-Time Single-Channel Speech E

PyTorch implementation of "Conformer: Convolution-augmented Transformer for Speech Recognition" (INTERSPEECH 2020)

PyTorch implementation of Conformer: Convolution-augmented Transformer for Speech Recognition. Transformer models are good at capturing content-based